Difference between revisions of "User:Sftrabbit/GSoC 2013/Documentation/Summary Of Features"

Latest revision as of 06:04, 29 June 2018 (Fri)

Summary of Features

Throughout Google Summer of Code 2013, I worked on two main features: focal length constraints and multicamera reconstruction. The constraints feature was completed during the summer period and multicamera reconstruction continues to be a work in progress.

Focal length constraints

This feature is complete and will be available in an upcoming Blender release.

When reconstructing a scene, you must provide the focal length of the camera with which the footage was shot. This setting is given in the Camera Data panel. Often the given value is imprecise, and the reconstruction algorithm can produce a better reconstruction by adjusting it. To enable this refinement, select Focal Length as the parameter to refine in the Solve tool.

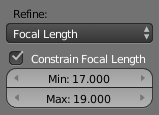

Sometimes this refinement produces a focal length that is far from correct, simply because the algorithm believes that value gives a more accurate reconstruction. In this case, it may help to provide a constraint, so that the algorithm finds the focal length that gives the best reconstruction within the permitted range. To apply a constraint, check Constrain Focal Length in the Solve tool and provide appropriate minimum and maximum values. The focal length given in the Camera Data panel is used for the initial reconstruction and then refined.

As an example, consider footage that you believe was shot with a focal length of 18mm, but which refinement adjusts to 25mm. You know, however, that the true focal length lies somewhere between 17mm and 19mm. If you apply this range as a constraint on the focal length refinement, a solution of, say, 18.3mm may be found.

The units of the minimum and maximum values match those chosen for the focal length in the Camera Data panel. While the constraint is disabled, the values automatically adjust to match the given focal length; once it is enabled, they can be freely modified. If the given focal length lies outside the constraint range, it is first clamped to this range before the initial reconstruction is performed.
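The behaviour of the minimum and maximum values described above can be sketched in a few lines of Python. This is only an illustration, not Blender's implementation: the class `FocalConstraint` and its methods `sync_to` and `initial_focal_length` are hypothetical names, and the sketch assumes that "adjust to match" means both bounds are set equal to the given focal length while the constraint is disabled.

```python
from dataclasses import dataclass


@dataclass
class FocalConstraint:
    """Hypothetical container for the Constrain Focal Length settings."""

    enabled: bool = False
    minimum: float = 0.0
    maximum: float = 0.0

    def sync_to(self, focal_length: float) -> None:
        """While disabled, keep both bounds equal to the given focal length.

        Assumption: this is what "automatically adjust" means in the text.
        """
        if not self.enabled:
            self.minimum = focal_length
            self.maximum = focal_length

    def initial_focal_length(self, focal_length: float) -> float:
        """Return the focal length used for the initial reconstruction."""
        if not self.enabled:
            return focal_length
        if self.minimum > self.maximum:
            raise ValueError("minimum must not exceed maximum")
        # Clamp the given focal length into [minimum, maximum].
        return min(max(focal_length, self.minimum), self.maximum)


# Usage: a 25mm setting with a 17mm to 19mm constraint starts from 19mm.
constraint = FocalConstraint(enabled=True, minimum=17.0, maximum=19.0)
print(constraint.initial_focal_length(25.0))  # 19.0
```

The bounds are plain numbers in whatever unit the focal length uses, so the sketch performs no unit conversion.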

Multicamera reconstruction

This feature is a work in progress and the following describes how it is expected to be used. For details of the current implementation, see the technical overview.

Solving with footage from multiple video cameras may help to provide a more accurate reconstruction of the scene. The extra cameras used to support the main footage are often called witness cameras. These cameras give extra coverage of the scene, viewing it from a different angle, which provides more information for the reconstruction algorithm to use to understand the geometry of the scene.

The general approach to performing a multicamera reconstruction will be to open multiple movie clips simultaneously and track each of them. The user can then link together any tracks from different movie clips that correspond to the same point in 3D space. These linked tracks provide the correspondence between the clips that the reconstruction algorithm needs to reconstruct the scene.
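One way to picture the linking step is as a grouping of tracks, where each group stands for a single point in 3D space. The sketch below uses a union-find structure for this; it is an assumption made for illustration and does not describe how Blender or its solver stores the links. The class `TrackLinks`, its methods, and the clip and track names are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

TrackId = Tuple[str, str]  # (movie clip name, track name), both hypothetical


class TrackLinks:
    """Groups tracks from different clips that mark the same 3D point."""

    def __init__(self) -> None:
        self._parent: Dict[TrackId, TrackId] = {}

    def _find(self, track: TrackId) -> TrackId:
        """Return the representative track of the group containing `track`."""
        self._parent.setdefault(track, track)
        root = track
        while self._parent[root] != root:
            root = self._parent[root]
        # Path compression: point every visited track directly at the root.
        while self._parent[track] != root:
            self._parent[track], track = root, self._parent[track]
        return root

    def link(self, a: TrackId, b: TrackId) -> None:
        """Record that tracks `a` and `b` correspond to the same 3D point."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def same_point(self, a: TrackId, b: TrackId) -> bool:
        """Report whether `a` and `b` have been linked, directly or not."""
        return self._find(a) == self._find(b)

    def groups(self) -> List[List[TrackId]]:
        """Return every group that contains at least two linked tracks."""
        by_root: Dict[TrackId, List[TrackId]] = defaultdict(list)
        for track in list(self._parent):
            by_root[self._find(track)].append(track)
        return [sorted(g) for g in by_root.values() if len(g) > 1]


# Usage: one point seen by the main camera and two witness cameras.
links = TrackLinks()
links.link(("main.mov", "Track.001"), ("witness_a.mov", "Track.014"))
links.link(("witness_a.mov", "Track.014"), ("witness_b.mov", "Track.003"))
for group in links.groups():
    print(group)
```

Linking is transitive in this sketch: after the two calls above, the main-camera track and the second witness track belong to the same group even though they were never linked directly.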

The internals for this feature have been implemented (see technical overview), but the user interface is still under discussion. The UI itself may be fairly simple, but it will require significant refactoring of the Blender kernel to support data from multiple cameras. Currently, a movie clip editing context is linked to only a single movie clip and there is no way to open more than one.