User:NeXyon/GSoC2011/Proposals


Latest revision as of 04:42, 29 June 2018 (Friday)

Proposal for Google Summer of Code 2011

3D Audio [accepted!]

Synopsis

This project will further improve audio in Blender by refining the current system and adding interesting new features. The main goal is to let the user place speakers in the 3D scene and render animations with positioned audio sources and velocity-dependent Doppler effects.

Benefits for Blender

Blender 2.5 has a new audio engine and has caught up on what it was missing before. Now it's time to get up to speed and show how useful audio in combination with 3D can be. Moreover, a resampling system has to be built during development, which allows Blender to drop the currently mandatory dependency on SRC (libsamplerate).
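To give a rough idea of what such a resampling step does, here is a minimal sketch in Python using plain linear interpolation. It is not audaspace code: the function name `resample_linear` is hypothetical, and a real replacement for libsamplerate would need a band-limited filter to avoid aliasing.

```python
def resample_linear(samples, src_rate, dst_rate):
    """Resample a mono signal by linear interpolation (illustrative only)."""
    if src_rate <= 0 or dst_rate <= 0:
        raise ValueError("sample rates must be positive")
    if not samples:
        return []
    # Number of output samples covering the same duration as the input.
    out_len = max(1, int(len(samples) * dst_rate / src_rate))
    # How far we advance in the source signal per output sample.
    step = src_rate / dst_rate
    last = len(samples) - 1
    out = []
    for i in range(out_len):
        pos = i * step
        idx = int(pos)
        frac = pos - idx
        a = samples[min(idx, last)]
        b = samples[min(idx + 1, last)]  # repeat the last sample at the end
        out.append(a + (b - a) * frac)
    return out
```

Doubling the rate of a four-sample ramp, for example, yields eight samples with the in-between values filled in; halving the rate keeps every second sample.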

Proposal

First there's some cleanup, refactoring and "minor" improvements I'd like to do, roughly including the following points (with estimated amount of work in parentheses [small/medium/large]):

- [medium] Improve memory management (e.g. remove news/deletes by using a managed pointer; this will also fix memory management problems with Python and enable bidirectional data exchange, which is currently not possible).

- [medium] Improve the playback handles by turning them into a proper class, as is already the case in the Python API.

- [medium] It should be possible to play a single Reader on a Device without a Factory. This enables the use of microphones in the audio engine. An open question is how to prevent or allow playing the same reader on multiple devices.

- [small] Something that should also be tried out is changing the Reader API regarding audio buffer access: the current architecture is bottom-up, but top-down might give better performance.

- [large] Dynamic changing of reader parameters such as sample rate and channel count; this requires dynamic resampling and a better channel mapping solution (mixer interface). It enables a future implementation of LFOs (Low Frequency Oscillators).

- [medium] Animation system improvements. The audio sample rate is a lot higher than the video frame rate, which is the time base in Blender, and the current interaction with Blender's animation system is really ugly. A better solution has to be found together with the animation system maintainer to get subframe accuracy and better performance.

- [small] The streaming of the OpenAL device is a little hackish at the moment and needs some reworking.

- [medium] Better channel (including multi-channel!) support; a feature still missing from Blender 2.4x is panning, which can finally be implemented with a well-thought-out user interface.
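As an illustration of the panning item in the list above, the following Python sketch uses a constant-power pan law to place a mono signal in a stereo field. The names `pan_gains` and `pan_mono_to_stereo` are hypothetical and the pan law is just one common choice, not something the proposal commits to.

```python
import math

def pan_gains(pan):
    """Return (left, right) gains for pan in [-1, 1] (constant-power law)."""
    pan = max(-1.0, min(1.0, pan))
    # Map pan -1..1 to an angle 0..pi/2; cos/sin keep left^2 + right^2 == 1.
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)

def pan_mono_to_stereo(samples, pan):
    """Turn mono samples into (left, right) pairs for the given pan."""
    left, right = pan_gains(pan)
    return [(s * left, s * right) for s in samples]
```

With `pan = 0` both channels get the same gain, and the summed power stays constant while the pan moves from hard left (`-1`) to hard right (`1`).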

With that groundwork done, a software 3D device that is functionally similar to OpenAL will be implemented and first tested with the game engine, which already supports 3D audio. So this basically means implementing the AUD_I3DDevice interface (https://svn.blender.org/svnroot/bf-blender/trunk/blender/intern/audaspace/intern/AUD_I3DDevice.h) in software.
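The two core computations of such a software 3D device can be sketched in Python as follows. The formulas follow the Doppler shift and the inverse distance clamped model as described in the OpenAL 1.1 specification, to the best of my understanding; the function names, the default speed of sound and the 0.999 clamp (used here only to avoid a division by zero) are assumptions of this sketch, not part of AUD_I3DDevice.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def doppler_pitch(src_pos, src_vel, lis_pos, lis_vel,
                  speed_of_sound=343.3, doppler_factor=1.0):
    """Pitch multiplier for a moving source and a moving listener."""
    to_listener = [l - s for s, l in zip(src_pos, lis_pos)]
    dist = math.sqrt(_dot(to_listener, to_listener))
    if dist == 0.0 or doppler_factor <= 0.0:
        return 1.0  # no defined direction, or Doppler effect disabled
    # Velocity components along the source-to-listener axis.
    v_lis = _dot(to_listener, lis_vel) / dist
    v_src = _dot(to_listener, src_vel) / dist
    # Sketch assumption: clamp just below the speed of sound.
    limit = 0.999 * speed_of_sound / doppler_factor
    v_lis = min(v_lis, limit)
    v_src = min(v_src, limit)
    return ((speed_of_sound - doppler_factor * v_lis)
            / (speed_of_sound - doppler_factor * v_src))

def distance_gain(distance, reference=1.0, rolloff=1.0,
                  max_distance=float("inf")):
    """Gain for the inverse distance clamped attenuation model."""
    if reference <= 0.0:
        raise ValueError("reference distance must be positive")
    distance = min(max(distance, reference), max_distance)
    return reference / (reference + rolloff * (distance - reference))
```

A source approaching the listener gives a pitch above 1, a listener moving away gives a pitch below 1, and the gain falls off with distance between the reference and maximum distances.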

As a last step, a user interface will be created to place speakers in the 3D environment and change their properties, finally adding proper 3D audio support to Blender.

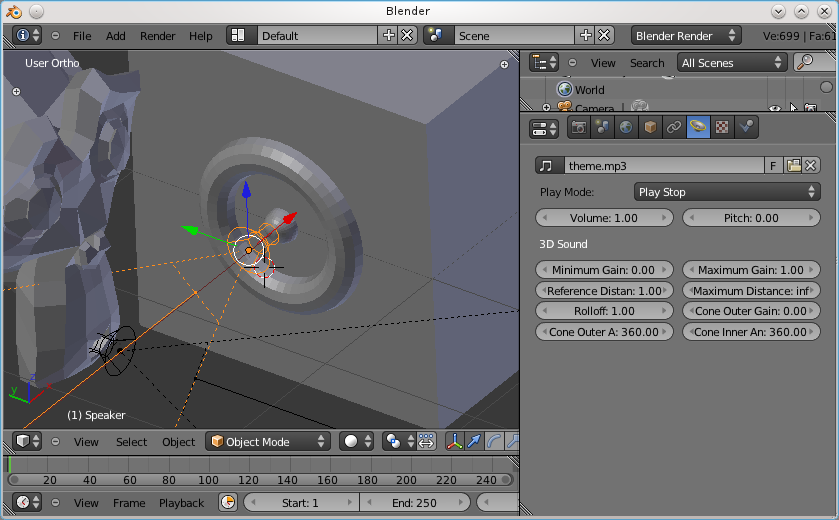

Here's a screenshot of how the UI can look like:

One thing missing here is setting the start time of the sound. It isn't necessary to cut audio here, as can be done in the sequencer, so for setting the start time I thought about an interface similar to the dope sheet, where you just set points at which the sound starts, with the ability to set multiple start points.

The advantage: imagine a sound object placed at the end of the barrel of a machine gun, with a single gunshot sound as its sound file. You could then simply set a start point for every shot.
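The machine gun example can be sketched in Python as mixing one short sound into an output buffer once per start point. This is only an illustration of the idea; `mix_at_start_points` and its parameters are hypothetical names, and the sound is treated as a plain list of mono samples.

```python
def mix_at_start_points(shot, start_times, sample_rate, total_seconds):
    """Mix one mono sound into a buffer once per start time (in seconds)."""
    out = [0.0] * int(round(total_seconds * sample_rate))
    for start in start_times:
        offset = int(round(start * sample_rate))
        for i, sample in enumerate(shot):
            j = offset + i
            if 0 <= j < len(out):
                out[j] += sample  # overlapping shots simply add up
    return out
```

Each start point triggers an independent copy of the sound, so shots that overlap in time are summed rather than cut off.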

Deliverables

- General improvements (optional parts)

- Software 3D Device (mandatory)

- Speaker objects (mandatory)

Development Methodology

Personally I prefer well-documented and tested code, in other words quality over quantity. I have already gained a lot of experience in software project management, so I know how easy it is to underestimate the work to be done and the time it takes. That's why the list of deliverables might look a little short and why I split them into mandatory and optional targets.

Another thing that's very important to me is the user experience. I'll try to get as many people as possible to test my work and give feedback that I can build on.

Schedule

I will start working on April 25, right after acceptance, during the Community Bonding Period, since I already know the community. As my university courses run until the end of June, I won't be able to work full-time during this period, but after exams, once the summer holidays have started, I'll work full-time on the project.

I'll start by deciding with my mentor which of the general improvements have higher priority and which can be kept for later, since all of the improvements together are more work than a typical GSoC project.

From the beginning of July I'd like to start on the other two main tasks (allotting half of the remaining time to each), and once those are complete there might be time for some of the remaining general improvements.

Why ...?

- ... I want to do this project: I've already worked on Blender audio, and this would be the next huge step in this area, which is very important for Blender to be accepted in the audio field.

- ... I am the best for this project: I simply know Blender's audio code best.

- ... I'm interested in Blender: Coming from game development, I found Blender to be an awesome 3D tool that I never want to stop using.

Lip Sync

Synopsis

This project will further improve audio in Blender by refining the current system and adding interesting new features. The main goal is to improve the lip sync workflow for users by supporting it with modern automation algorithms and a revised workflow.

Benefits for Blender

Blender 2.5 has a new audio engine and has caught up on what it was missing before. Now it's time to get up to speed and implement some state-of-the-art lip sync features.

Proposal

First there's some cleanup, refactoring and "minor" improvements I'd like to do, roughly including the following points (with estimated amount of work in parentheses [small/medium/large]):

- [medium] Improve memory management (e.g. remove news/deletes by using a managed pointer; this will also fix memory management problems with Python and enable bidirectional data exchange, which is currently not possible).

- [medium] Improve the playback handles by turning them into a proper class, as is already the case in the Python API.

- [medium] It should be possible to play a single Reader on a Device without a Factory. This enables the use of microphones in the audio engine. An open question is how to prevent or allow playing the same reader on multiple devices.

- [small] Something that should also be tried out is changing the Reader API regarding audio buffer access: the current architecture is bottom-up, but top-down might give better performance.

- [large] Dynamic changing of reader parameters such as sample rate and channel count; this requires dynamic resampling and a better channel mapping solution (mixer interface). It enables a future implementation of LFOs (Low Frequency Oscillators).

- [medium] Animation system improvements. The audio sample rate is a lot higher than the video frame rate, which is the time base in Blender, and the current interaction with Blender's animation system is really ugly. A better solution has to be found together with the animation system maintainer to get subframe accuracy and better performance.

- [small] The streaming of the OpenAL device is a little hackish at the moment and needs some reworking.

- [medium] Better channel (including multi-channel!) support; a feature still missing from Blender 2.4x is panning, which can finally be implemented with a well-thought-out user interface.
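To make the subframe accuracy point from the animation item above more concrete, here is a small Python sketch that evaluates a per-frame animated value (for example a volume) at an arbitrary audio sample position. It is a simplification under stated assumptions: the function names are hypothetical, the animation is given as one value per frame, and linear interpolation stands in for whatever Blender's animation system would actually provide.

```python
def sample_to_frame(sample_index, sample_rate, fps):
    """Convert an audio sample index to a (fractional) video frame."""
    return sample_index * fps / sample_rate

def animated_value_at_sample(frame_values, sample_index, sample_rate, fps):
    """Linearly interpolate a per-frame value at an audio sample position."""
    frame = sample_to_frame(sample_index, sample_rate, fps)
    last = len(frame_values) - 1
    if frame <= 0:
        return frame_values[0]
    if frame >= last:
        return frame_values[last]
    lo = int(frame)
    frac = frame - lo  # position between frame lo and frame lo + 1
    return frame_values[lo] + (frame_values[lo + 1] - frame_values[lo]) * frac
```

At 48000 Hz and 24 fps there are 2000 samples per frame, so evaluating the value only once per frame would hold it constant for 2000 samples, whereas the interpolation above changes it smoothly from sample to sample.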

The second part is the lip sync target, which includes implementing automatic lip sync functionality. Here are some papers on phoneme detection in audio files that I'd like to implement:

- http://www.lmr.khm.de/files/pdf/speech_berlin.pdf

- http://scribblethink.org/Work/lipsync91/lipsync91.pdf

- http://jan-schulze.de/file_download/14/Diplomarbeit.pdf (German!)

In terms of user interface, I thought about something similar to the lip sync interface of most other lip sync tools, which would bring back the audio window with a wave display and a phoneme editor. After talking to several artists and other developers, it seems best to link the phonemes to the visemes via (driver) bones with a range from 0 (viseme completely off) to 1 (viseme completely on), with a specific name per phoneme.

So the workflow might end up like this: First the animator creates the viseme poses for the given (fixed) set of phonemes, along with driver bones to control them, with a specific name per phoneme. Then the animator opens the audio window, which is split into a wave display and a phoneme editor, and can either run the phoneme detection algorithm and edit its result or start editing directly (add, change, move, remove, set strength, etc.). The rig is then automatically animated via these phonemes.
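The mapping from edited phonemes to driver values between 0 and 1 could look roughly like the following Python sketch. Everything here is an assumption made for illustration: the phoneme names, the tuple layout `(name, start_frame, end_frame, strength)`, the function name `viseme_weights` and the linear fade of `ramp` frames around each phoneme. Assigning the values to actual driver bones is left out on purpose.

```python
def viseme_weights(phonemes, frame, ramp=2.0):
    """Return a dict of driver values (0..1) per phoneme name at a frame.

    phonemes: list of (name, start_frame, end_frame, strength) tuples.
    ramp: number of frames used to fade in before and out after a phoneme
          (must be positive).
    """
    weights = {}
    for name, start, end, strength in phonemes:
        if frame <= start - ramp or frame >= end + ramp:
            w = 0.0                              # outside the phoneme
        elif frame < start:
            w = (frame - (start - ramp)) / ramp  # fading in
        elif frame > end:
            w = ((end + ramp) - frame) / ramp    # fading out
        else:
            w = 1.0                              # fully active
        w = max(0.0, min(1.0, w * strength))
        # If a phoneme occurs several times, keep the strongest value.
        weights[name] = max(weights.get(name, 0.0), w)
    return weights
```

For two phonemes `("A", 10, 14, 1.0)` and `("O", 14, 20, 0.5)`, the "A" driver fades in before frame 10, stays at 1 until frame 14 and fades out while "O" is held at its strength of 0.5.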

I'd love to work together with an artist to create a really nice example of how this can be used and works. Maybe I can enthuse one of the Sintel artists (Lee, Pablo, ...) and use Sintel for this.

Deliverables

- General improvements (optional parts)

- Phoneme detection algorithm (mandatory)

- Lip Sync Animation via Sound window (mandatory)

- Testing files (rig and sound files) (mandatory)

- User documentation (mandatory)

Development Methodology

Personally I prefer well-documented and tested code, in other words quality over quantity. I have already gained a lot of experience in software project management, so I know how easy it is to underestimate the work to be done and the time it takes. That's why the list of deliverables might look a little short and why I split them into mandatory and optional targets.

Another thing that's very important to me is the user experience. I'll try to get as many people as possible to test my work and give feedback that I can build on.

Schedule

I will start working on April 25, right after acceptance, during the Community Bonding Period, since I already know the community. As my university courses run until the end of June, I won't be able to work full-time during this period, but after exams, once the summer holidays have started, I'll work full-time on the project.

I'll start by deciding with my mentor which of the general improvements have higher priority and which can be kept for later, since all of the improvements together are more work than a typical GSoC project.

From the beginning of July I'd like to start on the lip sync work, and once that is complete there might be time for some of the remaining general improvements.

I have no clue how long the implementation of the phoneme detection algorithm will really take, but I hope no longer than half of the remaining period from July onwards, because I expect the UI part to be quite a lot of work. In any case, you should already know that I prefer to finish what I start, so I'd finish the project even after GSoC was over.

Why ...?

- ... I want to do this project: I've already worked on Blender audio, and this would be another huge step in this area that adds yet another important feature to Blender.

- ... I am the best for this project: I simply know Blender's audio code best.

- ... I'm interested in Blender: Coming from game development, I found Blender to be an awesome 3D tool that I never want to stop using.

About me

My name is Jörg Müller, I'm 22 years old, from Austria and study telematics at the Graz University of Technology. You can contact me via mail nexyon [at] gmail [dot] com or directly talk to me on irc.freenode.net, nickname neXyon.

In 2001, shortly after getting internet at home, I started learning web development: HTML, CSS, JavaScript, PHP and the like. Soon after, in 2002, I got into hobbyist game programming and with it into 2D and later 3D graphics programming. During my higher technical college education I received quite professional and practical training in software development, including project management and projects for external companies. During this time I also entered the Linux and open source world (http://golb.sourceforge.net/). Still into hobbyist game development, I stumbled upon the Blender game engine and was annoyed that audio didn't work on my system. That's how I got into Blender development; I have been an active Blender developer since summer 2009, working mainly on the audio system.

Apart from that, in my spare time I do sports (taekwondo, swimming, running), play music (piano, drums) and especially like going to the cinema.

- 09/2003 - 06/2008 Higher technical college, department electronic data processing and business organisation

- 07/2008 - 12/2008 Military service

- 01/2009 - 02/2010 Human medicine at the Medical University of Graz, already taking Telematics courses at the Graz University of Technology

- 03/2010 - today Telematics at the Graz University of Technology