Dev:Source/Render/Cycles/ReducingNoise


Latest revision as of 04:46, 29 June 2018 (Friday)


Reducing Noise

In simpler setups that use only direct light or limited GI, Cycles has a number of sources of unnecessary noise. This document gives an overview of how we will attempt to improve the engine.

Bounces

Several controls already exist: the maximum number of bounces can be limited for each ray type, caustics can be disabled, and a node setup can restrict the world to contributing light to only some shaders. One big control is still missing:

- Glossy BSDFs handle direct and indirect light in a unified way, as in the real world. An option should be added to control the amount of indirect light a closure contributes, so that glossy shaders can be used without the surface turning into a glossy mirror.
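A minimal sketch of what such a control could look like, assuming a hypothetical per-closure `indirect_scale` parameter (not an existing Cycles setting): direct light is left untouched and only light arriving through indirect bounces is scaled. Radiance values are plain scalars to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class GlossyClosure:
    # Hypothetical closure; `indirect_scale` is an assumed parameter, not a Cycles API.
    weight: float = 1.0
    indirect_scale: float = 1.0  # 1.0 = physically unified, 0.0 = no indirect glossy light


def shade_glossy(closure: GlossyClosure, direct: float, indirect: float) -> float:
    """Combine BSDF-weighted direct and indirect radiance; only indirect is scaled."""
    return closure.weight * (direct + closure.indirect_scale * indirect)


# Unified (default) versus highlights only: the surface keeps its direct
# specular highlights but stops mirroring the rest of the scene.
print(shade_glossy(GlossyClosure(), direct=0.25, indirect=0.5))                    # 0.75
print(shade_glossy(GlossyClosure(indirect_scale=0.0), direct=0.25, indirect=0.5))  # 0.25
```

Values between 0 and 1 trade physical correctness for less glossy noise, the same kind of trade-off the existing bounce limits already make.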

Sampling

The other "unnecessary" noise comes from the sampling methods used:

- Mix closure sampling picks only one closure for direct lighting. This is done mostly to suit GPU rendering; the OSL backend already has an improved implementation, but it still needs to be figured out how this can be done on a GPU.

- Each path vertex samples only a single light. This keeps the render time of a single pass low, but causes noise. An option to sample all lights should be added, at the cost of slower rendering of each path.

- On every bounce, only a single path is followed. For mix closures this introduces noise. A non-probabilistic rendering mode that expands the whole ray tree could be added, though again, implementing this on a GPU is a challenge.

Addressing these requires adding a sampler that renders each tile with all of its samples in one go. This fits well with final (non-viewport) rendering. On the CPU this is relatively simple to do, so it will certainly be added; GPU support for some of these features may need to wait a little longer.
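The trade-off in the single-light item above can be illustrated with a toy estimator. This sketch assumes each light's contribution at a path vertex is a known fixed number (in a real renderer it also involves shadow rays and sampling a point on the light), and the function names are hypothetical. Picking one light uniformly and dividing by its probability 1/N is unbiased but noisy; summing all lights costs N evaluations but removes the light-selection noise.

```python
import random
from typing import List, Sequence, Tuple


# Hypothetical helper names; not a Cycles API.
def estimate_one_light(contribs: Sequence[float], rng: random.Random) -> float:
    """Pick one light uniformly and divide by its selection probability 1/N."""
    n = len(contribs)
    i = rng.randrange(n)
    return contribs[i] * n


def estimate_all_lights(contribs: Sequence[float]) -> float:
    """Evaluate every light: N times the work, but no light-selection noise."""
    return sum(contribs)


def mean_and_variance(samples: List[float]) -> Tuple[float, float]:
    """Sample mean and (population) variance of a list of estimates."""
    m = sum(samples) / len(samples)
    return m, sum((s - m) ** 2 for s in samples) / len(samples)


if __name__ == "__main__":
    contribs = [0.5, 0.0, 2.0, 0.25]  # made-up per-light contributions
    rng = random.Random(1)
    one = [estimate_one_light(contribs, rng) for _ in range(10_000)]
    print("one light :", mean_and_variance(one))        # mean near 2.75, variance > 0
    print("all lights:", estimate_all_lights(contribs))  # exactly 2.75
```

Both estimators converge to the same value; the difference is only in how much noise each path contributes versus how long each path takes.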

More Advanced Algorithms

Irradiance caching can speed up the rendering of diffuse bounces. The more a scene relies on glossy bounces and fine geometric detail, the less impact it has, but an implementation is being considered.
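For reference, a minimal sketch of a classic irradiance cache using the weighting scheme of Ward et al. (1988). This is one possible design, not a description of what Cycles would implement, and the class names and the `max_error` default are assumptions. Each record stores irradiance at a point together with a validity radius; a lookup blends the records whose position and normal are close enough, and a miss tells the caller to trace rays and store a new record.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def dist(a: Vec3, b: Vec3) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


@dataclass
class Record:
    pos: Vec3
    normal: Vec3       # unit length
    irradiance: float
    radius: float      # validity radius R_i


@dataclass
class IrradianceCache:
    # Hypothetical cache; max_error is Ward's tolerance "a" (assumed value).
    max_error: float = 0.5
    records: List[Record] = field(default_factory=list)

    def weight(self, r: Record, pos: Vec3, normal: Vec3) -> float:
        # Weight grows as the query gets closer in position and orientation.
        d = dist(pos, r.pos) / r.radius
        n = math.sqrt(max(0.0, 1.0 - dot(normal, r.normal)))
        denom = d + n
        return math.inf if denom == 0.0 else 1.0 / denom

    def lookup(self, pos: Vec3, normal: Vec3) -> Optional[float]:
        total_w = 0.0
        total_e = 0.0
        for r in self.records:
            w = self.weight(r, pos, normal)
            if w > 1.0 / self.max_error:
                if math.isinf(w):
                    return r.irradiance  # query coincides with a record
                total_w += w
                total_e += w * r.irradiance
        if total_w == 0.0:
            return None  # cache miss: caller traces rays and adds a record
        return total_e / total_w

    def add(self, record: Record) -> None:
        self.records.append(record)
```

Because the cached quantity is view-independent irradiance, it only helps diffuse interreflection; glossy bounces still have to be path traced, which is why heavier use of glossy shading reduces the benefit.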

In scenes with an HDRI background image or many light sources, we will also attempt to improve sampling. Such situations will always produce some noise, but faster convergence should be possible.
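One common way to converge faster with many lights is to choose lights in proportion to their emitted power instead of uniformly; the same cumulative-distribution idea can be applied to an HDRI background by treating its pixels, weighted by brightness, as the candidates. The sketch below illustrates that general technique and is not the method Cycles adopted; the function names are hypothetical.

```python
import bisect
import itertools
from typing import List, Sequence, Tuple


# Hypothetical helper names; not a Cycles API.
def build_light_cdf(powers: Sequence[float]) -> List[float]:
    """Cumulative distribution over lights, proportional to their power."""
    total = sum(powers)
    if total <= 0.0:
        raise ValueError("need at least one light with positive power")
    cdf = list(itertools.accumulate(p / total for p in powers))
    cdf[-1] = 1.0  # guard against floating-point round-off
    return cdf


def pick_light(cdf: Sequence[float], u: float) -> Tuple[int, float]:
    """Map a uniform u in [0, 1) to (light index, selection probability)."""
    i = bisect.bisect_right(cdf, u)
    prev = cdf[i - 1] if i > 0 else 0.0
    return i, cdf[i] - prev
```

A light's contribution is then divided by the returned probability, so bright lights are sampled more often without biasing the result. With powers `[1.0, 3.0]`, for example, `pick_light(cdf, 0.1)` selects light 0 with probability 0.25 and `pick_light(cdf, 0.5)` selects light 1 with probability 0.75.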

Caustics and indirect light from small openings are difficult for a path tracer to render. These situations could be addressed with algorithms such as photon mapping or bidirectional MLT. However, we will not focus on these for now; instead we advise avoiding such light setups or simulating them with tricks, as is done in most production and animation rendering.

Portals would also be useful to add, making it easier for the path tracer to find, for example, a window through which much of the light enters.
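As an illustration of how a portal helps, here is a minimal sketch that samples a direction towards a portal (for example a window) by picking a uniform point on it, then converts the area pdf to a solid-angle pdf with the usual distance-squared-over-cosine factor. The parallelogram parameterization and the function name are assumptions; a production version would combine this with other strategies, for example through multiple importance sampling.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sample_portal(shading_pos: Vec3, corner: Vec3, edge_u: Vec3, edge_v: Vec3,
                  u1: float, u2: float) -> Optional[Tuple[Vec3, float]]:
    """Sample a direction towards a portal (hypothetical helper, not a Cycles API).

    The portal is corner + s * edge_u + t * edge_v with s, t in [0, 1]
    (a rectangle for a typical window). Returns (unit direction, pdf per unit
    solid angle), or None for degenerate samples.
    """
    point = tuple(c + u1 * a + u2 * b for c, a, b in zip(corner, edge_u, edge_v))
    d = tuple(p - s for p, s in zip(point, shading_pos))
    dist2 = sum(x * x for x in d)
    if dist2 == 0.0:
        return None
    dist = math.sqrt(dist2)
    wi = tuple(x / dist for x in d)
    n = _cross(edge_u, edge_v)
    area = math.sqrt(sum(x * x for x in n))  # |edge_u x edge_v| = portal area
    if area == 0.0:
        return None
    cos_portal = abs(sum(w * x for w, x in zip(wi, n))) / area
    if cos_portal == 0.0:
        return None
    # Uniform area pdf 1/area, converted to solid angle: dist^2 / (area * cos).
    return wi, dist2 / (area * cos_portal)
```

The returned direction would then be used to look up the environment behind the window, so samples are concentrated where light can actually enter the room.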

Adaptive Sampling Proposal

A computationally simple way to measure undersampled regions has been proposed. It could enable an unbiased interface setting that always achieves a given image quality while minimizing render time.

The concept could also be adapted to highlight undersampled regions and adjust sampling there in an adaptive mode. Here is some detail:

http://blenderartists.org/forum/showthread.php?236453-Measuring-Noise-in-Cycles-Renders

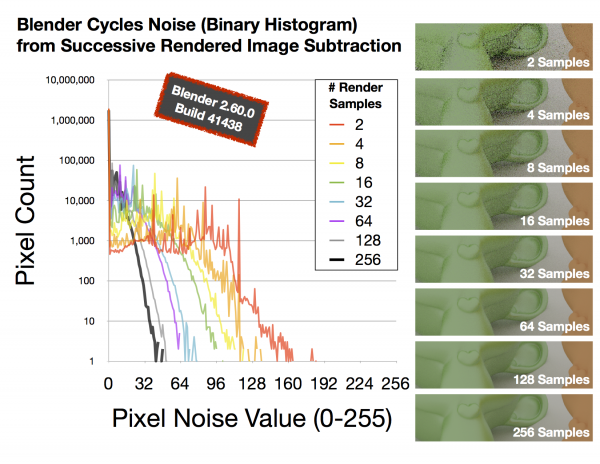

The concept works by subtracting one render pass from a previous render pass and converting the difference to black and white. Because rendering is iterative and converges toward a perfect image, successive render passes eventually stop changing pixel values. In essence, the algorithm measures the rate of change of RGB pixel values over time, so it works for any rendered scene or texture node setup, even if the texture itself is "noisy": such a texture is part of the converged image and does not change between passes. What matters is that the rate of change of pixel RGB values drops in a reproducible and easily measured way.
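A minimal sketch of the measurement, assuming `prev` and `curr` are the accumulated (averaged) images after two successive passes, stored as rows of RGB tuples. The conversion to black and white uses Rec. 709 luminance weights, which is an assumption, and the threshold for flagging a pixel as undersampled is an illustrative parameter; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]
Image = Sequence[Sequence[RGB]]


# Hypothetical helper names; not a Cycles API.
def luminance(rgb: RGB) -> float:
    """Convert RGB to a single grey value (Rec. 709 weights, an assumption)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def pass_difference(prev: Image, curr: Image) -> List[List[float]]:
    """Per-pixel absolute change in grey value between two successive passes."""
    return [[abs(luminance(c) - luminance(p)) for p, c in zip(prow, crow)]
            for prow, crow in zip(prev, curr)]


def undersampled_mask(diff: List[List[float]], threshold: float) -> List[List[bool]]:
    """True where the image is still changing faster than `threshold` per pass."""
    return [[d > threshold for d in row] for row in diff]


def mean_change(diff: List[List[float]]) -> float:
    """Whole-image rate of change, usable as a stopping criterion."""
    values = [d for row in diff for d in row]
    return sum(values) / len(values)
```

Since each accumulated image is a running average, the change between passes tends to shrink as samples are added: `mean_change` falling below a chosen target could end the render, and `undersampled_mask` marks the regions an adaptive mode would keep sampling.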

The measurement only needs to be computed for well under 1% of the render passes, and subtracting two images that are already stored in RAM takes far less than a second anyway.

Here is the data analysis: