Difference between revisions of "User:Phonybone/BlenderFoundation/2013"


Latest revision as of 05:57, 29 June 2018 (Fri)

Contents

- 1 2013

- 1.1 Week 1 (1st September)

- 1.2 Week 2 (9th September)

- 1.3 Week 3 (16th September)

- 1.4 Week 4 (23rd September)

- 1.5 Week 5 (30th September)

- 1.6 Week 6 (7th October)

- 1.7 Week 7 (14th October)

- 1.8 Week 8 (21st October)

- 1.9 Week 9 (28th October)

- 1.10 Week 10 (4th November)

- 1.11 Week 11 (11th November)

- 1.12 Week 12 (18th November)

- 1.13 Week 13 (25th November)

- 1.14 Week 14 (2nd December)

- 1.15 Week 15 (9th December)

- 1.16 Week 16 (16th December)

- 1.17 Week 17 (23rd December)

- 1.18 Week 18 (30th December)

- 2 2014

2013

Week 1 (1st September)

Info Mostly spent the week reading through bug tracker reports, assigning and closing.

Next Week

- Continue bug tracker support, perhaps close/move pending particle bugs to ToDo

- Would like to start some compositor improvements (discussed with Sergey):

- Debugging cleanup: Make a separate file for graphviz output and general debug settings

- Avoid deeply nested function calls in ExecutionGroups. Use a "stack" for storing node input/output variables. Then make a flat loop instead of descending down from final outputs through the whole tree.

- Should make debugging much easier and perhaps give some speed improvement. In the long run would help making the compositor programmable, code generation is much easier with a structure like that.

- Refine the proposal for particle nodes roadmap. First rough outline here.
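The flat-loop idea for ExecutionGroups listed above can be illustrated with a small sketch. It is plain Python rather than compositor code, and all names (`Node`, `flatten`, `evaluate`) are hypothetical. Nodes are sorted once into dependency order, every result is written to a slot in a flat value list, and evaluation becomes a single loop instead of a recursive descent from the final output.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    """Hypothetical node: a function plus the nodes feeding its inputs."""
    name: str
    func: Callable[..., float]
    inputs: List["Node"] = field(default_factory=list)


def flatten(output: Node) -> List[Node]:
    """Return all nodes reachable from `output`, inputs before their users.

    Uses an explicit stack, so the call depth stays constant no matter how
    deep the tree is. Assumes the graph has no cycles.
    """
    order, seen = [], set()
    stack = [(output, False)]
    while stack:
        node, expanded = stack.pop()
        if expanded:
            order.append(node)  # all inputs of this node are already in `order`
            continue
        if id(node) in seen:
            continue
        seen.add(id(node))
        stack.append((node, True))
        for inp in node.inputs:
            stack.append((inp, False))
    return order


def evaluate(output: Node) -> float:
    """Evaluate the tree with one flat loop over a list of value slots."""
    order = flatten(output)
    slot = {id(node): i for i, node in enumerate(order)}
    values = [0.0] * len(order)  # flat storage for intermediate results
    for i, node in enumerate(order):
        args = [values[slot[id(inp)]] for inp in node.inputs]
        values[i] = node.func(*args)
    return values[-1]  # the output node is always last in the order


# Small diamond-shaped tree: a feeds b and c, which both feed d.
a = Node("a", lambda: 2.0)
b = Node("b", lambda x: x + 1.0, [a])
c = Node("c", lambda x: x * 3.0, [a])
d = Node("d", lambda x, y: x + y, [b, c])
result = evaluate(d)
```

Because the evaluation order and the slot indices are known up front, the same structure could also be used to emit code for each node in sequence, which is the code generation benefit mentioned above.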

- General

- Compositor cleanup: Merge conversion operations into a single file (see also [r59820] by Sergey)

- Applied a patch by Henrik Aarnio for fitting compositor backdrop to region.

- Fixes

- Fix T36639, textures not reloaded after fixing paths.

- Fix T36628, Muting bump node alters material visibility.

- Fix T36194, Halo material on Layer 2 produces flares on layer 1.

- Fix T36113, Translate's wrapping has 1 pixel gap in X and Y after scale node.

Fixed: 4, Closed: 7

Week 2 (9th September)

Info Didn't get very many bugs fixed, I'm afraid ...

Compositor improvements for avoiding nested virtual function calls turned out to be impossible without some wider redesign. The problem is that in order to pass color inputs to a pixel function we need to know the potential coordinate transformations a node performs. These transforms are currently coded into the executePixel functions themselves, so we'd need to add (invertible) transform methods to each node which allow calculating the input coordinates. This would be a good idea in general, because it would also allow better automation of "area of interest" calculation and the like, removing a number of typical error sources. But it's just not something that can be done in a few days. Given that we are considering OSL for the compositor, this could just be a waste of time.
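As a rough illustration of what per-node transform methods could look like, here is a minimal Python sketch. The class names and the `to_input` method are hypothetical and do not exist in the compositor. Each node maps an output pixel coordinate back to the input coordinate it reads from, and the area of interest is obtained by pushing the corners of the requested rectangle through the chain. Using only the corners is valid for affine transforms such as these; non-linear nodes would need a more careful bound.

```python
class TranslateNode:
    """Hypothetical node that shifts the image by (dx, dy)."""

    def __init__(self, dx, dy):
        self.dx, self.dy = dx, dy

    def to_input(self, x, y):
        # Output pixel (x, y) reads the input image at (x - dx, y - dy).
        return x - self.dx, y - self.dy


class ScaleNode:
    """Hypothetical node that scales the image by (sx, sy) around the origin."""

    def __init__(self, sx, sy):
        self.sx, self.sy = sx, sy

    def to_input(self, x, y):
        # Output pixel (x, y) reads the input image at (x / sx, y / sy).
        return x / self.sx, y / self.sy


def area_of_interest(chain, rect):
    """Map an output rect (xmin, ymin, xmax, ymax) back through `chain`.

    `chain` lists the nodes from the output side towards the input side.
    Returns the bounding box of the transformed corners.
    """
    xmin, ymin, xmax, ymax = rect
    corners = [(xmin, ymin), (xmax, ymin), (xmin, ymax), (xmax, ymax)]
    for node in chain:
        corners = [node.to_input(x, y) for x, y in corners]
    xs = [c[0] for c in corners]
    ys = [c[1] for c in corners]
    return min(xs), min(ys), max(xs), max(ys)


# Image is translated by 10 pixels in X first, then scaled by 2.
aoi = area_of_interest([ScaleNode(2.0, 2.0), TranslateNode(10.0, 0.0)],
                       (0.0, 0.0, 100.0, 100.0))
```

With such methods the area of interest follows from the node chain itself instead of being hand-written per operation.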

Next Week Bug tracker

Make a proposal for the first particle overhaul stage (buffer system, attributes, point cache interaction, duplis). Most of it exists on paper, needs to go to wiki. Implementation should be pretty straightforward, especially if good separation of concerns can be maintained (e.g. keep particles agnostic of point cache details and dupli system).

A few review issues are also pending.

- General

- Helped Thomas Dinges implement volume shading for OSL in his branch [1]

- Cleanup and improvements to the compositor debugging and graphviz output: r60109

- A useful addon has emerged from previous work with Thomas on the SkyTexture node: SkyTex Objects - allows linking nodes to objects for vector inputs (controlling skytex sun direction among others).

- Fixes

- T36663 Bone properties sometimes vanish when mousing into properties window

- T36700 z-depth not rendering properly at (n*256)+1 dimensions.

- T36706 Adding a new input to the file output node in python immediately crashes Blender

- T36720 Gaussian blur incomplete result

- T36721 Gaussian blur produces corrupt image

- Uncommitted

- T36687 Animation channels can't be grouped in action editor (assigned to Joshua for review)

- T36226 Select Linked works not in touch with Prefs (currently still in codereview for some UI changes: [2])

- Fixes (unreported)

- r59993: Fix for makesrna collection property lookup C++ wrappers

Fixed: 1, Closed: 3

Week 3 (16th September)

Info More bug tracker work, few genuine fixes again.

Next Week Coordinate with Campbell and Brecht how to close more bugs - there are a lot of undecided reports awaiting confirmation/clearance. Finally finish the first part of the particle buffer proposal.

- General

- Cleaned up bug tracker states, moving a number of New reports to Investigate or Confirmed

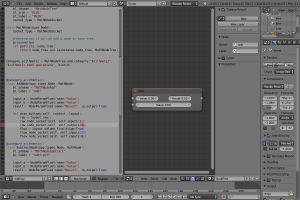

- pynodes framework system: This is a set of python modules to aid in construction of custom node trees.

- Used as part of an experimental addon for "modifier nodes" - a node system on the object level based on the existing modifier stacks:

- Fixes

- T36734 Matcap displays solid black (duplicates closed T36733, T36740)

- T36739 Cancelling transform after adding new nodes now removes the new node again

- T36755 EXR Layers are not fully updated on scene load or image refresh

- T36226 Select Linked works not in touch with Prefs (committed after a number of UI improvements)

- Fixes (unreported)

- Fix for OutputFile node, crashed with unconnected sockets on MultiLayer EXR formats

Fixed: 5 Closed: 8

Week 4 (23rd September)

Info Spent some more time working on the "modifier nodes" addon. While it's technically an addon for modifier scripting, the principles used there are fundamental to future particle nodes as well:

- Intermediate language specification (based on OSL, working title "blang" for Blender language)

- Fully-fledged parser system for this language (using the lightweight modgrammar package)

- Code generator to convert "blang" scripts into executable code (in this case python for modifier drivers)

- Node system as a UI layer with pynodes

Bug fixing fell a bit behind, not many reports in my areas of expertise though (nodes, compositor, OSL).

Next Week Will try to fix some of the more feasible particle bugs, check if there are true regressions.

I need to investigate requirements and designs for point cache systems, since it seems that this is crucial to implementing new particle buffers. Alternatively would consider working on the dupli system, this is the most important display mode of particles (apart from hair) and needs some love.

A number of node patches were suggested by Sjoerd de Vries for extending the display features for sockets. To avoid feature creep in the API I would like to implement an extended uiLayout system that allows placing sockets more freely.

- Fixes

- T36797 make linked node groups local does not work

- T36790 OSL point parameters of shader nodes not initialized correctly from UI inputs

- T36630 Particlesystem - boids - goal - collision

Fixed: 3 Closed: 5

Week 5 (30th September)

Info Started investigating point cache refactor based on Alembic. A git branch including some basic Alembic linking can be found here: point cache branch. I wouldn't mind continuing this work in an SVN branch, but at this point I'm still learning about the Alembic API, so there is not much testable code yet.

Building Alembic itself can be a bit tedious, so i've collected instructions in the wiki: User:Phonybone/PointCache/BuildNotes

The Alembic API is not very well documented in terms of tutorials, so it's quite a steep learning curve. Some initial thoughts on how it can replace the current point cache can be found here: User:Phonybone/PointCache/InitialThoughts

Next Week Will continue point cache work. I'd like to get a simple read/write procedure working next to the existing cache system, so i don't have to change too much code before making it an actual replacement.

Alembic has generic support for writing out archives, but there is very little usable example code. To get something going as soon as possible I'd like to make use of the existing HDF5 writer classes, using external disk cache files. This could then be supplemented later on by an integrated writer class to store cache data directly inside .blend files. Having the option of importing and exporting standard file formats like HDF5 is certainly a welcome feature for pipeline integration in any case.

- Fixes

- T36853 Undo not working for Particles Hair - Free Edit

- T36850 Material Node Editor Crash Always

- T36694 Texture node groups tend to crash Blender a lot

- T36939 Objects with nodes appear in gray in viewport, using Solid shade, and the Blender Engine (or Game Engine)

- T36962 "Render emitter" option for hair is ignored in Cycles

Fixed: 5 Closed: 1

Week 6 (7th October)

Info

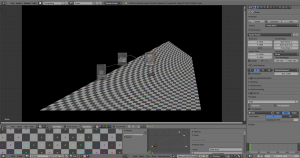

- Implemented a UI layout extension for nodes that allows more flexible placing of sockets. This layout is an extension of the UILayout RNA type, it uses the same uiLayout struct, but gets a few new node-specific functions in the RNA. Sockets can be placed in the layout like buttons. This allows reordering sockets and putting equivalent input/output sockets on the same row, e.g. for nodes that take an image and pass out a modified image.

- Default behavior: if sockets are not placed explicitly in the layout, they get drawn in the standard outputs-options-preview-inputs order.

- Potential future uses:

- Automatically disable sockets if they are not drawn in the layout.

- Allow vertical node layouts.

- Alembic point cache branch: Work has been slow there due to lack of clear documentation for the Alembic API. The basic structure is taking shape:

- New "pointcache" subfolder in blender/source/ for the point cache API and implementation in pointcache/intern/

- API is always available, but will use stubs if Alembic build option is disabled (might use current implementation then if feasible)

- PointCache DNA struct stores an anonymous runtime "PTCArchive" pointer holding an Alembic I/OArchive for continuous cache read/write.

- Specialized functions for the various point cache uses (particles, cloth, meshes, etc)

- Implementation uses Alembic Schemas to define the properties to store in the cache and how to extract them from the DNA

Next Week Will focus on the point cache.

- Goal is to get the basic infrastructure working

- Get particle caching working with both write and read as a first test case before doing all the other schemas.

- Investigate file formats and possible internal storage in .blend files (Comment by Steve LaViete on Ogawa as alternative to standard HDF5)

- Investigate baking, import/export of baked caches

- Investigate cache files for simulation init states, this depends on cached systems to allow it as a feature (particles probably would be difficult due to how they reset the buffer)

- Fixes

- T36981 Removing Sample line fails during render

- T36991 After rendering with Sampled Motion Blur, the moving objects place is wrong

- T37047 Expanded Enum Alignment glitching

- Fixes (unreported)

- Fix for Output File node operators: added a sanity type check to avoid using invalid node data and crash when calling from menu. r60574

- Check the node Add operator's type property before attempting to create a new node to prevent python exceptions. r60575

Fixed: Closed: 36984

Week 7 (14th October)

Info

- Finished a patch for improved node drawing based on uiLayout.

- Brecht objected to the idea of exposing socket functions in the draw_buttons node function though, since it would make it more difficult to implement common behavior in other node drawing situations like the tree view (only materials atm) or collapsed nodes. Agreed on a more limited patch which would implement the layout functionality for nodes, but not expose this as a uiLayout feature for now. This can be used to implement nicer socket arrangements, but keeps it out of the API so other drawing methods can be adapted more easily.

- Point cache is not much further yet. I've decided to start with the baking feature rather than the continuous cache writing used with single frame steps - this is easier to implement first because it avoids problems with creating/storing/freeing archive objects in arbitrary start frames.

Next Week

- For BConf next weekend I'd like to wrap up a few features for the modifier nodes addon, which spurred a lot of interest among addon writers and node aficionados. I need to implement some features for the Data/Object input nodes which allow using arbitrary bpy data (much like drivers), but require a few workarounds for limitations of the RNA API.

- For point cache i want to get to a point where particle data can be cached fully in a "baked" archive. Then i'd like to do some size comparisons between current cache data with full accuracy (cache step = 1) and alembic archives.

- The Ogawa format is probably the best choice for point cache implementation in Blender. It can use a generic stream instead of only files, allowing direct storage in DNA data. Further it is much better suited for threaded write/read, which would be great for fast playback with interpolated data.

- Fixes

- T37057 Detach (Alt + D) doesn't work in nodes editor / compositor (r60742)

- T37064 Startup file gets corrupted, crash. Also reported by Dalai in T37057 (r60743)

- T37084 Backdrop not invalidating inside node groups

- T37110 After deletion of large scene, file still huge

Fixed: 4 Closed: 2

Week 8 (21st October)

Info Preparation for BConf 2013. Fixed a number of bugs in the modifier_nodes addon to get it ready for some showcasing.

- Fixes

- T37175 Viewer node issue for newly toggled render passes

- Fixes (unreported)

- r60906 Wrong clamping result in OSL math node

Week 9 (28th October)

Info

- Wrapped up a few bugs found in the modifier nodes addon.

- Compositor work: Investigated issues with the sampling methods used in the compositor. The EWA filtering methods require better information about the local pixel transformation, in particular its 2D Jacobian derivatives. See the bf-compositor list for some discussion:

http://lists.blender.org/pipermail/bf-compositor/2013-November/000025.html
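To make the role of the Jacobian concrete, here is a short Python sketch of the standard textbook EWA construction. It is not taken from the compositor code, and the function names are hypothetical. A unit circle around an output pixel maps to an ellipse in the source image, and the four partial derivatives of the coordinate mapping fully determine that ellipse. The Gaussian falloff constant in `ewa_weight` is an arbitrary choice for illustration.

```python
import math


def ewa_ellipse(ux, vx, uy, vy):
    """Implicit ellipse A*du^2 + B*du*dv + C*dv^2 = F in source image space.

    (ux, vx) is d(u, v)/dx and (uy, vy) is d(u, v)/dy, i.e. the two columns
    of the Jacobian of the mapping from output pixels (x, y) to source
    coordinates (u, v). F is the squared determinant of that Jacobian.
    """
    A = vx * vx + vy * vy
    B = -2.0 * (ux * vx + uy * vy)
    C = ux * ux + uy * uy
    F = (ux * vy - uy * vx) ** 2
    return A, B, C, F


def ewa_weight(A, B, C, F, du, dv):
    """Filter weight for a source texel at offset (du, dv) from the center.

    Texels outside the ellipse get zero weight; inside, a Gaussian falloff
    is used (the factor 2.0 is an assumed, tunable sharpness constant).
    """
    q = A * du * du + B * du * dv + C * dv * dv
    if F <= 0.0 or q >= F:
        return 0.0
    return math.exp(-2.0 * q / F)


# Mapping that stretches the image by 2 along u: the footprint is an
# ellipse with semi-axes 2 (along u) and 1 (along v).
A, B, C, F = ewa_ellipse(2.0, 0.0, 0.0, 1.0)
```

If only part of the Jacobian is known (for example just a scale per axis), the cross term `B` is lost and the ellipse cannot be rotated to follow sheared or rotated footprints.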

Next Week

- If feasible, implement coordinate transform functions for compositor nodes, giving the necessary data for sampling of non-linearly deformed images.

- Define a roadmap for physics work.

- Fixes

- T37192 Rendered preview causes crash when deleting a material node in shader node editor

- T37194 OSL script crashes blender

- T37260 Weird behavior in adding gears from extra objects Addon

- Fixes (unreported)

- r60989 Particle texture influence bug causing undefined (nan) values

Week 10 (4th November)

Info

- Have been working on improvements to the EWA (elliptical weighted average) filtering in the compositor. This is one of the biggest hurdles when working with tracking, according to Sebastian König. The current implementation is simply not correct: it doesn't have the full Jacobian matrix for calculating image-space ellipses. So in addition to fixing the EWA sampling algorithm, the coordinate transformation functions in the compositor will need to be improved to support proper filtering.

- Implemented absolute grid snapping for nodes r61170

- Removed the deprecated "show cyclic dependencies" node operator r61172

- Removed the automatic link shifting when connecting to occupied sockets r61178

Next Week Still the old targets:

- Write down a physics framework roadmap

- Continue work on the pointcache system

- Fixes

- T37312 Backdrop value offset is not refreshed

- T37333 Bad default value in Color Balance

- T37348 Different behaviour in Node editor

- Fixes (unreported)

- Fix for error in r61159: the new gpencil_new_layer_col in UserDef is supposed to be a 4 float RGBA color, but has only 3 floats r61177

- Closed

- T37332 the particle systems "Adaptive / Automatic Subframes" option is always grayed out

- Patches

- 37274: Circle select for node editor, by Henrik Aarnio (hjaarnio) r61173

Week 11 (11th November)

Info Git/Phabricator migration week, not much happened otherwise ...

Created a Python script to read and write binary point cache data. This was a request by Bassam Kurdali to deal with some jittering artifacts in baked cloth simulations. The script implements a low-pass filter method which smooths out point movements over a few frames, controlled by a smoothing factor. Thread on blenderartist.org
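The filtering step on its own can be sketched in a few lines of Python. This is not the original script: reading and writing the binary cache files is left out, the function name is hypothetical, and simple exponential smoothing is assumed as the low-pass filter, which may differ from the method actually used.

```python
def smooth_frames(frames, factor):
    """Low-pass filter point positions over time with exponential smoothing.

    frames: list of frames, each a list of (x, y, z) tuples with the same
            point order in every frame.
    factor: smoothing factor in [0, 1). 0 leaves the data unchanged, values
            close to 1 follow the previous frames more strongly.
    """
    if not 0.0 <= factor < 1.0:
        raise ValueError("factor must be in [0, 1)")
    if not frames:
        return []
    smoothed = [list(frames[0])]  # the first frame is kept as-is
    for frame in frames[1:]:
        previous = smoothed[-1]
        smoothed.append([
            tuple(factor * p + (1.0 - factor) * c for p, c in zip(prev_pt, cur_pt))
            for prev_pt, cur_pt in zip(previous, frame)
        ])
    return smoothed


# One point that jumps from x=0 to x=1 between the first two frames.
frames = [[(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)]]
result = smooth_frames(frames, 0.5)
```

A filter like this trades jitter for lag: the smoothed point approaches the new position over several frames instead of jumping there immediately.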

- Closed

Week 12 (18th November)

Info Started working on design for the new point cache Alembic implementation. A new design task was opened to encourage discussion about actual workflow issues and how to adapt the existing point cache paradigms to fit in with the Alembic model. Design task

- Fixes

- T37545 SV+H color cube display changes H slider position when modifying S or V

- Patches

- c566e408e42836c Cleanup of compositor executePixel functions: renamed the 3 different variants to make it easier to tell them apart in IDEs and make future work on sampling and pixel transforms easier.

Week 13 (25th November)

Info Have been working on Alembic point cache branch all week. Point Cache branch on github

The DNA data for PointCache has been cleaned up to make replacement in particles possible. The particle code unfortunately uses direct access to the pointcache DNA, especially for the particle edit mode and trails display.

- "Multiple caches" lists have been removed. This feature is pretty useless because all it allows is comparing the current simulation results to a previously baked cache - but in order to actually revert to that previous simulation state one needs the full settings of the simulation data, which requires a .blend file version and then the cache is stored alongside it anyway. The code for cache lists was making things unnecessarily difficult and required some bad special case handling because of self referencing in the RNA.

- Memory cache distinction has been removed. All caches will be "disk based" by default now, which avoids the need to have 2 separate cases in every function and keep track of the disk/memory setting everywhere. This doesn't mean files necessarily have to be stored on disk, they can still be packed into blend files and loaded into memory if small enough, but it will be a secondary feature so the code doesn't have to take it into account everywhere.

- Keep the point cache state separate from user-defined settings. This includes all automatically set flags (such as BAKED, SIMULATION_VALID, etc.) as well as frame indices. This enforces cleaner design.

- Cache reading for particle data tested. This currently still requires a full cache export (aka "bake"); automatic cache writing is not yet implemented. Only positions are stored in the cache; the priority is to get the basic workflow set up before adding all the cache data properties.

Next Week

- Continue working on point cache:

- Automatic caching should be re-implemented. There are some design decisions that need to be sorted out, such as whether settings should be locked after "full bake" and how to indicate a valid cache state.

- Extend caching to mesh data. This is new territory for the point cache, so getting this tested is important for identifying possible design flaws and new requirements.

Week 14 (2nd December)

Did a lot of cleanup in the existing point cache code. The main challenge is to prevent the cache users (particles especially) from directly accessing the point cache DNA data, which causes a lot of problems. To enable a clean separation of concerns and enable a nice API it is necessary to at least wrap the existing features that do this behind some functions (trails display, particle edit mode), until they can be replaced by an Alembic-based implementation.

The cache baking feature has been removed entirely for now, since this will get some redesign (see the discussion in the design task: http://developer.blender.org/T37578).

The basic idea is to not try to control the simulation state too much from the point cache side. This leads to conflicts between the simulation settings and the cache state and spaghettifies the code. Instead the simulation should use whatever data is available, with good tools to ensure a valid cache state as much as possible, while the cache is oblivious to the state of the simulation that generates it.

Week 15 (9th December)

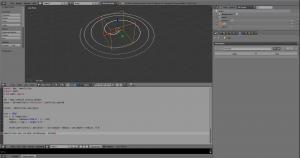

Reactivated the sleeping particle branch (as particle work has been explicitly requested). The goal is to finish the particle system implementation such that it can be accessed via the RNA api and scripted.

Overall concept separates the particle definition from its state. The particle system has a list of attribute definitions (and later defines node use, display features), while the state contains the actual data. Defining a new state for the next frame can happen outside of the particle system, which solves a number of intricate problems with consistency. Nodes will be used for this process eventually, but as a first test a python script should be able to do this.

Main challenge is to provide a consistent and flexible API for manipulating state data, especially for creating and removing particles and identifying them reliably. In the current Blender particles this happens with a simple array index, but this is no longer viable with a dynamic buffer. The new system will use unique identifiers for particles, which form a mandatory data layer. Looking up a particle by its identifier must be as fast as possible (using sorting and binary search), although eventually nodes will have their own runtime system for defining a particle state, independent from the DNA data structures.

Next week will add some display features, so the particle data can be visualized in the viewport.

I'd like to try merging the particle and point cache branches to test the integration. Particles would be the first system writing variable-length data sets into the cache, which could expose potential issues in the API.

Week 16 (16th December)

Worked on the new particle system DNA data structure and RNA/python API.

Particle State

State is a new concept in particles that should allow a clean separation between the integration code ("ParticleSystem") which places particles in the Object struct (currently still as a modifier) and the actual data for particles in a frame.

Particle System has one state that is regarded as the state for the current frame and should be immutable (not directly modified). To manipulate particle data every simulation or tool as well as the cache must create a new state, then replace the current psys state with that.
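The replace-instead-of-modify rule can be sketched in plain Python. The classes below are hypothetical stand-ins, not the DNA structs or the API of the branch. The only point is the workflow: copy the current state, change the copy, then hand it back to the particle system as the new current state.

```python
import copy


class ParticleState:
    """Hypothetical particle state: one list per attribute layer."""

    def __init__(self, layers=None):
        if layers is None:
            layers = {"pid": [], "position": []}
        self.layers = layers

    def copy(self):
        return ParticleState(copy.deepcopy(self.layers))


class ParticleSystem:
    """Hypothetical particle system holding exactly one current state."""

    def __init__(self):
        self._state = ParticleState()

    @property
    def state(self):
        # By convention the current state is read-only for callers.
        return self._state

    def replace_state(self, new_state):
        self._state = new_state


def advance(psys, offset):
    """Example 'simulation': move every particle along X by `offset`."""
    new_state = psys.state.copy()
    new_state.layers["position"] = [
        (x + offset, y, z) for x, y, z in new_state.layers["position"]
    ]
    psys.replace_state(new_state)


psys = ParticleSystem()
initial = psys.state.copy()
initial.layers["pid"].append(1)
initial.layers["position"].append((0.0, 0.0, 0.0))
psys.replace_state(initial)

before = psys.state
advance(psys, 1.0)
```

After `advance`, `before` still holds the old positions, so anything that was reading the previous frame's data is not affected by the update.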

bparticles Python Module

The RNA API proved unsuitable for creating these runtime state instances. Main problem is that the RNA has no reliable means of allocating and freeing struct data, other than in special cases like collection iterators. This prevents us from creating temporary state instances and freeing them reliably once finished.

For accessing particle data it would also be preferable to implement particles as "virtual" wrapper types like iterators. The structure of the particle state data is such that each attribute layer is stored separately and non-interleaved (akin to the CustomData system in mesh data). There is no single data element that could be regarded as "a particle". To access different attributes of the same particle, a "particle iterator" is created (which is essentially just a plain buffer index) which can be used to efficiently access every attribute buffer.

For these reasons it became necessary to add a separate, non-RNA python module that implements a consistent and intuitive API for particles. This is very similar to the bmesh approach where a bmesh instance is first created either empty or by copying mesh data, then modified with various operators and finally written back to the mesh.

Creating and Identifying Particles

Using a fixed-size buffer with a simple index, as in the current particle system, is not feasible if particles are ever to support large simulations. Instead of identifying particles with a running index, each particle has an integer identifier number ("pid" to distinguish from ID data blocks). This can be chosen freely in the code, it doesn't have to be contiguous or in any specific order. This gives a lot of flexibility for generating particles reliably, especially in multi-threading situations where calls to a central "id generator" would be random and create non-reproducible results.

The downside is that particle attribute data has to be sorted in some way to ensure fast lookups by pid. The easiest implementation would be binary search (ordering particles by their pid), but this could be combined with a hash table for almost-O(1) results. Atm it uses plain linear search.

Creating particles can be made very intuitive by automatically extending the state when writing to particles with non-existing pid's. Alternatively particles can be added explicitly, but since they then have only default attributes (apart from the pid) it's more convenient to use the create-on-write feature.
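The lookup and create-on-write behavior described above can be sketched as follows. This is an illustration in plain Python, not the bparticles API. The class and method names are hypothetical, and it shows the binary-search variant (attribute layers kept sorted by pid) rather than the linear search currently used.

```python
from bisect import bisect_left


class SortedParticleState:
    """Hypothetical state with separate attribute layers sorted by pid."""

    def __init__(self, attributes):
        # attributes: mapping of attribute name -> default value
        self.defaults = dict(attributes)
        self.pids = []  # mandatory identifier layer, kept sorted
        self.layers = {name: [] for name in self.defaults}

    def find(self, pid):
        """Return the buffer index of `pid`, or -1 if it does not exist."""
        i = bisect_left(self.pids, pid)
        if i < len(self.pids) and self.pids[i] == pid:
            return i
        return -1

    def set(self, pid, name, value):
        """Write an attribute; the particle is created if `pid` is unknown."""
        i = bisect_left(self.pids, pid)
        if i == len(self.pids) or self.pids[i] != pid:
            # Create-on-write: insert the pid and default values at the
            # same index in every layer, so all layers stay aligned.
            self.pids.insert(i, pid)
            for layer_name, layer in self.layers.items():
                layer.insert(i, self.defaults[layer_name])
        self.layers[name][i] = value

    def get(self, pid, name):
        i = self.find(pid)
        if i < 0:
            raise KeyError(pid)
        return self.layers[name][i]


state = SortedParticleState({"position": (0.0, 0.0, 0.0), "mass": 1.0})
state.set(42, "mass", 2.0)                 # creates particle 42
state.set(7, "position", (1.0, 2.0, 3.0))  # creates particle 7
```

Inserting into the middle of a list is O(n) per particle, so a real implementation would more likely append in bulk and sort once, or combine the sorted layers with a hash table as mentioned above.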

Here is a simple python scripting test to show how particle states are generated. Eventually a simulation, node system or cache reader would do the same thing (just using a more optimized API implementation):

And a video of a simple frame handler animation, demonstrating the basic principle of creating a new state to replace the current frame data:

Week 17 (23rd December)

Particle Physics Did some experimental work simulating particles with rigid bodies in the Bullet engine. The particle system integration will need some work to define how particles can be injected and sync'ed with the RigidBodyWorld, without making hard connections in the DNA (which would eventually lead to spaghetti code again). To keep the data storage system separate from the simulation it would probably be best to define "particle rigid bodies" as a feature of the rigid body simulation, rather than the particle system. These two parts are then connected on a higher level (node system). The particle system does not know about rigid body sim (or any other simulation), while the rigid body sim also has no pointer to a specific particle system. That connection is defined by context, on frame change particles get passed to the simulation, which generates a new state, which is then stored back in the psys.

Contacted Sergej Reich to discuss the planned "Simulation Framework" basics. Main concern is the exact point at which the simulation step occurs. Atm the rigid body simulation step happens *after* the animation system update (but before transform/modifiers/etc.). IMHO this is not quite correct: the simulation solver should base the result on the current state only, otherwise the input for the simulation is a mixed state of current frame (transform/mesh) and new frame (animation) data.

I'll try to come up with some explanatory diagrams and then post a code.blender.org article on these design issues, so we can continue the discussion on a broader public stage.

PyNodes While deprived of a functional development environment, went back to some python scripting work: Simplifying the "pynodes framework" system. This could be used for prototyping particle nodes quickly, before eventually moving critical parts into C/C++ code. The existing modifier nodes addon already has the basic ingredients for a language-based node system. The only showstopper atm is the complexity of the pynodes framework python code, which needs to be radically simplified to make it a viable option for future node systems (socket properties and node groups in particular).

Week 18 (30th December)

Dupli Objects

I've added basic dupli object support for particles. This is currently implemented as part of a general "ParticleDisplay" stack, which contains a list of typed display features connected to a particle system. The general idea is that we can add multiple visualizations to particles, such as

- Basic symbols for particles (dots, circles, orientation arrows, etc.)

- Dupli objects

- Debug helpers for individual attributes, such as velocity vectors, identifier numbers, colors

This concept will probably need to be refined a bit more. I tried to keep it very simple for now so it can be modified later on.

Dupli object display uses a list of object references. These are identified by index in the particle data, so each particle can specify one of the dupli objects with a simple int attribute. Unlike current particles, which include randomization weights in the dupli object list, the new particles leave this mapping entirely up to the simulation.

Next Week As discussed on IRC we should improve several aspects of our dupli system.

- Constructing long doubly-linked lists (ListBase) of DupliObject is time consuming and leads to memory fragmentation and cache misses. We can avoid these issues by returning DupliObjects in fixed-size batches instead of such lists.

- Code-wise it would help to define a DupliGenerator type, with callbacks for creating duplis from the scene. This gives a much more concise specification of what a dupli generator should actually do and should avoid the bloated messy anim.c code we have right now.

- Customizable dupli instances could be a very powerful feature. Each DupliObject would have optional custom data associated to it, which can then be used by shaders or simulations. For example a custom color can be assigned to each dupli, then used by the common material (such things can currently only be done semi-random based on the assigned ID number or dupli transform).

- scorpion81 suggested a different approach to fracturing and "shard" meshes which i think is a very elegant solution: Instead of using a modifier that assigns one shard to each particle, the mesh could be instanced with duplis where each dupli instance only displays one of the shard partitions. This moves the responsibility for displaying and simulating shards out of the core fracturing system (separation of concerns). This would be based on the custom dupli data proposal above: each shard is identified by an index associated to the dupli instance of the mesh.
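A few of these points can be illustrated together in a short Python sketch. All names here are hypothetical and only loosely modeled on the ideas above. A dupli generator is a plain callable that yields instances, each instance can carry optional custom data (here a shard index), and consumers receive the instances in fixed-size batches instead of one long linked list.

```python
from typing import Iterable, Iterator, List, NamedTuple, Optional, Tuple


class Dupli(NamedTuple):
    """Hypothetical dupli instance with optional per-instance custom data."""
    object_name: str
    location: Tuple[float, float, float]
    custom: Optional[dict] = None


def vertex_duplis(child_name: str,
                  vertices: Iterable[Tuple[float, float, float]]) -> Iterator[Dupli]:
    """Example generator callback: one instance of `child_name` per vertex."""
    for index, co in enumerate(vertices):
        # The custom data could later be read by shaders or simulations,
        # e.g. to pick one shard of a fractured mesh per instance.
        yield Dupli(child_name, co, {"shard_index": index})


def dupli_batches(source: Iterable[Dupli], batch_size: int) -> Iterator[List[Dupli]]:
    """Group instances from any generator into fixed-size batches."""
    batch: List[Dupli] = []
    for dupli in source:
        batch.append(dupli)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly smaller batch


verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
batches = list(dupli_batches(vertex_duplis("Cube", verts), 2))
```

Since every generator has the same shape (a callable producing instances), adding a new dupli type does not require touching the batching or drawing code.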

Bullet Integration

I have extended the Bullet blenkernel API to support particles.

A number of changes are necessary to the way our RigidBodyWorld manages bodies, shapes and constraints. Currently the ownership of rigid body references is on the Object DNA side. This works ok for persistent ID blocks (Object), but it causes problems when using rigid bodies for more dynamic particle data which is not suitable for managing the allocated memory. It is also not very efficient to allocate large numbers of btRigidBody instances one-by-one with the standard "new" allocator, so i've implemented a MemPool-based system. This moves the responsibility for allocating and freeing rigid bodies into the Bullet API itself, which is nicer design-wise (again, separation of concerns).

The integration of Bullet sim in particles is very rudimentary atm, it just generates RBs for all particles if a dupli collision shape exists. Designing a good high-level glue layer for connecting particle data with simulation systems is required. This layer should eventually be implemented as a node system to make it controllable by users in a transparent way.

Here is a simple demo video showing a combination of python scripting with the Bullet simulation. The script just does the initial setup for new particles prior to the Bullet sim step (frame_pre_handler), after that any update happens purely through Bullet sync. It shows nicely how the state concept works: both the py script and the Bullet sync method construct a particle state (from a current state copy) and then modify it, before finally declaring it as the new current state.

2014

Week 19 (6th January)

Info A new patch is up for review for improving the dupli code here. This is purely a cleanup/structural improvement patch for the code without functional changes, to make future improvements easier without breaking behavior. It should help prevent matrix hacks and hard-to-track side effects of the dupli generators, which are frequently causing difficult bugs. Duplis can very easily be stored in more efficient containers with these changes, e.g. mempools or plain arrays, which helps reduce memory fragmentation, cache misses and overhead from frequent alloc.

Also investigated a potential improvement regarding 3D viewport drawing of duplis. OpenGL has a number of optimized instance drawing features that we are currently not using, which could significantly increase performance when drawing large numbers of duplis (e.g. from verts, faces, particles). Even without these OpenGL features the dupli display could be optimized a lot by drawing objects in order, rather than switching between instances based on the generating object as happens now. More details in the bf-committers mail. This may require deeper changes, so I'd like to postpone this a bit (too many loose ends in point cache and particles still).
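A minimal sketch of the "draw in order" idea, assuming duplis arrive as a flat list of (object name, matrix) pairs. The function names and the string placeholders for matrices are hypothetical; real drawing code would bind the object's geometry once per batch and then draw all of its instances.

```python
from collections import defaultdict


def group_by_object(duplis):
    """Collect instance matrices per object so each object is set up only once."""
    batches = defaultdict(list)
    for object_name, matrix in duplis:
        batches[object_name].append(matrix)
    return dict(batches)


def count_switches(names):
    """Count how often the drawn object changes along a sequence of names."""
    switches = 0
    previous = None
    for name in names:
        if name != previous:
            switches += 1
        previous = name
    return switches


# Interleaved instances, as a generator might emit them.
duplis = [("rock", "M0"), ("tree", "M1"), ("rock", "M2"), ("tree", "M3"), ("rock", "M4")]

unsorted_switches = count_switches(name for name, _ in duplis)
batches = group_by_object(duplis)
batched_switches = len(batches)  # one setup per distinct object
```

Each batch is also the natural unit to hand to an instanced draw call, should the OpenGL instancing features be used later.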

Next Week I'd like to return to the point cache branch to implement on-the-fly caching. This will need investigation on the Alembic side to figure out how archives can be written continuously and the results read by instances, without rewriting the whole cache file every frame.

- Fixes

rBebf23b51448e4 crash from shader node add/replace tree view template.

rBe23bcbbb6d787 Fix for crash in anim render

rBcc35ad2b3d1b9 Fix for random crash in localized node group freeing while tweaking group default values

rB10b5ad5bae9e6 socket interface 'type' enums are not initialized

Week 20 (13th January)

- Info

Not much actual code to show for this week. I had to fix a number of bugs regarding transform matrices in the dupli system patch (D189). The dupli system now at least no longer requires modifying the DNA data, which makes the code much more transparent and would also allow multithreading if that becomes necessary (currently dupli generation is not the worst bottleneck and the numbers are relatively small).

The point cache on-the-fly writing turned out to be a bit more complicated than expected. It looks as if writing out an Alembic archive that can then be used immediately as a data source for another object is very inefficient. I need to talk to an experienced Alembic developer or ask on their mailing list about this issue. It could also work by only ever using the latest state for instances; maybe this can be clarified at the kickoff meeting.

I spent much of the week discussing the fracture system with Martin Felke (scorpion81). He has made a proposal page, currently just a list of ideas: [3]

In essence, we want to keep the "fracture mesh" (FracMesh) system separate from all particle simulation and rigid bodies. It's a fairly straightforward data container for shard-based meshes, where more shards (partitions/mesh islands) can be added efficiently and algorithms can store their results. All FracMesh data is handled in local object space.

Storage of this FracMesh can be in a modifier for now. This modifier can directly display the FracMesh as well, as a sort of debugging feature. A particle system then is responsible for defining transforms for individual shards. Display uses an extended dupli system, creating instances of the FracMesh with only one shard visible on each instance.

Particles can also generate rigid bodies using collision shapes from shards. Eventually this can work as a feedback mechanism for dynamic fracturing, using Bullet collision contact points in the FracMesh local space as input to the algorithm.
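The separation described above can be sketched roughly as follows. This is only an illustration under simplifying assumptions: `Shard`, `FracMesh.add_shard`, `FracMesh.union` and `instance_shards` are hypothetical names, shards are reduced to vertex lists, and particle transforms are reduced to plain translations.

```python
from dataclasses import dataclass, field


@dataclass
class Shard:
    verts: list  # vertex positions in the object's local space


@dataclass
class FracMesh:
    """Hypothetical shard container: knows nothing about particles or rigid bodies."""
    shards: list = field(default_factory=list)

    def add_shard(self, verts):
        self.shards.append(Shard(list(verts)))
        return len(self.shards) - 1  # shard index used as a handle

    def union(self):
        """Modifier-style debug display: all shards merged, untransformed."""
        return [v for shard in self.shards for v in shard.verts]


def instance_shards(fracmesh, offsets):
    """Dupli-style display: one instance per shard, placed by a particle-owned offset."""
    instances = []
    for index, (dx, dy, dz) in offsets.items():
        shard = fracmesh.shards[index]
        instances.append([(x + dx, y + dy, z + dz) for x, y, z in shard.verts])
    return instances


fm = FracMesh()
a = fm.add_shard([(0, 0, 0), (1, 0, 0)])
b = fm.add_shard([(0, 1, 0)])

# The particle side owns the transforms; the FracMesh data stays in local space.
moved = instance_shards(fm, {b: (0, 0, 2)})
```

Keeping transforms out of the container means the same shard data can serve the debug display, the dupli instances and collision shape generation.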

- Next Week

Since the fracture system seems to be making progress, I'd like to work further on the particle system to get it ready for that purpose and make the two work in tandem.

High-level structure needs to be sorted out. I've come up with a structural plan, roughly going from the more abstract to concrete data:

- Nodes / Tools / Scripts

- High-level "source code" for a particle system. Particle systems are not necessarily limited to objects, they can also be used in tools, e.g. for sculpting.

- Attributes / Solvers / Modifiers

- Description of the particle data and integration according to the "source" level. Different attributes may be needed depending on nodes. A solver is a function that defines particle states iteratively during simulation, while a modifier further gets applied on top of the result (like mesh modifiers).

- State / Cache

- Actual low-level data storage
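The distinction between solver and modifier in the middle layer can be illustrated with a small sketch. The function names, the dictionary-based state and the explicit Euler integration are assumptions made for the example, not the planned implementation.

```python
def euler_solver(state, dt):
    """Solver: defines the next particle state from the previous one."""
    return {
        "position": [p + v * dt for p, v in zip(state["position"], state["velocity"])],
        "velocity": list(state["velocity"]),
    }


def offset_modifier(state, offset):
    """Modifier: applied on top of the result and never fed back into the solver."""
    result = dict(state)
    result["position"] = [p + offset for p in state["position"]]
    return result


# State / Cache level: plain attribute arrays, one cached state per step.
state = {"position": [0.0, 1.0], "velocity": [1.0, 2.0]}
cache = []
for _ in range(3):
    state = euler_solver(state, dt=0.5)
    cache.append(state)

# What gets displayed is the modified result; the simulated state is untouched.
displayed = offset_modifier(state, offset=10.0)
```

As with mesh modifiers, changing a modifier only requires re-evaluating from the cached state, not re-running the simulation.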

Week 21 (20th January)

Info Committed the dupli generator patch (D189) and a subsequent patch to avoid hidden matrix hacks (D254). These changes will come in handy later for the fracture/particle system (see below).

I've been working with Martin Felke (scorpion81) on his fracture code (github repository). The mesh storage format used there was based on a persistent BMesh instance, which is not suitable for storing in blend files and causes a lot of overhead for copying geometry data. We replaced this with regular Mesh types (MVert etc.). The FracMesh structure gets stored in a modifier, at least for the time being. The modifier result is simply a union of the fractured mesh data as a kind of debug display.

The particle system will be used to integrate fractured mesh partitions ("shards") into the scene as visible entities and as rigid bodies in the Bullet simulation. Instead of storing transforms for shards directly inside the mesh structure, the particles will handle such transforms and communicate with the Bullet API. The fracture system can thus be kept simple: it works purely in Object space as a specialized mesh data format.

Besides this I've been working on bug fixing, particularly on the Lens Distortion node. This took quite a bit of time, but the resulting cleanup makes the node much more understandable. There is still an issue with filtering noisy images; EWA sampling could potentially help with this (T37462).

Next Week I want to prepare some design proposals to be discussed at our meeting next weekend. This should give an overview of the ideas which crystallized recently for physics simulations in general, caching and nodes/scriptability. Caching in particular should be discussed in relation to the big number one topic: asset management. Besides these general design considerations I want to give more details on particle system goals and already implemented features. If time permits I'd like to push forward with the fracture particle integration as a proof of concept.

Fixes

T38221 node fcurves in compositor get deleted when muting a node.

T38291 depgraph tagging was wrong for OBJECT_OT_constraint_add_with_targets

T38128 snapping to node border uses node centers

T38011 Lens Distortion node area of interest calculated incorrectly

c24a23f Unreported particle emission bug: Fast moving parents lead to choppy emission of particles

Week 24 (10th February)

- Info

Compositor code cleanup project: This is an extensive overhaul of the class structure used to describe and construct the nodes and operations graphs in the compositor. The goal is to simplify the methods for converting Blender DNA nodes first into compositor representations and then operations (the actual compositor functionality).

The code for this is currently terribly convoluted and unnecessarily dispersed. It requires far too much effort to understand how operations are connected even for single nodes due to the way control flow passes through different classes.

The first stage of this cleanup adds a new NodeCompiler class which handles all the conversion details from nodes to operations instead of having this logic inside the graph structure (ExecutionSystem). The compiler will prepare the operation graph and perform any optimizations and grouping, then initialize the ExecutionSystem which will not have to modify the graph itself afterward.
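The division of labor between compiler and execution system might look roughly like this. It is a toy sketch rather than the actual C++ classes: only the names NodeCompiler and ExecutionSystem come from the text, while the methods, the node dictionaries and the two converter functions are hypothetical, and the operation graph is reduced to a linear chain.

```python
class NodeCompiler:
    """Holds all node-to-operation conversion logic."""

    def __init__(self):
        self.converters = {}

    def register(self, node_type, converter):
        self.converters[node_type] = converter

    def compile(self, nodes):
        operations = []
        for node in nodes:
            # A single node may expand into several operations.
            operations.extend(self.converters[node["type"]](node))
        return operations


class ExecutionSystem:
    """Only executes; it receives a finished operation list and never edits it."""

    def __init__(self, operations):
        self.operations = tuple(operations)

    def run(self, value):
        for operation in self.operations:
            value = operation(value)
        return value


def convert_scale(node):
    factor = node["factor"]
    return [lambda v: v * factor]


def convert_offset_clamp(node):
    offset, upper = node["offset"], node["max"]
    return [lambda v: v + offset, lambda v: min(v, upper)]


compiler = NodeCompiler()
compiler.register("scale", convert_scale)
compiler.register("offset_clamp", convert_offset_clamp)

nodes = [{"type": "scale", "factor": 2}, {"type": "offset_clamp", "offset": 3, "max": 10}]
system = ExecutionSystem(compiler.compile(nodes))
```

Two nodes become three operations here, and any optimization or grouping pass would sit inside `compile` before the execution system is created.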

Further plans for the compositor (after this large scale cleanup is merged) will include

- Stricter hierarchy for ExecutionGroups (makes several pieces of code redundant)

- Avoiding recursive function calls (shallower callstacks, easier debugging, better performance)

- Clarification of resolution determining (also easier to follow without recursion)

- New sampling system implementation

- Including support for cubic B-spline sampling with prefilter by Troy Sobotka

- Implementation of explicit pixel transforms and canvas compositing features
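The point about avoiding recursive calls can be sketched as follows, assuming the operation graph is acyclic. The graph layout (a dictionary of named operations with `inputs` and `func` entries) and the function names are made up for the example; the idea is to flatten the graph once with an explicit stack and then evaluate it in a plain loop.

```python
def flatten(graph, outputs):
    """Return operation names in dependency order, using an explicit stack
    (iterative post-order traversal) instead of recursive calls."""
    order = []
    visited = set()
    stack = [(name, False) for name in outputs]
    while stack:
        name, expanded = stack.pop()
        if expanded:
            order.append(name)  # all inputs of this operation are already ordered
            continue
        if name in visited:
            continue
        visited.add(name)
        stack.append((name, True))
        for dep in graph[name]["inputs"]:
            if dep not in visited:
                stack.append((dep, False))
    return order


def evaluate(graph, outputs):
    """Flat loop over the ordered operations; results are kept in a value table."""
    values = {}
    for name in flatten(graph, outputs):
        node = graph[name]
        args = [values[dep] for dep in node["inputs"]]
        values[name] = node["func"](*args)
    return [values[name] for name in outputs]


graph = {
    "image": {"inputs": [], "func": lambda: 2.0},
    "blur": {"inputs": ["image"], "func": lambda x: x * 0.5},
    "mix": {"inputs": ["image", "blur"], "func": lambda a, b: a + b},
}
order = flatten(graph, ["mix"])
result = evaluate(graph, ["mix"])
```

The call stack stays shallow no matter how long the operation chain is, and the ordered list doubles as a readable trace when debugging.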

- Next Week

Wrapping up the compositor cleanup is high priority. Detailed review of the code is difficult due to lots of renaming, moved code and many touched files, so the plan is to do testing as much as possible and then merge into master early in the next release cycle. Deeper changes to the execution system will be held back for later to prevent unexpected complications.

As time permits I then want to get back to the particle system and implement a UI-level component system to make it testable for a wider audience (apart from scripting).

- Fixes

T38529 Blur node size 0 doesn't work.

30c9fe19a31f6f9 Fix for crash caused by effectors doing precalculation during DAG updates.

Week 25 (17th February)

- Info

This week has been almost entirely bugfixing.

- Next Week

Bugfixing again has highest priority until release.

Would like to discuss D309 with J. Bakker/M. Dewanchand and figure out a way forward for the compositor.

- Fixes

- T38643 Frame labels are invisible with default theme.

- T38487 Wrapped translate node in combination with other buffered nodes (Blur) causes crash due to chained read/write buffer operations.

- T38650 Crash from enum item functions returning NULL instead of a single terminator item.

- T38651 Compositor Normal Node Sphere unchangeable when Reset All To Default Values is used on it.

- T38680 File output subpath file-select operator uses absolute path

- T38506 Bokeh blur node - size bugs with OpenCL

- T38488 Single pixel line artifact with Rotate and Wrapped Translate nodes

- T38603 Output File node sockets were drawing the regular socket label in addition to the actual specialized socket ui

- T38720 Clear preview range operator missing notifier to redraw timeline

- T38717 Copy Vertex Group To Selected fails when all Vertex Groups are empty

- T37719 NodeTrees lose users on undo

- Unreported

- 4789793f0903fe0 Fix for bad imbuf creation by compositor viewers if resolution is (0,0)

- Cleanup

- 907be3632c60646 Replace the int argument for user count mode when restoring pointers on undo with a nicer enum

- Closed

- T38653 Shapekey data path obtained from right-click -> copy data path seems incomplete

Week 26 (24th February)

- Info

Mostly bugfixing and tracker maintenance.

- Fixes

- T38794 ScaleFixedSizeOperation was not taking offset into account when calculating depending-area-of-interest

- T38798 Can get stuck in world nodes when switching from Cycles to BI

- T38801 Dupli objects with modifiers exhibit bad transform artifacts in Blender Internal renderer

- T38773 Inconsistent conversion of colors and float values in Blender Internal shader nodes

- T38811 Cycles particle ids are inconsistent when using multiple particle systems

- T38846 Render layer checkbox is not refreshed

- T37334 Better "internal links" function for muting and node disconnect

- Fixes (unreported)

- ffa94cb7133bf0d Single layer renders were broken

- Others

- d59f53f7b7dac5e Support for generic OSL shader parameters in the Cycles standalone XML reader

Week 27 (3rd March)

- Info

Some bugfixes and tracker maintenance.

Investigating depgraph integration for physics sims: To make interaction of simulations and layering possible we need to organize sims as part of the depgraph rather than including them inside individual modifiers.

- Updates for simulated objects require a pre-animation sync step before any of the other scene data is modified at the start of the frame update. Simulation solvers need to store relevant dynamics info (forces, dynamic colliders) in advance as part of their internal state for a proper forward integration step.

- Interacting simulated objects need to be treated as a single node with sub-nodes of some kind in the depgraph. Their dependencies on each other can not be represented properly in the depgraph model at this point.
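The ordering requirement in the first point can be illustrated with a toy example. Everything here is hypothetical (the `Solver` class, `pre_animation_sync`, the dictionary-based scene and the force curve); it only shows why the solver has to capture its inputs before the animation system changes them.

```python
class Solver:
    """Toy 1D solver that integrates with the force captured at the start of the frame."""

    def __init__(self, position, velocity):
        self.position = position
        self.velocity = velocity
        self.stored_force = 0.0

    def pre_animation_sync(self, scene):
        # Snapshot the dynamics info before any scene data is modified.
        self.stored_force = scene["force"]

    def step(self, dt):
        # Forward integration uses the stored force, not the updated scene value.
        self.velocity += self.stored_force * dt
        self.position += self.velocity * dt


def update_frame(scene, solver, frame, dt):
    solver.pre_animation_sync(scene)               # 1. sync before anything changes
    scene["force"] = scene["force_curve"](frame)   # 2. animation update
    solver.step(dt)                                # 3. integrate from the old state


scene = {"force": 2.0, "force_curve": lambda frame: 100.0}
solver = Solver(position=0.0, velocity=0.0)
update_frame(scene, solver, frame=1, dt=1.0)
```

Without the sync step the solver would integrate with the force of the new frame (100.0) instead of the one valid at the start of the step (2.0).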

Joshua Leung's GSOC 2013 project could provide a viable basis for further work on physics sims, but needs updating to current master and completion.

- Fixes

- T38942 Blender Internal rendering can lead to wrong obmats of nested dupli objects

- T38969 RenderResult in RenderPart can be NULL if multithreaded renders are cancelled early

- Fixes (unreported)

- c169413a0f28a96 Fix for potential memory leak in Bullet API: freeing dynamic arrays should use the delete[] operator instead of the plain pointer delete

- 3aedb3aed7c8c2a Fix for invalid custom data checks in armature and lattice functions

- 08444518e62b4c7 Removing ParticleSystem->frand arrays to avoid memory corruption issues in threaded depgraph updates and effector list construction

- ef51b690090967f Fix for displace node regression: Was adding a (0.5, 0.5) offset, even for zero displacement