「利用者:Nazg-gul/GSoC-2013/ThreadedObjectUpdate」の版間の差分

(→Thread balance visualization) |

細 (1版 をインポートしました) |

(相違点なし)

| |

2018年6月29日 (金) 05:57時点における最新版

目次

Threaded Dependency Graph: Threaded Objects Update

Threaded object update means that it's possible to handle objects update from the same dependency graph in multiple threads. From user perspective it means much higher interactivity when changing the current frame, doing a playback or altering objects from the scene in other ways.

Technically speaking, short-term solution is to call object_handle_update form multiple threads (for sure taking dependencies into account). Final design will be much more granular, but this might only happen after merge with Joshua's new dependency graph.

Static vs. Dynamic scheduling

Generally speaking, there're two ways of scheduling tasks: static and dynamic.

Static scheduling splits the jobs into tasks and schedules them to threads before threads start.

In context of threaded dependency graph this would mean we first split tree into branches and send the whole branch to the thread.

This is simplest implementation, but it doesn't work in practice because no one could predict how much time branch will take to update. It'll likely will lead to situation when one thread crunching heavy branch and other threads will be idling.

Dynamic scheduling means all the tasks which are currently ready for update are sent to worker threads, and threads are allowed to spawn new tasks.

In context of threaded dependency graph it means task is denoted by dependency graph node. Once all the dependencies are met for the node, task for this node is created and sent to the queue.

This gives much better CPU utilization and even allows to update objects which depends on the same rig in multiple threads.

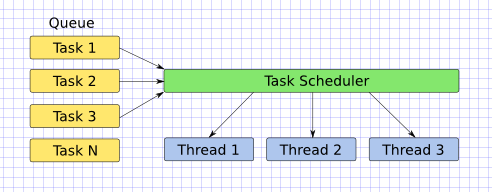

Task scheduler

Task scheduler is a central thing for the threaded object update.

At this point the task scheduler ported from Cycles' one is used. It's a simple implementation which doesn't try to schedule task in CPU-cache friendly manner, but so far it shows pretty nice results.

In the future we might consider switching to TBB scheduler.

Task scheduler does have a queue where the tasks are stored. When there's a thread which isn't busy, it gets task from the queue and starts crunching the numbers. This also means no one shall add task to the queue unless all it's dependencies are met.

Scheduling

As was mentioned above, having a dynamic scheduling is the way to go for this project. Here it's described how scheduling is implemented internally.

Rough algorithm of how tasks are handling is:

- Add root DAG node to the task queue (root node does not depend on any other obviously).

- Task run() function calls an update function which corresponds to the node type (as for short term for until granular update is supported it's just object_handle_update).

- Then it checks for whether all the dependencies are met for node's children and schedules child nodes for which all dependencies are fully met.

- Algorithm runs for until there're tasks in the queue.

Now, let's see how to check whether dependency graph node is ready for update.

Taking into account the fact, that current dependency graph doesn't store node's parents ad traversing parents is rather slow, here's simple optimization for this process:

- Let's call number of node parents a valency.

- Before running the threads we count number of parents for every node and store this number in the node.

- When node is handled, we decrease valency of all the children by 1.

- If it happen so some child has got valency of 0, it means all the dependencies are met and node could be scheduled.

Code snippet

Here's a small simplified code snipped which demonstrates API calls to task scheduler and dependency graph to perform threaded objects update.

static void scene_update_object_func() {

if (object) {

BKE_object_handle_update_ex(evaluation_context, scene_parent, object, scene->rigidbody_world);

}

/* Update will decrease child's valency and schedule child with zero valency. */

DAG_threaded_update_handle_node_updated(node,scene_update_object_add_task, pool);

}

static void scene_update_object_add_task() {

BLI_task_pool_push(task_pool, scene_update_object_func, node, false, TASK_PRIORITY_LOW);

}

static void scene_update_objects() {

TaskScheduler *task_scheduler = BLI_task_scheduler_get();

TaskPool *task_pool;

ThreadedObjectUpdateState state;

/* Fill in the state */

task_pool = BLI_task_pool_create(task_scheduler, &state);

DAG_threaded_update_begin(scene, scene_update_object_add_task, task_pool);

BLI_task_pool_work_and_wait(task_pool);

BLI_task_pool_free(task_pool);

}

Thread balance visualization

There's a debug if-defed code in the scene.c now which allows to visualize load of the CPU threads while doing threaded update. This helps seeing time periods when some threads were doing nothing (due to not met dependencies yet, for example).

To do such an analysis, first step is to define DETAILED_ANALYSIS_OUTPUT in scene.c. This would enable the whole bunch of debug prints which them night be parsed.

Wouldn't go into details of the print format, but here's the script which parses the output.

This also helps visualizing how much time was spend on actual objects update and how much time was spent for viewport display.

There's an example output of the visualizer at the right. It was done with some scene from the Tube open movie project. The very left "island" of bars represents scene evaluation after file was just opened. After this playback was started. This is represented by repeated "islands" after the larger gap.

This larger gap is caused by human latency hitting AltA after the file was open. Gaps between "islands" represents viewport draws (in the example viewport was set to bounding box for maximal CPU utilization by scene update functions).

Still to come

Currently there's no granular updates happening at all. In the future task's run() function will simply run dependency node's callback function (instead of calling object_handle_update).

Also, some modifiers (like subsurf, multires, also some BMesh operations) are using OpenMP. This leads to quite enough of thread overhead and better not be done.

We might use the same task system in that areas, so they don't lead to thread overhead and still nicely threaded if called outside of threaded object update.