User:Matthias.fauconneau/GSoC2011

Contents

- 1 Google Summer of Code: API and Tracker Improvements for libmv

- 1.1 Project

- 1.2 Summary

- 1.3 Weekly progress

- 1.3.1 Week 1: May 23 - May 27

- 1.3.2 Week 2: May 27 - June 3

- 1.3.3 Week 3: June 3 - June 10

- 1.3.4 Week 4: June 10 - June 17

- 1.3.5 Week 5: June 17 - June 24

- 1.3.6 Week 6: June 24 - July 1

- 1.3.7 Week 7: July 1 - July 8

- 1.3.8 Week 8: July 8 - July 15

- 1.3.9 Week 9: July 15 - July 22

- 1.3.10 Week 10: July 22 - July 29

- 1.3.11 Week 11: July 29 - August 5

- 1.3.12 Week 12: August 5 - August 12

- 1.3.13 Week 13: August 12 - August 20

- 1.3.14 Later

- 1.4 Documentation

- 1.5 Links

- 1.6 Screenshots

Google Summer of Code: API and Tracker Improvements for libmv

Project

Improve and document libmv API so that Blender can easily leverage all features provided by libmv.

Improve and test libmv tracking and reconstruction implementation for fast and robust match-moving.

Develop a test platform to guide the development of libmv API, allow easy testing of its implementation, and provide a documented example of the usage of libmv API.

Develop a calibration tool to compute lens calibration profile from calibration footage with an artist friendly interface.

Summary

- Week 1-3: Debug and improve API, Create Qt Tracker Test Platform UI.

- Week 4: 3D reconstruction UI support.

- Week 5: 3D reconstruction API and documentation.

- Week 6: Vacation. Keir Mierle finished 3D reconstruction algorithms.

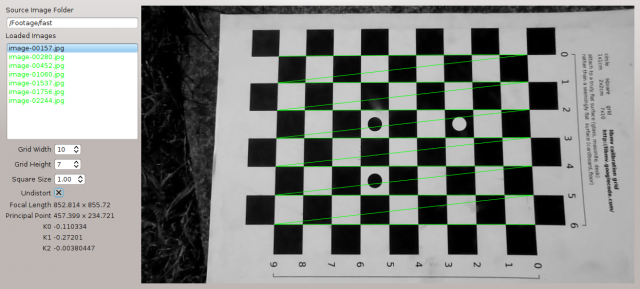

- Week 7: Qt OpenCV Checkerboard Calibration Tool.

- Week 8: Improve Tracker and Calibration UIs, Help Blender integration, Add feature detection.

- Week 9: Improve UIs and detection, Optimize KLT tracker.

- Week 10: Lens distortion API, Image distortion, Unit testing.

- Week 11: Implement Cubic B-Spline Interpolation, Research on tracking methods.

- Week 12: Implement SAD Tracker, Synchronize with Tomato, Algebraic Lens Distortion Estimation.

- Week 13: Finish distortion estimation API and UI, SAD detector, Implement Affine Tracking, Add Laplace filter.

Weekly progress

Week 1: May 23 - May 27

- Tested the libmv command-line tools (undistort, tracker and reconstruct_video).

- Fixed a bug in reconstruct_video.

- Learned the work-flow for pull requests to libmv's github repository.

- Generated the doxygen documentation.

Week 2: May 27 - June 3

- Spent time understanding the libmv codebase.

- Long discussion on libmv-devel about our objectives.

- Initial implementation of a test Qt tracker GUI (load images, play the sequence, seek, step frame by frame).

- Drafted a high level interface allowing applications to use libmv with minimal knowledge of its internals.

Week 3: June 3 - June 10

- Optimize convolutions.

- Work on our Qt test application:

- Implement track selections and zoom view (to assert KLT accuracy).

- Make selections behave correctly (introduce TrackItem, which stays selected on frame changes).

- Add a dock widget which will hold details on the currently selected marker.

- Add a View to the detail dock which stays zoomed on the current selection.

- Support track editing (i.e. moving markers).

- Display pattern search window.

- Split the application in two files.

- Support backward tracking.

- Add button to toggle automatic tracking of selected markers.

- Support marker/track deletion.

- Work on our public simple tracking API:

- Improve interface and implementation

- Add Doxygen documentation to public Tracks and Region Tracker API.

Week 4: June 10 - June 17

- Change Reconstruction API to match client needs.

- Add GL abstraction.

- Add 3D View.

- Display bundles.

- Display cameras.

- Implement bundle selection.

- Implement camera selection.

- Implement Object support:

- Add objects.

- Display objects.

- Select objects.

- Link active object to selected bundles.

- Make bundles, cameras and objects persistent.

- Re-implement 2D Tracker View to use GL.

- Add GL Zoom Views.

- Display 3D Scene overlay on 2D Tracker view.

- Add Camera parameters GUI.

- Add Solve button and a solve() stub.

- Add low order lens distortion for reprojected bundle preview.

Week 5: June 17 - June 24

- Add documentation to MultiView and Reconstruction APIs.

- Add Simple Pipeline API header and documentation.

- Implement keyframe selection.

- Implement Tracks::MarkersForTracksInBothImages correctly.

- Use Reconstruction methods.

- Fix various bugs:

- OpenGL includes for MacOS.

- Apply lens distortion only in the overlay.

- Fix creation of first two keyframes.

- Add minimum track counts to reconstruction methods.

- Remove a track from the selection when its marker becomes hidden.

- Implement CoordinatesForMarkersInImage correctly.

- Fix EnforceFundamentalRank2Constraint.

- Remove random test data generation.

- Hook NViewTriangulate to simple_pipeline/multiview Intersect API.

Week 6: June 24 - July 1

Keir Mierle finished 3D reconstruction.

"The elevator sequence now reconstructs with 0.2 pixel average re-projection error"

Week 7: July 1 - July 8

- Use P_From_KRt from projection.cc.

- Remove momentum in scene view.

- Build OpenCV 2.2

- Qt OpenCV Calibration Tool

- Detect calibration checkerboard using OpenCV Calib3D.

- Compute camera intrinsic parameters

- Undistort images and corners to verify parameters

- Output custom XML lens calibration profile.

Week 8: July 8 - July 15

- Update README with instructions to build Qt OpenCV Calibration tool.

- Add Video support using FFmpeg to Qt Tracker.

- Add Video support using FFmpeg to Qt Calibration.

- Miscellaneous UI fixes

- Faster grayscale conversion

- Compute search window size from pattern size and pyramid level count.

- Provide advice to the Blender integration project.

- New parameters make tracking much more robust.

- Add raw on-disk video cache.

- This is useful for faster testing iterations. It avoids waiting for the footage to decode on startup. Using file memory mapping, we let the OS handle caching. The OS disk cache also has the advantage of being kept in memory between runs.

- Make Qt Calibration tool easier to build.

- Add "Good Features To Track" feature detection.

- Since reconstruction needs many tracks to work accurately, it becomes cumbersome for an artist to select enough uniformly spaced markers that are easy to track. Feature detection helps artists by creating an initial set of markers that are good to track.

- Add visualization of image filtering operations.

- Add FAST detector

- This detector is faster and doesn't need as much tweaking, though we still need to test whether it detects features that are compatible with KLT.

- Refactor Qt Tracker.

- As Qt Tracker is not only a test platform but also a sample application of libmv API usage, I'm keeping the different aspects of the application separated in their own modules, each corresponding to one aspect of libmv.

- Developed new Detect API.

- This API was developed in harmony with Blender developers to ensure straightforward usage.

- Added Doxygen documentation.

- Improve CameraIntrinsics API.

- Better Calibration Settings UI.

- Import XML calibration profile format as saved by Qt OpenCV Calibration tool.

- Support anamorphic video content.

- Improve Marker Zoom View.
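The raw on-disk video cache mentioned in the list above can be illustrated with a minimal sketch. It is not the Qt Tracker code: the names `write_cache` and `FrameCache` and the fixed frame size are hypothetical, and frames are assumed to be stored back to back as raw bytes. The file is memory-mapped, so the operating system's page cache decides what stays in memory, including between runs.

```python
import mmap

def write_cache(path, frames):
    """Store decoded frames back to back as raw bytes (hypothetical layout)."""
    with open(path, "wb") as out:
        for frame in frames:
            out.write(frame)

class FrameCache:
    """Read-only view on a raw frame file through a memory mapping."""

    def __init__(self, path, frame_size):
        self.frame_size = frame_size
        self.file = open(path, "rb")
        # Length 0 maps the whole file; pages are loaded lazily by the OS.
        self.data = mmap.mmap(self.file.fileno(), 0, access=mmap.ACCESS_READ)

    def __len__(self):
        return len(self.data) // self.frame_size

    def frame(self, index):
        """Return the bytes of one frame without decoding anything."""
        start = index * self.frame_size
        return self.data[start:start + self.frame_size]

    def close(self):
        self.data.close()
        self.file.close()
```

Seeking to an arbitrary frame is then a slice of the mapping, which is what makes test iterations fast once the footage has been decoded a first time.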

Meanwhile, Keir is working on auto-calibration. This method infers the camera intrinsic parameters directly from the tracked footage. It is not as accurate, but it doesn't require the user to shoot calibration footage.

Week 9: July 15 - July 22

- Improve caching.

- Improve sequence and video loading.

- Support deprecated FFmpeg API.

- Support old Qt versions (<4.7).

- Improve detection.

- Add optimized version of KLT tracker (4x faster).

- Avoid duplicate pyramid filtering.

- Keep filtered pyramid for next frame.

- Read on epipolar geometry, fundamental matrix estimation, RANSAC outlier detection.

Week 10: July 22 - July 29

- API: Support creating FloatImage without copying data.

- Merge uncalibrated reconstruction.

- New Distortion/Undistortion API:

- Compute lookup grid for fast warping of sequences.

- Support distortion/undistortion, float/ubyte, 1-4 channels using generic programming.

- Bilinear sampling.

- Process 1920x1080 images in 25ms for ubyte and 50ms for float (on A64 2GHz).

- Learn Unit Testing workflow and prepare Intersect and Resect unit tests.

- Remove tests depending on dead code.

- Fix CameraIntrinsics test.

- Add Intersect test.
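The lookup grid and bilinear sampling from the Distortion/Undistortion items above can be sketched as follows. This is an illustration only, not the libmv API: the function names are hypothetical, the image is a single-channel list of rows, and a one-term radial model (`k1`) stands in for the real camera intrinsics. The grid is computed once per sequence and reused for every frame, which is where the speed comes from.

```python
def build_lookup_grid(width, height, k1, focal, cx, cy):
    """For each output pixel, precompute the source coordinates to sample."""
    grid = []
    for y in range(height):
        row = []
        for x in range(width):
            xn, yn = (x - cx) / focal, (y - cy) / focal  # normalized coordinates
            scale = 1.0 + k1 * (xn * xn + yn * yn)       # assumed one-term radial model
            row.append((cx + focal * xn * scale, cy + focal * yn * scale))
        grid.append(row)
    return grid

def bilinear(image, x, y):
    """Sample a single-channel image at a fractional position, clamped to the borders."""
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def warp(image, grid):
    """Apply a precomputed grid to one frame; only sampling remains per frame."""
    return [[bilinear(image, sx, sy) for sx, sy in row] for row in grid]
```

With `k1 = 0` the grid is the identity and `warp` returns the input image, which is a convenient sanity check.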

Fast image distortion and undistortion still need to be integrated to Blender compositor.

Ideally, the renderer should support distortion directly instead of resampling the rendered image.

Week 11: July 29 - August 5

This week was dedicated to researching ways to refine tracking precision.

Efficient second order minimization (ESM) would be an interesting method to investigate, but it might require a significant investment to implement since there is apparently no available open source example to help a quick integration (unlike the traditional KLT method).

In the meantime, we can improve our interpolation to provide a smooth function for the tracking algorithm to work on. B-Spline interpolation is the fastest and most accurate method for a given order. In particular, cubic B-Splines offer the best tradeoff between speed and precision. B-Splines can also be differentiated easily, which yields exact smooth derivatives that are useful to KLT.
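As a rough one-dimensional illustration of why B-Splines give exact derivatives, the sketch below evaluates a uniform cubic B-Spline and its derivative from a list of coefficients. The function names are hypothetical, and the prefiltering step that turns image samples into spline coefficients (needed for true interpolation) is deliberately left out.

```python
import math

def bspline3_weights(t):
    """Weights of the four nearest coefficients for a fractional offset t in [0, 1)."""
    return (
        (1 - t) ** 3 / 6,
        (3 * t**3 - 6 * t**2 + 4) / 6,
        (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6,
        t**3 / 6,
    )

def bspline3_derivative_weights(t):
    """Exact derivatives of the weights above with respect to t."""
    return (
        -((1 - t) ** 2) / 2,
        (3 * t**2 - 4 * t) / 2,
        (-3 * t**2 + 2 * t + 1) / 2,
        t**2 / 2,
    )

def evaluate(coefficients, x):
    """Return (value, derivative) of the spline at position x."""
    i = math.floor(x)
    t = x - i
    last = len(coefficients) - 1
    # Clamp indices so that positions near the borders stay valid.
    taps = [coefficients[min(max(i - 1 + k, 0), last)] for k in range(4)]
    value = sum(w * c for w, c in zip(bspline3_weights(t), taps))
    slope = sum(d * c for d, c in zip(bspline3_derivative_weights(t), taps))
    return value, slope
```

The weights always sum to one and the derivative weights sum to zero, so coefficients lying on a straight line reproduce that line and its slope away from the borders. In two dimensions the same weights are applied separably along rows and columns.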

Generally, high quality interpolation is useful for many problems like texture filtering and image transformation.

I created a small sample application showing an image warped using cubic B-Spline interpolation. Nearest and linear interpolation are provided for comparison.

Week 12: August 5 - August 12

- Sum of Absolute Differences Tracker.

- This method is simpler, more robust, faster and more accurate.

- Improve subpixel precision.

- SSE2 optimization.

- API for affine tracking (implementation on hold).

- Collaborate with Tomato.

- Synchronize trees.

- Adapt and Merge simple_pipeline patches.

- Re-merge deprecated KLT tracker.

- Discuss priorities and issues to resolve before end of GSoC.

- Improve CameraIntrinsics.

- Lens distortion estimation

- Research various existing methods.

- Select "Algebraic Lens Distortion Estimation".

- Implement a reference application.

It seems the KLT tracker doesn't work correctly. For example, this video doesn't track at all. I couldn't find any obvious issue in the libmv KLT implementation, so its model might be too limited.

So I took the initiative to implement a much simpler (and easier to debug) tracker.

Right now, this tracker simply computes the Sum of Absolute Differences (SAD) for each integer pixel position in the search region and then refines the subpixel position using a simple square search. This algorithm is used for motion estimation in video encoders.
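The two stages described above can be sketched like this. It is a simplified illustration rather than the libmv SAD tracker: the names are hypothetical, images are single-channel lists of rows, the whole image is used as the search region, and bilinear sampling is assumed for the subpixel stage.

```python
def bilinear(image, x, y):
    """Sample a single-channel image at a fractional position, clamped to the borders."""
    h, w = len(image), len(image[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def sad(image, pattern, x, y):
    """Sum of absolute differences between the pattern and the image at (x, y)."""
    total = 0.0
    for j, row in enumerate(pattern):
        for i, value in enumerate(row):
            total += abs(bilinear(image, x + i, y + j) - value)
    return total

def track(image, pattern, refinements=4):
    """Return (x, y, cost) of the best match for the pattern's top-left corner."""
    h, w = len(image), len(image[0])
    ph, pw = len(pattern), len(pattern[0])
    # Stage 1: exhaustive search over every integer position.
    cost, x, y = min(
        (sad(image, pattern, px, py), px, py)
        for py in range(h - ph + 1)
        for px in range(w - pw + 1)
    )
    x, y = float(x), float(y)
    # Stage 2: square search around the best position, halving the step each time.
    step = 0.5
    for _ in range(refinements):
        cx, cy = x, y
        for dy in (-step, 0.0, step):
            for dx in (-step, 0.0, step):
                c = sad(image, pattern, cx + dx, cy + dy)
                if c < cost:
                    cost, x, y = c, cx + dx, cy + dy
        step /= 2
    return x, y, cost
```

Four refinements starting at half a pixel give a final step of 1/16 pixel. A production tracker would restrict stage 1 to a search window around the previous marker position.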

In my tests, I've found this method more robust, faster and more accurate. I think we should test it further and provide this alternative to Tomato users.

Tomato users are now trying to solve more complex scenes where approximate calibration of the camera intrinsic parameters prevents accurate reconstruction even with precise tracks.

The newly defined priority is to implement manual straight line calibration, which allows estimating the intrinsic parameters from the tracked footage instead of relying on external tools to perform full calibration on checkerboard images.

I researched various methods to perform lens distortion estimation and had to learn the more general techniques that are applied to this problem.

The easiest solution would be to use the "Algebraic Lens Distortion Estimation"[1] method which has sample code available. This project was under GPLv3 but the authors agreed to release it under GPLv2 for Blender usage.

I'm developing an application[2] to test the method and provide a reference which will allow the interface to be easily ported to Blender.

[1] Algebraic Lens Distortion Estimation

[2] Reference Application

Week 13: August 12 - August 20

- Lens distortion estimation.

- Complete reference implementation.

- Create and document a simple C interface.

- "Moravec" (SAD) detector.

- Detect features which are unlikely to drift.

- Detect features similar to a given pattern.

- Affine Tracking.

- Support in Tracker API.

- Support in Qt Tracker UI.

- Implemented for SAD Tracker using coordinate descent.

- Optimize integer pixel search.

- Tweak coordinate descent.

- Added regularization (area and condition number).

- Add a Laplace filter, used to avoid tracking failures caused by smooth lighting changes.

- Improve UI.

- Document API.
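To see why a Laplace filter helps with smooth lighting changes, consider the small sketch below. The 4-neighbour kernel and the function name are assumptions made for illustration and may differ from the filter actually added. Adding a constant offset or a linear brightness ramp to an image leaves its Laplacian unchanged, so a tracker comparing filtered patches does not see that kind of change.

```python
def laplace(image):
    """4-neighbour discrete Laplacian of the interior pixels (assumed kernel)."""
    h, w = len(image), len(image[0])
    return [
        [
            image[y][x - 1] + image[y][x + 1]
            + image[y - 1][x] + image[y + 1][x]
            - 4 * image[y][x]
            for x in range(1, w - 1)
        ]
        for y in range(1, h - 1)
    ]

# A small textured image, and the same image under a linear lighting ramp.
base = [[(3 * x * x + 5 * x * y + y) % 17 for x in range(6)] for y in range(6)]
lit = [[base[y][x] + 3 * x + 2 * y + 7 for x in range(6)] for y in range(6)]
same = laplace(base) == laplace(lit)  # the ramp cancels out in the filter
```

The filter also amplifies noise, so in practice it is usually combined with some smoothing.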

The SAD detector is better suited to tracking since it tries to choose patterns which are unlikely to drift by computing the SAD with neighboring patches. It could be improved to better avoid edges.
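One way to read this criterion is the Moravec-style score sketched below, which is an assumption about the idea and not the libmv detector itself. A patch is compared with its eight one-pixel-shifted neighbours, and the smallest SAD is kept as the score. A high score means the patch differs from its surroundings in every direction, so a tracker has no nearby look-alike to drift to. The names and the patch size are hypothetical.

```python
def patch_sad(image, x0, y0, x1, y1, size):
    """SAD between the size x size patches whose top-left corners are given."""
    return sum(
        abs(image[y0 + j][x0 + i] - image[y1 + j][x1 + i])
        for j in range(size)
        for i in range(size)
    )

def drift_score(image, x, y, size=3):
    """Smallest SAD between a patch and its eight shifted neighbours.

    The caller must keep a one-pixel margin between the patch and the border.
    """
    shifts = [(dx, dy) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dx, dy) != (0, 0)]
    return min(patch_sad(image, x, y, x + dx, y + dy, size) for dx, dy in shifts)

# Test image: a bright quadrant, giving a flat area, a straight edge and a corner.
image = [[100 if x >= 6 and y >= 6 else 0 for x in range(12)] for y in range(12)]
flat = drift_score(image, 1, 1)     # uniform area
edge = drift_score(image, 5, 8)     # vertical edge: sliding along it costs nothing
corner = drift_score(image, 5, 5)   # corner: every shift changes the patch
```

Flat areas and straight edges score zero while the corner scores higher, so thresholding the score selects corner-like patterns. With real, noisy footage edges score low rather than exactly zero, which is consistent with the remark that edge avoidance could be improved.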

The SAD Tracker now supports full affine transforms (rotation, scale, skew). This was necessary to make tracking usable without adapting at each frame. Since there wasn't much time left for tracker improvements (the KLT tracker was good enough until recently), I implemented a simple brute-force solution: to optimize all 6 affine parameters, I use the coordinate descent method. While this made tracking 10 times slower, there is much potential for optimization if it ever becomes necessary. In any case, the performance is still comparable to the slow pan-only KLT tracker (10ms/marker).
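Coordinate descent itself fits in a few lines. The sketch below is generic and uses hypothetical names; to stay short it minimizes a stand-in cost (squared distance between transformed and target points) instead of an image SAD, and the parameter order `(a, b, c, d, tx, ty)` is an assumption.

```python
def coordinate_descent(cost, params, step=0.5, min_step=1e-6, max_rounds=10000):
    """Minimize cost(params) by nudging one parameter at a time."""
    params = list(params)
    best = cost(params)
    for _ in range(max_rounds):
        if step < min_step:
            break
        improved = False
        for i in range(len(params)):
            for delta in (step, -step):
                trial = params[:]
                trial[i] += delta
                c = cost(trial)
                if c < best:
                    params, best, improved = trial, c, True
                    break
        if not improved:
            step /= 2  # no single-parameter move helps: refine the step
    return params, best

def apply_affine(p, x, y):
    """Map (x, y) with the 2x2 matrix (a, b, c, d) and translation (tx, ty)."""
    a, b, c, d, tx, ty = p
    return a * x + b * y + tx, c * x + d * y + ty

# Stand-in problem: recover a known transform from four point correspondences.
points = [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0), (1.0, 1.0)]
truth = [1.1, 0.2, -0.1, 0.9, 0.5, -0.3]
targets = [apply_affine(truth, x, y) for x, y in points]

def toy_cost(p):
    total = 0.0
    for (x, y), (qx, qy) in zip(points, targets):
        px, py = apply_affine(p, x, y)
        total += (px - qx) ** 2 + (py - qy) ** 2
    return total

found, residual = coordinate_descent(toy_cost, [1.0, 0.0, 0.0, 1.0, 0.0, 0.0])
```

Each round evaluates the cost up to twelve times (two directions for six parameters), which gives a feel for why the affine search is much slower than a translation-only search when the cost is an image SAD.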

Later

Blender will need to integrate straight line lens distortion estimation and image distortion/undistortion, make the affine tracking interface easy to use, and expose the Laplace filter.

Libmv will need to be refactored to remove all unused and unsupported parts. It should focus on its initial goal: structure from motion and multiview reconstruction. This simplification of libmv design will ease the maintenance, reduce compile time, decrease binary size, increase performance, and help attract contributors.

Documentation

Documentation can be found on this wiki, in the code and in the git log.

The API is extensively documented to make it easy for non-specialists to use the algorithms.

Qt Tracker also provides an example to better understand the Tracker API.

Two smaller sample applications were also developed:

Qt Lens Distortion implements lens distortion estimation and image distortion/correction through a simple documented API. It also provides a minimal UI to test the implementation, which can be used as an example.

Cubic B-Spline Interpolator implements the best cubic interpolation and allows comparison with nearest, bilinear and a commonly used low quality cubic interpolation.

I created a separate page with guidelines on how to make the best use of our algorithms and to help users understand the parameters which are exposed in the UI.

Links

Blender public FTP with test footage