User:Jwilkins/VFX/Blender Foundation Proposal


Viewport FX Proposal

Introduction

This proposal should be read as an expansion of my Google Summer of Code 2014 Proposal. In that document I proposed bringing the bulk of the work I started in 2012 to completion, but it could also be read as an abandonment of more ambitious features. I limited that proposal to make sure that the compatibility and performance enhancements from Viewport FX get into the hands of users as soon as possible. However, given longer-term funding, I believe that Viewport FX can be completed in a form closer to its original goals.

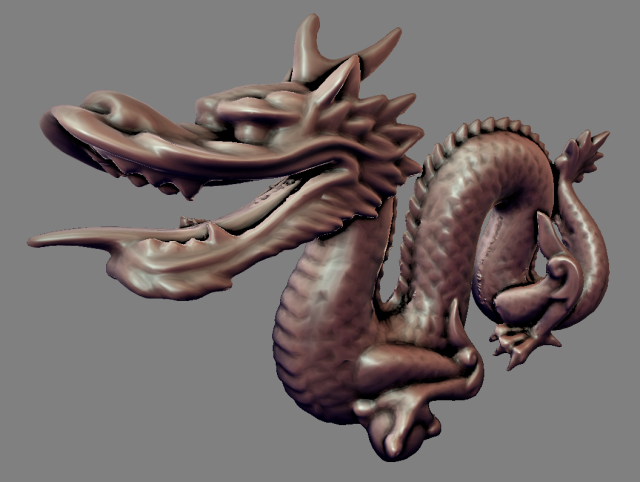

Viewport FX was conceived out of a desire to do more complicated visualization of surfaces in the viewport. My specific desire was to create what artists call a "crevice shader" for sculpting mode which would shade recessed areas differently than raised areas. (The image above is an example of what I'd like to do.) The shader is similar to the current matcap mode, but requires something that could be called "viewport compositing" to do correctly, since it needs to combine two separate matcaps according to depth as a post-process, similar to something the game engine might do. An ad hoc solution could have been written, but that would be piling more code on top of already overly complicated viewport code paths. After seeing and hearing what artists would be able to do if they had more control over how the viewport was drawn, it became clear that what is needed is the ability to write add-ons that change the way the viewport draws.

Examples

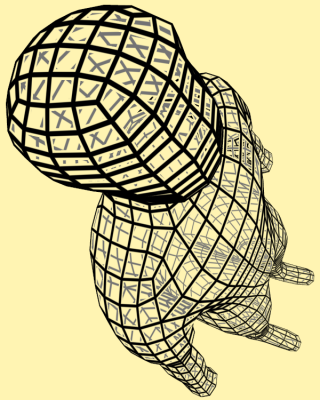

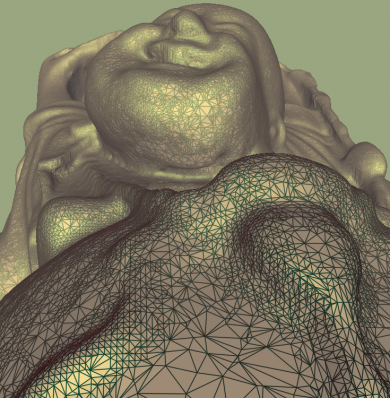

These images are from a paper about Advanced Wireframe Drawing on programmable hardware. Some older work is here: Single Pass Wireframe Rendering.

Rather than implement dozens of such visualization modes in standard Blender, the goal of Viewport FX is to let creative people make their own and share them.

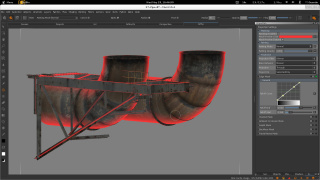

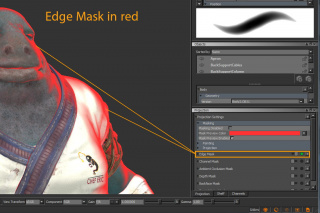

The ability to easily modify the viewport would not be limited to add-ons. A developer who wanted to create a new tool would be able to implement the visualization for that tool more easily. For example, an edge mask for painting and sculpting could be implemented by overriding aspects. Below is an example of what that might look like, taken from the 3D paint program MARI.

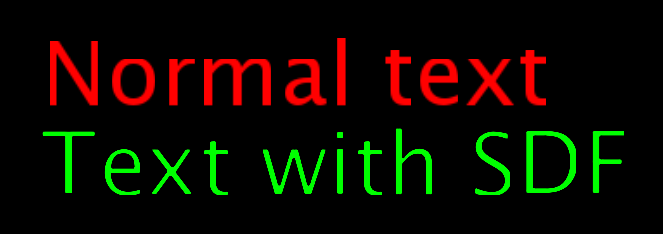

Font rendering using signed distance fields is one possible feature that becomes easier to experiment with once font rendering has been isolated in such a way that an add-on writer or developer can just drop in a new shader.

Synopsis

Previous Work

Work on Viewport FX has already proceeded through the Google Summer of Code program in 2012 and 2013. The long development can be attributed to my decision that it would be better for Viewport FX if Blender supported OpenGL 3.0 and, while I was at it, OpenGL ES for mobile. As a result, the bulk of the initial effort has been technical API work, which should yield no visible differences to users except for eventual performance increases and the ability to run Blender on tablets. Concretely, the Viewport FX branch now runs on the OpenGL 3.2 Core Profile, the MALI OpenGL ES Emulator, and the ANGLE OpenGL ES Library (which is implemented using Direct3D). Since a lot of changes have been made under the covers to make this possible, documentation for the new APIs has been written for developers. However, this is all still a work in progress, as my Google Summer of Code 2014 Proposal and the Viewport FX Issues page attest.

Future Work

In addition to the work outlined in the Summer of Code proposal, what needs to be done to achieve the goal of "viewport drawing add-ons" is a full implementation of what I call "aspects" or "shading classes". To understand aspects, think of how the drawing of solids, wireframes, fonts, materials, and images all work in their own specific ways. We could write one giant shader for all of these aspects, or we could write one shader for each of them. The advantage of writing one shader for each case is that the specialized shaders will be simpler, and if we want to change how one works, the changes do not affect the other shaders. Currently, this is as far as aspects go in Viewport FX: they are only modules into which different kinds of drawing code are divided.

I would like to expand on aspects so that add-ons can be written that override existing aspects. These overridden aspects can serve specialized visualization purposes. This will require that the current aspects be further divided into sub-aspects based on what they are drawing (e.g., an add-on writer would need to know whether the solid aspect is being used in object, edit, sculpt, or paint mode). Viewport compositing can be implemented by allowing aspects to render to offscreen buffers and then combine those buffers in a final pass (nothing like a node editor would be needed, but one could be provided in the future if desired).

Approach

It is difficult to explain the approach I am taking without getting very technical. When drawing anything in the Viewport FX version of Blender, the programmer must first choose an "aspect". As implemented, an aspect is a set of features that a shader is expected to have. For example, the "basic" aspect can handle lighting and texture mapping, while the "raster" aspect handles stippled lines and stippled polygons. There is also a separate aspect for drawing font glyphs. At the low level each aspect has its own separate GL shading language program. The advantage of this becomes apparent if, for example, somebody wanted to implement a font renderer based on signed distance fields instead of alpha blending.

If an aspect is overridden, the new code is expected to understand the same parameters that the default aspect implementation understood. For example, the basic aspect can enable or disable lighting and can apply a single texture map among other things. A new raster aspect implementation must understand how to apply stipple patterns, but needs to know nothing about lighting or texturing.
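As an illustration only, the contract described above could be sketched in Python as follows. None of these names exist in Viewport FX: `AspectSpec`, `AspectImplementation`, `check_override`, and the parameter names are hypothetical, chosen to mirror the prose. The sketch assumes an aspect can be described by the set of parameters its implementations must understand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AspectSpec:
    """Hypothetical description of what an aspect's implementations must accept."""
    name: str
    parameters: frozenset


@dataclass(frozen=True)
class AspectImplementation:
    """Hypothetical implementation: the aspect it targets and the parameters it handles."""
    spec_name: str
    handled: frozenset


# Parameter names are illustrative, loosely based on the text above.
BASIC = AspectSpec("basic", frozenset({"use_lighting", "texture"}))
RASTER = AspectSpec("raster", frozenset({"line_stipple", "polygon_stipple"}))


def check_override(spec, impl):
    """Return the sorted required parameters that the override does not handle."""
    if impl.spec_name != spec.name:
        raise ValueError(f"{impl.spec_name!r} cannot override aspect {spec.name!r}")
    return sorted(spec.parameters - impl.handled)


# A replacement raster aspect needs stipple support but nothing about lighting.
custom_raster = AspectImplementation(
    "raster", frozenset({"line_stipple", "polygon_stipple"})
)
incomplete_raster = AspectImplementation("raster", frozenset({"line_stipple"}))

print(check_override(RASTER, custom_raster))      # []
print(check_override(RASTER, incomplete_raster))  # ['polygon_stipple']
```

Checking an override against the aspect's parameter set at registration time is one possible design. It would let a broken add-on be rejected before it draws anything.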

It should be apparent that applying a new aspect implementation globally would be undesirable. For example, one might want a different wireframe visualization for the viewport, but not for the UV editor. For that reason, when declaring which aspect is needed, there is also the option of providing a more specific aspect context. The aspect context lets one separate the viewport and the UV editor so that their implementations of the raster aspect can be overridden separately.
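One way to picture aspect contexts is as a lookup that prefers the most specific override and falls back to the default implementation. The sketch below is a guess at the idea, not the Viewport FX API: `AspectRegistry`, its methods, and the context labels `"VIEW_3D"` and `"UV_EDITOR"` are all hypothetical, and implementations are represented by plain strings.

```python
class AspectRegistry:
    """Hypothetical registry mapping (aspect, context) pairs to implementations."""

    def __init__(self):
        self._defaults = {}
        self._overrides = {}

    def register_default(self, aspect, impl):
        self._defaults[aspect] = impl

    def override(self, aspect, impl, context=None):
        # context=None replaces the aspect everywhere it is used.
        self._overrides[(aspect, context)] = impl

    def resolve(self, aspect, context=None):
        # Most specific match first: exact context, then global override, then default.
        for key in ((aspect, context), (aspect, None)):
            if key in self._overrides:
                return self._overrides[key]
        return self._defaults[aspect]


registry = AspectRegistry()
registry.register_default("raster", "default_raster")
registry.override("raster", "fancy_wireframe", context="VIEW_3D")

print(registry.resolve("raster", "VIEW_3D"))    # fancy_wireframe
print(registry.resolve("raster", "UV_EDITOR"))  # default_raster
```

Here the viewport gets the new wireframe visualization while the UV editor keeps the default, which is the separation the aspect context is meant to provide.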

The final component of aspects is support for viewport compositing. (This is not anything as sophisticated as Blender's compositor.) Viewport compositing is the ability to have an aspect implementation render to multiple offscreen buffers and combine the results in a final operation. Screen space ambient occlusion, deferred shading, and bloom are examples of drawing algorithms that require this kind of post-process pass.
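The final combining step can be sketched on the CPU with plain Python lists standing in for offscreen buffers. This is only a toy model of the crevice-shader idea from the introduction, under stated assumptions: the function name `composite_crevice`, the `strength` parameter, and the 3x3 neighborhood test are all invented for illustration, and a real implementation would run as a GLSL pass over GPU buffers.

```python
def composite_crevice(raised, recessed, depth, strength=4.0):
    """Blend two color buffers per pixel; pixels deeper than their
    neighborhood lean toward the `recessed` buffer."""
    height, width = len(depth), len(depth[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Mean depth of the 3x3 neighborhood, clipped at the buffer edges.
            neighbors = [
                depth[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            mean = sum(neighbors) / len(neighbors)
            # Assumes larger depth means farther from the viewer,
            # so a positive difference marks a recess.
            weight = min(1.0, max(0.0, (depth[y][x] - mean) * strength))
            row.append(tuple(
                (1.0 - weight) * a + weight * b
                for a, b in zip(raised[y][x], recessed[y][x])
            ))
        out.append(row)
    return out


# Three 3x3 "offscreen buffers": two flat colors and a depth buffer with a pit in the center.
white = [[(1.0, 1.0, 1.0)] * 3 for _ in range(3)]
black = [[(0.0, 0.0, 0.0)] * 3 for _ in range(3)]
depth = [[0.5, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 0.5]]

result = composite_crevice(white, black, depth)
print(result[1][1])  # (0.0, 0.0, 0.0): the pit takes the recessed color
print(result[0][0])  # (1.0, 1.0, 1.0): the rim keeps the raised color
```

In the matcap case, `raised` and `recessed` would be the two separately rendered matcap passes rather than flat colors.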

Risks

Are alternative viewport visualization modes just a gimmick?

I provided examples that I hope will ease any concerns that this would just be a gimmick. The viewport is not just a place to view an approximation of your final result, but a place to visualize your work in ways that help you understand it better. Of course some alternatives to the standard may be gimmicky (I joked about adding a lens flare mode as soon as it was possible...), but certainly other visualizations will be practical.

Are users not as interested in this kind of power as they appear?

Although the users I've communicated with about this seemed very enthusiastic, it is certainly possible that your average Blender user might not care much. For them there are still the performance and increased compatibility benefits from the work that has been done on Viewport FX.

Should this be reserved for Blender developers and not be exposed as part of the add-on API?

It would certainly save some incremental amount of work to not expose a Python interface for Viewport FX, but then this becomes more of an internal project that appears to mostly benefit developers. All of the examples I have given could be done in some ad hoc way, but by putting in the effort to make things more general we can open it up to the broader community, who will make things that might surprise us. Python and GLSL are more "democratic" than C.

Schedule

The schedule for the Summer of Code version of this proposal is 3 months and does not include making aspects overridable. That schedule assumes that I will be able to work 40 hours a week for 12 weeks.

For this proposal I would like to extend the schedule by working 20 hours a week for an additional 16 weeks. (At 320 hours, this is the same amount of time as two thirds of a Summer of Code.)

(This is still really rough. If you have any questions please contact me.)

Milestones

- Make it possible to override the aspects as they currently exist in Viewport FX.

- Identify and implement all of the different aspect contexts in Blender.

- Implement an offscreen buffer API that can be used by aspects.

- Add ability for aspect implementations to trigger a viewport compositing step.

- Extend the Python API so that aspect implementations can be written in Python.
