User:Psy-Fi/Workflow Shaders

Workflow Shaders

So, what is a workflow shader? Basically, it's a visualization of data.

When people work in 3D, Blender automates this process by requesting the relevant data for the user and presenting it in a pre-configured way for every object mode.

In Blender there are no "workflows". Instead, there is a set of pre-made visualization modes (solid, wireframe, bounding box, textured, material, rendered), and these are modified for every object mode in ways that are not really transparent to the user. Blender's drawing code currently does a lot of pre-processing to convert Blender's internal data representation into a form that legacy OpenGL understands (such as converting weight values to colors). Shaders change all this. With shaders, it is possible to feed data directly to the GPU and let users request and manipulate that data arbitrarily, instead of hardcoding specific modes.

Take weight painting visualization as an example:

It maps a value (the weight) to a color ramp.

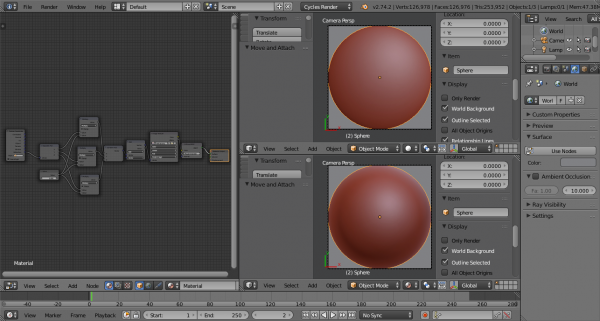
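A minimal sketch of that mapping follows. It is written in Python for readability; in a viewport it would be a few lines of GLSL. The ramp stops (blue, cyan, green, yellow, red) are an assumption loosely modeled on the familiar weight-paint look, not Blender's exact colors. `RAMP` and `weight_to_color` are hypothetical names.

```python
from bisect import bisect_right

# Hypothetical color ramp: (position, (r, g, b)) stops sorted by position.
# The colors are assumed, roughly the usual blue-to-red weight paint look.
RAMP = [
    (0.0, (0.0, 0.0, 1.0)),   # weight 0 -> blue
    (0.25, (0.0, 1.0, 1.0)),  # cyan
    (0.5, (0.0, 1.0, 0.0)),   # green
    (0.75, (1.0, 1.0, 0.0)),  # yellow
    (1.0, (1.0, 0.0, 0.0)),   # weight 1 -> red
]


def weight_to_color(weight, ramp=RAMP):
    """Map a scalar weight to RGB by linear interpolation between ramp stops."""
    # Clamp so out-of-range weights get the end colors.
    weight = min(max(weight, ramp[0][0]), ramp[-1][0])
    positions = [pos for pos, _ in ramp]
    # Index of the first stop strictly to the right of the weight.
    i = bisect_right(positions, weight)
    if i >= len(ramp):
        return ramp[-1][1]
    (p0, c0), (p1, c1) = ramp[i - 1], ramp[i]
    t = (weight - p0) / (p1 - p0)
    return tuple(a + (b - a) * t for a, b in zip(c0, c1))
```

On the GPU, the weight would arrive as a per-vertex attribute and this lookup would run per fragment. No CPU-side conversion of weights to colors is needed.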

Another example is a matcap shader. This is almost possible already, though the setup is a bit complicated because we are still missing some functionality needed to make it elegant.
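The core of a matcap lookup is small. The view-space normal's x and y components are remapped from [-1, 1] to [0, 1] and used as texture coordinates into a lit-sphere image. The sketch below shows that common formulation in Python. It does not necessarily match how Blender's matcap code does it. The nearest-neighbor sampling and the names `matcap_uv` and `sample_matcap` are illustrative assumptions.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def matcap_uv(view_normal):
    """Map a view-space normal to matcap texture coordinates in [0, 1]."""
    nx, ny, _ = normalize(view_normal)
    return (nx * 0.5 + 0.5, ny * 0.5 + 0.5)


def sample_matcap(image, uv):
    """Nearest-neighbor lookup in an image given as a list of rows (row 0 at the bottom)."""
    height, width = len(image), len(image[0])
    # Clamp so u == 1.0 or v == 1.0 still lands on the last texel.
    x = min(int(uv[0] * width), width - 1)
    y = min(int(uv[1] * height), height - 1)
    return image[y][x]
```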

What OpenGL cannot easily do is topology-dependent operations, such as computing a vertex normal from the faces adjacent to that vertex. (Actually it -can- do that, but we would have to be clever about how we pass data to the GPU, and it would also require modern OpenGL.)
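To make the topology dependence concrete, here is a CPU-side sketch that computes vertex normals by summing the normals of the faces adjacent to each vertex. Each triangle contributes its unnormalized cross product, which weights it by area. That weighting is one common choice and an assumption here, not necessarily what Blender does. Every vertex needs to know its neighboring faces, and a vertex shader that processes one vertex at a time does not see them.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def vertex_normals(positions, triangles):
    """Area-weighted vertex normals computed from triangle connectivity."""
    sums = [[0.0, 0.0, 0.0] for _ in positions]
    for i0, i1, i2 in triangles:
        p0, p1, p2 = positions[i0], positions[i1], positions[i2]
        # Unnormalized face normal; its length is twice the triangle's area.
        n = cross(sub(p1, p0), sub(p2, p0))
        # Scatter the face normal to each of the face's three vertices.
        for i in (i0, i1, i2):
            for k in range(3):
                sums[i][k] += n[k]
    normals = []
    for s in sums:
        length = math.sqrt(s[0] ** 2 + s[1] ** 2 + s[2] ** 2)
        # Vertices with no adjacent faces keep a zero normal.
        normals.append(tuple(c / length for c in s) if length > 0 else (0.0, 0.0, 0.0))
    return normals
```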

User Benefits

So how does this work on the user side? Blender will keep a list of workflow shaders. It will offer some default shaders of its own, and users will also be able to modify those or add their own. Each shader will be relevant in certain modes (sculpt, vertex paint, etc.), and users will be able to make a workflow shader available in any mode they want. For instance, the weight paint workflow shader above probably does not make much sense in vertex paint mode, but users will still be able to allow or forbid it there. The catch is that the data the shader requests must be available. For this, we will probably add ways to easily access active data layers as well as named layers. There will also be a "global" mode that lets users set any of these shaders as an override for all current workflow shaders, exactly like matcap works currently.
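One way to picture this user-side model is a registry of workflow shader entries. Each entry lists the modes it is allowed in and the data layers it requests. An optional global override takes precedence over per-mode choices. The class, fields, function and layer names below (`WorkflowShader`, `allowed_modes`, `required_layers`, `available_shaders`, `active_vertex_group`) are hypothetical and only illustrate the rules described above. The mode strings are illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WorkflowShader:
    name: str
    # Modes in which the user allows this shader (e.g. "SCULPT", "WEIGHT_PAINT").
    allowed_modes: set = field(default_factory=set)
    # Data layers the shader reads; all of them must exist on the mesh.
    required_layers: set = field(default_factory=set)


def available_shaders(registry, mode, mesh_layers, global_override: Optional[str] = None):
    """Return the shaders usable in `mode` for a mesh that provides `mesh_layers`."""
    by_name = {s.name: s for s in registry}
    if global_override is not None:
        # A global override replaces every per-mode choice (like matcap today),
        # but it still cannot run without the data it requests.
        shader = by_name[global_override]
        return [shader] if shader.required_layers <= mesh_layers else []
    return [
        s for s in registry
        if mode in s.allowed_modes and s.required_layers <= mesh_layers
    ]
```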

Such workflow shaders can of course be combined arbitrarily with a material (indeed, seeing materials -is- a workflow) and overlaid on top of it. In general, this should make any combination users want possible. The focus in the Blender code base thus moves away from coding preconfigured visualizations and toward making data available for real-time display easily and effectively. In the future, we could allow users to paint arbitrary data layers on their 3D models (instead of having hardcoded color or weight layers). They could then connect that data through their shaders to get the effect they want, for instance by painting how metallic a PBR shader should be.
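The sketch below illustrates the two ideas in this paragraph. First, a workflow shader's RGBA output is overlaid on a material color with a simple alpha blend. Second, a painted per-vertex layer drives a PBR metallic parameter. The alpha blend and the metallic rule are assumptions chosen as common conventions; the original does not specify them. The metallic rule is that dielectrics reflect about 4% and metals reflect their base color. The layer name `metallic` and the function names are hypothetical.

```python
def mix(a, b, t):
    """Component-wise linear interpolation, like GLSL mix()."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def overlay(material_rgb, workflow_rgba):
    """Alpha-blend a workflow shader's RGBA output over the material's RGB color."""
    r, g, b, alpha = workflow_rgba
    return mix(material_rgb, (r, g, b), alpha)


def specular_f0(base_color, metallic):
    """Common PBR convention: dielectrics reflect ~4%, metals reflect their base color."""
    return mix((0.04, 0.04, 0.04), base_color, metallic)


def vertex_f0(base_color, layers, vertex_index, layer_name="metallic"):
    """Drive the metallic parameter from a painted per-vertex layer (hypothetical layout)."""
    return specular_f0(base_color, layers[layer_name][vertex_index])
```

Here the painted layer is an ordinary named layer. The shader only needs to request it by name, and does not rely on a hardcoded color or weight layer.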

For all this to work, we should of course provide some "closures", similar to how Cycles treats its materials. It would be nice to allow certain light groups to be used with those closures. For instance, a PBR shader could use Blender's 3-light setup, or a solid shader could use all scene lights (with some care to avoid bugs such as https://developer.blender.org/T44095).
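To illustrate closures paired with light groups, here is a sketch in which a closure is simply a function of surface normal, light direction, light color and albedo, and the caller picks which light group feeds it. The Lambertian diffuse term is standard. The contents of `LIGHT_GROUPS` are made-up values, not Blender's actual solid-light parameters. In particular, the three directional lights only stand in for the 3-light setup.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def diffuse_closure(normal, light_dir, light_color, albedo):
    """Lambertian diffuse: albedo * light color * max(N.L, 0)."""
    n_dot_l = max(dot(normalize(normal), normalize(light_dir)), 0.0)
    return tuple(a * c * n_dot_l for a, c in zip(albedo, light_color))


# Hypothetical light groups: lists of (direction toward the light, RGB intensity).
LIGHT_GROUPS = {
    "three_light": [
        ((0.0, 0.0, 1.0), (0.8, 0.8, 0.8)),
        ((1.0, 1.0, 0.5), (0.3, 0.3, 0.3)),
        ((-1.0, 0.5, 0.5), (0.2, 0.2, 0.2)),
    ],
    "scene": [((0.0, 0.0, 1.0), (1.0, 1.0, 1.0))],
}


def shade(closure, normal, albedo, group):
    """Sum a closure's contribution over every light in the chosen light group."""
    total = [0.0, 0.0, 0.0]
    for light_dir, light_color in LIGHT_GROUPS[group]:
        contribution = closure(normal, light_dir, light_color, albedo)
        for k in range(3):
            total[k] += contribution[k]
    return tuple(total)
```

Keeping the closure separate from the light loop means the same material code can be lit by the studio-style group or by the scene's lights without changes.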

Workflow assignment

Workflows could be assigned per mode, per object, or per group. Some users might even want to use two workflows at once. We will probably discover use cases for these as users come with requests, but for now we should at least be able to replicate the way modes currently work.
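If workflows can be assigned at several levels, some precedence rule is needed. The sketch below assumes, purely for illustration, that an object assignment beats a group assignment, which in turn beats the per-mode default. It also returns a list, so that two workflows can be active at once. Neither the order nor the function name `resolve_workflows` comes from the original.

```python
def resolve_workflows(obj_name, obj_groups, mode, per_object, per_group, per_mode):
    """Pick the workflow list for an object, assuming object > group > mode precedence."""
    if obj_name in per_object:
        return per_object[obj_name]
    # Groups are checked in the order given; the first group with an assignment wins.
    for group in obj_groups:
        if group in per_group:
            return per_group[group]
    # Fall back to the per-mode default, which replicates how modes work today.
    return per_mode.get(mode, [])
```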

Data Invalidation

Ideally, the new code will be properly hooked into the depsgraph, so that an operation invalidates only the affected parts of an object's display buffers. This should help minimize the update cost.
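A minimal sketch of partial invalidation follows. Each object keeps one display buffer per kind of data plus a set of dirty kinds, so an update rebuilds only what it touched. The buffer kinds and the `DisplayBuffers` class are hypothetical, and the real depsgraph hookup is not modeled. `tag_update` merely stands in for a depsgraph notification.

```python
class DisplayBuffers:
    """Per-object display buffers, rebuilt lazily and only where invalidated."""

    KINDS = ("positions", "normals", "weights", "uvs")

    def __init__(self, build_fn):
        # build_fn(kind) produces the buffer contents for one kind of data.
        self.build_fn = build_fn
        self.buffers = {}
        self.dirty = set(self.KINDS)  # everything must be built the first time
        self.rebuild_count = 0

    def tag_update(self, *kinds):
        """Stand-in for a depsgraph notification: mark only the affected buffers."""
        self.dirty.update(kinds)

    def ensure(self):
        """Rebuild dirty buffers before drawing; clean buffers are reused as-is."""
        for kind in sorted(self.dirty):
            self.buffers[kind] = self.build_fn(kind)
            self.rebuild_count += 1
        self.dirty.clear()
        return self.buffers
```

For example, a weight paint stroke would tag only `"weights"`, leaving positions, normals and UVs untouched on the GPU.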

Integration with external engines

External engines will mostly need viewport integration to provide some sort of material visualization. A render engine should be able to register its own workflow shaders, or even hook its own data into the shaders if needed. However, this is more involved than working with the existing nodes or using a GLSL source node (a feature that will be part of the new viewport). It probably makes sense for external engine materials to provide only uniform GLSL data, since they are unlikely to generate their own mesh data, but this is still to be decided.
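To illustrate the "uniforms only" idea, here is a sketch in which an external engine hands the viewport a material made of GLSL source plus uniform values. Mesh attributes stay on Blender's side. Every name here (`EngineMaterial`, `uniform_declarations`, the `u_` uniforms) is hypothetical, since the original leaves this interface undecided. Only the generated `uniform float` and `uniform vec3` declarations follow real GLSL syntax.

```python
from dataclasses import dataclass, field


@dataclass
class EngineMaterial:
    """What an external engine might hand to the viewport: shader code plus uniform values."""
    glsl_source: str
    # Uniform name -> value; floats map to GLSL float, 3-tuples to vec3.
    uniforms: dict = field(default_factory=dict)


def glsl_type(value):
    if isinstance(value, float):
        return "float"
    if isinstance(value, tuple) and len(value) == 3:
        return "vec3"
    raise TypeError(f"unsupported uniform value: {value!r}")


def uniform_declarations(material):
    """Generate the uniform declarations the viewport would prepend to the engine's source."""
    return "\n".join(
        f"uniform {glsl_type(v)} {name};" for name, v in sorted(material.uniforms.items())
    )
```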