User:Lukasstockner97/GSoC 2016/Documentation/Updated workflow proposal


Updated Workflow and UI proposal

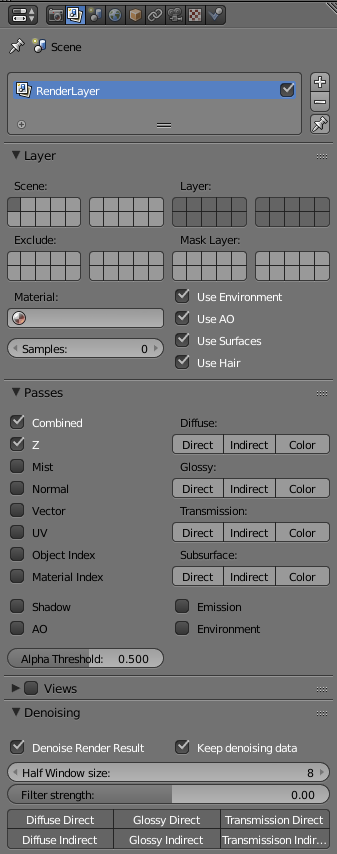

UI Layout

Originally, the options were supposed to be in the render properties, but it makes more sense to have separate options for each RenderLayer. A new option in this design is "Keep denoising data"; its function is explained below.

Workflow

The original proposal included a single checkbox for enabling denoising right after rendering, mainly to save memory. However, a frequently requested feature in the feedback on the proof-of-concept version was the ability to tune the parameters and re-filter without re-rendering. The main goal is still to reduce the need to tweak parameters, but always producing the best result fully automatically is not realistic. Therefore, the option is being split into two:

- "Denoise Render Result" will work just like the old option did, denoising right after rendering, before passing the result to the compositor.

- "Keep denoising data" will store the denoiser's input feature passes in the render result as additional render passes.

If "Keep denoising data" was enabled during rendering, the user can later denoise the render result manually with the current settings by pressing a button, either in the Image Editor or on the Render Result input in the compositor. The additional passes would not be listed in the UI like the regular ones; instead, their presence would be indicated by the manual denoise button.
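The two options and the manual denoise button can be sketched as follows. This is a minimal, hypothetical model, not Blender code: `RenderLayerSettings`, `RenderResult`, `finish_render`, `can_denoise_manually`, `manual_denoise`, and the `radius` parameter are illustrative names, images are plain lists of floats, and `denoise` is a placeholder box blur rather than the actual feature-guided filter. It also assumes that the noisy color is stored together with the feature passes, so that re-filtering with new settings starts from the undenoised image.

```python
from dataclasses import dataclass, field


# Hypothetical stand-ins for the two proposed per-RenderLayer options;
# these are not real Blender property names.
@dataclass
class RenderLayerSettings:
    denoise_render_result: bool = False
    keep_denoising_data: bool = False
    radius: int = 1  # hypothetical tunable denoiser parameter


@dataclass
class RenderResult:
    passes: dict = field(default_factory=dict)          # visible passes
    denoising_data: dict = field(default_factory=dict)  # hidden passes


def denoise(color, features, radius):
    """Placeholder filter: a box blur of the given radius that ignores
    `features`. The real denoiser uses the feature passes as guidance."""
    n = len(color)
    out = []
    for i in range(n):
        window = color[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def finish_render(settings, noisy, features):
    result = RenderResult(passes={"Combined": list(noisy)})
    if settings.denoise_render_result:
        # Denoise before the result is handed to the compositor.
        result.passes["Combined"] = denoise(noisy, features, settings.radius)
    if settings.keep_denoising_data:
        # Assumption: keep the noisy color alongside the features.
        result.denoising_data = {"Noisy": list(noisy), **features}
    return result


def can_denoise_manually(result):
    # The manual denoise button is shown only if the hidden passes exist.
    return bool(result.denoising_data)


def manual_denoise(result, settings):
    if not can_denoise_manually(result):
        raise ValueError("rendered without 'Keep denoising data'")
    data = result.denoising_data
    features = {k: v for k, v in data.items() if k != "Noisy"}
    # Always filter the stored noisy image with the *current* settings.
    result.passes["Combined"] = denoise(data["Noisy"], features, settings.radius)
```

Because `manual_denoise` always starts from the stored noisy data, pressing the button again after changing `radius` replaces the previous result instead of filtering an already filtered image.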

Implementation

Internally, I would implement this as a generic "post-process" operation in the Render API, which allows renderers to store additional information or passes in the render result and later use them to update the result when the operation is called.
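The split between the host application and the renderer in such a generic operation could look roughly like this. It is a hypothetical sketch, not the actual Render API and not `bpy.types.RenderEngine`: `PostProcessEngine`, `can_post_process`, `post_process`, `run_post_process`, and the toy engine are illustrative names. The point it shows is that the host only stores the renderer's extra data and calls the hook; it never interprets that data itself.

```python
from abc import ABC, abstractmethod


class RenderResult:
    """Minimal stand-in for a render result (hypothetical)."""

    def __init__(self):
        self.passes = {}  # passes shown in the UI and sent to the compositor
        self.extra = {}   # renderer-private data: stored, not displayed


class PostProcessEngine(ABC):
    """Hypothetical engine interface with an optional post-process hook."""

    @abstractmethod
    def render(self, result):
        """Fill result.passes and, optionally, result.extra."""

    def can_post_process(self, result):
        return False  # engines without the feature never show the button

    def post_process(self, result):
        raise NotImplementedError


def run_post_process(engine, result):
    """Host side: call the hook only if the engine reports usable data."""
    if not engine.can_post_process(result):
        return False
    engine.post_process(result)
    return True


class ToyDenoisingEngine(PostProcessEngine):
    """Toy engine whose 'denoising' pulls each pixel toward the mean."""

    def __init__(self, strength=0.5, keep_data=True):
        self.strength = strength  # hypothetical tunable parameter
        self.keep_data = keep_data

    def render(self, result):
        noisy = [0.0, 4.0, 0.0, 4.0]  # fixed fake render
        result.passes["Combined"] = list(noisy)
        if self.keep_data:
            result.extra["Noisy"] = list(noisy)

    def can_post_process(self, result):
        return "Noisy" in result.extra

    def post_process(self, result):
        noisy = result.extra["Noisy"]
        mean = sum(noisy) / len(noisy)
        s = self.strength
        # Recompute from the stored data so repeated calls do not compound.
        result.passes["Combined"] = [(1 - s) * v + s * mean for v in noisy]
```

Keeping the hook generic means other renderers could reuse the same mechanism for post-processing steps unrelated to denoising.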

Animation denoising

This workflow has an additional benefit: if the denoising features are present in the render result, they are also stored in Multilayer EXR output. That way, temporal denoising of EXR sequences using neighboring frames could be implemented, either directly in Blender or through the standalone Cycles binary. Of course, that would also require an option to run only the compositor on EXR sequences. In short, the pipeline for standalone rendering would be: render all frames to Multilayer EXR files -> denoise all frames -> run the compositor on all frames.
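The reason for three separate passes over the sequence is that denoising frame f with its neighbors needs frames after f to exist already. A minimal sketch of that ordering, under stated assumptions: in-memory dicts stand in for the Multilayer EXR files, `render`, `denoise`, and `composite` are caller-supplied placeholders, and the symmetric `radius` window is a hypothetical choice, since the proposal only says that neighboring frames would be used.

```python
def neighbor_window(frame, first, last, radius):
    """Frames whose data is used to denoise `frame`: up to `radius` frames
    on each side, clamped to the start and end of the sequence."""
    lo = max(first, frame - radius)
    hi = min(last, frame + radius)
    return list(range(lo, hi + 1))


def run_pipeline(first, last, radius, render, denoise, composite):
    """Run the three stages over the whole sequence, one after another.
    Dicts stand in for the Multilayer EXR files written between stages."""
    frames = range(first, last + 1)
    # Stage 1: render every frame, keeping the denoising data.
    rendered = {f: render(f) for f in frames}
    # Stage 2: denoise each frame from its neighbors. This requires stage 1
    # to be finished, because frame f reads frames up to f + radius.
    denoised = {
        f: denoise(f, [rendered[n] for n in neighbor_window(f, first, last, radius)])
        for f in frames
    }
    # Stage 3: run only the compositor on the denoised frames.
    return {f: composite(denoised[f]) for f in frames}
```

For example, with frames 1 to 5 and `radius=2`, frame 1 is denoised from frames 1 to 3 and frame 3 from frames 1 to 5.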