User:Keir/TrackingDiary

Contents

- 1 Status

- 2 Changelog

- 2.1 Saturday, October 13th, 2007

- 2.2 Friday, September 29th, 2007

- 2.3 Thursday, September 28th, 2007

- 2.4 Tuesday, September 25th, 2007

- 2.5 Monday, July 30th, 2007

- 2.6 Thursday, July 19th, 2007

- 2.7 Tuesday, July 17th, 2007

- 2.8 Sunday, July 15th, 2007

- 2.9 Saturday, July 14th, 2007

- 2.10 Wednesday, July 4th, 2007

- 2.11 Tuesday, July 3rd, 2007

- 2.12 Monday, July 2nd, 2007

- 2.13 Sunday, July 1st, 2007

- 2.14 Monday, June 25th, 2007

- 2.15 Tuesday, June 19th, 2007

- 2.16 Monday, June 18th, 2007

- 2.17 Sunday, June 10th, 2007

- 2.18 Friday, June 8th, 2007

- 2.19 Thursday, June 7th, 2007

- 2.20 Wednesday, June 6th, 2007

- 2.21 Tuesday, June 5th, 2007

- 2.22 Monday, June 4th, 2007

- 2.23 Sunday, June 3rd, 2007

- 2.24 Saturday, June 2nd, 2007

- 2.25 Friday, June 1st, 2007

- 2.26 Thursday, May 24th, 2007

- 2.27 Thursday, May 24th, 2007

- 2.28 Wednesday, May 23rd, 2007

- 2.29 Tuesday, May 22nd, 2007

- 2.30 Monday, May 21st, 2007

- 2.31 Sunday, May 20th, 2007

- 2.32 Saturday, May 19th, 2007

- 2.33 Friday, May 18th, 2007

- 2.34 Thursday, May 17th, 2007

- 2.35 Wednesday, May 16th, 2007

- 2.36 Tuesday, May 15th, 2007

- 2.37 Monday, May 14th, 2007

- 2.38 Sunday, May 13th, 2007

- 2.39 Saturday, May 12th, 2007

- 2.40 Friday, May 11th, 2007

- 2.41 Thursday, May 10th, 2007

- 2.42 Wednesday, May 9th, 2007

- 2.43 Tuesday, May 8th, 2007

- 2.44 Monday, May 7th, 2007

- 2.45 Sunday, May 6th, 2007

- 2.46 Saturday, May 5th 2007

- 2.47 Friday, May 4th 2007

- 2.48 Thursday, April 27th 2007

Status

This page is now deprecated. The project has been renamed to libmv. Please visit the new project home and, for those keeping track, my current project diary.

Everything below here is outdated!

What works

- Simple feature tracking via the KLT algorithm

- Robust three-frame reconstruction

- Robust merging of projective reconstructions also via AMLESAC

- Automatic projective reconstruction of a sequence

- Metric upgrade of a sequence with fixed and known camera calibration.

- Metric bundle adjustment

What doesn't work

- Autocalibration

- Correction for radial distortion

- Features beyond points (lines, squares, etc.)

- Bundle adjustment for scenes with varying cameras (zoom, or bag-of-photos-from-different-cameras)

The Plan

- Determine ground truth 3D locations of the tracks from the blender file.

- This is not as easy as it sounds, as the rays back-projected from the 2D tracks don't necessarily intersect at a single point on the model due to tracking errors.

- I will have to detect tracking outliers via the ground truth data; I'm still not sure what criteria to use.

- Write RANSAC driver for alignment of 3D point sets (to align ground truth data to my metric reconstruction)

- Write evaluation code to compare camera pose and point locations to the ground truth after aligning the reconstruction.

- Write autocalibration code

Changelog

Saturday, October 13th, 2007

- I have robust ground truth alignment working nicely now.

- It works pretty well on the test sequence. Most of the points are within 0.25% of their true position, relative to the scene size.

- I rewrote all file formats to use a custom JSON parser

- I started writing an ICVS paper for submission on November 12th.

- I added custom attributes to Blender's ImBuf, and then exported those into the resulting EXR files.

- Using the custom attribs, I now embed camera data into the EXR files by default.
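A minimal sketch of the kind of evaluation described above. It assumes the reconstruction has already been aligned to the ground truth. It also assumes "scene size" means the diagonal of the ground-truth bounding box; that definition is an assumption, not something the diary specifies. The function names are hypothetical.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Point3 = std::array<double, 3>;

// Hypothetical scene-size measure: the diagonal of the axis-aligned bounding
// box of the ground-truth points.
double SceneSize(const std::vector<Point3>& truth) {
  assert(!truth.empty());
  Point3 lo = truth[0], hi = truth[0];
  for (const Point3& p : truth) {
    for (int k = 0; k < 3; ++k) {
      lo[k] = std::min(lo[k], p[k]);
      hi[k] = std::max(hi[k], p[k]);
    }
  }
  double d2 = 0.0;
  for (int k = 0; k < 3; ++k) d2 += (hi[k] - lo[k]) * (hi[k] - lo[k]);
  return std::sqrt(d2);
}

// Fraction of already-aligned reconstructed points whose distance to the
// matching ground-truth point is below `tolerance` times the scene size
// (tolerance = 0.0025 for 0.25%).
double FractionWithinTolerance(const std::vector<Point3>& aligned,
                               const std::vector<Point3>& truth,
                               double tolerance) {
  assert(aligned.size() == truth.size());
  const double limit = tolerance * SceneSize(truth);
  std::size_t good = 0;
  for (std::size_t i = 0; i < truth.size(); ++i) {
    double d2 = 0.0;
    for (int k = 0; k < 3; ++k) {
      const double diff = aligned[i][k] - truth[i][k];
      d2 += diff * diff;
    }
    if (std::sqrt(d2) < limit) ++good;
  }
  return static_cast<double>(good) / static_cast<double>(truth.size());
}
```

Normalizing by scene size makes the threshold independent of the arbitrary scale of the reconstruction.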

Friday, September 29th, 2007

- I re-rendered my test sequence and also generated ground truth using my new code (see yesterday's patch).

- I re-tracked the test sequence with 1000 feature points. I also built the sub-reconstructions for triples of consecutive frames. I used the full 64-frame set this time, instead of only the last 35 frames. I discovered my RANSAC code still has some problems in cases where the EM code discards too many samples. Right now I have no sanity check on how many samples get discarded due to numerical issues during the EM fit of the inlier/outlier distributions. I'll have to fix that.

- My plan is to add a 'maximum discard fraction' knob. Yet another knob, but I don't see any easy way around this one.

- Turns out I already wrote that knob; I pushed the fraction up to 60% from 30% and it appears to work nicely.


- I updated my patch to properly handle points which do not hit the scene. Now pixels which miss the scene geometry get w=0, with (x,y,z) giving the corresponding point on the plane at infinity.

Thursday, September 28th, 2007

- I rewrote my world coordinate patch to be a Render Layer, rather than a node; this is much cleaner and is definitely the right approach.

- I also wrote a camera output node, which adds the camera matrices into the RenderResult struct, and then outputs them as 4x4 CB_VALUE CompBufs.

- The patch is cleaned up and ready for merging with upstream: https://projects.blender.org/tracker/?func=detail&aid=7426&group_id=9&atid=127

- I figured out how to add a radial distortion node fairly easily. I will probably do that soon.

Tuesday, September 25th, 2007

- I dropped off the face of my thesis work during crunch time at my internship and didn't make much (any) progress on the tracker; however, now I'm back.

- I started supervising three students at U of Toronto to enhance Blender's nodes to better compete with Filter Forge.

- I wrote a composite node which outputs the world coordinates for each pixel. I need this for evaluation.

Monday, July 30th, 2007

- I figured out where in the Blender code to calculate ground truth for the tracker. Sadly, since the required data is not easily exported via the Python API, I'm going to do a one-off hack. Which is unfortunate, because this could be useful functionality for generating more testing data for other vision problems.

- For now I'll do the one-off hack and re-visit the issue after my thesis is done.

- This week I'm in Science paper death march mode, so definitely no updates! We (http://astrometry.net) are trying to get a paper into Science.

Thursday, July 19th, 2007

- Things have been very intense at work lately (working 9-midnight most days) so things are not moving on the libmv front at the moment...

Tuesday, July 17th, 2007

- I forgot to include a link to the .py file for the exported point cloud and cameras for the curious.

Sunday, July 15th, 2007

- I wrote code to save and dump a set of reconstructions, saving the trouble of re-running the solver on 3-frame subsets of the full sequence.

- The merging now gets to the end; however, there is a failure at some point, so all tracks past frame 25 get lost. This is not good!

- Found the bug: I wasn't forcing the right ordering of the points going to the bundle adjuster. It only worked before because of the way the STL happened to order things; that was just a coincidence.

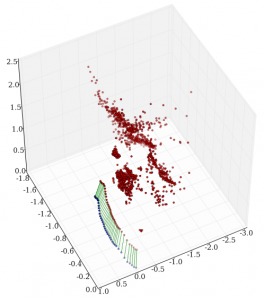

- After some other tweaks and such, the reconstruction ran right to the end. My code to force the points to be in front of the cameras appears to work! The orientation is wrong, so I'll have to fix that tomorrow, but other than that it looks fantastic.

- I'm debating how to proceed; should I implement a two-frame-plus-resection method to compare against the three-frame kernel strategy? I could trivially implement a two-frame merging strategy too, since I have so much of the code in place already.

Saturday, July 14th, 2007

- In the process of figuring out what was wrong with the exporter, I found out it wasn't the exporter which was the problem, it was that I was not forcing the cameras to face forward during my solving. The solution is that I need to enforce chirality.

- I wrote an iterative solution to force points to be in front of cameras by doing a breadth-first traversal of the bipartite graph formed by the visibility matrix of points/cameras. It is non-trivial, which is why I was not surprised it didn't work at first. Then I managed to prove that the algorithm must terminate at the correct solution, provided the bipartite graph is connected (i.e. it does not split into two or more disconnected components).

- I kept looking for a bug in my code, because clearly the reconstruction must be fully connected. Eventually, however, I became suspicious. I already had code to export a track matrix, and it showed that the graph was connected. But I had assumed that the visibility matrix was the same as the track matrix (as it should be by construction), so I wrote an exporter to show the actual track matrix as the reconstruction code sees it (I have separate TrackedSequence objects and Reconstruction objects). Sure enough, the reconstruction's track matrix was disconnected. This was because of a && which should have been a || in a boolean expression in my merge code.

- I then realized I was also missing two cases from the merge code, which I fixed.

- Unfortunately, this means that in the projective merge, when it looked like the alignment of subsequences was working, in fact it was not. When I wrote the projective RANSAC code, I noticed that as long as I chose a good two-point-match, I didn't need to prune the resulting merged reconstruction. I assumed this was because I was doing a rather thorough job of eliminating any outliers before the merge stage (in the individual 3-frame reconstructions).

- With the fixed merge code, the reconstruction would fail on the 37-frame test sequence because eventually one of the points which was visible in two distinct reconstructions that were merged would become a total outlier in the transformed reconstruction.

- I added 'prune-outliers' code which estimates outliers and prunes them after a transform. This helped; however, the knob which decides how to prune outliers is tricky to set. Set it too high, and only a few hundred points make it through the whole reconstruction process; set it too low, and the reconstruction fails at some point because of gross errors.

It is worth noting that I am completely shocked that the camera track and point cloud (which I don't have images of here, but did explore in the Blender export) look remarkably good, considering the track matrix was completely severed! Every 2 frames the tracks would be cut off; the only thing keeping the reconstruction coherent was the overlapping cameras.

I am trying to figure out if there are other problems with my outlier-trimming code (which collects a set of outliers, then rebuilds a new reconstruction, because delete operations are very inefficient).

I also started writing a bipartite graph class, which will handle many of the operations I've needed up to now.
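The connectivity condition used in the chirality proof can be checked with a plain breadth-first search over the camera/point visibility graph. This is a sketch with a hypothetical flat list of (camera, point) observations. It does not use libmv's actual TrackedSequence or Reconstruction classes.

```cpp
#include <cassert>
#include <queue>
#include <utility>
#include <vector>

// One observation: camera `first` sees point `second` (hypothetical layout).
using Observation = std::pair<int, int>;

// Returns true if the bipartite camera/point visibility graph has a single
// connected component. Cameras are nodes [0, num_cameras); point j is node
// num_cameras + j.
bool VisibilityGraphIsConnected(int num_cameras, int num_points,
                                const std::vector<Observation>& observations) {
  const int n = num_cameras + num_points;
  if (n == 0) return true;
  std::vector<std::vector<int>> adj(n);
  for (const Observation& obs : observations) {
    assert(obs.first >= 0 && obs.first < num_cameras);
    assert(obs.second >= 0 && obs.second < num_points);
    const int cam = obs.first, pt = num_cameras + obs.second;
    adj[cam].push_back(pt);
    adj[pt].push_back(cam);
  }
  // Breadth-first search from node 0, counting the nodes it reaches.
  std::vector<bool> seen(n, false);
  std::queue<int> frontier;
  frontier.push(0);
  seen[0] = true;
  int visited = 1;
  while (!frontier.empty()) {
    const int u = frontier.front();
    frontier.pop();
    for (int v : adj[u]) {
      if (!seen[v]) {
        seen[v] = true;
        ++visited;
        frontier.push(v);
      }
    }
  }
  return visited == n;
}
```

A track matrix severed every two frames, as the && / || bug produced, splits into more than one component, so the function returns false for it.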

Wednesday, July 4th, 2007

- Spent more time investigating the camera export problem. I'm still mystified about why it's wrong!

Tuesday, July 3rd, 2007

- First day at Google!

Monday, July 2nd, 2007

- Wrote Blender exporter. I just bake out a simple .py file which recreates the scene. I used the output of Voodoo tracker as a starting point, though I am not yet making IPO curves because the camera export is currently not right.

- Noticed that before forcing orthogonality the mean reprojection error was 0.3 pixels; afterwards it was 13 pixels. That is before bundle adjustment, but still, that's pretty bad. I'm not sure how to cope with this yet.

- GASP! Bundle adjustment pulled the RMS reprojection error down to 0.1 pixels! In other news: the bundle adjustment code worked on the first try.

- Wrote EuclideanReconstruction class and associated boilerplate.

- Wrote converter code to take a projective reconstruction in metric form, and create a euclidean reconstruction which is closest (which means enforcing the orthogonality constraint).

- For the record: the closest orthogonal matrix to a matrix A is easily found via SVD: for A = u*s*v^T, the closest (in the Frobenius sense) orthogonal matrix is G = u*v^T.

- Wrote euclidean bundle adjustment routine
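For a 3x3 matrix, the closest orthogonal matrix can also be computed without an SVD routine. The sketch below swaps in a different technique: the Newton iteration for the orthogonal polar factor, X <- (X + X^-T)/2. For any nonsingular A, it converges to the same u*v^T as the SVD formula. It is a standalone illustration with hypothetical names, not the converter code described above.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;

// Inverse-transpose via cofactors: inverse(A) = cof(A)^T / det(A), so
// inverse(A)^T = cof(A) / det(A). The cyclic index trick yields signed cofactors.
Mat3 InverseTranspose(const Mat3& a) {
  Mat3 c;
  for (int i = 0; i < 3; ++i) {
    for (int j = 0; j < 3; ++j) {
      const int i1 = (i + 1) % 3, i2 = (i + 2) % 3;
      const int j1 = (j + 1) % 3, j2 = (j + 2) % 3;
      c[i][j] = a[i1][j1] * a[i2][j2] - a[i1][j2] * a[i2][j1];
    }
  }
  const double det = a[0][0] * c[0][0] + a[0][1] * c[0][1] + a[0][2] * c[0][2];
  assert(std::fabs(det) > 1e-12);  // the polar iteration needs a nonsingular A
  for (auto& row : c)
    for (double& x : row) x /= det;
  return c;
}

// Closest orthogonal matrix (Frobenius norm) to a nonsingular 3x3 matrix,
// computed with the Newton polar iteration instead of an SVD.
Mat3 ClosestOrthogonal(Mat3 x, int max_iters = 50, double tol = 1e-12) {
  for (int it = 0; it < max_iters; ++it) {
    const Mat3 y = InverseTranspose(x);
    double change = 0.0;
    for (int i = 0; i < 3; ++i) {
      for (int j = 0; j < 3; ++j) {
        const double next = 0.5 * (x[i][j] + y[i][j]);
        change += std::fabs(next - x[i][j]);
        x[i][j] = next;
      }
    }
    if (change < tol) break;  // quadratic convergence: usually under 10 steps
  }
  return x;
}
```

As with u*v^T, the result has determinant -1 when det(A) < 0. A caller that needs a proper rotation still has to check the sign.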

Sunday, July 1st, 2007

- I'm now in Mountain View, California! I start at Google on Tuesday, so things are slowing down now.

- I got the file I/O working (with crunchy unit tests) for saving tracks and reconstructions (though not collections of reconstructions; I may need to do that). I haven't switched to FITS yet because I had some issues with the QFITS documentation, but it will be somewhat straightforward to jam my existing serialized data into a QFITS file and add metadata goodness.

- At YYZ / on the plane / at PHL / on the other plane I wrote hierarchical merging code which (in theory) should work better than the sequential merging I have now

- And indeed, it does! For the 37-frame test sequence I'm using, it gets 0.2 pixels mean reprojection error, compared to 0.4 pixels with sequential merging.

- Furthermore, the first three columns (aka the R matrix) are much closer to orthogonal after metric upgrade than they are with the sequential merging code

- Sadly, the hierarchical code failed when I used 100 RANSAC samples for the merge; I bumped it to 500 and then it works. Right now the merging code is not terribly efficient!

- Right now, if a track passes the initial outlier rejection phase (when triplets are reconstructed) then it will never vanish. Even though I have a low reprojection error with hierarchical reconstruction, there are still some points hanging around with >3px reprojection error. I should add a trimming phase to the merge which drops points which are still not within error tolerance of the previous reconstructions.

- I wrote a utility that makes a projective reconstruction from a tracked sequence file, and has switches for things like sequential vs hierarchical merging, number of RANSAC rounds for projective merges, etc.

- I started writing the code to convert from a rotation matrix to a Rodrigues rotation vector; however, I am not sure how to gracefully handle the singularity at 180 degrees. There is some code online, but I do not trust it (a matrix is not an identity just because it is within 0.1 on all elements of a true identity matrix!).
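One way to handle the 180 degree case is to stop relying on the skew-symmetric part of R, which vanishes there, once the angle passes 90 degrees. Past that point, the axis can be recovered from the symmetric part instead. A sketch, with hypothetical names and a plain array matrix type:

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;
using Vec3 = std::array<double, 3>;

// Converts a rotation matrix R to a Rodrigues (axis * angle) vector.
// Near 180 degrees the axis comes from the symmetric part of R:
//   (R + R^T) / 2 - cos(theta) * I = (1 - cos(theta)) * n * n^T.
Vec3 RotationToRodrigues(const Mat3& r) {
  double c = (r[0][0] + r[1][1] + r[2][2] - 1.0) / 2.0;
  c = std::max(-1.0, std::min(1.0, c));  // guard acos against round-off
  const double theta = std::acos(c);
  // Skew part: s = 2 * sin(theta) * n.
  const Vec3 s = {r[2][1] - r[1][2], r[0][2] - r[2][0], r[1][0] - r[0][1]};

  if (theta < 1e-8) {
    // Small angle: theta / (2 sin theta) -> 1/2.
    return {0.5 * s[0], 0.5 * s[1], 0.5 * s[2]};
  }
  if (c > 0.0) {
    // Below 90 degrees sin(theta) is safely away from zero.
    const double k = theta / (2.0 * std::sin(theta));
    return {k * s[0], k * s[1], k * s[2]};
  }
  // 90 degrees or more: B = (1 - c) * n * n^T.
  Mat3 b;
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 3; ++j)
      b[i][j] = 0.5 * (r[i][j] + r[j][i]) - (i == j ? c : 0.0);
  int k = 0;  // row with the largest diagonal entry is best conditioned
  for (int i = 1; i < 3; ++i)
    if (b[i][i] > b[k][k]) k = i;
  const double denom = std::sqrt(b[k][k] * (1.0 - c));
  Vec3 n = {b[k][0] / denom, b[k][1] / denom, b[k][2] / denom};
  // B fixes n only up to sign; match the (possibly tiny) skew part.
  // At exactly 180 degrees both signs describe the same rotation.
  if (n[0] * s[0] + n[1] * s[1] + n[2] * s[2] < 0.0)
    for (double& x : n) x = -x;
  return {theta * n[0], theta * n[1], theta * n[2]};
}
```

The axis is never derived from a tolerance test against the identity. The only thresholds are on the angle itself, which avoids the "within 0.1 of identity" problem.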

Monday, June 25th, 2007

- Pushed qfits IO library into libmv, integrated build, etc. I thought to myself, wouldn't it be funny to use FITS for all the files like we do at astrometry.net? Wait, that's not crazy!

- However, the QFITS API isn't the nicest thing around, so I wrote a packed binary file format for tracks and feature locations

- Last few days I've been dealing with pre-Google-internship issues and some other personal stuff, which has slowed progress.

Tuesday, June 19th, 2007

- Got C++ metric upgrade code to work

- I need to implement serialization because running the whole reconstruction stack every time is way too slow!

Monday, June 18th, 2007

- This past week I've implemented a couple different metric upgrade strategies in Python with the help of Maxima and Yacas symbolic algebra packages.

- Yesterday and today I implemented the one that seems to work the best in C++, though it remains to be tested.

- I got the Voodoo tracker autocalibration code to work by tweaking the weights used in their linear solution. This suggests that it may be possible to use it for metric upgrade after all.

- In investigating the metric upgrade and autocalibration, it is clear that some of my problems come from my naive merging strategy (i.e. sequential merging) rather than smarter hierarchical merging which distributes error over the entire sequence.

- I also found that running various numeric conditioning on the cameras often made the solutions *worse* compared to the original cameras. I am still not sure why this is the case.

Sunday, June 10th, 2007

- Rewrote some of my Python autocalibration code while testing the best method for performing a metric upgrade, given the calibration data. My first method didn't succeed on the 35-frame reconstruction, and after fully implementing the algorithm used in Voodoo tracker, I find that doesn't work well either.

Friday, June 8th, 2007

- Discovered to my chagrin that my fancy new MLESAC variant, where I estimate both the noise level and the inlier fraction rather than just the inlier fraction, has already been published, and indeed, as I found, it works very well. I think their method of estimating sigma is not as good as mine, though; I use full expectation maximization, whereas they use some other method which I don't understand yet (it looks hacky).

- Thought about where to go next on my project.

- Debated implementing one of the CVPR 2007 SDP-based autocalibration methods.

- The first is by a whole pile of people, and appears to be the first autocalibration paper which directly enforces all the constraints on the dual absolute quadric. It appears tricky to implement in C, as it uses a bunch of fancy MATLAB toolboxes for the linear matrix inequality (LMI) relaxation, as well as support libraries to solve the polynomial optimization via the LMI solver.

- The second doesn't look as scary, but does not estimate the principal point. They use a search around the center of the image to find the principal point. Probably not hard to implement, but slow.

Thursday, June 7th, 2007

- More API work

Wednesday, June 6th, 2007

- Discovered two exciting new papers on radial distortion determination from point correspondences.

- The first is from 2005; I'm surprised I didn't find it before. In the paper, Li and Hartley decouple the estimation of radial distortion from the fundamental matrix calculation by using the det(F)=0 constraint. The implementation is particularly simple: it involves repeatedly solving many sixth-degree polynomials, then doing kernel density estimation on the resulting roots to find the best estimate of the distortion. I am going to try again to extend their method to the 7-point 3-view problem.

- In this paper by Zuzana Kukelova, a minimal solution to radial distortion and the F matrix from 8 point correspondences is developed. They also use kernel density estimation on the roots to find the best distortion estimate. However, the implementation looks very complicated; I will likely stick with the method in the Hartley paper.

- I realized that to upgrade from a projective reconstruction to a metric reconstruction given the correct K matrix, it is simply a matter of finding the dual absolute quadric Q_infty from K*K^T = P*Q_infty*P^T (up to a per-camera scale; the equations are linear in the entries of Q_infty, so a pseudo-inverse solves them), then finding H as the matrix such that H^-1*Q_infty*H^-T = I~, where I~ is the 4x4 identity matrix with the lower right element zero.

- I am probably going to give up on autocalibration for now.
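The root-selection step of the Li and Hartley approach, as summarized above, can be sketched as picking the candidate root with the highest Gaussian kernel density. This evaluates the density only at the samples themselves, which simplifies a full mode search. The bandwidth is an assumed free parameter, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Returns the candidate (e.g. the real distortion roots collected over many
// sampled point sets) with the highest Gaussian kernel density estimate.
double KernelDensityMode(const std::vector<double>& roots, double bandwidth) {
  assert(!roots.empty() && bandwidth > 0.0);
  double best_value = roots[0];
  double best_density = -1.0;
  for (double x : roots) {
    // Unnormalized density: constant factors do not move the argmax.
    double density = 0.0;
    for (double r : roots) {
      const double u = (x - r) / bandwidth;
      density += std::exp(-0.5 * u * u);
    }
    if (density > best_density) {
      best_density = density;
      best_value = x;
    }
  }
  return best_value;
}
```

Spurious roots from outlier samples are scattered, so they contribute little density, while roots from good samples cluster around the true distortion.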

Tuesday, June 5th, 2007

- Spent some more time staring at the VXL API. There is obviously a large amount of functionality there, but is it ever badly organized!

- Tried the 35 frame reconstruction in my autocalibration system. It didn't work. I'm investigating now.

- Also looked to see if there were any recent autocalibration papers of interest. There is one in CVPR 2007 which finds a minimal solution for radial distortion and the fundamental matrix with 8 points. This is exactly what I was working on the other day! Sadly, the paper is not available yet. I will probably take another crack at 7-point 3-view minimal distortion parameterization once I see how this paper handled it.

- First successful track of all 35 frames of my rendered test sequence!

- With 0.3 pixel mean reprojection error (or 0.5 RMS)

- Sadly, still in a projective frame.

- Squashed some bugs in the RANSAC projective merging code.

- It now works nicely, but it works by taking each sample (which is a pair of 4d points) and generating a single reprojection residual after a fit (in the single overlapping view). Strictly speaking, this is not what we want, because the transformed point actually generates a reprojection residual in every image where it appears in the other reconstruction. Ultimately, this means I need to refactor the RANSAC code to not assume 1 sample generates 1 residual.

- Found and fixed a subtle bug in my MeasurementVisitor code

- Added API redesign notes; though I'm putting that on hold until post-September.

- Checked out this summary of the competition. libmv has a long way to go!

- It appears designing a good API for libmv is a very tough task, because not only is libmv an API which exposes solver functionality, it is also an API that needs to be extensible by other researchers to add new solver functionality. I still haven't figured out how to do that cleanly with C++.

- Looked at how VXL handles some of the API issues. I still think VXL is an API disaster, but it gets a couple things right. The disaster part is largely because most of the code VXL has that I might want to use is scattered around the 'contrib' module, and is both badly documented and incomprehensibly organized (by organization that wrote the code and then by some abbreviation, rather than by functionality.)

- In the future, I may switch my current system for storing tracks and features. This is why my current visitor pattern is good; it lets me abstract the iteration from the underlying representation.

Monday, June 4th, 2007

- Rewatched the excellent presentation by Joshua Bloch on API design. I highly recommend it to anyone designing an API. It made me realize some errors I've made in my new libmv design.

- In response I started writing use-cases, a preliminary API, and some trial code written to the not-yet-written API. This will have to wait until after I graduate; but at that time, I will refactor the codebase again. Writing up the use cases clearly illustrated the need for some things I hadn't thought much about before, such as the API for applications wishing to integrate solving rather than doing research, and how to provide an evaluation framework for comparing new algorithms to old ones.

- Found the bug: somehow a PointStructure is sneaking into the ProjectiveReconstruction which has no measurements; this should be impossible. Investigating.

Sunday, June 3rd, 2007

- Spent some time trying (and failing) to track down weird merge bug

- Finished implementation of the ProjectiveAlignmentRANSACDriver to robustly estimate an aligning transformation if the direct method fails

- Testing remains

- Right now I am still fitting the two Rayleigh distributions; however, this is strictly incorrect because now each residual is a sum over the errors for a particular choice of point pairing. The underlying problem is that for alignment, a choice of sample 'subset' gives rise to many residuals per sample rather than a single one. My current RANSAC class assumes samples map 1-1 to residuals. I'll have to think about how to generalize this in the RANSAC class, as this will likely come up again in the future.

- Added code to recursively copy a reconstruction

- Wrote robust_oneview_align which tries the direct method of alignment, but checks error stats to see if a failure occurred and runs the RANSAC version. Right now I'm using 2*quadrature as the bounds on the new sigma2.
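For 2D reprojection error magnitudes, the Rayleigh sigma2 that these bounds apply to has a closed-form maximum-likelihood estimate: sigma2 = sum(r_i^2) / (2N). The merge check below is hypothetical. It does not implement the "2*quadrature" rule; instead it uses a caller-chosen factor that reproduces the 0.5 to 0.75 example from the June 2 entry.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Maximum-likelihood Rayleigh sigma^2 for reprojection error magnitudes:
// sigma^2 = sum(r_i^2) / (2N).
double RayleighSigma2(const std::vector<double>& residuals) {
  assert(!residuals.empty());
  double sum_sq = 0.0;
  for (double r : residuals) sum_sq += r * r;
  return sum_sq / (2.0 * static_cast<double>(residuals.size()));
}

// Hypothetical sanity check for a merge: reject it if the merged sigma^2
// exceeds `factor` times the worse of the two inputs. With factor = 1.5,
// two reconstructions at 0.5 allow at most 0.75 after merging.
bool MergeLooksSane(double merged_sigma2, double sigma2_a, double sigma2_b,
                    double factor = 1.5) {
  return merged_sigma2 <= factor * std::max(sigma2_a, sigma2_b);
}
```

Because the estimate needs no iteration, it is cheap enough to recompute after every merge.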

Saturday, June 2nd, 2007

- Added error calculation to the reconstruction class which estimates a sigma2 (for a Rayleigh distribution) for each point, and for the overall reprojection error.

- I will use this when merging to detect a merge gone bad; for example, if the sigma2 of both reconstructions is 0.5 pixels, then I don't expect the merge to have > 0.75 sigma2.

- I moved some furniture around such that I can use the merge code as a RANSAC kernel

- Surprisingly, you only need two point correspondences to calculate H between them if your two reconstructions have a 1-view overlap!

- Reread the 2006 overview paper by Zisserman and Fitzgibbon

- Realized a neat way to integrate radial distortion with trifocal estimation via Zisserman's combined fundamental/radial estimation paper. I propose:

- Choose 9 tracks across 3 frames

- Calculate F and lambda as in Fitzgibbon's paper between the first two frames

- Undistort the 9 points in all three images

- Run the 6-point 3-view algorithm on a subset of the 9 undistorted points

- This becomes the RANSAC kernel; for each fit via above, use the following to calculate the distribution of residuals (on which MLESAC runs)

- Optimally transfer all points from the first two views to the third by

- undistort points in all images

- triangulate from first two to get 4d homogeneous point

- reproject in 3rd image

- re-distort point location and calculate residual

- This is the RANSAC kernel. Unfortunately, it will take much longer to converge than the 6-point algorithm. In theory, there is enough information to estimate lambda from 7 points in 3 images, but I can't figure out how.

- I checked out OpenCV CVS; it looks like OpenCV is starting to grow some trifocal code and other non-trivial multiview geometry code. It's clearly not ready yet (or documented at all), but I will keep an eye on this. They are using a variant based on reduced fundamental matrices, rather than how mine works.
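The undistort and re-distort steps in the kernel above can be sketched with the division model from Fitzgibbon's paper, x_u = x_d / (1 + lambda*|x_d|^2). Coordinates are measured from the distortion center, which is assumed known here. Re-distorting means solving a quadratic for the radius, and the closed form below picks the root that reduces to the identity as lambda goes to zero. Function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<double, 2>;

// Division model: x_u = x_d / (1 + lambda * |x_d|^2), with x_d measured from
// the distortion center.
Vec2 Undistort(const Vec2& xd, double lambda) {
  const double denom = 1.0 + lambda * (xd[0] * xd[0] + xd[1] * xd[1]);
  assert(denom != 0.0);
  return {xd[0] / denom, xd[1] / denom};
}

// Inverse of Undistort. With x_d = k * x_u the radii satisfy
// lambda * r_u * r_d^2 - r_d + r_u = 0; the root that tends to r_u as
// lambda -> 0 gives k = 2 / (1 + sqrt(1 - 4 * lambda * r_u^2)).
// Returns false when the discriminant is negative (no real solution).
bool Redistort(const Vec2& xu, double lambda, Vec2* xd) {
  const double disc = 1.0 - 4.0 * lambda * (xu[0] * xu[0] + xu[1] * xu[1]);
  if (disc < 0.0) return false;
  const double k = 2.0 / (1.0 + std::sqrt(disc));
  *xd = Vec2{xu[0] * k, xu[1] * k};
  return true;
}
```

Writing the root as 2*r_u / (1 + sqrt(...)) instead of the textbook (1 - sqrt(...)) / (2*lambda*r_u) avoids dividing by lambda. It stays accurate when the distortion is tiny.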

Friday, June 1st, 2007

- Reworked python connection code; now I can plot (slowly) the tracks across time and the reprojection errors. Sadly matplotlib is slooooooooooow if you have to manually draw lines in a python loop.

- I tried the most naive method for making a large reconstruction: sequential one-frame merging, aka reconstruct triples with a one-frame overlap and merge them as you go along. It seems to work well up until there are about 9 frames, then an outlier must somehow creep in, causing the mean reprojection error to go from 0.4 pixels to 300 pixels.

- Likely the solution is to add both hierarchical rather than sequential merging, and also to detect when a merge is going to introduce significant errors and either

- remove the offending points or if that doesn't work

- fail miserably

- I definitely need to figure out how to properly propagate covariances. Right now I am not storing the error stats in each sub-reconstruction; this might be an easy way to reject new outliers in a parameter free way.


- Projective merging works. First 5-frame reconstruction with a reprojection error of 0.2 pixels constructed!

- Note: ublas aliasing rules break (i.e. no temporary copy is made), resulting in invalid computations, when you dereference a pointer to a matrix and use assign() with an expression that reads the same object. The following code was broken for me: x->assign(dot(M,*x)). I had to make a temporary to store the product first.

- Note: Don't use gesdd from the mimas bindings; it dies in weird ways. Instead use gesvd.

- Note: If code in a library is exiting on an error condition (rather than segfaulting or otherwise causing an error which allows GDB to catch the stack), one can still figure out where the call was by putting a breakpoint on exit. In retrospect, this is obvious, but previously I've wasted time debugging OpenCV programs which would 'crash' by exiting, leaving no meaningful stack to figure out where the offending call is. Now I know to simply 'b exit' in gdb.

- Fixed a few small bugs in bundle adjustment code

- Implemented the one-view merging code; still debugging.

- I reorganized some of the code and implemented a visitor class to abstract some of the iteration ugliness of the data structures; this should make changing the underlying data structures easier in the future if need be. The code is also shorter.

- No posts and slower work for a while because I went to Montreal and Ottawa for a few days to visit friends and present at CRV2007.

- Though I did spend some time trying to derive a version of the six-point three-view algorithm which also estimates radial distortion using the division model. I definitely got some of the way, including making a 'division projection camera' which is a 3x5 matrix, and finding a 2-parameter pencil of division cameras for a single view of 7 points. In theory, 7 points in 3 views is an exact minimal parameterization for three cameras, the radial distortion coefficient (for each view) and the world positions for the 7 points. However I am stuck on how to discover the world position of the last two points, because the rank-2 constraint used in the original algorithm does not apply in this case.
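A plain C++ illustration of why the temporary in the ublas note is needed (no ublas involved). When the destination of a matrix-vector product is also its input and no copy is made, later rows read values that earlier rows have already overwritten. The function names are hypothetical.

```cpp
#include <array>
#include <cassert>

using Mat2 = std::array<std::array<double, 2>, 2>;
using Vec2 = std::array<double, 2>;

// Buggy: writes y = M * x directly into x, so row 1 reads an x[0] that row 0
// has already overwritten (the same effect as an assign() that skips the copy).
void MultiplyInPlaceAliased(const Mat2& m, Vec2& x) {
  for (int i = 0; i < 2; ++i) x[i] = m[i][0] * x[0] + m[i][1] * x[1];
}

// Fixed: compute the product into a temporary first, then copy it back.
void MultiplyInPlace(const Mat2& m, Vec2& x) {
  Vec2 tmp{};
  for (int i = 0; i < 2; ++i) tmp[i] = m[i][0] * x[0] + m[i][1] * x[1];
  x = tmp;
}
```

With M set to the swap matrix and x = (1, 2), the fixed version gives (2, 1), while the aliased version gives (2, 2).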

Thursday, May 24th, 2007

- Optimal 2-view triangulation code works. Found a bug which makes me suspicious it ever worked in my old codebase.

Thursday, May 24th, 2007

- Bundle adjustment works.

- Debugging optimal triangulation code.

Wednesday, May 23rd, 2007

- Mainly debugging and reading more about general sparse least squares optimization.

Tuesday, May 22nd, 2007

- At a meeting with James, decided that later on I will try a different strategy in the EM fitting for dropping points; I will introduce an 'auxiliary' distribution which consumes other points

- Though first I will make more diagnostic plots.

- More SBA debugging

- Found this paper about Carmen, an OOP-style reconstruction framework. I realize now I should change my object hierarchy (which is already close to what Carmen has) to also have mapping classes for handling camera/structure pairs.

- Spent some time looking at the SBA code. It's not actually that bad. I realize it is actually not that hard to implement my own sparse bundle adjustment, and if I structure it like Carmen, it will be more general than SBA (and easier to extend). However, it'll have to wait until after I defend (not enough time for it now).

- For the curious, check out Triggs et al's seminal bundle adjustment paper.

Monday, May 21st, 2007

- Wrote projective factorization code (totally untested)

- Fixed up the build system so it doesn't spew tons of nasty compile lines; instead, only each filename is printed.

- Got RANSAC to run

- After much trouble with the EM mixture model fitting, I realized the problems all stem from when the probability for a particular sample vanishes across all mixing components. I tried a few strategies to deal with this; but eventually, I found the best method is to simply drop the sample from further iterations. With this modification, the EM always converges, at least in my testing so far.

- Added SBA and bundle adjustment for projective reconstructions

- Still buggy, for some reason the initial error is very wrong.

Sunday, May 20th, 2007

- Wrote general pseudo inverse via SVD

- Got six point code running; need to make tests actually verify results.

- Added visibility tracking code via STL multimaps.

- After much debugging, the six-point three-view code runs.
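A sketch of what multimap-based visibility tracking can look like, with a second multimap for the reverse lookup. The struct and method names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical visibility index: camera id -> ids of the points it sees, plus
// the reverse point id -> camera ids mapping.
struct Visibility {
  std::multimap<int, int> points_by_camera;
  std::multimap<int, int> cameras_by_point;

  void Add(int camera, int point) {
    points_by_camera.emplace(camera, point);
    cameras_by_point.emplace(point, camera);
  }

  // All points seen by one camera, via equal_range over the equal keys.
  std::vector<int> PointsSeenBy(int camera) const {
    std::vector<int> result;
    auto range = points_by_camera.equal_range(camera);
    for (auto it = range.first; it != range.second; ++it)
      result.push_back(it->second);
    return result;
  }

  std::size_t NumCamerasSeeing(int point) const {
    return cameras_by_point.count(point);
  }
};
```

Keeping both directions makes the per-camera and per-point queries logarithmic, at the cost of storing each observation twice.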

Saturday, May 19th, 2007

- Got the mimas lapack wrappers to compile after some wrangling

- Added more of my own wrappers to make C++ behave more like MATLAB / Python

- Big port push; ported most of the track_estimation.c code to libmv; including the six-point-three-view code, and optimal two-view triangulation code.

- I also fleshed out some design ideas for the RANSAC framework.

- Looks like I'm a bit behind my goal for tomorrow night but not insanely so.

Friday, May 18th, 2007

- Ported EM fitting framework for fitting residuals; this time the system is MUCH more flexible.

- To fit a mixture, just pass in a vector<> of Distribution*'s, which are then used to fit an EM mixture model where the unobserved variables are the mixing coefficients and the class membership variables, and the parameters to be estimated are inside the Distribution objects themselves (which only need maximize_parameters(), probability(), and log_probability() functions). So far I have Rayleigh and UpwardRamp distributions.
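A condensed sketch of such a mixture fit. The names Distribution, Rayleigh, probability(), log_probability() and maximize_parameters() come from the description above. The signatures, especially the weighted maximize_parameters, are assumptions. A hypothetical uniform outlier component stands in for UpwardRamp, whose exact form isn't given. The sketch also folds in two later changes from this diary: dropping samples whose probability vanishes under every component, and a maximum discard fraction (default 60%).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Component interface; method names follow the diary, signatures are assumed.
class Distribution {
 public:
  virtual ~Distribution() = default;
  virtual double probability(double x) const = 0;
  virtual double log_probability(double x) const { return std::log(probability(x)); }
  // Weighted maximum-likelihood update of this component's own parameters.
  virtual void maximize_parameters(const std::vector<double>& xs,
                                   const std::vector<double>& weights) = 0;
};

class Rayleigh : public Distribution {
 public:
  explicit Rayleigh(double sigma2) : sigma2_(sigma2) {}
  double probability(double x) const override {
    if (x < 0.0) return 0.0;
    return x / sigma2_ * std::exp(-x * x / (2.0 * sigma2_));
  }
  void maximize_parameters(const std::vector<double>& xs,
                           const std::vector<double>& weights) override {
    double num = 0.0, den = 0.0;
    for (std::size_t i = 0; i < xs.size(); ++i) {
      num += weights[i] * xs[i] * xs[i];
      den += weights[i];
    }
    if (den > 0.0) sigma2_ = num / (2.0 * den);
  }
  double sigma2() const { return sigma2_; }

 private:
  double sigma2_;
};

// Hypothetical outlier component: uniform on [0, max_residual], nothing to fit.
class Uniform : public Distribution {
 public:
  explicit Uniform(double max_residual) : max_(max_residual) {}
  double probability(double x) const override {
    return (x >= 0.0 && x <= max_) ? 1.0 / max_ : 0.0;
  }
  void maximize_parameters(const std::vector<double>&,
                           const std::vector<double>&) override {}

 private:
  double max_;
};

// EM fit of mixing coefficients and component parameters. Returns false if
// more than max_discard_fraction of the samples had to be dropped.
bool FitMixture(const std::vector<double>& xs,
                const std::vector<Distribution*>& components,
                std::vector<double>* mixing, int iterations = 100,
                double max_discard_fraction = 0.6) {
  const std::size_t k = components.size(), n = xs.size();
  assert(k > 0 && n > 0);
  mixing->assign(k, 1.0 / static_cast<double>(k));
  std::vector<bool> active(n, true);
  std::vector<std::vector<double>> resp(k, std::vector<double>(n, 0.0));
  for (int it = 0; it < iterations; ++it) {
    // E-step: responsibilities of each component for each active sample.
    std::size_t discarded = 0;
    for (std::size_t i = 0; i < n; ++i) {
      double total = 0.0;
      for (std::size_t j = 0; j < k; ++j) {
        resp[j][i] = active[i] ? (*mixing)[j] * components[j]->probability(xs[i]) : 0.0;
        total += resp[j][i];
      }
      // Probability vanished under every component: drop the sample for good.
      if (total <= 0.0) active[i] = false;
      if (!active[i]) {
        ++discarded;
        continue;
      }
      for (std::size_t j = 0; j < k; ++j) resp[j][i] /= total;
    }
    if (discarded == n ||
        static_cast<double>(discarded) > max_discard_fraction * static_cast<double>(n))
      return false;
    // M-step: mixing coefficients over the kept samples, then each component.
    const double kept = static_cast<double>(n - discarded);
    for (std::size_t j = 0; j < k; ++j) {
      double sum = 0.0;
      for (double r : resp[j]) sum += r;
      (*mixing)[j] = sum / kept;
      components[j]->maximize_parameters(xs, resp[j]);
    }
  }
  return true;
}
```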

Thursday, May 17th, 2007

- Found silly bug in plotting code (needed 600-y instead of y.)

- [DONE] Moving onto compressing 1-frame tracks.

- Found some subtle memory problems; C++ can be complex at times.

- Note: A reference to an element of a vector<...> becomes invalid if that vector reallocates (e.g. when it is resized or push_back grows it past its capacity).

- Note: Be extra careful when inheriting from the standard containers. They do not have virtual destructors, so deleting a derived object through a pointer to the base container is undefined behavior.

- Reference counted pointers (aka boost's shared_ptr<>) are quite neat; I may switch my code to use them soon.

- Started MLESAC framework.
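The two container notes above can be illustrated together with a small sketch (the `Track` and `TrackList` names are hypothetical). The vector is held as a member rather than inherited from, so nobody can delete the wrapper through a base pointer that lacks a virtual destructor, and callers keep indices rather than references, since a `push_back` that reallocates invalidates every reference, pointer and iterator into the vector.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Track {
  int id;
  int length;
};

// Composition instead of inheriting from std::vector<Track>.
class TrackList {
 public:
  // Returns an index that, unlike a Track&, survives reallocation
  // (it stays valid as long as elements are never erased).
  std::size_t Add(int id) {
    tracks_.push_back(Track{id, 0});
    return tracks_.size() - 1;
  }
  Track& operator[](std::size_t i) {
    assert(i < tracks_.size());
    return tracks_[i];
  }
  const Track& operator[](std::size_t i) const {
    assert(i < tracks_.size());
    return tracks_[i];
  }
  std::size_t size() const { return tracks_.size(); }

 private:
  std::vector<Track> tracks_;
};
```

Holding `Track& t = list[first];` across the loop in the usage below would be the bug from the note; looking the element up by index after the insertions is safe.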

Wednesday, May 16th, 2007

- Reorganized test code. Wrote tests for homogeneous parameterizations and jacobian of said parameterization.

- KLT tracker integration.

- Rewrote the schedule section of this page to take the recode into account.

- Compiled a Boost::python test module. I'm going to use boost::python to make libmv a module in Python.

- Found num_util to make working with numpy arrays easier.

- Talked with #blender about image processing options; decided to stick with purely grayscale for libmv and expect the controlling application to load images.

- Re-rendered the test_track.blend sequence, converted it to PGM, and unceremoniously checked the resulting 50 MB of binary files directly into my thesis bzr repo.

- Integrated KLT tracking code; it now builds the relevant structures and connects tracks together.

- Boost::Python integration; discovered that to get numpy passing working, I need to change:

ar::array::set_module_and_type("numpy", "ArrayType");

to

ar::array::set_module_and_type("numpy", "ndarray");

in the num_util example code.

- Used this example to get the Python module to build with Boost::python under scons.

- The (buggy) Python connection is working now; I can run the KLT tracker from Python and look at the track lengths.

Tuesday, May 15th, 2007

- The new project will be named libmv, for multiview.

- Set up hosting with launchpad. Bugs, questions, and bzr branches are hosted there now.

- For anyone curious about the code, use the launchpad branch:

- Currently it's totally useless and is just a skeleton.

- Made cheesy logo (see launchpad page)

Monday, May 14th, 2007

- Finishing off python literal code

- Thought about the future of the project. Discussed integration issues with the blender people, and I realize that depending on OpenCV is not so good.

- Furthermore, the limitations of the current C based implementation are starting to sink in. This has really slowed down my coding today.

- If I want to build a framework for other vision researchers, then depending on Blender is not good.

- C++ is the real way to do this project, which was hammered home by reading more of VXL's source. VXL takes some things much too far in its object orientation, especially by reimplementing the STL; however, they have made some of the right abstractions.

- Unit testing with the current code is hard because of its dependence on much of Blender's architecture, so I started looking at Boost::test and other testing frameworks. If I am to convert my existing OpenCV C code to C++, I had better unit test it as I go! Then I found:

- Finally, the unit test library I've been waiting for: testsoon. It takes exactly the philosophy I do with respect to tests; it has incredibly minimal boilerplate and no manual test registration. It supports suites and fixtures. It is a single header file. It uses scons to build by default, and uses mercurial for distributed source code management. I like everything about it already.

- uBLAS is a much nicer choice than OpenCV's matrix primitives, albeit without a ready-made implementation of SVD or overconstrained Ax=b solvers.

- UPDATE: mimas, a Boost-based vision library, has wrapped LAPACK for SVD and least squares, among other things. This is exactly what I need. I'll probably take just these parts of mimas, as it has swaths of other functionality that I don't need.

- testsoon depends on Boost

- Boost has boost::python for extremely trivial interfacing of C++ and Python code.

Sunday, May 13th, 2007

- Considered the OpenGL camera model (which is the same as Blender's raster render camera model) to make sure the pseudo-depth calculation does not make it unsuitable for representation in the traditional pinhole model P = K[R|t]. In conclusion, the pseudo-depth (see this reading for more details) only affects the normalized z coordinate, which is used for deciding whether or not a particular triangle should be rasterized. The x and y image-plane locations are related by the straightforward mapping (x', y') = f*(x/z, y/z), which is easily represented as K = diag(f,f,1).

- Discovered that it's easy to switch Blender to use Python 2.4, and then plot via matplotlib in the embedded python interpreter.

- C strings can be exec'd in the Python environment via PyRun_String( string, Py_file_input, globaldict, localdict );, where Py_file_input is a constant and localdict can be NULL.

- Wrote code to dump registered set to a python literal
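To make the camera-model conclusion concrete, here is a minimal sketch of projecting a point with P = K[R|t] and K = diag(f,f,1), assuming plain `std::array` types rather than any particular matrix library (the `Project` name is hypothetical). Only the x and y image coordinates are produced, so OpenGL's pseudo-depth plays no part; sign conventions (OpenGL cameras look down -z) are left aside.

```cpp
#include <array>
#include <cassert>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Projects world point X with camera P = K[R|t], K = diag(f, f, 1).
// Returns image coordinates (x', y') = f * (x/z, y/z) in camera coordinates.
std::array<double, 2> Project(double f, const Mat3& R, const Vec3& t,
                              const Vec3& X) {
  Vec3 c;  // camera coordinates: R X + t
  for (int i = 0; i < 3; ++i)
    c[i] = R[i][0] * X[0] + R[i][1] * X[1] + R[i][2] * X[2] + t[i];
  assert(c[2] != 0.0);  // point must not lie in the camera's focal plane
  // Applying K = diag(f, f, 1) and dehomogenizing gives f * (x/z, y/z).
  return std::array<double, 2>{f * c[0] / c[2], f * c[1] / c[2]};
}
```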

Saturday, May 12th, 2007

- Found another subtle bug, which manifested itself in the drawing code: don't alter linked lists you're iterating over, or weird things might happen!

- Made drawing code look nicer
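The safe pattern for modifying a linked list during traversal is to read the `next` pointer before unlinking the current node. A minimal sketch with a hypothetical intrusive doubly linked list (`Node`, `List`, `Append`, `Unlink` and `RemoveIf` are illustrative names, not Blender's `ListBase` API):

```cpp
#include <cassert>
#include <cstddef>

struct Node {
  int value = 0;
  Node* prev = nullptr;
  Node* next = nullptr;
};

struct List {
  Node* first = nullptr;
  Node* last = nullptr;
};

// Allocates a node holding `value` and links it at the tail.
Node* Append(List& list, int value) {
  Node* n = new Node;
  n->value = value;
  n->prev = list.last;
  if (list.last) list.last->next = n; else list.first = n;
  list.last = n;
  return n;
}

void Unlink(List& list, Node* n) {
  assert(n != nullptr);
  if (n->prev) n->prev->next = n->next; else list.first = n->next;
  if (n->next) n->next->prev = n->prev; else list.last = n->prev;
  n->prev = n->next = nullptr;  // n->next is no longer usable after this
}

// Removes and deletes every node whose value matches pred.
template <typename Pred>
std::size_t RemoveIf(List& list, Pred pred) {
  std::size_t removed = 0;
  for (Node* n = list.first; n != nullptr;) {
    Node* next = n->next;  // saved before n is unlinked and freed
    if (pred(n->value)) {
      Unlink(list, n);
      delete n;
      ++removed;
    }
    n = next;
  }
  return removed;
}
```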

Friday, May 11th, 2007

- Nailed pointer bug for drawing nice circles to show reprojection error

- Wrapped more list traversal code -- the jazz with having a link structure (next/prev) offset by two in the TrackPoint3D is causing lots of problems. Should have used C++!

Thursday, May 10th, 2007

- [DONE] Investigating bug in MLESAC code discovered yesterday

- Not sure how the code worked before.

- For today:

- [DONE] Finish code review to find awkward data structure points before going to gym with Ilya to discuss

- [DONE] Fix MLESAC bug where the set for a new 3D point is not correctly assigned.

- Projective merging via cameras

- I added incremental bundle adjusts

- Fixed double free problem

- [DONE] Bundle adjust resulting merge

- Fix EM inlier/outlier estimation; total log prob should never be greater than zero!

- Drawing code to visualize reprojection error as circles via OpenGL

Wednesday, May 9th, 2007

- Went through code to find operations which are awkward given the current many-link structure. It looks like I'll need to switch to some sort of set-based system.

- Awkward operations

- n^2 loop to find correspondences across n frames (i.e. the set of tracks which exist in all of a given set of frames)

- Finding correspondences between 3D points across two RegisteredSets.

- Finding common cameras between two RegisteredSets.

- Adding 3D points to a set (because you need back pointers to the track structure's first occurrence in a frame).

- Discovered some bugs while reviewing code to find awkward operations
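One set-based alternative to the n^2 correspondence loop, sketched under the assumption that each frame stores a sorted `std::set` of the ids of the tracks visible in it (the `CommonTracks` name is hypothetical): intersect the per-frame sets with `std::set_intersection`, which is linear in the sizes of the two inputs at each step.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <set>
#include <vector>

// Returns the ids of tracks visible in every one of the given frames.
std::set<int> CommonTracks(const std::vector<std::set<int>>& frames) {
  if (frames.empty()) return {};
  std::set<int> common = frames[0];
  // Stop early once the running intersection is empty.
  for (std::size_t i = 1; i < frames.size() && !common.empty(); ++i) {
    std::set<int> next;
    std::set_intersection(common.begin(), common.end(),
                          frames[i].begin(), frames[i].end(),
                          std::inserter(next, next.end()));
    common.swap(next);
  }
  return common;
}
```

The same per-frame sets also make "common cameras between two RegisteredSets" a single intersection over camera ids.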

Tuesday, May 8th, 2007

- More debugging of jacobian calculation

- Still doesn't work

- Debated architecture issues with Ilya; tomorrow I will go over code to find awkward operations and see if there is a better way of structuring things.

Monday, May 7th, 2007

- More SBA work

- Wrote first pass for bundle adjustment of projective reconstructions.

- Initial version uses the SBA simple driver (which makes far more sense than using the expert driver; why do functions have such a bad rap?)

- Currently problems in traversing the data structures; I really need to figure out a better way of storing visibility and whatnot.

- I'm having problems getting LAPACK linked in; for now I just added an extra lib to BF_OPENCV_LIB instead of having a new BF_LAPACK_LIB (which I have, but it doesn't get added to the environment for some weird reason).

- First successful bundle adjustment of a triple.

- I encountered some precision issues when inverting the mapping from the disk to the sphere, which can likely be solved by transforming each reconstruction recovered by the six-point algorithm by its first camera, such that the first camera is P = [I|0].

- Implemented an analytic Jacobian for bundle adjustment in a projective frame. It is apparently not correct yet. I am also having problems because the six-point algorithm places points right on the edge of where they should be wrapped in the parameterization I'm using, so I will have to do the normalization now.

- Implemented normalization; indeed it fixed the problem near the boundary.

- I found some problems with the jacobian calc; it still doesn't work. The output of SBA's chkjac function is pretty useless, I am debating forking it.
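The normalization mentioned above can be sketched as follows, assuming the left 3x3 block M of the first camera P1 = [M | p4] is invertible. With H = [[M^-1, -M^-1 p4], [0 0 0 1]] we get P1 H = [I | 0]; every camera is then replaced by P H and every point by H^-1 X, which leaves all projections unchanged. Conveniently, H^-1 = [[M, p4], [0 0 0 1]], i.e. P1 with a [0 0 0 1] row appended. Type and function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat34 = std::array<std::array<double, 4>, 3>;
using Mat44 = std::array<std::array<double, 4>, 4>;
using Vec4 = std::array<double, 4>;

// Builds H such that P1 * H = [I | 0], where P1 = [M | p4], det(M) != 0.
Mat44 NormalizingTransform(const Mat34& m) {
  const double det = m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1]) -
                     m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0]) +
                     m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
  assert(std::abs(det) > 1e-12);
  double inv[3][3];  // M^-1 = adj(M) / det
  inv[0][0] = (m[1][1] * m[2][2] - m[1][2] * m[2][1]) / det;
  inv[0][1] = (m[0][2] * m[2][1] - m[0][1] * m[2][2]) / det;
  inv[0][2] = (m[0][1] * m[1][2] - m[0][2] * m[1][1]) / det;
  inv[1][0] = (m[1][2] * m[2][0] - m[1][0] * m[2][2]) / det;
  inv[1][1] = (m[0][0] * m[2][2] - m[0][2] * m[2][0]) / det;
  inv[1][2] = (m[0][2] * m[1][0] - m[0][0] * m[1][2]) / det;
  inv[2][0] = (m[1][0] * m[2][1] - m[1][1] * m[2][0]) / det;
  inv[2][1] = (m[0][1] * m[2][0] - m[0][0] * m[2][1]) / det;
  inv[2][2] = (m[0][0] * m[1][1] - m[0][1] * m[1][0]) / det;
  Mat44 H{};
  for (int i = 0; i < 3; ++i) {
    for (int j = 0; j < 3; ++j) H[i][j] = inv[i][j];
    // Last column: -M^-1 p4.
    H[i][3] = -(inv[i][0] * m[0][3] + inv[i][1] * m[1][3] + inv[i][2] * m[2][3]);
  }
  H[3][3] = 1.0;
  return H;
}

// New camera: P * H (3x4 times 4x4).
Mat34 TransformCamera(const Mat34& P, const Mat44& H) {
  Mat34 out{};
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 4; ++j)
      for (int k = 0; k < 4; ++k) out[i][j] += P[i][k] * H[k][j];
  return out;
}

// New point: H^-1 X, using H^-1 = [P1; 0 0 0 1].
Vec4 TransformPoint(const Mat34& P1, const Vec4& X) {
  Vec4 out{};
  for (int i = 0; i < 3; ++i)
    for (int k = 0; k < 4; ++k) out[i] += P1[i][k] * X[k];
  out[3] = X[3];
  return out;
}
```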

Sunday, May 6th, 2007

- Went to blender dev meeting

- Discussed Voodoo and Icarus with ccherrett; he's going to write a page about the competition.

- Discovered error in my Jacobian checking code; I had it right all along!

Saturday, May 5th 2007

- Took relevant derivatives for geometric error given a projective reconstruction

- Implemented said derivatives in Python; realized I need a fancy homogeneous parameterization. Found the Jacobian. Realized my Jacobian is wrong. Grr!

- Read SBA docs

- Made SBA SConscript and integrated it into blender's scons build system as an extern lib

- Found an error in HZ page 625: the correct parameterization of an n-dimensional homogeneous vector vbar is via an (n-1)-dimensional v, where vbar = (sinc(||v||/2)*v^T/2, cos(||v||/2))^T, which lies on the unit sphere in R^n. In HZ they are missing the divide by 2 on the first component. Also note that this assumes sinc(x) = sin(x)/x, not sin(pi*x)/(pi*x) as is the case in most numeric packages.
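The corrected parameterization can be checked numerically. This sketch (function names hypothetical) builds vbar from v using the unnormalized sinc, and inverts it with atan2. vbar has unit length because sinc(||v||/2)*||v||/2 = sin(||v||/2), so the squared norm is sin^2 + cos^2 = 1.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Unnormalized sinc: sin(x)/x, with a Taylor expansion near 0.
double Sinc(double x) {
  return std::abs(x) < 1e-8 ? 1.0 - x * x / 6.0 : std::sin(x) / x;
}

// Maps an (n-1)-vector v to a unit n-vector:
// vbar = (sinc(|v|/2) * v / 2, cos(|v|/2)).
std::vector<double> SphereFromMinimal(const std::vector<double>& v) {
  double norm = 0.0;
  for (double vi : v) norm += vi * vi;
  norm = std::sqrt(norm);
  const double s = Sinc(norm / 2.0) / 2.0;
  std::vector<double> vbar;
  vbar.reserve(v.size() + 1);
  for (double vi : v) vbar.push_back(s * vi);
  vbar.push_back(std::cos(norm / 2.0));
  return vbar;
}

// Inverse map for a unit vbar, returning v with |v| < 2*pi.
// Singular at vbar = (0, ..., 0, -1), where |v| / 2 = pi and sinc = 0.
std::vector<double> MinimalFromSphere(const std::vector<double>& vbar) {
  assert(!vbar.empty());
  const std::size_t n = vbar.size() - 1;
  double a = 0.0;  // norm of the first n components = sin(|v|/2)
  for (std::size_t i = 0; i < n; ++i) a += vbar[i] * vbar[i];
  a = std::sqrt(a);
  const double half = std::atan2(a, vbar[n]);  // |v| / 2, in [0, pi]
  const double scale = 2.0 / Sinc(half);
  std::vector<double> v(n);
  for (std::size_t i = 0; i < n; ++i) v[i] = scale * vbar[i];
  return v;
}
```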

Friday, May 4th 2007

- Changed textures in pre-rendered test scene so that the tracked features are more uniformly distributed.

- Beefed up the schedule/diary page

- Meeting:

- Decided that I should implement bundle adjustment now instead of at the end; this way when merging reconstructions, I can be sure any problems come from the merge process, not from low-quality three-view reconstructions.

Thursday, April 27th 2007

- Added two methods for aligning two projective reconstructions; the first solves for a homography via SVD to align the three-space points, the second uses the pseudo inverse to solve for a homography to align cameras for the case where there are two overlapping cameras.

- Wrote code to merge two sets after they are aligned