User:Sftrabbit/GSoC 2013/Documentation/Technical Overview

Technical Overview

Focal length constraints

DNA

The relevant DNA types are found in source/blender/makesdna/DNA_tracking_types.h.

The MovieTrackingSettings DNA struct contains the following relevant properties:

- refine_intrinsics - bit flags identifying which intrinsics to refine. Some combination of REFINE_FOCAL_LENGTH, REFINE_PRINCIPAL_POINT, REFINE_RADIAL_DISTORTION_K1, and REFINE_RADIAL_DISTORTION_K2. Only some combinations are currently supported.

- constrain_intrinsics - bit flags identifying which intrinsics to constrain. Currently, the only valid flag is CONSTRAIN_FOCAL_LENGTH. This could potentially expand in the future.

- focal_length_{min/max} - the values that define the constraint range. They are stored in pixel units.
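As a rough illustration of how these bit flags combine, the sketch below mirrors the three properties in a stand-alone struct. The struct name, the helper function, and the numeric flag values are assumptions made for the example; the real definitions are the ones in DNA_tracking_types.h. The helper also assumes the constraint only matters while the focal length is being refined, as the user interface described later suggests.

```cpp
// Illustrative flag values only; the real ones live in DNA_tracking_types.h.
enum RefineFlagsSketch {
  REFINE_FOCAL_LENGTH         = 1 << 0,
  REFINE_PRINCIPAL_POINT      = 1 << 1,
  REFINE_RADIAL_DISTORTION_K1 = 1 << 2,
  REFINE_RADIAL_DISTORTION_K2 = 1 << 3
};

enum ConstrainFlagsSketch {
  CONSTRAIN_FOCAL_LENGTH = 1 << 0
};

// Hypothetical stand-in for the relevant part of MovieTrackingSettings.
struct SettingsSketch {
  int refine_intrinsics;     // combination of REFINE_* flags
  int constrain_intrinsics;  // combination of CONSTRAIN_* flags
  float focal_length_min;    // pixels
  float focal_length_max;    // pixels
};

// True when the focal length is both refined and constrained.
bool FocalConstraintActive(const SettingsSketch& s) {
  return (s.refine_intrinsics & REFINE_FOCAL_LENGTH) != 0 &&
         (s.constrain_intrinsics & CONSTRAIN_FOCAL_LENGTH) != 0;
}
```

Flags are combined with bitwise OR and tested with bitwise AND, so new constraint flags could be added later without changing the layout of the struct.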

RNA

The relevant RNA structs are found in source/blender/makesrna/intern/rna_tracking.c.

The following properties have been modified or added:

- refine_intrinsics - corresponds directly to refine_intrinsics in DNA.

- use_focal_length_constraint - a boolean property that sets the CONSTRAIN_FOCAL_LENGTH flag in DNA.

- focal_length_{min/max}_pixels - corresponds to focal_length_{min/max} in DNA. The minimum must always be less than the maximum (see rna_trackingSettings_focal_length_{min/max}_pixels_set).

- focal_length_{min/max} - used for setting the focal length in millimeters. The conversion to and from pixels is performed by rna_trackingSettings_focal_length_{min/max}_set and rna_trackingSettings_focal_length_{min/max}_get.

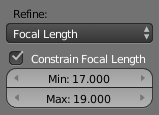

User Interface

The user interface is implemented in release/scripts/startup/bl_ui/space_clip.py.

The constraints fields of the Solve panel are enabled when any option from the Refine select box containing "Focal Length" is chosen. This is determined by checking if "FOCAL_LENGTH" is a substring of the value of settings.refine_intrinsics. The minimum and maximum fields are then only enabled when settings.use_focal_length_constraint is true.

If tracking.camera.units is set to 'MILLIMETERS' (in the Camera Data panel), the minimum and maximum fields correspond to the focal_length_{min/max} RNA properties. Otherwise, they correspond to the focal_length_{min/max}_pixels RNA properties.

Kernel

A number of changes have been made to source/blender/blenkernel/intern/tracking.c. First of all, the function BKE_tracking_camera_focal_length_set has been added to ensure that the constraint values update to match the given focal length when the constraint is disabled.

The reconstruct_refine_intrinsics_get_flags function converts from the DNA properties to MovieReconstructContext properties, which are similar to their DNA counterparts but take values from the libmv API (see below).

When the reconstruction job begins, BKE_tracking_reconstruction_solve calls both cameraIntrinsicsOptionsFromContext and reconstructionOptionsFromContext to convert these context properties to the appropriate libmv options structs. The former applies the CLAMP macro to the focal length to ensure it is not outside the constraint boundaries.
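The clamping step can be pictured with a small stand-alone function. This is only a sketch of the idea: the function name is hypothetical, and it uses std::min and std::max rather than Blender's CLAMP macro.

```cpp
#include <algorithm>

// Returns the focal length limited to the range [min, max].
// Hypothetical helper; the kernel applies the CLAMP macro instead.
double ClampFocalLengthSketch(double focal, double min, double max) {
  return std::max(min, std::min(focal, max));
}
```

A focal length of 500 with a constraint range of 1000 to 2000 would be passed on as 1000, while a value already inside the range is left unchanged.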

Libmv API

Constraint data is passed to libmv in a libmv_ReconstructionOptions object. This struct is defined in extern/libmv/libmv-capi.h. It now has the following properties that currently mimic the DNA properties (but there is no need for them to be identical):

- refine_intrinsics - some combination of LIBMV_REFINE_FOCAL_LENGTH, LIBMV_REFINE_PRINCIPAL_POINT, LIBMV_REFINE_RADIAL_DISTORTION_K1, and LIBMV_REFINE_RADIAL_DISTORTION_K2.

- constrain_intrinsics - currently the only accepted value is LIBMV_CONSTRAIN_FOCAL_LENGTH.

- focal_length_{min/max} - constraint values.

Libmv internals

The constraint properties primarily affect bundle adjustment. Previously, only a single option was passed to the bundle adjustment algorithm as an argument. Now that this has grown, a BundleOptions class type has been introduced in extern/libmv/libmv/simple_pipeline/bundle.h. These options do not all take the same form as the properties passed into libmv, however:

- bundle_intrinsics - flags specifying which intrinsics to include in bundle adjustment. See the BundleIntrinsics enum for a list of flags.

- constraints - flags specifying abstract constraints to apply during bundle adjustment. Unlike previous properties, these constraints may not refer to a particular intrinsic. Currently, the accepted values are BUNDLE_NO_CONSTRAINTS, BUNDLE_NO_TRANSLATION, and BUNDLE_CONSTRAIN_FOCAL_LENGTH.

- focal_length_{min,max} - as before, these are the constraint values to apply to the focal length under adjustment.

Libmv uses Ceres Solver for bundle adjustment, which minimizes the reprojection error. Ceres is an unconstrained minimizer, however, so it will adjust the function parameters without bounds. To apply a bound to the focal length, the ConstrainFocalLength function applies a transformation to the focal length parameter (if the appropriate options have been set). This transformation is simply arcsin applied to the focal length after it has been scaled and translated from the constraint range to [-1, 1]. Its inverse is a sine scaled and translated such that the upper and lower limits of its result are the constraint bounds. The transformed variable is unconstrained when minimized, but will always give a focal length within the bounds when transformed back using UnconstrainFocalLength.

constrain(f, min, max) = arcsin(-1 + ((f - min) / (max - min)) * 2)

unconstrain(f, min, max) = min + (max - min) * (1 + sin(f)) / 2
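The two formulas translate directly into code. The sketch below is a plain transcription of them; the function names carry a Sketch suffix because they are illustrative stand-ins, not the actual ConstrainFocalLength and UnconstrainFocalLength from libmv.

```cpp
#include <cmath>

// Maps a focal length in [min, max] to an unbounded parameter.
// The argument of asin lies in [-1, 1] only when min <= f <= max.
double ConstrainFocalLengthSketch(double f, double min, double max) {
  return std::asin(-1.0 + ((f - min) / (max - min)) * 2.0);
}

// Maps any real parameter t back to a focal length in [min, max].
double UnconstrainFocalLengthSketch(double t, double min, double max) {
  return min + (max - min) * (1.0 + std::sin(t)) / 2.0;
}
```

Because sin(t) always lies in [-1, 1], the second function returns a value between min and max for every real t, which is what lets the minimizer move freely. The first function is only defined for focal lengths inside the range, which is consistent with the kernel clamping the focal length before passing it to libmv.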

Multicamera reconstruction

Libmv API

The libmv API for multicamera reconstruction is almost identical to what it was before. One small change is that the camera intrinsics argument to libmv_solve is now an array, where each set of intrinsics applies to a single camera. However, since array type arguments in C are transformed to pointer types, this is merely a semantic difference. Instead of passing a pointer to a single libmv_CameraIntrinsicsOptions object, the client passes a pointer to the first element of an array of libmv_CameraIntrinsicsOptions objects. The elements each correspond to a different camera.
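The point about arrays and pointers can be shown with a few lines. In this sketch the struct, its fields, and the function are hypothetical stand-ins and do not reproduce libmv_CameraIntrinsicsOptions or libmv_solve. The same pointer parameter accepts either the address of a single object or an array.

```cpp
// Hypothetical, reduced stand-in for libmv_CameraIntrinsicsOptions.
struct CameraIntrinsicsOptionsSketch {
  double focal_length;
  double principal_point_x;
  double principal_point_y;
};

// The parameter is a pointer to the first element; element i holds the
// intrinsics of camera i. The caller must ensure camera is in range.
double FocalLengthForCamera(const CameraIntrinsicsOptionsSketch* options,
                            int camera) {
  return options[camera].focal_length;
}
```

A single-camera client can keep passing the address of one object and use camera index 0, while a multicamera client passes an array with one element per camera.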

Tracks storage

The Tracks class stores Markers which correspond to points in the images that libmv uses to reconstruct the scene. Markers, prior to my changes, had two important members: track, which identifies the track that the marker belongs to; and image, which identifies the image that the marker corresponds to. To accommodate multicamera reconstruction, a marker also needs to be associated with a particular camera.

My first approach was to have a camera member to identify a camera, and then the image member would identify which frame of the camera that marker is associated with. So two markers could have the same image but could actually be associated with different frames from different cameras. This, however, meant many changes to the way the libmv algorithms worked. It also interprets the image identifier as "frame", although libmv doesn't actually concern itself with the order of images in a video.

Instead, I took a different approach, in which the image identifier is unique for all frames from all cameras. That is, the first frame of one camera and the first frame of another would have different image identifiers. A new camera identifier would then be used to annotate the markers to associate them with a particular camera. All markers with a specific image identifier should also have the same camera identifier.

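struct Marker {
  int image;    // unique across all frames of all cameras
  int track;    // track that the marker belongs to
  double x, y;  // position of the marker in the image
  int camera;   // camera that the image was taken with
};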

This works well because libmv only needs to keep track of which cameras the markers belong to. This information isn't actually used in reconstruction. The algorithms only need to receive a bunch of markers from images - they don't even need to be from frames of a video. When reconstruction is complete, libmv can use these camera identifiers to pass the reconstructed camera orientations back to the client.

The Tracks class has a number of member functions that provide access to Markers based on their associated camera. For example, MarkersForCamera will return all the markers associated with a particular camera.
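To make the storage scheme concrete, the sketch below filters markers by camera and checks the rule that every image belongs to exactly one camera. It works on a plain vector rather than the Tracks class, and both function names are hypothetical; the real MarkersForCamera may differ in signature and behaviour.

```cpp
#include <map>
#include <vector>

struct Marker {
  int image;
  int track;
  double x, y;
  int camera;
};

// Returns every marker associated with the given camera.
std::vector<Marker> MarkersForCameraSketch(const std::vector<Marker>& markers,
                                           int camera) {
  std::vector<Marker> result;
  for (const Marker& marker : markers) {
    if (marker.camera == camera) {
      result.push_back(marker);
    }
  }
  return result;
}

// Checks that all markers sharing an image identifier share a camera too.
bool EachImageHasOneCamera(const std::vector<Marker>& markers) {
  std::map<int, int> camera_of_image;
  for (const Marker& marker : markers) {
    std::map<int, int>::const_iterator it = camera_of_image.find(marker.image);
    if (it == camera_of_image.end()) {
      camera_of_image[marker.image] = marker.camera;
    } else if (it->second != marker.camera) {
      return false;
    }
  }
  return true;
}
```

With two cameras of two frames each, the images could be numbered 0 and 1 for the first camera and 2 and 3 for the second, so an image identifier alone is enough to tell the frames apart.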

Reconstruction storage

Similarly, the reconstructed camera positions/orientations have associated physical cameras. That is, the EuclideanView reconstructed from markers with a specific camera identifier will have the same camera identifier. This allows the client to understand which of the reconstructed views correspond to which cameras and frames. AllViewsForCamera can be used to get all views reconstructed for a particular camera.

Libmv internals

All of the internal algorithms needed to be updated to be multicamera-aware. For those algorithms that rely on having images from a single camera (such as the two frame reconstruction initialization), this simply meant extracting tracks for the first camera and using only those.

For bundle adjustment, the multiple sets of camera intrinsics passed into libmv need to be used for calculating the reprojection error for each marker. This works by adding the appropriate intrinsics to the parameters of each marker's cost function according to its camera identifier:

problem.AddResidualBlock(new ceres::AutoDiffCostFunction<
    OpenCVReprojectionError, 2, 8, 6, 3>(cost_function),
    NULL,
    &ceres_intrinsics[camera](0),
    current_view_R_t,
    &point->X(0));

The OpenCVReprojectionError cost functor then uses these intrinsics to compute the reprojection error for each marker.

Whenever an algorithm produces a reconstructed camera view, it adds it to the EuclideanReconstruction object and associates it with the appropriate camera.

Terminology

The following terminology was decided on to avoid confusing clashes of names:

- View - a specific instance of a camera at some orientation and point in space (giving an image of the scene)

- Multiview reconstruction - reconstruction of a scene from multiple views

- Camera - a physical video camera

- Multicamera reconstruction - a form of multiview reconstruction, where the views are associated with some number of video cameras

Previously, views were known as cameras, which meant that a EuclideanReconstruction contained EuclideanCameras which were each associated with a particular physical video camera. Now, a EuclideanReconstruction contains EuclideanViews instead.

Code clean-up

Unified libmv API

While studying the libmv source, I noticed that libmv_solveReconstruction and libmv_solveModal had a lot of code in common. They were each part of the libmv C API and would be called based on whether the client code wanted to perform a modal solve or not. I thought a better approach would be to have a single libmv_solve function that will use the modal solver when a certain option is passed.

To this end, libmv_ReconstructionOptions now has a motion_flag option that may have the LIBMV_TRACKING_MOTION_MODAL flag set. If it is set, the modal solver will be used. Otherwise, normal reconstruction will take place.

This cleans up the libmv interface by moving the decision of whether to perform a modal solve or not into libmv and also makes clear the steps that are common between both solvers.
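The dispatch itself is small. The following sketch shows the idea with hypothetical types: the flag value is illustrative, the options struct is reduced to the one field that matters here, and the function only reports which solver would run instead of running it.

```cpp
// Illustrative value; the real flag is defined by the libmv C API.
enum MotionFlagsSketch {
  LIBMV_TRACKING_MOTION_MODAL = 1 << 0
};

// Hypothetical, reduced stand-in for libmv_ReconstructionOptions.
struct ReconstructionOptionsSketch {
  int motion_flag;
};

enum class SolverKind { Modal, Full };

// A single entry point decides which solver to use from the options.
SolverKind ChooseSolverSketch(const ReconstructionOptionsSketch& options) {
  if (options.motion_flag & LIBMV_TRACKING_MOTION_MODAL) {
    return SolverKind::Modal;
  }
  return SolverKind::Full;
}
```

In a unified entry point, the steps shared by both solvers would sit before and after this branch, so only the solver-specific part differs.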

C/C++ interface consistency

I also noticed that there was a lot of inconsistency between naming conventions within the libmv C API. This is probably caused by the fact that it's an interface between C and C++, which each have their own code styles, and there's no real guideline for this situation. To fix this, the following rules were made:

- All identifiers exposed by the API begin with the library name followed by an underscore. In this case, libmv_.

- Types are in camel-case and begin with a capital letter. For example, libmv_CameraIntrinsicsOptions.

- Functions are in camel-case and begin with a lower-case letter. For example, libmv_cameraIntrinsicsInvert.

- Types that are exposed by the API should be typedefed unless only a declaration is provided in the API. That is, if the definition of the type is hidden within the library, the type should not be typedefed.
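Applied together, the four rules give declarations of the following shape. Every identifier in this sketch is invented for illustration (none of them is claimed to exist in libmv-capi.h); only the naming pattern is the point.

```cpp
// Rules 1, 2 and 4: prefixed, capitalised camel-case type whose definition
// is visible to clients, so it is typedefed.
typedef struct libmv_ExampleOptions {
  double focal_length;
  int refine_intrinsics;
} libmv_ExampleOptions;

// Rule 4: only a declaration is exposed and the definition stays inside
// the library, so there is no typedef and clients write "struct ...".
struct libmv_ExampleHandle;

// Rules 1 and 3: prefixed camel-case function starting in lower case.
void libmv_exampleOptionsInit(libmv_ExampleOptions* options) {
  options->focal_length = 0.0;
  options->refine_intrinsics = 0;
}
```

Keeping opaque types un-typedefed makes it visible at the point of use that the client only ever holds a pointer to them.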