Dev:Ref/Requests/LipSync


Introduction

We are four students interested in developing a new feature for Blender. We all know C/C++ programming and 3D design. We plan to integrate a lip-sync interface into Blender, inspired by software such as Papagayo and JLipSync. The first steps of our project were supervised by our university, and we have kept working on it since the study term ended.

Our goal is to provide a user-friendly interface that supports the lip-sync process, using an audio file and text as input.

Our first idea was discussed on IRC #BlenderCoders, and we have made some changes to the project based on the feedback we received. The discussion can be viewed here: IRC #BlenderCoders 25/02/2007 (about lip sync)

We have already experimented with lip sync using Papagayo: lipsync.swf

You can send your suggestions to the Blender forum or by mail to sharpTeam38@yahoo.com.

Progress

12 June 2007:

- The animation is now made using action strips rather than shape keys alone

- The strips representing the sentences, words and phonemes can be moved and resized

6 May 2007:

- The text entered by the user is broken down into sentences, words and then phonemes

- Sentences, words and phonemes are displayed in strips

22 March 2007:

- University documentation (PDF)

- Starting implementation

- Phonemes are retrieved from the dictionary

- A new space for the lip-sync editor has been added

Lip sync proposal

Here is the current state of our project. We have made several changes since our draft proposal:

We have decided to use actions for the animation so that users can work with their own rigging (shape actions, driven shape keys, lattices...).

The interface has changed as well: we no longer use panels. The lip-sync editor will let the user create a new project, load text and sound, and open a mapping editor in which phonemes can be linked to actions.
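As a rough illustration of what linking phonemes to actions could look like as data, here is a minimal Python sketch. The class name `PhonemeMapping`, the phoneme labels, the action names, and the "rest" fallback are all hypothetical; the editor itself lives inside Blender and may store this quite differently.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PhonemeMapping:
    """Hypothetical 'mapping project': links phoneme names to action names."""
    name: str
    links: Dict[str, str] = field(default_factory=dict)

    def link(self, phoneme: str, action: str) -> None:
        # Associate one phoneme with one user-created action.
        self.links[phoneme] = action

    def action_for(self, phoneme: str, default: str = "rest") -> str:
        # Unmapped phonemes fall back to a neutral action (an assumption).
        return self.links.get(phoneme, default)


# Illustrative names only; they are not taken from the project.
mapping = PhonemeMapping("default")
mapping.link("AI", "MouthOpen")
mapping.link("O", "MouthRound")

actions = [mapping.action_for(p) for p in ["AI", "O", "MBP"]]
```

Because the mapping only stores action names, it stays independent of how each action is rigged, which matches the goal of letting users keep their own rigging.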

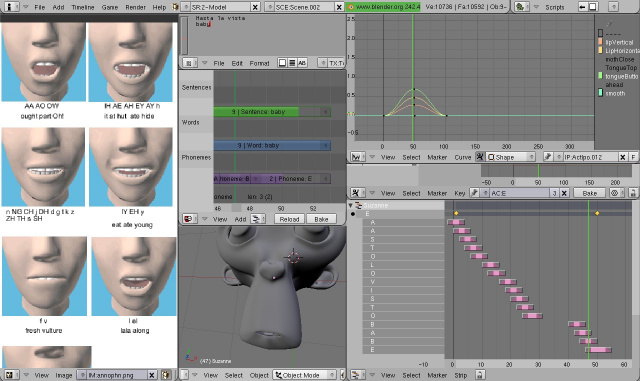

GUI

Video

A temporary demo of our current progress

We have created only a few shapes to show what our project is about. The mapping editor is still missing and many things are under development.

Right-click >> "save target as", then open the file with VLC: http://discoveryblender.tuxfamily.org/lipsync-wip-demo.mov

Workflow

- Create several actions for the mesh to animate

- Add a new "lip sync project" from the lipsync editor listbox

- Load an audio file

- Load or type a text

- Click on the "process" button

- Adjust timing in the lipsync editor (move and resize strips according to the audio)

- Load the mapping editor

- Create a new "mapping project" from the mapping editor listbox

- Link phonemes to actions from the mapping editor
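The "process" step of the workflow above can be sketched in Python as follows. This is only an assumption about how it might work: the tiny `PHONEME_DICT`, the `Strip` class, and the even distribution of strips over the audio duration are all hypothetical, and the initial timing is expected to be corrected by hand afterwards by moving and resizing strips.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical miniature pronunciation dictionary (word -> phonemes).
PHONEME_DICT = {
    "hello": ["HH", "EH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}


@dataclass
class Strip:
    label: str
    start: float  # seconds
    end: float    # seconds
    level: str    # "sentence", "word" or "phoneme"


def split_evenly(labels: List[str], start: float, end: float, level: str) -> List[Strip]:
    """Give each label an equal share of the interval [start, end]."""
    if not labels:
        return []
    step = (end - start) / len(labels)
    return [
        Strip(label, start + i * step, start + (i + 1) * step, level)
        for i, label in enumerate(labels)
    ]


def build_strips(text: str, duration: float) -> List[Strip]:
    """Break text into sentence, word and phoneme strips over the audio length."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    sentence_strips = split_evenly(sentences, 0.0, duration, "sentence")
    strips = list(sentence_strips)
    for sentence in sentence_strips:
        words = re.findall(r"[a-z']+", sentence.label.lower())
        word_strips = split_evenly(words, sentence.start, sentence.end, "word")
        strips.extend(word_strips)
        for word in word_strips:
            # Words missing from the dictionary simply get no phoneme strips.
            phonemes = PHONEME_DICT.get(word.label, [])
            strips.extend(split_evenly(phonemes, word.start, word.end, "phoneme"))
    return strips


strips = build_strips("Hello world.", 2.0)
phoneme_labels = [s.label for s in strips if s.level == "phoneme"]
```

Nesting each level inside its parent's time range means that a moved or resized word could rescale its own phonemes without touching the rest of the sentence.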

Lip sync draft (March)

This was our first draft for the project:

We plan to integrate our interface in a new editor: the lip-sync editor.

We have chosen to use the text editor so that a text can easily be loaded from the hard drive or typed by the user.

Features:

- Phonemes will be created from the text loaded with the audio file. The user will then be able to synchronize them by moving the words.

- Each phoneme can be linked to several shapes by the user: for each phoneme, the user chooses a percentage of each shape key created so far. For instance, an "Ai" phoneme could be a combination of 50% of shape 1, 25% of shape 2, etc.

So the more shapes the user creates, the more detailed the animation will be, but only a few shapes are needed to produce it.
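The percentage idea can be illustrated with a small Python sketch. It assumes relative shape keys, where each shape is an offset from a base mesh and the percentages act as weights on those offsets; the function `blend_shapes`, the two-value "mesh", and the shape data are made up for the example and are not part of the project.

```python
from typing import Dict, List


def blend_shapes(
    base: List[float],
    shapes: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Add a weighted offset (shape minus base) for every weighted shape."""
    result = list(base)
    for shape_name, weight in weights.items():
        target = shapes[shape_name]
        for i, (rest, moved) in enumerate(zip(base, target)):
            result[i] += weight * (moved - rest)
    return result


# Two coordinates stand in for a whole mesh (illustrative data only).
base = [0.0, 0.0]
shapes = {
    "shape 1": [1.0, 0.0],
    "shape 2": [0.0, 2.0],
}

# The "Ai" phoneme from the text: 50% of shape 1 and 25% of shape 2.
phoneme_weights = {"Ai": {"shape 1": 0.50, "shape 2": 0.25}}

ai_pose = blend_shapes(base, shapes, phoneme_weights["Ai"])
```

With this data `ai_pose` is `[0.5, 0.5]`: half of shape 1's offset on the first coordinate and a quarter of shape 2's offset on the second.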

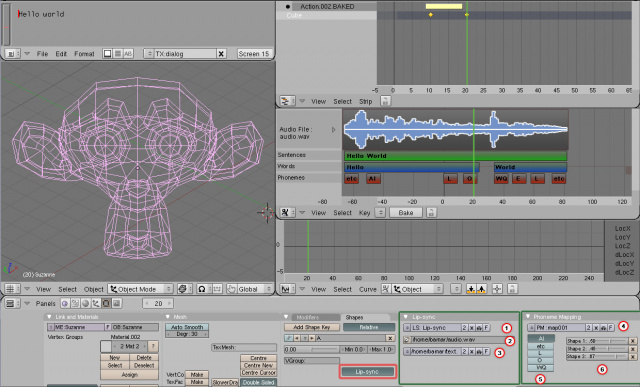

GUI

References

- 1 Open/change lip-sync project

- 2 Load audio file

- 3 Load text input from the text editor

- 4 Open/change phoneme mapping

- 5 Select a phoneme from those produced by the text analysis

- 6 For the phoneme selected, choose a percentage of each shape key created

Shortcuts in action editor

- L key: change the language linked to the selected word or sentence.

Resources

Information on converting phonemes to visemes

http://forums.polyloop.net/tutoriaux-carrara/4607-c5-rigging-skinning-bones-deformations.html

Lip sync software

- http://www.annosoft.com/sapi_lipsync.htm

- http://www.lostmarble.com/papagayo/index.shtml

- http://users.monash.edu.au/~myless/catnap/

- http://jlipsync.sourceforge.net/

- http://yolo.sourceforge.net/

- http://www.thirdwishsoftware.com/magpie.html

- http://www.thirdwishsoftware.com/magpiepro.html

http://www.inria.fr/rapportsactivite/RA2005/parole/uid45.html