User:Broken/ShadingSystemScratchpad


Revision as of 13:43, 3 October 2009 (Sat) by wiki>Broken (→Issues with the current system)

Shading System

Goals

- Clean up mess of current single ubershader

- Allow specific shaders specialised for specific situations (hair, skin, glass, etc)

- Shaders should be 'physically based by default' - i.e. you shouldn't have to set things up specifically as a user to get physical results. You should have to work to break physical shading, rather than to achieve it.

- Deeper level control via:

- nodes ?

- internal C shading API ?

- potential shading language derived from shading API ?

- Natively support volume/atmospheric shading

- Support BRDFs - i.e. vector I/O, as well as colour for use in reflections, photon mapping, path tracing, etc

- Or more explicitly, materials should be consistent and work predictably across different rendering techniques, from simple local illumination and 'fake' GI techniques to full photon mapping or path tracing. The system should provide the necessary information to be able to scale up and down.

- All editable shader properties should be able to cope with a wide variety of inputs, eg.

- Textures

- Node connections

- Animation

This means (I presume) that shaders should not read values from hard-coded material structs, but receive them as parameters.

- Should be able to simplify and package up shading networks - like current node grouping, but with better control over what inputs/outputs are exposed
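The goal above, that editable properties accept textures, node connections or animation instead of reading hard-coded material structs, could be sketched roughly as follows. Every name here (`ScalarParam`, `ParamEvalFn`, `scalar_param_get`, the contents of `ShadeState`) is hypothetical and only illustrates the idea; it is not an existing API.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the per-sample shading context. */
typedef struct ShadeState {
    float u, v;   /* surface coordinates at the shaded point */
    float time;   /* current time, for animated values */
} ShadeState;

typedef enum ParamSource {
    PARAM_CONSTANT,  /* plain value set by the user */
    PARAM_CALLBACK   /* texture, node connection or animation curve */
} ParamSource;

/* Anything that can drive a parameter is reduced to one callback type. */
typedef float (*ParamEvalFn)(const ShadeState *s, void *userdata);

typedef struct ScalarParam {
    ParamSource source;
    float constant;     /* used when source == PARAM_CONSTANT */
    ParamEvalFn eval;   /* used when source == PARAM_CALLBACK */
    void *userdata;     /* opaque data handed back to eval */
} ScalarParam;

/* Resolve a parameter at shading time, whatever is driving it. */
static float scalar_param_get(const ScalarParam *p, const ShadeState *s)
{
    assert(p != NULL && s != NULL);
    if (p->source == PARAM_CALLBACK && p->eval != NULL) {
        return p->eval(s, p->userdata);
    }
    return p->constant;
}

/* Example driver: a 2x2 checker "texture" over (u, v) in [0, 1). */
static float checker_texture(const ShadeState *s, void *userdata)
{
    (void)userdata;
    int iu = (int)(s->u * 2.0f);
    int iv = (int)(s->v * 2.0f);
    return ((iu + iv) % 2 == 0) ? 1.0f : 0.0f;
}
```

A shader written against `scalar_param_get` does not need to know whether, say, its roughness comes from a slider or from `checker_texture`; the same call covers both cases.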

Issues with the current system

- All raytrace shading happens inside a single tight loop, and is recursive within this - i.e. when a new intersection to be shaded is found, it starts again from the top of the material evaluation chain and re-does it all internally. This has several problems, particularly regarding integrating new, raytrace-dependent functionality like volume rendering. This should be moved up a level, so that raytrace isn't something special, but just part of normal shading calculations that gets executed when a surface is shaded.

- The current 'tangent shading' system (by means of the Tangent V button) is very wrong. The technique of replacing the normal with the tangent may work in some situations like hair, where it's simulating an infinite cylinder, but for normal materials it gives incorrect results, and prevents any control over the level of anisotropy vs isotropy. This should all be replaced by constructing a proper reflection coordinate system, with tangent vectors available, and shading within that, with respect to additional anisotropy/rotation parameters.

- This should also be part of a BRDF system, where blurry reflections, photon bounces, etc, can use the same reflection model.

- Note: there are other advantages to using the reflection coordinate system (N = Z axis, tangent = X axis, bitangent = Y axis). After transforming to this coordinate space, dot products are easy - just the Z component of the vector, and other trigonometric functions are greatly simplified.

- The current texture stack is not scalable, and is difficult to use, inflexible, and clunky. I've written about some of the usability problems [1] with the texture stack before, but there are also issues within the system.

Shadows

- Unify shadbuf and ray shadow code

- Shadow 'tracing' API should take the lookup coordinate as input (for lookups in volumes etc)

- Ray shadow / transmission code should take custom intersect callbacks/intersection types (e.g. ignoring volumes?)
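One possible shape for such a shadow API is sketched below, purely as an assumption: a query carries the lookup coordinate and an optional filter callback, and shadow buffers and ray shadows would both sit behind the same backend function type. None of these names (`ShadowQuery`, `ShadowBackendFn`, `shadow_visibility`, `ignore_volumes`) exist in the current code, and `stub_backend` only stands in for a real shadow buffer or raytrace lookup.

```c
#include <stddef.h>

#define SHADOW_MAX_HITS 16

typedef enum OccluderType { OCCLUDER_SURFACE, OCCLUDER_VOLUME } OccluderType;

typedef struct ShadowHit {
    OccluderType type;
    float opacity;   /* 0 = fully transparent, 1 = fully opaque */
} ShadowHit;

/* Return nonzero if this hit should attenuate the light. */
typedef int (*ShadowFilterFn)(const ShadowHit *hit, void *userdata);

typedef struct ShadowQuery {
    float co[3];            /* lookup coordinate: a surface point or a sample inside a volume */
    ShadowFilterFn filter;  /* NULL accepts every hit */
    void *userdata;
} ShadowQuery;

/* A backend (shadow buffer or raytracer) reports the occluders between
 * q->co and the lamp and returns how many entries it wrote to hits. */
typedef size_t (*ShadowBackendFn)(const ShadowQuery *q, ShadowHit *hits, size_t max_hits);

/* Visibility in [0, 1], independent of which backend produced the hits. */
static float shadow_visibility(ShadowBackendFn backend, const ShadowQuery *q)
{
    ShadowHit hits[SHADOW_MAX_HITS];
    size_t n = backend(q, hits, SHADOW_MAX_HITS);
    float vis = 1.0f;
    for (size_t i = 0; i < n; i++) {
        if (q->filter != NULL && !q->filter(&hits[i], q->userdata)) {
            continue;  /* hit rejected by the custom callback */
        }
        vis *= 1.0f - hits[i].opacity;
    }
    return vis;
}

/* Example filter: skip volumes, e.g. when the volume shader handles
 * its own attenuation. */
static int ignore_volumes(const ShadowHit *hit, void *userdata)
{
    (void)userdata;
    return hit->type != OCCLUDER_VOLUME;
}

/* Stand-in backend for illustration: one volume and one surface occluder. */
static size_t stub_backend(const ShadowQuery *q, ShadowHit *hits, size_t max_hits)
{
    (void)q;
    if (max_hits < 2) {
        return 0;
    }
    hits[0].type = OCCLUDER_VOLUME;  hits[0].opacity = 0.5f;
    hits[1].type = OCCLUDER_SURFACE; hits[1].opacity = 0.25f;
    return 2;
}
```

With the stub backend, a query without a filter is attenuated by both occluders, while the same query with `ignore_volumes` is only attenuated by the surface.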

Ideas

- System made of discrete parts, represented as either nodes or shading language functions

- Some can be complex, e.g. a fully encapsulated physical glass shader

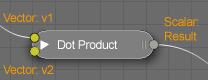

- Some can be very low level, e.g. a dot product

e.g.

Each node can have an equivalent API representation, which can be used within larger shaders, which are then represented as nodes, with the relevant inputs and outputs.

DotProduct(ShadeState s, scalar result, vector v1, vector v2)
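As a rough C rendering of the signature above, and of how a low-level node function might be reused inside a larger shader, consider the following sketch. The `scalar` and `vector` typedefs, the contents of `ShadeState`, the use of an output pointer for `result`, and the `LambertDiffuse` shader are all assumptions made for illustration.

```c
typedef float scalar;
typedef struct vector { float x, y, z; } vector;

/* Hypothetical shading context; only the normal is used here. */
typedef struct ShadeState {
    vector P;   /* shaded point */
    vector N;   /* unit surface normal */
    vector I;   /* incoming view direction */
} ShadeState;

/* Low-level node: writes v1 . v2 to *result. */
static void DotProduct(const ShadeState *s, scalar *result, vector v1, vector v2)
{
    (void)s;  /* unused here, kept so every node shares one calling convention */
    *result = v1.x * v2.x + v1.y * v2.y + v1.z * v2.z;
}

/* Larger shader built from the low-level node: the Lambert cosine term
 * max(N . L, 0) for a unit light direction L. */
static void LambertDiffuse(const ShadeState *s, scalar *result, vector L)
{
    scalar ndotl;
    DotProduct(s, &ndotl, s->N, L);
    *result = (ndotl > 0.0f) ? ndotl : 0.0f;
}
```

Because `LambertDiffuse` has the same kind of signature as `DotProduct`, it could in turn be exposed as a node with `L` as an input socket and `result` as an output socket.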

- Better delineation between nodes/materials.

- A material is nothing more than a container with a series of final inputs for other shaders/nodes to plug in to.

- You can connect whatever you like to those inputs - a fancy shader, an entire network, a simple dot product - all are valid.

- The final material has inputs equal to the defined render passes, with a minimum of colour/alpha/photon (vector). This allows you to keep render pass flexibility with custom shading.

- If you create a node network group, it would be nice to be able to access it programmatically, with the relevant inputs/outputs, like any other node/function.
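The idea of a material as a plain container of per-pass inputs could look something like the sketch below. It assumes a fixed set of three passes (colour, alpha, photon) and a single callback type for anything that can be plugged in; `Material`, `MaterialInput`, `NodeEvalFn`, `material_eval_pass` and the two example nodes are hypothetical names.

```c
#include <stddef.h>

/* Hypothetical shading context. */
typedef struct ShadeState {
    float N[3];   /* unit surface normal */
    float V[3];   /* unit vector towards the viewer */
} ShadeState;

/* One input per render pass, with the minimum set named in the notes. */
typedef enum MaterialPass { PASS_COLOUR, PASS_ALPHA, PASS_PHOTON, PASS_COUNT } MaterialPass;

/* Anything pluggable (a fancy shader, a whole network, a dot product)
 * is seen by the material as one evaluation callback. */
typedef void (*NodeEvalFn)(const ShadeState *s, const void *nodedata, float out[3]);

typedef struct MaterialInput {
    NodeEvalFn eval;        /* NULL when nothing is connected */
    const void *nodedata;
} MaterialInput;

/* The material itself holds no shading logic, only connections. */
typedef struct Material {
    MaterialInput inputs[PASS_COUNT];
} Material;

/* Evaluate one pass; returns 0 and writes zeros if the input is unconnected. */
static int material_eval_pass(const Material *m, MaterialPass pass,
                              const ShadeState *s, float out[3])
{
    const MaterialInput *in = &m->inputs[pass];
    out[0] = out[1] = out[2] = 0.0f;
    if (in->eval == NULL) {
        return 0;
    }
    in->eval(s, in->nodedata, out);
    return 1;
}

/* Example node: constant colour, nodedata points at three floats. */
static void node_constant(const ShadeState *s, const void *nodedata, float out[3])
{
    const float *c = nodedata;
    (void)s;
    out[0] = c[0]; out[1] = c[1]; out[2] = c[2];
}

/* Example node: a bare dot product (N . V) replicated to all channels. */
static void node_facing(const ShadeState *s, const void *nodedata, float out[3])
{
    float d = s->N[0] * s->V[0] + s->N[1] * s->V[1] + s->N[2] * s->V[2];
    (void)nodedata;
    out[0] = out[1] = out[2] = d;
}
```

A node group exposed programmatically would then just be another `NodeEvalFn` with its own `nodedata`, connectable to a material input like any built-in node.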