User:Frr/NodeThoughts

Blender's nodes are wonderful, but I think now we're starting to come up against the limitations of the current design. These are some of my ideas for how to improve nodes after Blender 2.5. This page is a work in progress and may include errors of logic, half-baked ideas and trace elements of lunacy.


The current system

- Texture, Material and Composite nodes are separate. Each has its own tree type, its own data types and its own way of evaluating the tree. We can't even talk about "a node tree" in the code without lots of little if/elses scattered everywhere asking whether we're talking materials, textures or compositing.

- Nodes which are shared between tree types have their source code duplicated, sometimes with small changes to take account of the way different trees are evaluated.

- Nodes can be grouped into node-groups, but these are tied to one tree type and they don't nest. The interface for editing a group differs from the one for editing a tree, and inputs and outputs are handled differently. Also, groups must be edited inside a tree; they can't be edited on their own.

- It's tedious for programmers to add new nodes. I believe there are about 6 places in the code where changes have to be made to add a new node type, even more if you want your node available in different trees.

- Adding new data types and node tree types is even harder than adding new nodes. Simply put, the node system is not extensible.

What would a better node system look like?

- Elimination of 'tree types'. Which nodes you can use should depend on data types. That is, the compositor should be able to use any nodetree that outputs a bitmap. A texture should be able to use any tree that outputs a texture. Which other nodes are used in the tree should be up to the user. PyNodes should also work everywhere (right now, scattered code is needed to make PyNodes work in each place they should).

- Removal of the distinction between 'groups' and 'trees'. Right now, a tree can contain instances of groups but a group can't. A group can have arbitrary inputs but a tree's inputs are defined by its type. It would be better to have only trees, and give them all the abilities of groups.

- Nodes should be able to have variable numbers of inputs. One Add node should be able to add 12 numbers. This could be implemented as a single input with type "array of floats" which the UI could display as multiple inputs.

- At the moment, texture mapping in shader nodes is achieved with a 'texture' node. Ideally, users should be able to define their texture as a tree of texture nodes, group it, and use that group directly in the shader tree. The group could have whatever inputs the user wants. "Tile size", "Funkiness", "70s charm", whatever. Then those parameters could be driven by the shader tree.

- Interface changes. Nodes' interfaces could now be generated from their RNA definitions, rather than by complicated draw functions in drawnode.c (another place to add code for each new node!)

- An easier way to add nodes to the tree. XSI has a nice system where you drag the nodes from a tree-view on the left into the node space. You can filter nodes by name and make your own categories with frequently-used nodes.

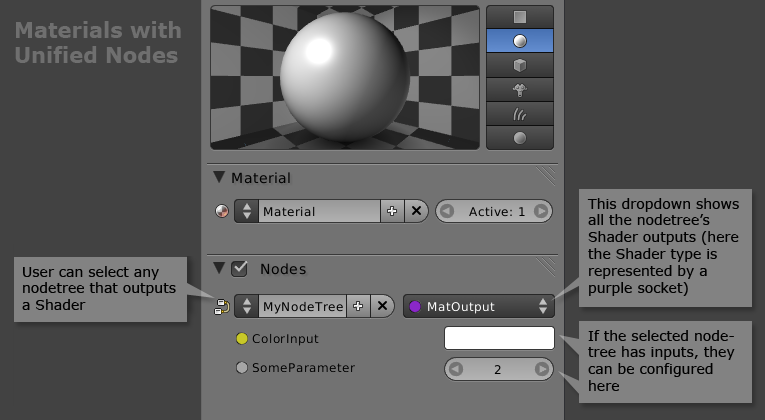
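The variable-input idea from the list above could look something like this in Python (used here only because PyNodes already expose nodes to Python; this is not Blender's API). `AddNode`, its `values` socket and the type strings are hypothetical, assuming one input socket of type "array of floats" that the UI would draw as several sockets.

```python
from typing import Dict, Sequence


class AddNode:
    """Hypothetical Add node with a single variadic input socket.

    The "values" socket holds an array of floats. The UI could draw one
    socket per element, but the node itself only ever sees one list.
    """

    inputs: Dict[str, str] = {"values": "array<float>"}   # assumed convention
    outputs: Dict[str, str] = {"sum": "float"}

    def evaluate(self, values: Sequence[float]) -> Dict[str, float]:
        # An empty array sums to 0.0, the identity for addition.
        return {"sum": float(sum(values))}


node = AddNode()
twelve_inputs = [float(i) for i in range(1, 13)]
print(node.evaluate(twelve_inputs)["sum"])  # 78.0
```

Because the node sees one list, the same code handles two inputs or twelve; only the UI needs to know how many sockets to draw.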

Mockups

Implementation

This is how I would personally implement a more extensible node system.

To unify the currently disparate tree types, we need one single evaluation model for node trees. Right now, material nodes are evaluated per-coordinate, compositor nodes are evaluated once and return a bitmap, and texture nodes are evaluated once to return delegates, and then those are evaluated. If we are to allow texture nodes in shader trees, compo nodes in texture trees etc., then the trees need to be able to work independently of what nodes they contain.

The system I propose is simple in this respect: all trees are evaluated once, and need not be reevaluated until their inputs change. The compositor will work with trees returning bitmaps, shaders will work with trees returning a shader object which can be queried with various coordinates, and textures will work with trees returning texture functions. Any special evaluation logic required by any users of nodes (texture system, material system, compositor) should be implemented on top of this, as data types.
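A minimal sketch of "evaluate once, re-evaluate only when inputs change", assuming a hypothetical `ValueNode`/`Node` design in which each node remembers the input values it last ran with. Comparing input values keeps the example short; a real implementation would more likely propagate dirty flags, since comparing whole bitmaps would be expensive.

```python
from typing import Any, Callable, List, Optional, Tuple


class ValueNode:
    """Hypothetical input node holding a user-editable constant."""

    def __init__(self, value: Any) -> None:
        self.value = value

    def evaluate(self) -> Any:
        return self.value


class Node:
    """Hypothetical node: a function plus the nodes linked to its inputs."""

    def __init__(self, func: Callable[..., Any], links: List[Any]) -> None:
        self.func = func
        self.links = links                  # upstream nodes, in input order
        self._cache_key: Optional[Tuple[Any, ...]] = None
        self._cache_value: Any = None
        self.eval_count = 0                 # how many times func really ran

    def evaluate(self) -> Any:
        # Ask upstream nodes for their (possibly cached) values.
        args = tuple(upstream.evaluate() for upstream in self.links)
        # Only re-run this node's function if its inputs changed.
        if args != self._cache_key:
            self._cache_value = self.func(*args)
            self._cache_key = args
            self.eval_count += 1
        return self._cache_value


a, b = ValueNode(2.0), ValueNode(3.0)
multiply = Node(lambda x, y: x * y, [a, b])
print(multiply.evaluate(), multiply.eval_count)  # 6.0 1
print(multiply.evaluate(), multiply.eval_count)  # 6.0 1 (inputs unchanged)
a.value = 4.0
print(multiply.evaluate(), multiply.eval_count)  # 12.0 2 (input changed)
```

Note that the evaluator never looks at what the values are; a bitmap, a shader object or a texture function would be cached the same way.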

In this example, bitmaps, shaders and textures are all data types in the node system. The system itself need know nothing about what a bitmap is, what a shader is or what a texture is. It needn't know that textures are functions that can be evaluated per-coordinate, or that bitmaps are rectangular arrays of pixels. This decoupling of responsibility will make it easy to extend the node system with more data types.
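To illustrate that decoupling, here is a sketch in which the core only stores data types as names registered by outside code and checks that a link joins sockets of the same type. `DataTypeRegistry`, `register`, `can_link` and the `Bitmap` class (with RGBA tuples as pixels) are all hypothetical; what a bitmap actually is stays in the code that registers it.

```python
from typing import Dict


class DataTypeRegistry:
    """Core-side registry: knows type names and classes, nothing more."""

    def __init__(self) -> None:
        self._types: Dict[str, type] = {}

    def register(self, name: str, cls: type) -> None:
        if name in self._types:
            raise ValueError(f"data type {name!r} is already registered")
        self._types[name] = cls

    def can_link(self, out_type: str, in_type: str) -> bool:
        # With no promotion rules yet, a link is valid only between
        # sockets of the same registered type.
        return out_type in self._types and out_type == in_type


# Outside the core: the compositor decides what a bitmap actually is.
class Bitmap:
    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        self.pixels = [(0.0, 0.0, 0.0, 1.0)] * (width * height)


registry = DataTypeRegistry()
registry.register("bitmap", Bitmap)
print(registry.can_link("bitmap", "bitmap"))   # True
print(registry.can_link("bitmap", "texture"))  # False: different types
```

Adding a new data type then means one `register` call from the subsystem that owns it, with no change to the core.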

Data types and overloading

Anything you can do to a color, you can do to a bitmap or a texture. We should be able to write nodes to do color operations and have them automatically work on these types, and any appropriate types introduced in the future. This can be achieved using type promotion.

Each output of a node is like a function. It takes in the node's inputs and gives a result. Say we have a Mix node taking two colors and returning a color:

(color, color) -> color
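Read as a function, the Mix node's output could look like this in Python. The `Color` RGB tuple and the fixed 50/50 blend are assumptions for illustration; a real Mix node would also take a factor and a blend mode.

```python
from typing import Tuple

Color = Tuple[float, float, float]  # assumed RGB representation


def mix(a: Color, b: Color) -> Color:
    """(color, color) -> color: an even 50/50 blend of two colors."""
    ar, ag, ab = a
    br, bg, bb = b
    return (0.5 * (ar + br), 0.5 * (ag + bg), 0.5 * (ab + bb))


print(mix((1.0, 0.0, 0.0), (0.0, 0.0, 1.0)))  # (0.5, 0.0, 0.5)
```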

If we want all nodes that work on colors to automatically work on, say, textures, we can define a converter like this:

    texture promote(color) {
        return (a texture that is solid color);
    }
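The `promote` converter can be written directly in Python if, as proposed further down this page, a texture is modelled as a function from texture coordinates to a color. `TexCoord` as an (x, y, z) tuple is an assumption standing in for whatever the texture system passes in.

```python
from typing import Callable, Tuple

Color = Tuple[float, float, float]
TexCoord = Tuple[float, float, float]   # assumed stand-in for TexCallData
Texture = Callable[[TexCoord], Color]


def promote(color: Color) -> Texture:
    """color -> texture: a texture that is the same color everywhere."""
    def solid(_coord: TexCoord) -> Color:
        return color                      # ignores where it is sampled
    return solid


red = promote((1.0, 0.0, 0.0))
print(red((0.0, 0.0, 0.0)))    # (1.0, 0.0, 0.0)
print(red((5.0, -2.0, 1.0)))   # (1.0, 0.0, 0.0)
```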

So now we can connect our node so that it takes two colors and returns a texture of a solid color: its color output is simply promoted. But that isn't very useful. Better would be to take any function that takes colors and returns colors and make it take and return textures instead, with no extra work. We can do that by writing another converter, such that any function (any node) of type

(color, color) -> color

can be automatically converted to work as

(texture, texture) -> texture

which means that the user can connect the output of the Mix node to a node expecting a texture, as long as textures are connected to the Mix node's inputs. What if the user connects a color to one input and a texture to the other? Easy: the color is promoted to a texture and the function is used as above.
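A sketch of this second converter in Python, again treating a texture as a function from coordinates to colors. `lift_to_texture` and `as_texture` are hypothetical helper names; `as_texture` promotes plain colors so that a mix of color and texture inputs works as described. Telling a color from a texture with `callable()` is a shortcut for this example; a real node system would use the socket types.

```python
from typing import Callable, Tuple, Union

Color = Tuple[float, float, float]
TexCoord = Tuple[float, float, float]   # assumed stand-in for TexCallData
Texture = Callable[[TexCoord], Color]


def mix(a: Color, b: Color) -> Color:
    """An ordinary (color, color) -> color node function (50/50 blend)."""
    return tuple(0.5 * (x + y) for x, y in zip(a, b))


def as_texture(value: Union[Color, Texture]) -> Texture:
    """Promote a plain color to a solid texture; pass textures through."""
    if callable(value):
        return value
    return lambda _coord: value


def lift_to_texture(node_fn: Callable[[Color, Color], Color]):
    """Turn (color, color) -> color into (texture, texture) -> texture."""
    def lifted(a, b) -> Texture:
        ta, tb = as_texture(a), as_texture(b)
        # The result is itself a texture: sample both inputs at the same
        # coordinate, then apply the original color function.
        return lambda coord: node_fn(ta(coord), tb(coord))
    return lifted


# A texture whose red channel follows the x coordinate (illustrative only).
def gradient(coord: TexCoord) -> Color:
    return (coord[0], 0.0, 0.0)


mixed = lift_to_texture(mix)(gradient, (0.0, 0.0, 1.0))  # texture + color
print(mixed((1.0, 0.0, 0.0)))  # (0.5, 0.0, 0.5)
```

`mix` itself is untouched; all the texture-specific work lives in the converter, which is the point of the proposal.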

This can be implemented using higher-order functions: we can define the Texture datatype itself as a function:

(TexCallData -> color)

And the same with shaders:

(ShadeCallData -> color)
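With Python type hints, the two definitions above might read as follows. The fields of `TexCallData` and `ShadeCallData` are guesses made for illustration only, as are the example `checker` texture and `facing` shader; the real structures would carry whatever the texture and material systems need.

```python
import math
from dataclasses import dataclass
from typing import Callable, Tuple

Color = Tuple[float, float, float]
Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class TexCallData:        # hypothetical fields
    coord: Vec3


@dataclass(frozen=True)
class ShadeCallData:      # hypothetical fields
    coord: Vec3
    normal: Vec3


# The data types themselves are nothing but function types.
Texture = Callable[[TexCallData], Color]
Shader = Callable[[ShadeCallData], Color]


def checker(data: TexCallData) -> Color:
    """An example Texture: a unit checkerboard in x and y."""
    x, y, _ = data.coord
    white = (math.floor(x) + math.floor(y)) % 2 == 0
    return (1.0, 1.0, 1.0) if white else (0.0, 0.0, 0.0)


def facing(data: ShadeCallData) -> Color:
    """An example Shader: grey level from the normal's z component."""
    v = max(0.0, data.normal[2])
    return (v, v, v)


tex: Texture = checker
shader: Shader = facing
print(tex(TexCallData((0.5, 0.5, 0.0))))                        # (1.0, 1.0, 1.0)
print(tex(TexCallData((1.5, 0.5, 0.0))))                        # (0.0, 0.0, 0.0)
print(shader(ShadeCallData((0.0, 0.0, 0.0), (0.0, 0.0, 1.0))))  # (1.0, 1.0, 1.0)
```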

... and then we can simply define a rule which converts any node with inputs of type (A, B) and outputs of type (X, Y) into a node with inputs of type ((Q -> A), (Q -> B)) and outputs of type ((Q -> X), (Q -> Y)) for any Q (in this example Q would be TexCallData or ShadeCallData). This would mean that any node working on colors would automatically work on functions of colors, which shaders and textures both are.
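The general rule can be sketched as one higher-order function. `lift` is a hypothetical name; it assumes a node is a Python function that takes its inputs positionally and returns a tuple of outputs, and that the number of outputs is passed in. `lift` never inspects `Q`, so the same lifted node serves textures and shaders; plain dictionaries stand in for `TexCallData` and `ShadeCallData` here.

```python
from typing import Any, Callable, Tuple

Fn = Callable[[Any], Any]   # a "Q -> value" function, for any Q


def lift(node_fn: Callable[..., Tuple[Any, ...]], n_outputs: int):
    """Turn a node (A, B, ...) -> (X, Y, ...) into one of type
    ((Q -> A), (Q -> B), ...) -> ((Q -> X), (Q -> Y), ...), for any Q."""
    def lifted(*input_fns: Fn) -> Tuple[Fn, ...]:
        def evaluate_at(q: Any) -> Tuple[Any, ...]:
            # Sample every input at the same q, then run the original node.
            return node_fn(*(f(q) for f in input_fns))
        # One function per output. Each re-runs the node at q; a real
        # implementation might cache results per q.
        return tuple((lambda q, i=i: evaluate_at(q)[i]) for i in range(n_outputs))
    return lifted


def lighter_darker(a, b):
    """A plain two-output color node: per-channel max and min."""
    lighter = tuple(max(x, y) for x, y in zip(a, b))
    darker = tuple(min(x, y) for x, y in zip(a, b))
    return lighter, darker


lifted = lift(lighter_darker, n_outputs=2)


# Q standing in for TexCallData.
def ramp(q):
    return (q["coord"][0], 0.5, 0.5)


def flat(q):
    return (0.5, 0.5, 0.5)


tex_lighter, tex_darker = lifted(ramp, flat)
print(tex_lighter({"coord": (0.9, 0.0, 0.0)}))  # (0.9, 0.5, 0.5)
print(tex_darker({"coord": (0.9, 0.0, 0.0)}))   # (0.5, 0.5, 0.5)


# Q standing in for ShadeCallData: the same lifted node, unchanged.
def lit(q):
    return (q["normal"][2],) * 3


def ambient(q):
    return (0.2, 0.2, 0.2)


shade_lighter, _ = lifted(lit, ambient)
print(shade_lighter({"normal": (0.0, 0.0, 0.0)}))  # (0.2, 0.2, 0.2)
```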

I believe this would be hugely useful in both making unified node trees more feasible, and making the node code more inviting to developers.