User:Ack-err/GSoC 2013/Proposal

Towards a full action replay system (GSoC 2013)

Name

Vincent Akkermans

Email / IRC / WWW

- Email: vincent.akkermans@gmail.com

- WWW: http://ack-err.net

- IRC: ack-err

Synopsis

This proposed project aims to bring several enhancements to existing code with the objective of making full action replay possible in the future. More concretely, it aims to finish the macro system, enhance RNA descriptions, fix operator re-execution by capturing contexts, and implement a user action log system for visualisation.

Project details

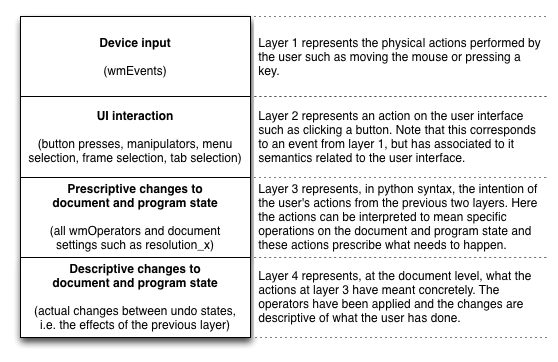

This project proposes to refactor or enhance parts of the code so that each user action is clearly represented at four separate, semantically orthogonal layers, where each layer is an interpretation of the previous one. Figure 1 depicts and explains these four layers.

Figure 1. The actions of users represented at four different semantic layers.

Replay of the user’s actions can happen (to varying degrees) at any of the four layers by serialising and later injecting the objects that represent the actions at that layer. At layer 1, replay would entail injecting wmEvent structs. This would replay the visual aspects of the user’s performance and would rely on the Blender windows being set up exactly as they were when the session was recorded. At layer 2, replay would be the most difficult and make the least sense, as it would entail modelling changes in the UI and the activation of key bindings. At layer 3, the changes to be performed on the document can be modelled as Python function calls or assignment statements. Blender already has the functionality to achieve this, and a prototype that logs all user actions in Python syntax has already been implemented. Blender’s current undo system could be seen as an implementation of layer 4: changes to the document are kept as complete versions in memory or on disk. However, not all changes are included and the history is finite due to memory constraints.
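As a rough illustration of what a layer 3 log entry could look like, the sketch below renders operator calls and property assignments as lines of Python source. The helper names `format_operator_call` and `format_assignment` are hypothetical and are not part of Blender; the sketch only builds and syntax-checks strings, so it runs without Blender.

```python
import ast


def format_operator_call(op_path, **kwargs):
    """Render an operator invocation as one line of Python source."""
    args = ", ".join(f"{name}={value!r}" for name, value in kwargs.items())
    return f"bpy.ops.{op_path}({args})"


def format_assignment(data_path, value):
    """Render a property change as a Python assignment statement."""
    return f"{data_path} = {value!r}"


# Two example log entries: one operator call and one assignment.
log = [
    format_operator_call("transform.translate", value=(1.0, 0.0, 0.0)),
    format_assignment("bpy.data.objects['Cube'].name", "Box"),
]

# Every entry should at least be syntactically valid Python.
for line in log:
    ast.parse(line)
```

Because each entry is plain Python source, the same log can be read by a person, analysed by a script, or executed inside Blender.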

To achieve accurate and complete modelling of a user’s actions at layer 3, all actions need to have an unambiguous and complete representation. Python syntax is well suited to this requirement because it is unambiguous and the statements can be readily interpreted by Blender again. As it stands now, there are three major hurdles to implementing full replay functionality at layer 3. Firstly, not all operators can be represented completely. For example, selecting an object, represented in Python as the function bpy.ops.view3d.select(...), does not include the selected object as an argument, and as such its operation depends on the description of the user’s action at layer 2. Secondly, the RNA descriptions are not complete. An example of this is the ImageFormatSettings.file_format property. Lastly, the execution of logged Python commands still depends on the context in which they run. However, if the RNA descriptions of all the members of the bContext struct are sufficient, these members can be represented as Python paths and the bContext struct can be represented as a Python dict.
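The idea of representing a context as a dict of Python paths can be sketched as follows. This is a minimal sketch under stated assumptions: `FakeID` and `fake_bpy` are hypothetical stand-ins that let the example run outside Blender, and `serialise_context` and `deserialise_context` are illustrative names, not existing Blender functions.

```python
from types import SimpleNamespace


class FakeID:
    """Hypothetical stand-in for a datablock that knows its own Python path."""

    def __init__(self, collection, name):
        self.collection = collection
        self.name = name

    def __repr__(self):
        return f"bpy.data.{self.collection}[{self.name!r}]"


cube = FakeID("objects", "Cube")
scene = FakeID("scenes", "Scene")

# Minimal fake of the bpy module so that paths can be resolved without Blender.
fake_bpy = SimpleNamespace(
    data=SimpleNamespace(objects={"Cube": cube}, scenes={"Scene": scene})
)


def serialise_context(context):
    """Map each context member to the Python path of the object it refers to."""
    return {member: repr(obj) for member, obj in context.items()}


def deserialise_context(paths, bpy_module):
    """Resolve each stored path back to an object (eval only on trusted logs)."""
    return {
        member: eval(path, {"bpy": bpy_module}) for member, path in paths.items()
    }


context = {"scene": scene, "active_object": cube}
stored = serialise_context(context)
restored = deserialise_context(stored, fake_bpy)
```

The round trip only works for members whose path is complete and unambiguous, which is why missing RNA paths block this approach.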

The advantages of implementing replay at layer 3 rather than at the other layers are that a) replay is robust and does not depend on the configuration of the Blender windows, b) replay can be done from the terminal, c) the replay log is interpretable without actually replaying it (i.e. it can be visualised and analysed on its own), and d) the replay log can be adapted and then replayed.

Benefits to Blender

The benefits to Blender are here divided into three categories.

The benefits of a log system for user actions at layer 3 are the following.

- Visualisations of the process can be made that are not based on the UI and can be used to give teachers, fellow blenders, or colleagues insight into how assets were made [1, 2, 3].

- Analyses of the action sequences (the creative process) can be made to describe the content at a high level. This could be useful in asset management systems where content can be retrieved based on queries about how the assets were made (e.g. all assets where in the creation process a character X was linked and then animated).

The benefits of a macro system are the following.

- A sequence of operations that should be applied to several objects could be easily prototyped through the UI on one object and then applied identically to the other objects.
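The macro idea can be sketched in plain Python. The sketch below assumes that a macro is simply an ordered list of operations with their arguments; the dict-based objects and the `translate`, `scale`, and `apply_macro` functions are hypothetical and do not reflect Blender's actual macro code.

```python
def translate(obj, offset):
    """Move an object by the given offset."""
    obj["location"] = tuple(a + b for a, b in zip(obj["location"], offset))


def scale(obj, factor):
    """Scale an object uniformly."""
    obj["scale"] = tuple(s * factor for s in obj["scale"])


def apply_macro(macro, targets):
    """Apply every recorded step, in order, to each target object."""
    for target in targets:
        for operation, kwargs in macro:
            operation(target, **kwargs)


# Steps as they might be recorded while working on a single object.
macro = [
    (translate, {"offset": (1.0, 0.0, 0.0)}),
    (scale, {"factor": 2.0}),
]

objects = [
    {"name": "A", "location": (0.0, 0.0, 0.0), "scale": (1.0, 1.0, 1.0)},
    {"name": "B", "location": (5.0, 0.0, 0.0), "scale": (1.0, 1.0, 1.0)},
]
apply_macro(macro, objects)
```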

The benefits of a full replay system at layer 3 are the following.

- The undo system can be based on an efficient representation of prescriptive diffs whereby an undo state is retrieved by reapplication of the action sequence.

- The undo system can be implemented as a Directed Acyclic Graph (DAG) where several separate creative paths can be explored and later on merged. Additionally, the DAG would allow for operations to be changed at an earlier stage without undoing the operations that were performed after it [4].
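A minimal sketch of undo by reapplication, assuming each action is a pure function from one document state to the next. The names `HistoryNode`, `rebuild_state`, and `set_value` are hypothetical. The sketch covers branching only, so the history here is a tree; merging paths would need nodes with more than one parent.

```python
class HistoryNode:
    """One action in the history; the root node carries no action."""

    def __init__(self, action=None, parent=None):
        self.action = action
        self.parent = parent
        self.children = []
        if parent is not None:
            parent.children.append(self)

    def path_from_root(self):
        """Return the actions leading from the root to this node, in order."""
        node, path = self, []
        while node.parent is not None:
            path.append(node.action)
            node = node.parent
        return list(reversed(path))


def rebuild_state(node, initial_state):
    """Retrieve a state by replaying the action sequence from the root."""
    state = dict(initial_state)
    for action in node.path_from_root():
        state = action(state)
    return state


def set_value(key, value):
    """Build an action that sets one key without mutating the old state."""
    return lambda state: {**state, key: value}


root = HistoryNode()
a = HistoryNode(set_value("x", 1), root)
b = HistoryNode(set_value("y", 2), a)
c = HistoryNode(set_value("y", 5), a)  # alternative branch starting from a

state_b = rebuild_state(b, {})
state_c = rebuild_state(c, {})
undone = rebuild_state(b.parent, {})  # undo the last action on branch b
```

Only the actions are stored, not the intermediate states, which is the memory saving the first bullet refers to; the cost is the time needed to replay.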

Deliverables

Implementing a full replay system is too involved to be achieved in the GSoC timeframe. However, what I feel can be achieved is the following.

- Finish the macro system.

- Add many of the currently missing RNA paths.

- Develop a method of serialising bContext structs to Python dictionaries and vice versa.

- Develop a log system for user actions at the level of layer 3.

- Develop a method of saving the action log in the blend files.

- Develop simple prototype visualisations of action logs.

- Address or document the corner cases in Blender that do not follow the translation of actions through the different layers in Figure 1.

- Tentative design documentation (requirements) for a replay system.

Project Schedule

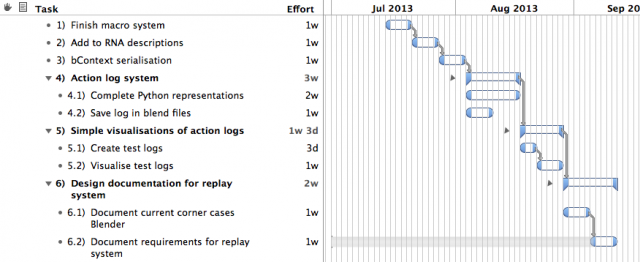

I am available for development from the start of July until mid-September.

Figure 2. Tentative timeline.

Bio

I’m a 27-year-old PhD student from the Cognitive Science Research Group at Queen Mary, University of London. I was born in the Netherlands and lived in Barcelona for about three years before moving to London in September 2011.

My background is in music technology and composition, which I studied at the Utrecht School of the Arts. Throughout my studies I focused on algorithmic composition and on music systems that adapt music to the situation in interactive settings such as video games. I then moved on to work in Music Information Retrieval, which involves analysing audio signals and using that analysis to compare and find music.

In September 2011 I started my PhD studies at Queen Mary and there I focus on how representations of creative processes can be brought into the domain of automated reasoning. This involves amongst other things capturing the actions that led to a creative work and then making this data accessible for people through visualisations and for machines through semantic web technology.

I spend my spare time going to concerts, seeing exhibitions, and generally tinkering with tech and art.

Blender experience

I started using Blender around six months ago for simple modelling and animation. I have also used Blender for several laser cutting projects (employing Freestyle’s SVG export), but it proved rather difficult to achieve accurate and easy-to-use output.

Rationale for applying

My PhD research is based on Blender, so I have a vested interest in working with the Blender Foundation and contributing my development work back to the project. This is because a) the outcome of my research (and possibly the data collection) would be more widely spread and the development efforts would not be lost, and b) collaboration with the Blender Foundation would give me feedback from users and developers, which would help ensure that I do not miss opportunities in my research. Also, I greatly enjoy working with Blender and intend to work with it extensively throughout my entire PhD period.

I feel I am a good candidate for GSoC because I am an experienced developer who has participated in many non-trivial projects ranging from microcontroller C programming to distributed web service design and Java/OpenGL video games. A selection of these projects is presented in the next section.

I’ve submitted two patches for Blender. The first patch DRYs out the operator redo panel so it can be reused in several editors [#35018] and the second patch synchronises the undo stacks and the operator stack so that the operator redo panel shows the last operator upon an undo or redo [#35059].

Development experience

My programming experience is extensive. I have programmed in C/C++, Java, Python, Clojure, Matlab, Prolog, Max/MSP/Jitter, SuperCollider, Common LISP, JavaScript, and Cocoa/Objective-C.

Selected Projects

Hackney House Ecosystem

The Hackney House Ecosystem project was an interactive audiovisual installation consisting of three networked touch tables. Visitors to the Hackney House could play a simple game with the aim of making the ecosystem grow.

- Description and pictures - http://ack-err.net/projects/10/

- Code - https://github.com/theleadingzero/QMHH

Floating Point

Floating Point was an interactive installation that I collaborated on with United Visual Artists. It was commissioned by Nike and was on display for a week in a park in Hackney, London in the summer of 2012. The installation allowed participants to create music by jumping on three massive trampolines.

- Video - http://vimeo.com/47902125

- Code is available upon request.

Freesound

Freesound is an online community of sound professionals and enthusiasts who upload and share sounds under Creative Commons licenses. The website currently has over a million users and over 150,000 sounds. My work on Freesound consisted of managing the release of a completely rewritten and redesigned version of the website, which was launched in September 2011.

- Freesound website - http://www.freesound.org

- Code - https://github.com/MTG/freesound

Canoris

Canoris was a set of web services for the analysis and synthesis of sound and music. It integrated many of the Music Technology Group's technologies and aimed for as open an exchange of technologies as possible with any interested party. As the Canoris project leader I was responsible for its inception, design, implementation, management, and promotion. The project was launched in October 2010 at the Music Hack Day Barcelona. The project finished in mid-2011.

- Music Technology Group website - http://mtg.upf.edu/

- Code is closed source, but several API client libraries remain on Github - https://github.com/canoris

Freesound and Canoris were built on top of several proprietary C++ libraries that extract information from music and sound signals and calculate the similarity between these signals.

Come el Coco

Reflection and introspection are powerful ways of making sense of daily experiences and of learning. Reflecting on your creative process also helps improve your artistic methods and, hopefully, the creative output as well. Come el Coco was a first exploration of how to build authoring tools that capture the creation process and visualise it so that it can be reflected upon.

- Videos - http://ack-err.net/projects/2/

References

1. Nakamura, T. and Igarashi, T. An application-independent system for visualizing user operation history. ACM (2008), 23–32.

2. Grossman, T., Matejka, J., and Fitzmaurice, G. Chronicle: capture, exploration, and playback of document workflow histories. ACM (2010).

3. Mendels, P., Frens, J., and Overbeeke, K. Freed: a system for creating multiple views of a digital collection during the design process. ACM (2011), 1481–1490.

4. Chen, H.-T., Wei, L.-Y., and Chang, C.-F. Nonlinear revision control for images. ACM Press (2011), 1.

Publications

- Akkermans, V., Wiggins, G.A., and Sandler, M.B. The Observable Creative Process and Reflection-on-action. In CHI 2013 workshop on evaluation methods for creativity support environments (2013).

- Akkermans, V., Font, F., Funollet, J., de Jong, B., Roma, G., Togias, S., and Serra, X. Freesound 2: An Improved Platform for Sharing Audio Clips. In International Society for Music Information Retrieval Conference (ISMIR 2011) (2011).

- Akkermans, V. Semantic-Aware Authoring Applications. Master's thesis, available upon request (2009).

- Akkermans, V., Serrà, J., and Herrera, P. Shape-based spectral contrast descriptor. In Proceedings of the 2009 Sound and Music Computing conference (2009).

- Akkermans, V. and Nispen Tot Pannerden, T. van. Two Network Installations: “1133” & “Computer Voices”. In Proceedings of the 2008 International Computer Music Conference (2008).