User:AlexK/Gsoc2013/scheduler

Scheduling

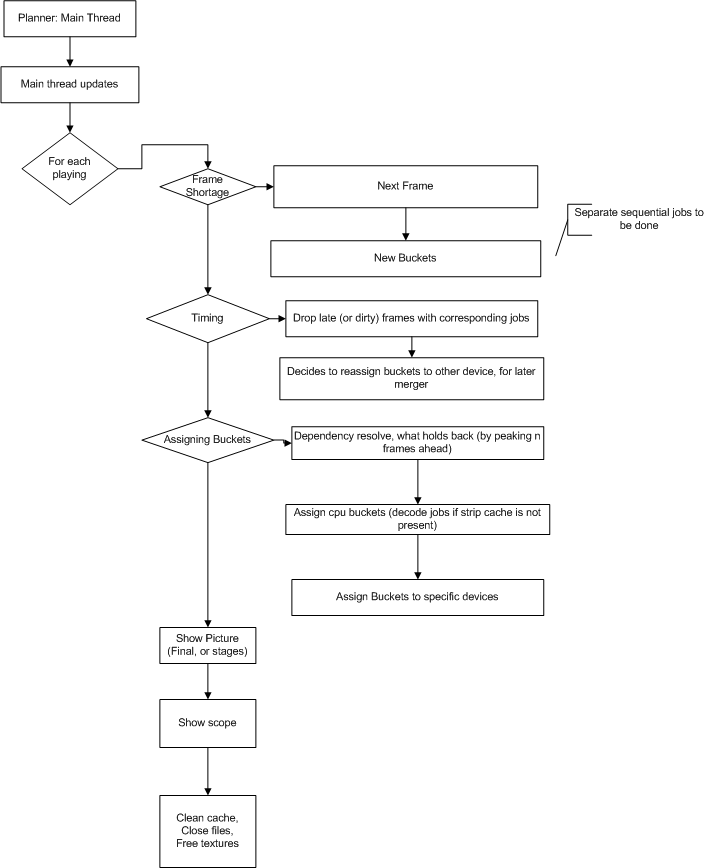

Planner

The main part of scheduling is assigning tasks ("buckets" in this case). The planner's job is to decide which jobs go to which device while trying to balance the workload. To do this, it peeks at the next n frames and decides what has to be done (and what can be done at all). For example, if the engine starts falling behind, the planner drops some work-in-progress frames (and their associated buckets) in order to focus resources on other frames. The planner also issues memory transfer commands (to another device). But its main task is to assign buckets to devices (or to device groups, such as CPU threads) according to their load.
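A minimal sketch of the load-balancing idea, assuming each bucket has a known cost estimate, each device a known speed, and each frame a delivery deadline (all hypothetical; bucket dependencies are ignored here). Frames are planned in delivery order, each bucket goes to the device that would finish it earliest, and a frame that cannot meet its deadline is rolled back and dropped as a whole.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Bucket:
    name: str
    frame: int
    cost: float  # hypothetical estimated work units

@dataclass
class Device:
    name: str
    speed: float  # hypothetical work units per second
    load: float = 0.0  # seconds of work already queued on this device
    queue: List[str] = field(default_factory=list)

def plan(buckets: List[Bucket], devices: List[Device], deadlines: Dict[int, float]) -> List[int]:
    """Assign buckets frame by frame; return the frames that had to be dropped."""
    by_frame: Dict[int, List[Bucket]] = {}
    for b in buckets:
        by_frame.setdefault(b.frame, []).append(b)
    dropped = []
    # Earliest delivery time first.
    for frame in sorted(by_frame, key=lambda f: deadlines[f]):
        saved = [(d.load, len(d.queue)) for d in devices]
        for b in by_frame[frame]:
            # Greedy balancing: pick the device that would finish this bucket first.
            best = min(devices, key=lambda d: d.load + b.cost / d.speed)
            best.load += b.cost / best.speed
            best.queue.append(b.name)
            if best.load > deadlines[frame]:
                # Falling behind: undo this frame's assignments and drop the frame.
                for d, (load, n) in zip(devices, saved):
                    d.load = load
                    del d.queue[n:]
                dropped.append(frame)
                break
    return dropped
```

With a CPU of speed 1 and a GPU of speed 2, two cost-2 buckets for frame 1 are spread over both devices, while a cost-10 bucket for frame 2 with a 3-second deadline cannot fit anywhere, so frame 2 is dropped.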

The planner should run in the main thread (but, if it becomes necessary, the "nextframe" function can be offloaded to another thread). It keeps track of the current cache (like the strip cache) and of the frames. For example, textures are created in advance and shown later at a specific moment.

Because the planner runs in the main thread, it also handles the calls into the rest of Blender that are not thread-safe.
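One common way to route thread-unsafe calls to the main thread is a request queue that the main loop drains. The sketch below assumes that pattern; `call_on_main_thread` and `drain_main_thread_calls` are hypothetical names, not Blender functions. A worker enqueues a call and blocks until the planner's loop has executed it.

```python
import queue
import threading

# Requests from worker threads that must run on the main (planner) thread.
main_thread_calls = queue.Queue()

def call_on_main_thread(fn, *args):
    """Called from a worker: enqueue fn(*args) and wait for the main thread to run it."""
    done = threading.Event()
    box = {}

    def run():
        try:
            box["value"] = fn(*args)
        except Exception as exc:  # hand the error back to the waiting worker
            box["error"] = exc
        finally:
            done.set()

    main_thread_calls.put(run)
    done.wait()
    if "error" in box:
        raise box["error"]
    return box["value"]

def drain_main_thread_calls():
    """Called by the planner on each iteration of its main-thread loop."""
    while True:
        try:
            call = main_thread_calls.get_nowait()
        except queue.Empty:
            return
        call()
```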

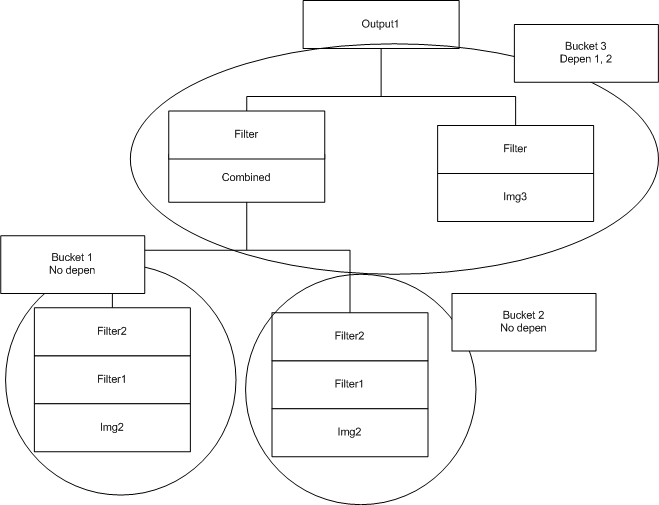

Bucket

Bucket: an ordered collection of tasks with multiple inputs and a single output. A bucket can depend on other buckets. It is also the smallest unit that is assigned to a device. Each bucket contains smaller tasks, for example:

- get image of the strip from cache

- apply brightness

- apply color balance

- apply sharpening
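A minimal sketch of a bucket as a data structure, assuming images are plain lists of floats and tasks are functions from image to image (both hypothetical stand-ins). The bucket merges the outputs of the buckets it depends on into one input, runs its tasks in order, and stores a single output.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

Image = List[float]  # hypothetical stand-in: one float per pixel

@dataclass
class Bucket:
    name: str
    load: Callable[[List[Image]], Image]   # merges the inputs into one image
    tasks: List[Callable[[Image], Image]]  # applied in order
    depends_on: List["Bucket"] = field(default_factory=list)
    output: Optional[Image] = None

    def is_ready(self) -> bool:
        """A bucket can run once every bucket it depends on has produced output."""
        return all(dep.output is not None for dep in self.depends_on)

    def run(self) -> Image:
        if not self.is_ready():
            raise RuntimeError(f"{self.name}: dependencies not finished")
        img = self.load([dep.output for dep in self.depends_on])
        for task in self.tasks:
            img = task(img)
        self.output = img  # single output
        return img
```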

The code of each task is device-specific. Moreover, a task can be subdivided into smaller sub-tasks:

- brightness+contrast

  - add brightness

  - add contrast
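A sketch of device-specific task code, assuming a hypothetical registry keyed by (task, device kind). Here a device kind (the "gpu" entry, purely illustrative) may provide a fused brightness+contrast kernel, while a device without one falls back to running the sub-tasks in sequence. Both paths produce the same pixels.

```python
from typing import Callable, Dict, List, Tuple

Kernel = Callable[[List[float], dict], List[float]]

# Hypothetical registry: (task name, device kind) -> implementation.
KERNELS: Dict[Tuple[str, str], Kernel] = {}
# Hypothetical decomposition of a task into sub-tasks.
SUBTASKS = {"brightness+contrast": ["add brightness", "add contrast"]}

def kernel(task: str, device: str):
    def register(fn: Kernel) -> Kernel:
        KERNELS[(task, device)] = fn
        return fn
    return register

@kernel("brightness+contrast", "gpu")
def brightness_contrast_fused(px, p):
    # One pass over the pixels doing both operations.
    return [(v + p["brightness"] - 0.5) * p["contrast"] + 0.5 for v in px]

@kernel("add brightness", "cpu")
def add_brightness_cpu(px, p):
    return [v + p["brightness"] for v in px]

@kernel("add contrast", "cpu")
def add_contrast_cpu(px, p):
    return [(v - 0.5) * p["contrast"] + 0.5 for v in px]

def run_task(task: str, device: str, px: List[float], params: dict) -> List[float]:
    """Use the device's own kernel if there is one, else run the sub-tasks in order."""
    fn = KERNELS.get((task, device))
    if fn is not None:
        return fn(px, params)
    if task in SUBTASKS:
        for sub in SUBTASKS[task]:
            px = run_task(sub, device, px, params)
        return px
    raise LookupError(f"no {device} implementation for {task!r}")
```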

A bucket can be specific to a device group, for example decoding frames on the CPU.

Each bucket is associated with a frame (or multiple frames). If a frame is dropped, the buckets associated with it are dropped too.

A bucket can be associated with multiple frames (for example, video decoding and sound decoding). In that case, its result goes to cache 2. Note: special care should be taken when dropping frames that share such buckets.
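One way to take that care is reference counting, sketched here under that assumption (`FrameTable` is a hypothetical name). Each bucket remembers which frames still need it; dropping a frame cancels only the buckets that no remaining frame needs.

```python
from collections import defaultdict

class FrameTable:
    """Hypothetical bookkeeping of which buckets belong to which frames."""

    def __init__(self):
        self.buckets_of = defaultdict(set)  # frame -> bucket names
        self.frames_of = defaultdict(set)   # bucket name -> frames that need it

    def attach(self, bucket, *frames):
        for f in frames:
            self.buckets_of[f].add(bucket)
            self.frames_of[bucket].add(f)

    def drop_frame(self, frame):
        """Drop a frame and return the buckets that no live frame needs any more."""
        cancelled = []
        for bucket in self.buckets_of.pop(frame, set()):
            owners = self.frames_of[bucket]
            owners.discard(frame)
            if not owners:  # a shared bucket survives while another frame needs it
                del self.frames_of[bucket]
                cancelled.append(bucket)
        return sorted(cancelled)
```

Dropping frame 10 when a decoding bucket is shared by frames 10 and 11 cancels only frame 10's own buckets; the decoding bucket is cancelled once frame 11 is dropped as well.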

Cache

There are 3.5 possible cache levels:

- cache 0 - ready output

- cache 1 - a strip with all filters applied

- cache 1.x - a strip with some of its modifiers already applied (useful when only the last effects are changed)

- cache 2 - the decoded strip

For now, we should support cache levels 0 and 2.

Cached images can differ in quality and size.
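A sketch of the two levels planned for now, assuming level 0 is keyed by frame, level 2 by (strip, frame), and that "size" can be reduced to an image width (all hypothetical). A lookup returns the smallest cached image that is still large enough for the request.

```python
class Cache:
    """Sketch of cache levels 0 (ready output) and 2 (decoded strip)."""

    def __init__(self):
        # level -> key -> {width: image}
        self.levels = {0: {}, 2: {}}

    def put(self, level, key, width, image):
        self.levels[level].setdefault(key, {})[width] = image

    def get(self, level, key, width):
        """Return the smallest cached image at least `width` wide, or None."""
        sizes = self.levels[level].get(key, {})
        usable = [w for w in sizes if w >= width]
        return sizes[min(usable)] if usable else None
```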

Time Segments

The timeline is divided into time segments. Strips and their effects are attached to one or more time segments, which makes them easy to search.

Cache information is also associated with time segments.
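A sketch of a time-segment index, assuming fixed-length segments (`SEGMENT_LENGTH` is a hypothetical value). A strip is attached to every segment it overlaps, so finding the strips at a frame only scans one segment instead of the whole timeline. Per-segment cache information could be stored alongside in the same way.

```python
from collections import defaultdict

SEGMENT_LENGTH = 100  # hypothetical: frames per time segment

class Timeline:
    def __init__(self):
        self.segments = defaultdict(list)  # segment index -> [(start, end, strip)]

    def add_strip(self, name, start, end):
        """Attach a strip covering frames [start, end) to every segment it overlaps."""
        first = start // SEGMENT_LENGTH
        last = (end - 1) // SEGMENT_LENGTH
        for seg in range(first, last + 1):
            self.segments[seg].append((start, end, name))

    def strips_at(self, frame):
        """Strips visible at `frame`, found by scanning a single segment."""
        seg = self.segments.get(frame // SEGMENT_LENGTH, [])
        return [name for start, end, name in seg if start <= frame < end]
```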

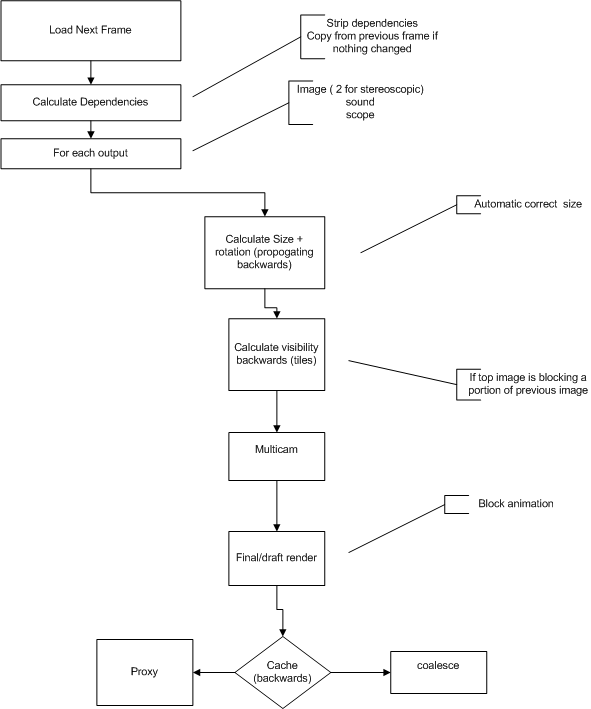

Next frame

This function peeks at the next frame.

It supports multiple outputs, which are independent of each other: for example, two stereoscopic images and sound. Output targets such as scopes, which depend on an output, can also be added.
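The outputs and the targets that depend on them form a small dependency graph. A sketch, assuming the hypothetical target names below, uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to order them so that independent outputs come before the scopes that read from them.

```python
from graphlib import TopologicalSorter

# Hypothetical output graph: target -> outputs it depends on.
OUTPUTS = {
    "left eye": set(),
    "right eye": set(),
    "sound": set(),
    "waveform scope": {"left eye"},  # a scope reads from an output
}

def render_order(graph):
    """Independent outputs first; dependent targets after their source output."""
    return list(TopologicalSorter(graph).static_order())
```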

We propagate backwards from the outputs, determining for each strip whether a smaller size can be used and whether the strip (or portions of it) is visible at all. Rendering can be optimized based on that. Color depth is a global parameter (to ease conversion). Each "kernel" on the CPU and GPU will be compiled for different data types (note: half-precision floats are not supported on the CPU).
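A simplified sketch of the backward pass, assuming each strip is described only by its channel, the fraction of the output width it covers, and whether it is opaque (hypothetical fields). Walking from the top channel down, it records the width each strip actually needs and stops once an opaque full-frame strip hides everything below it.

```python
import math
from dataclasses import dataclass

@dataclass
class Strip:
    name: str
    channel: int            # higher channels are drawn on top
    screen_fraction: float  # hypothetical: fraction of output width the strip covers
    opaque: bool

def plan_strips(strips, output_width):
    """Return {strip name: required width}, omitting strips that are fully hidden."""
    needed = {}
    for s in sorted(strips, key=lambda s: s.channel, reverse=True):
        # A strip shown at a quarter of the output width needs only a quarter of the pixels.
        needed[s.name] = math.ceil(output_width * s.screen_fraction)
        if s.opaque and s.screen_fraction >= 1.0:
            break  # everything below is covered, so it need not be rendered
    return needed
```

For a picture-in-picture strip covering a quarter of a 1920-pixel output, placed over a full-frame opaque strip, only 480 pixels of width are requested for the inset, and any strip in a lower channel is skipped.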

We first try to resolve inputs from the caches (at the correct size); if that fails, we fall back to proxies, or to the real footage.
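The fallback order can be sketched as a chain of lookups, assuming the cache and proxies are dictionaries keyed by (strip, frame, width) and that decoding the original footage is a callable (all hypothetical). The cheapest available source wins.

```python
def resolve_source(strip, frame, width, cache, proxies, decode_footage):
    """Return (origin, image), trying cache, then proxy, then the real footage."""
    key = (strip, frame, width)
    if key in cache:
        return "cache", cache[key]
    if key in proxies:
        return "proxy", proxies[key]
    # Most expensive path: decode the original media.
    return "footage", decode_footage(strip, frame)
```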

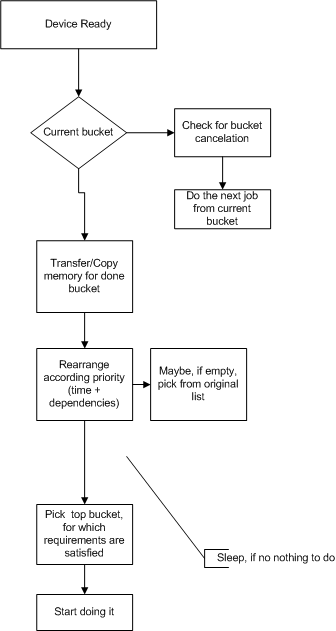

Devices

Each device simply tries to finish its current bucket (unless it is canceled). Then it picks the next available bucket (one whose dependencies are all satisfied) with the highest priority, based on its delivery time and on whether other buckets depend on it.
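A sketch of that selection, assuming a hypothetical priority of earliest delivery time first, with ties broken in favour of buckets that more other buckets wait on. Buckets whose dependencies are not finished are never considered.

```python
from dataclasses import dataclass, field

@dataclass
class Bucket:
    name: str
    deadline: float                          # delivery time of its frame
    deps: set = field(default_factory=set)   # names of buckets it waits for
    dependents: int = 0                      # how many buckets wait for this one

def next_bucket(pending, finished):
    """Remove and return the ready bucket with the highest priority, or None."""
    ready = [b for b in pending if b.deps <= finished]
    if not ready:
        return None
    best = min(ready, key=lambda b: (b.deadline, -b.dependents))
    pending.remove(best)
    return best
```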

Devices can be organized in groups, such as CPU threads, since they share memory. Bucket queues can then be kept per device group instead of per device. Also, the first bucket of a frame should not be assigned until some device is free to take it.
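A sketch of a per-group queue, assuming a CPU group modelled as threads that share one `queue.Queue` (`run_group` and `work` are hypothetical names). Because each device pulls a bucket only when it becomes free, no bucket is bound to a busy device in advance.

```python
import queue
import threading

def run_group(buckets, n_devices, work):
    """n_devices threads share one bucket queue; returns {bucket: (device, result)}."""
    q = queue.Queue()
    for b in buckets:
        q.put(b)
    results = {}
    lock = threading.Lock()

    def device(idx):
        while True:
            try:
                b = q.get_nowait()  # taken only when this device is free
            except queue.Empty:
                return  # no more work for this device
            out = work(b)
            with lock:
                results[b] = (idx, out)

    threads = [threading.Thread(target=device, args=(i,)) for i in range(n_devices)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```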