User:Dfelinto/Viewport/Sprint-16.09.23


Introduction

The original Blender 2.8 design brought a workflow-based usability mantra to the conversation. Within those constraints, we went over the ideal pipeline for a few well-defined workflows.

Here is an excerpt from a recent viewport development design document that is still valid for this proposal and summarizes the principles presented here:

“There’s a fundamental difference between an object’s material appearance and the way that object is displayed in the 3D view. Materials are part of your data, just as geometry is. One way to display is full material preview. When you want a high quality rendering of a lit scene, or you are working on the materials themselves, this is the best choice. There are other ways to view your data, useful in different situations or stages in your workflow.

Answer the question: what about your object do you need to see right now? Maybe the polygonal structure of your geometry is important — especially true while modeling! In this case you want to see the faces and edges clearly. Lighting and materials on the surface are less important and more likely a distraction. Sometimes the shape or form of an object is most important — sculpting is the best example. Individual polygon faces are less important, edges and materials are distracting. Or draw in solid black to discern your object or character’s silhouette” (merwin, June 2016)

To connect our discussions with artists to the technology we are bringing to Blender 2.8, we introduced a few new concepts: tasks (individual components of a workflow), plates (rendering techniques and interactions within a viewport) and minimum viable products (a few main early deliverables of the development cycle). Each concept is explained in its own section.

From those discussions with a small group of artists and developers, we came up with the present design for the viewport. The ideas presented here will evolve during the project, especially once they are confronted with real-world implementation challenges.

Three pillars of this project

Performance and Responsiveness

- More responsive navigation (fast drawing)

- More responsive to user actions (low latency editing, updates, selection)

- Handle more complex objects / scenes with ease

- Work on a wide range of hardware

- Be power efficient (update screen only when needed)
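As a rough illustration of "update screen only when needed", here is a minimal Python sketch of on-demand redrawing with a dirty flag. `ViewportRedrawScheduler`, `tag_redraw` and `tick` are hypothetical names, not Blender API; a real viewport would tie them to its event loop and buffer swap.

```python
from dataclasses import dataclass


@dataclass
class ViewportRedrawScheduler:
    """Hypothetical scheduler: draws a frame only when something changed."""
    dirty: bool = True      # the first frame must always be drawn
    frames_drawn: int = 0

    def tag_redraw(self) -> None:
        # Would be called by navigation, editing or selection handlers.
        self.dirty = True

    def tick(self) -> bool:
        """Called once per display refresh; returns True if a frame was drawn."""
        if not self.dirty:
            return False            # idle: nothing changed, skip all GPU work
        self.frames_drawn += 1      # stand-in for the actual draw call
        self.dirty = False
        return True


scheduler = ViewportRedrawScheduler()
results = [scheduler.tick(), scheduler.tick()]  # first draws, second idles
scheduler.tag_redraw()
results.append(scheduler.tick())
print(results, scheduler.frames_drawn)  # [True, False, True] 2
```

The idle `tick` is where the power saving comes from: with no pending change, no frame is produced at all.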

Task appropriate shaders

- Higher quality visuals (real-time PBR, rich material preview, solid outlines, shadows)

- Different shading modes for different objects

- Improve usability (visual cues, showing selection, task-centric drawing techniques)

Integration with external engines

The new viewport will allow for more accurate preview of the external engine your project is targeting. Each engine may have different material and shading models, as well as unique screen effects. Whether you’re making assets for a movie or for a game, the idea is to see what it’s going to look like while you work on it.

- Offline renderers: Cycles (i), Luxrender (ii), Renderman (ii)

- Real-time renderers: Blender Internal (iii), p3d (ii), Sketchfab (ii)

- Game engines: Unreal (ii), Unity (ii), CryEngine (ii), Blend4Web (ii), Armory (ii), Godot (ii)

(i) For Cycles support we will use the PBR implementation docs by Clément Foucault as a reference [link].

(ii) Proper export of assets (with materials) to your engine of choice is not strictly part of the viewport project, and neither is the specific support of its shaders. The project will support Python addons so other developers can work on those integrations.

(iii) We intend to retire Blender Internal as a standalone offline renderer and have the real-time viewport take over its duties. We plan to support its material system and, whenever possible, seamlessly convert existing Blender files that rely on the old engine to equivalent OpenGL shaders.
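To illustrate the add-on route from note (ii), the sketch below shows one possible shape for an engine-preview registry. Everything in it (`register_engine_preview`, `preview_parameters`, the `ToyEngine` adapter and its roughness clamp) is hypothetical and not part of Blender's Python API; it only shows how an add-on could plug engine-specific material rules into the viewport without the viewport knowing about each engine.

```python
from typing import Callable, Dict

# Hypothetical: an adapter turns generic material parameters into the
# parameters a given engine's preview would use.
ShadingAdapter = Callable[[dict], dict]
_ENGINE_ADAPTERS: Dict[str, ShadingAdapter] = {}


def register_engine_preview(name: str, adapter: ShadingAdapter) -> None:
    """Hypothetical entry point an add-on would call when it is enabled."""
    if name in _ENGINE_ADAPTERS:
        raise ValueError(f"engine preview {name!r} already registered")
    _ENGINE_ADAPTERS[name] = adapter


def preview_parameters(engine: str, material: dict) -> dict:
    # Without an add-on for this engine, show the material unchanged.
    adapter = _ENGINE_ADAPTERS.get(engine)
    return adapter(material) if adapter else dict(material)


def toy_engine_adapter(material: dict) -> dict:
    """Made-up engine rule: roughness is clamped to [0.05, 1.0]."""
    out = dict(material)
    out["roughness"] = max(0.05, min(1.0, material.get("roughness", 0.5)))
    return out


register_engine_preview("ToyEngine", toy_engine_adapter)
print(preview_parameters("ToyEngine", {"roughness": 0.0}))  # {'roughness': 0.05}
print(preview_parameters("Other", {"roughness": 0.0}))      # {'roughness': 0.0}
```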

Tasks

A task is an individual step within a larger workflow. For example, the texture painting workflow can be split into a few atomic tasks, such as painting a texture channel, checking the result combined with the other maps, and previewing a final render.

Each task also has a mode associated with it (e.g., sculpting, painting, editing, …). However, the same mode (e.g., mesh edit) may be required for different workflows and tasks (e.g., retopology and asset modeling).

Even though we want to support customization, we will build a pipeline with well-defined presets for each task, based on artists’ feedback. The list of tasks to be supported can be fleshed out during the upcoming usability summit.

That said, a few workflows will be chosen for the initial deliverables; they are listed here as the initial minimum viable products.

Switching between tasks should be as simple and fast as picking one from a pie menu.
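The relationship between tasks, modes and plate presets can be sketched as a small lookup table. This is a hypothetical Python sketch: the task names, mode identifiers and plate names are placeholders, and `switch_task` stands in for whatever a pie-menu entry would call. Note how retopology and asset modeling share a mode but not a plate stack.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Task:
    name: str
    mode: str                # hypothetical mode identifier
    plates: Tuple[str, ...]  # preset plate stack, bottom to top


TASKS: Dict[str, Task] = {
    "asset_modeling": Task("asset_modeling", "EDIT_MESH",
                           ("studio_light", "clay", "front_wires", "tool_ui")),
    "retopology": Task("retopology", "EDIT_MESH",
                       ("clay", "depth_wires", "tool_ui")),
    "sculpting": Task("sculpting", "SCULPT", ("matcap", "tool_ui")),
}


class Viewport:
    def __init__(self) -> None:
        self.mode = "OBJECT"
        self.plates: Tuple[str, ...] = ()

    def switch_task(self, task_name: str) -> None:
        # One pie-menu pick sets both the mode and the plate preset.
        task = TASKS[task_name]
        self.mode = task.mode
        self.plates = task.plates


vp = Viewport()
vp.switch_task("asset_modeling")
vp.switch_task("retopology")  # same mode, different plate stack
print(vp.mode, vp.plates)     # EDIT_MESH ('clay', 'depth_wires', 'tool_ui')
```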

Plates

Different tasks require different visuals. For example, for layout you want to see the scene lighting, depth of field, shadows and reflections in real time. For asset modeling, you may need a basic studio light setup, polygon edges, and special edges such as sharp edges or UV seams.

Each of those individual components (depth of field, studio light, front wires, …) is called a plate. Depending on the plate, plates are composited in a stack or processed in parallel. If you want the edit object to be shown with a fully lit (PBR) shader, that’s a plate. If on top of this we have a highlight outline for the edit object, that’s another plate. If all the other objects are shown in a simplified representation (no subsurf) with clay shading, that’s another plate. If we have depth of field in the viewport, that’s yet another plate.
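One way to decide what goes in a stack versus in parallel is to treat plates as a dependency graph: a plate that reads another plate’s output must wait for it, while independent plates can run side by side. The sketch below groups plates into levels with Kahn-style topological sorting. The plate names and their dependencies are illustrative assumptions based on the example above, not a fixed design, and `schedule_plates` is a hypothetical helper.

```python
from typing import Dict, List, Set


def schedule_plates(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group plates into levels. Every plate in a level depends only on
    plates in earlier levels, so plates within one level could run in
    parallel. deps maps each plate to the plates whose output it reads;
    every dependency must itself be a key of deps."""
    remaining = {plate: set(d) for plate, d in deps.items()}
    done: Set[str] = set()
    levels: List[List[str]] = []
    while remaining:
        ready = sorted(p for p, d in remaining.items() if d <= done)
        if not ready:
            raise ValueError("cyclic or missing plate dependencies")
        levels.append(ready)
        done.update(ready)
        for plate in ready:
            del remaining[plate]
    return levels


deps = {
    "pbr_edit_object": set(),
    "clay_other_objects": set(),
    "outline_highlight": {"pbr_edit_object"},
    "depth_of_field": {"pbr_edit_object", "clay_other_objects"},
}
print(schedule_plates(deps))
# [['clay_other_objects', 'pbr_edit_object'], ['depth_of_field', 'outline_highlight']]
```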

A key point is that plates are drawn independently of each other. Manipulator widgets or selection outlines don’t need to know whether they’re being drawn atop a simple solid-shaded scene or a full Cycles-rendered scene. Separating all our drawing techniques into independent plates allows us to mix, match and combine techniques to assist the task at hand. On the developer side, this gives us markedly cleaner and easier-to-maintain drawing code. New visuals can be implemented with little worry about interfering with how other plates are drawn.
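The independence argument can be shown with a toy compositor: each plate only answers "what color and coverage do I produce here?", and the Porter-Duff "over" operator (premultiplied alpha) stacks the results. `SolidPlate`, `OutlinePlate` and `composite` are hypothetical names; a real implementation would work on GPU buffers rather than per-pixel Python calls.

```python
from typing import Protocol, Sequence, Tuple

RGBA = Tuple[float, float, float, float]  # premultiplied alpha


def over(top: RGBA, bottom: RGBA) -> RGBA:
    """Porter-Duff 'over' for premultiplied colors."""
    k = 1.0 - top[3]
    return tuple(t + k * b for t, b in zip(top, bottom))


class Plate(Protocol):
    def shade(self, x: int, y: int) -> RGBA: ...


class SolidPlate:
    """Stand-in for any shading plate (clay, PBR, Cycles...)."""
    def __init__(self, color: RGBA) -> None:
        self.color = color

    def shade(self, x: int, y: int) -> RGBA:
        return self.color


class OutlinePlate:
    """Draws an orange outline on column 0; knows nothing about plates below."""
    def shade(self, x: int, y: int) -> RGBA:
        return (1.0, 0.5, 0.0, 1.0) if x == 0 else (0.0, 0.0, 0.0, 0.0)


def composite(stack: Sequence[Plate], x: int, y: int) -> RGBA:
    result: RGBA = (0.0, 0.0, 0.0, 0.0)
    for plate in stack:  # bottom to top
        result = over(plate.shade(x, y), result)
    return result


clay = SolidPlate((0.6, 0.6, 0.6, 1.0))
rendered = SolidPlate((0.2, 0.3, 0.8, 1.0))
outline = OutlinePlate()
# The outline looks the same whichever shading plate is underneath.
print(composite([clay, outline], 0, 0) == composite([rendered, outline], 0, 0))  # True
```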

An extensive list of plates can be found in Appendix I.

Minimum viable products

The viewport project is structured to gather continuous feedback from artists during its development. To balance work on the code infrastructure with showing useful results that artists can jump into right away, we defined one milestone per project pillar.

Each milestone represents the initial code laying the foundation for a pillar’s full implementation, as well as a fully functional working experience for artists. These milestones will be treated as MVPs (minimum viable products) and should illustrate the benefits of the viewport project for a certain workflow.

The MVPs were chosen based on their progressively increasing implementation complexity, their clear correlation with the proposed pillars, and their relevance in an animation studio pipeline.

1. Character modeling

“Performance and responsiveness”

Deliverables:

- Huge performance gain

- Beautiful editing mode

- Groundwork for showing that wireframes are a poor choice for some (if not all) tasks

Plates:

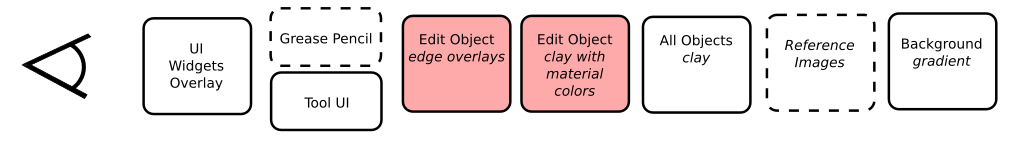

- Optional plates like Grease Pencil are shown here with a dotted outline.

- Tool UI (snapping, selected vertices/edges/faces, …) and proportional editing

- The object being edited will be drawn in a way that stands out visually and informs the modeling task. It is shown here in basic clay for early deliverables.

- Objects not being edited are shown for spatial context but drawn in a way that diminishes focus on them.

- Optional reference image (blueprint, face profile, etc.)
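How exactly non-edited objects should be de-emphasized is left open above. One simple possibility, shown here as an assumption rather than the planned shader, is to blend their color toward the viewport background. `diminish`, its default background color and its `factor` are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def diminish(color: RGB, background: RGB = (0.25, 0.25, 0.25),
             factor: float = 0.6) -> RGB:
    """Linear blend of an object's color toward the viewport background.
    factor=0 keeps the color; factor=1 makes the object match the background."""
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must be in [0, 1]")
    return tuple(c + (b - c) * factor for c, b in zip(color, background))


print(diminish((1.0, 1.0, 1.0), background=(0.0, 0.0, 0.0), factor=0.5))  # (0.5, 0.5, 0.5)
```

A linear blend keeps the silhouettes readable for spatial context while pulling contrast away from objects that are not being edited.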

2. Camera and staging

“Task appropriate shaders”

Deliverables:

- Reliable and controllable lights

- Advanced screen effects (DoF)

- Flat reflection

- High quality playblast previews
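Two of these deliverables have well-known geometric cores. The sketch below computes the thin-lens circle of confusion (the blur diameter a depth-of-field effect needs per pixel) and the 4×4 matrix that mirrors the scene across a plane, which a flat-reflection pass could render through. The function names are hypothetical; the formulas are the standard thin-lens and plane-reflection ones, not code from the viewport project.

```python
from typing import List, Sequence, Tuple


def circle_of_confusion(focal_length: float, f_number: float,
                        focus_distance: float, object_distance: float) -> float:
    """Thin-lens blur-circle diameter on the sensor.
    All distances share one unit (e.g. millimetres)."""
    if focus_distance <= focal_length:
        raise ValueError("focus distance must exceed focal length")
    aperture = focal_length / f_number  # aperture diameter A = f / N
    return (aperture * abs(object_distance - focus_distance) / object_distance
            * focal_length / (focus_distance - focal_length))


def planar_reflection_matrix(normal: Sequence[float], d: float) -> List[List[float]]:
    """Row-major 4x4 matrix mirroring points across the plane n.p = d.
    The normal must be unit length: p' = p - 2 (n.p - d) n."""
    n = normal
    rows = []
    for i in range(3):
        row = [(1.0 if i == j else 0.0) - 2.0 * n[i] * n[j] for j in range(3)]
        row.append(2.0 * d * n[i])  # translation part
        rows.append(row)
    rows.append([0.0, 0.0, 0.0, 1.0])
    return rows


def transform_point(m: List[List[float]], p: Sequence[float]) -> Tuple[float, ...]:
    x, y, z = p
    return tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in m[:3])


print(round(circle_of_confusion(50, 2, 2000, 4000), 4))  # 0.3205
floor = planar_reflection_matrix((0.0, 0.0, 1.0), 0.0)
print(transform_point(floor, (1.0, 2.0, 3.0)))           # (1.0, 2.0, -3.0)
```

For a 50 mm lens at f/2 focused at 2 m, an object at 4 m gets a blur circle of about 0.32 mm on the sensor; objects exactly at the focus distance stay sharp.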

Plates:

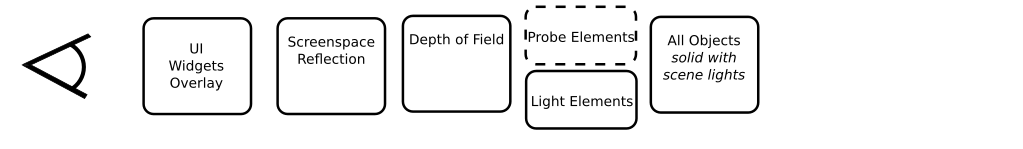

- Light elements (widgets to adjust scene lights)

- Probe elements (to set up cubemap reflections)

- In this example, objects are drawn solid (flat color per material) with scene lights.
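For the "solid (flat color per material) with scene lights" plate, a minimal Lambertian sketch: the material's flat color is multiplied by the sum of each light's intensity weighted by max(0, N·L). This assumes simple directional lights with no shadows or specular term; `shade_solid` and the `(direction_to_light, rgb_intensity)` light layout are hypothetical. The result is left unclamped, as it would be before writing to an HDR buffer.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Sequence[float]) -> Vec3:
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def shade_solid(base_color: Vec3, normal: Vec3,
                lights: List[Tuple[Vec3, Vec3]]) -> Vec3:
    """Lambertian diffuse: flat material color times the summed light
    contributions. Each light is (direction_to_light, rgb_intensity)."""
    n = normalize(normal)
    total = [0.0, 0.0, 0.0]
    for direction, intensity in lights:
        l = normalize(direction)
        n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))  # back-facing -> 0
        for i in range(3):
            total[i] += intensity[i] * n_dot_l
    return tuple(c * t for c, t in zip(base_color, total))


key_light = ((0.0, 0.0, 1.0), (1.0, 1.0, 1.0))
print(shade_solid((0.8, 0.2, 0.2), (0.0, 0.0, 1.0), [key_light]))  # (0.8, 0.2, 0.2)
```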

3. Texture painting

“Integration with external engines”

Deliverables:

- Fantastic-looking shaders

- Smooth pipeline (no need for switching between Cycles/BI to get the right “look” or options in the viewport)

Plates:

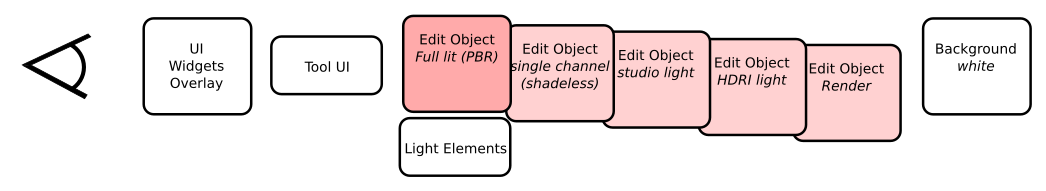

- Tool UI includes painting cursor, brush preview, etc. If preview lighting is used, an extra plate with grabbable light controls will also be shown.

- The Edit Object plate will be one of the variations shown; you’ll be able to flip between them as needed.

Pending designs

We plan to revisit the overall design each time we reach one of the MVPs. For instance, this proposal does not yet describe how tasks will be customized, nor what the user interfaces will look like.

We also need to explore what the other workflows may be, what tasks they consist of, and what technology and techniques we need in the viewport to fully support them.

The idea is to validate this proposal with the deliverables, and adapt it accordingly.

Meet the team

The development will be open for the usual Blender contributors, artists and developers. We will have an open call for developers to assist with specific initiatives here and there, and will welcome anyone willing to work together towards the 2.8 viewport goals.

The current team, with their IRC nicknames and their roles in the process:

Mike Erwin (merwin) is responsible for the low level implementation. This has started already with the OpenGL upgrade, Vulkan preparations, and internal API design.

Dalai Felinto (dfelinto) will help coordinate the work from the developers and artists, and take over part of the core development.

Clément Foucault (hypersomniac) will take over much of the PBR implementation. Part of his PBR branch will be ported over as a Cycles alternative for real-time previews.

Sergey Sharybin (hackerman) will handle all the scene data that will be fed into the pipeline. This work will happen in parallel with the new Blender depsgraph.

Blender Studio artists will provide feedback and help validate the design.

Appendix I – Plates

Following are some of the individual plates we anticipate being required. Additional plates can be implemented once the workflows and tasks are better defined in the future.

Effects

- Depth of field

- Reflection

- Color adjustment

- Fluid simulation

- Particles

- Lens flare

- Custom (GLSL)

Elements

(aka non-renderable objects)

- Cameras

- Lamps

- Probes

- Speakers

- Forces

- Motion path

Shading

- Clay (solid + AO)

- Outline highlight

- Solid wireframe overlays

- Depth wires

- Solid color with scene lights

- Grayscale with scene lights

- Real-time (GLSL/PBR) with scene lights

- Real-time (GLSL/PBR) with HDRI

- Matcaps

- Raytracer lit (e.g., Cycles)

- Studio light

- Silhouette (uniform color / solid black)

Filter

- All objects

- Edit object

- Object types (Mesh, Armatures, Curves, Surfaces, Text …)

- Mouse over object (?)

- Mouse over mesh hot spot object (? – mesh manipulator)

- Outline search result (?)
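The appendix lists shading techniques and filters as separate categories. One reading (an assumption, not a stated design) is that a plate pairs a shading technique with a filter that picks which objects it draws, as in the "clay for all objects not being edited" plate from the character modeling MVP. The sketch below models filters as composable predicates; `SceneObject`, `invert`, `select` and the other helpers are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class SceneObject:
    name: str
    kind: str                  # "MESH", "ARMATURE", "CURVE", ...
    in_edit_mode: bool = False


Filter = Callable[[SceneObject], bool]


def all_objects(obj: SceneObject) -> bool:
    return True


def edit_object(obj: SceneObject) -> bool:
    return obj.in_edit_mode


def object_types(*kinds: str) -> Filter:
    wanted = set(kinds)
    return lambda obj: obj.kind in wanted


def invert(f: Filter) -> Filter:
    return lambda obj: not f(obj)


def select(objects: Iterable[SceneObject], f: Filter) -> List[str]:
    """Names of the objects a plate with filter f would draw."""
    return [o.name for o in objects if f(o)]


scene = [SceneObject("Body", "MESH", in_edit_mode=True),
         SceneObject("Rig", "ARMATURE"),
         SceneObject("Prop", "MESH")]
print(select(scene, invert(edit_object)))  # ['Rig', 'Prop']
```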

Misc

- Simplified geometry

- No hair

Appendix II – Buffers

Each plate reads specific data from the scene graph and from other plates’ buffers, and writes into its own buffers. The buffers for a typical 3D View will consist of:

- Scene depth (32-bit)

- UI depth for 3D widgets

- HDR color (16f RGBA by default, 32f option in system prefs)

- LDR color (8-bit RGBA)

- Object ID for selecting/masking (32-bit integer)

- Composited screen color (10-bit RGB)

- Previous HDR color for SS reflections

These details are mostly invisible to the user, and are part of what the viewport API should be able to gather from the Blender “datagraph”. To quell panic about runaway VRAM usage: not every plate has its own private buffer; these buffers cover only the 3D view, not the whole screen; and only the composited screen color must be double buffered.
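To put rough numbers on that VRAM note, here is a back-of-the-envelope estimate for the buffers listed above. The bit depth of the UI depth buffer and the storage format of the 10-bit composite are not specified, so the sketch assumes 32 bits per pixel for both (the latter packed like RGB10_A2). It also assumes the previous HDR buffer follows the HDR color format. `viewport_vram_bytes` is a hypothetical helper.

```python
# Bytes per pixel for each buffer in the list above.
BUFFERS = {
    "scene_depth": 4,         # 32-bit
    "ui_depth": 4,            # assumption: 32-bit, like scene depth
    "hdr_color": 8,           # 16f RGBA (16 bytes with the 32f option)
    "ldr_color": 4,           # 8-bit RGBA
    "object_id": 4,           # 32-bit integer
    "composited_color": 4,    # assumption: 10-bit RGB packed into 32 bits
    "previous_hdr_color": 8,  # assumption: same format as HDR color
}
HDR_BUFFERS = {"hdr_color", "previous_hdr_color"}
DOUBLE_BUFFERED = {"composited_color"}  # the only double-buffered target


def viewport_vram_bytes(width: int, height: int, hdr32: bool = False) -> int:
    """Total bytes for one 3D view of width x height pixels."""
    pixels = width * height
    total = 0
    for name, bytes_per_pixel in BUFFERS.items():
        if hdr32 and name in HDR_BUFFERS:
            bytes_per_pixel = 16  # 32f RGBA
        copies = 2 if name in DOUBLE_BUFFERED else 1
        total += pixels * bytes_per_pixel * copies
    return total


print(viewport_vram_bytes(1920, 1080))            # 82944000
print(viewport_vram_bytes(1920, 1080) / 2 ** 20)  # 79.1015625
```

Under these assumptions a 3D view filling a 1920×1080 region needs about 79 MiB with the default 16f HDR setting (40 bytes per pixel), and about 111 MiB with the 32f option.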