User:Kattkieru:Blender 25 Suggestions

Blender 2.5 Suggestions

Greetings to you. I hope, reading this, that you're a programmer who has a decent idea of how to modify the inner workings of Blender. I'm also a programmer, but I'm not yet at a level with the source to do most of the edits I have ideas for. That said, I wanted to leave a page of my ideas for Blender 2.5 or higher that I think would be great upgrades in functionality and useful to all users, especially in the professional sphere.

Outliner Suggestions

The new Outliner code in 2.43 is an incredible step forward in usefulness for this tool. (Particularly interesting are the selectable / renderable / visible flags -- I hope these have a Python API.)

In looking at Cinema 4D especially (recently many of my friends have switched to it), I think that some of the usability features in C4D's own Object Manager could be... "stolen," as it were.

Here's a list of my ideas:

- The current selection system is somewhat counter-intuitive. "Select by clicking anywhere but the name and icon" -- why? The icon is the likely place any user will click and attempt to drag, and as such it should be the main recipient of click events.

- Clicking the icon should select the object and highlight the row (as rows are highlighted currently by clicking not on the icon or name). Shift-clicking on the icon should be used to deselect or to add to the current selection. This is more consistent with other apps.

- In addition, modifiers, textures, materials, and mesh data should also receive highlighting like this. There is a good reason for this which I will detail later, but it's also just a user interface issue: if you can select objects at the object level and have them highlighted, you should also be able to do the same at the texture or constraint level so that the experience is consistent within the Outliner.

- Drag and drop parenting from within the Outliner. Left-mouse click and drag (possibly with a 100ms hold before dragging, to distinguish drag operations from click operations) on an icon picks up the icon and name, attaching it to the mouse pointer. As parenting operations can be cumbersome, a specific system of drop points will be established:

- Dropping the icon to the left of all icons will unparent child items. (Dropping items without a parent to the left of all icons would do nothing, essentially leaving them where they are.)

- Dropping the icon beneath the icon or name of another Object (not material, constraint, texture, etc.) would parent the dropped item to the recipient. A line would appear beneath the icon and name of the recipient object to indicate that a parenting operation will occur on mouse button release.

- Dropping the icon above the icon or name of another child Object would parent the dropped object to the recipient child object's parent, at the same level as the recipient child object. This is a particularly problematic operation in a lot of software, so it's important to get it right.

- Certain items like Materials cannot be unparented, but could be applied to other objects by holding Alt and then dropping. With the Alt key held a Plus symbol could appear next to the mouse cursor (yes, just like on the Mac OS), indicating that the data is about to be duplicated. Since Blender has a substantial linking system some thought would need to be put into how this duplication will occur -- through linking or through a full copy. My recommendation is that a full copy is used when only the Alt key is held down, but if Alt+Shift are held down before dropping, then a link is created. It would be important to have this be the same across all object and data types for consistency in the UI. People would get the hang of it, and tutorials would pop up quickly enough noting that most of the time you want to hold Alt+Shift when dropping materials, but only Alt when attempting to duplicate an object, for example.

- Similar to the Material copying, Objects could be duplicated and linked with a combination of the left mouse button and Alt/Shift.
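The three drop rules above can be sketched in a few lines of plain Python. This is only an illustration of the logic, not Blender code: `Node`, `drop`, and the zone names "left", "below", and "above" are all hypothetical names made up for the example, and the loop check (refusing to parent an object to its own descendant) is an assumption I've added on top of the rules as written.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # identity comparison; avoids recursing through parent/children
class Node:
    """Hypothetical stand-in for an Outliner row that represents an Object."""
    name: str
    parent: Optional["Node"] = None
    children: list = field(default_factory=list)


def set_parent(node, new_parent):
    """Detach node from its current parent and attach it to new_parent (or None)."""
    if node.parent is not None:
        node.parent.children.remove(node)
    node.parent = new_parent
    if new_parent is not None:
        new_parent.children.append(node)


def is_descendant(node, ancestor):
    """True if node sits anywhere below ancestor in the hierarchy."""
    while node.parent is not None:
        node = node.parent
        if node is ancestor:
            return True
    return False


def drop(dragged, zone, target=None):
    """Apply one drop rule; returns True if the hierarchy changed.

    zone is "left" (unparent), "below" (become a child of target),
    or "above" (become a sibling of target).
    """
    if zone == "left":
        new_parent = None
    elif zone == "below":
        new_parent = target
    elif zone == "above":
        new_parent = target.parent
    else:
        raise ValueError("unknown drop zone: %r" % (zone,))
    # Nothing to do if the parent would not change.
    if new_parent is dragged.parent:
        return False
    # Assumption: refuse drops that would make an object its own ancestor.
    if new_parent is dragged or (new_parent is not None and is_descendant(new_parent, dragged)):
        return False
    set_parent(dragged, new_parent)
    return True
```

Dropping an unparented item to the left returns False here, which matches the "would do nothing" case described above.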

In addition, I'd like to reiterate my suggestion (which was posted somewhere else on the wiki) of a filtering system for the Outliner based on user searches. Similar to iTunes, a search bar should be added through which the user can have the Outliner show only the items matching the (case insensitive?) search string. THIS SHOULD NOT BE A LIVE SEARCH. Live searching is unforgivably slow on older machines, and many Blender users are on older hardware. It should instead work as follows:

- User hits Control+F (Find) or selects it from a drop-down menu.

- User types in the search string, hits enter.

- The Outliner display is updated with only objects that match the search string (and possibly their parents).

This could be further filtered by adding data type filtering to the Outliner menu.
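As a rough sketch of the non-live filter, assuming the Outliner contents can be described as a simple mapping from item name to parent name (a made-up structure for this example, not Blender's real data), the search would run once when the user hits enter:

```python
def filter_outliner(parents, query):
    """Return the set of item names to display for a search string.

    parents maps each item name to its parent's name (None for top-level items).
    Matching is case insensitive, and the ancestors of every match are kept
    so that the match stays reachable in the tree.
    """
    needle = query.lower()
    visible = set()
    for name in parents:
        if needle in name.lower():
            current = name
            # Walk up the hierarchy, stopping early at anything already kept.
            while current is not None and current not in visible:
                visible.add(current)
                current = parents[current]
    return visible
```

Because this is a single pass over the items per search, it only costs anything when the user actually submits a query.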

In its current state the Outliner right-click menu is, in my opinion, not what users are going to expect. (To be honest, when I first right-clicked and saw Select / Deselect / Unlink / Make Local, I found it both redundant and not useful at all.) I think firstly that Select / Deselect can be removed and replaced with the selection method I detailed above. Furthermore, the menu could be made more useful by giving textual versions of the left mouse click-and-drag functionality:

- Menu could be as follows: Rename, Copy, Copy as Instance, Copy Name, Delete, Unparent.

- Under the three Copy selections, there would also be Paste, Paste as Instance, and Paste Name. These would be greyed out (not available for selection), or not present at all, unless an object or name had been copied previously.

For pasting, the user would right-click again on another object:

- If an object was copied as duplicate (Copy) or as an instance (Copy as Instance), then the menu would be the same as before adding the Paste selections under the copy set.

- In terms of parenting, you could also have Paste as Child and Paste as Sibling. Paste as Child would paste the object or instance as a child of the clicked object. Paste as Sibling would only be available on objects that are children of other objects, and would work like a left-click drop above an object (detailed above).

Another idea that could be worked in here would be for PyDrivers. In the right-click menu, there could also be a "Copy as Driver" submenu.

- At the Object level, this would open a submenu of nine items -- Location, Rotation, and Scale along all three axes. (How would quats work here? I have no idea, but they're not as useful from a user perspective as they require math that can't be done mentally. At least, that's my opinion.) Selecting one of these would copy, to the global Blender clipboard, the python driver string for what you selected.

- For example: I have two cubes, Cube1 and Cube2. I want Cube1's X rotation to drive Cube2's Z rotation. I right-click Cube1 in the Outliner and select Copy as Driver->X Rotation. This copies the string "ob('Cube1').RotX" to the global clipboard. I can then go to the IPO window, add a driver to Cube2's Z rotation channel, press the Python button, and paste the copied driver.
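Building the copied string is the easy part. Here is a minimal sketch, assuming the copied expression should name the driving object (Cube1 in the example) and that the nine menu items map onto the channel names LocX through SizeZ. The function name `driver_string` and the `CHANNELS` list are hypothetical, made up for this example.

```python
# Assumed names for the nine submenu entries (Location, Rotation, Scale on X/Y/Z).
CHANNELS = [
    "LocX", "LocY", "LocZ",
    "RotX", "RotY", "RotZ",
    "SizeX", "SizeY", "SizeZ",
]


def driver_string(object_name, channel):
    """Return the PyDriver expression text for one channel of one object."""
    if channel not in CHANNELS:
        raise ValueError("unknown channel: %r" % (channel,))
    # %r quotes the name and escapes any quote characters it contains.
    return "ob(%r).%s" % (object_name, channel)
```

Right-clicking Cube1 and picking X Rotation would then amount to `driver_string("Cube1", "RotX")`, with the result placed on the clipboard.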

I would personally like the ability to right-click and paste on text buttons, but that might be asking for too much. ^_^

Outliner and Button Interaction

Part of the left click and drag functionality that would be infinitely useful AND fix a long-standing annoyance in Blender would be the ability to drag from the Outliner into the Buttons window. This is going to be a two-part recommendation.

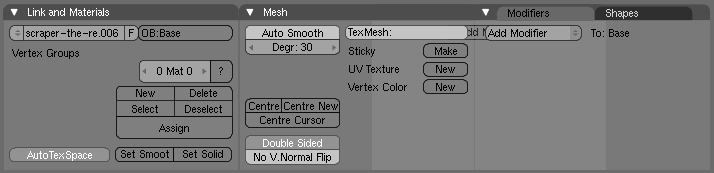

- Currently there are a lot of buttons that start with short text signifiers that indicate what kind of object or data name they expect to receive. For example, in the Material Buttons, under Links and Pipeline->Link to Object, there's a box that says "MA: Material" and "ME:Cube1" on the screen I currently have open. The first suggestion is to do a Blender-wide replacement of those buttons with a new button type: icon button. The icons would match the icons present in the Outliner, unifying the UI and letting the user know, visually, which data types go where.

- Dragging an object from the Outliner to the buttons window, onto an icon button, would replace the text with the name of the dropped object. So for example, if I wanted to replace "MA:Material" with "MA:Redball," I could drag Redball from the Outliner onto "MA:Material" and release; the material on the object would be changed to Redball. The same could be done for groups, mesh object, and Object or Bones inside Constraints and Modifiers.

This is such an important workflow improvement that, regardless of all other suggestions above, I think this should definitely make it into Blender 2.5. It provides a serious improvement in workflow for all users, and particularly improves the workflow in scenes with hundreds of objects. No more typing names, or selecting an object to copy its name for pasting!

One note would be on multiple object selection. The dragging of multiple objects outside the Outliner should not be allowed in most instances (although dragging from the Outliner into an Object Nodes window would be nice -- more on that later). Multiple material dragging should only be allowed onto Node windows. Also, for consistency's sake, the last selected item (the active item in current Blender terminology) should be the only name displayed in the drag item beneath the mouse cursor. To indicate that multiple objects are selected, a number not unlike the number used to indicate the current frame during rendering could also appear next to the mouse cursor, showing the number of items being dragged. It's possible that only Objects should allow multiple dragging, as doing so with Data opens up a can of worms with regards to workflow that I can't see any way around. Also, multiple data selection doesn't strike me as "as useful" as multiple Object-level selection, save perhaps when it comes to deletion.

Outliner Layers

I don't know if the selectable / visible / renderable flags in the Outliner can be set for multiple objects at once through some key combination, but I think that Groups should be special with regards to this.

Groups could serve as named layers in the Outliner, allowing users to template or turn off the renderability for all objects within. I know that some developers have done work with named layers in the past, and I think that this suggestion would satisfy the needs of most users.

Outliner, Multiple Object Selection / Editing, and the new Attribute Manager

Here's where things get interesting and difficult.

The Buttons Window is imperfect. The ideas are sound, and thus far the buttons themselves have been organized quite well despite the quantity of new features that have been added since the 2.3x releases. However, there are a number of problems with the buttons window:

- Some windows extend sideways (Game Buttons) and some extend down (Constraints, etc.). This means that whether buttons are placed horizontally or vertically, there are some instances in which the buttons are automatically pushed off-screen.

- Tabs and button panel groups often overlap or get mixed together, making interaction difficult. For example, sometimes the Links and Pipeline panel in the Material buttons sits right on top of the Material Panel. This is a random occurrence.

- Button window scrolling is currently a global variable and not by section. For example, if I'm in the Game Buttons editing a keyboard shortcut at the very bottom of a long list, then select a camera and switch to Object Buttons, the space appears empty. Only after hitting home do I see the panels in the Camera buttons.

More important than any of the above annoying bugs, however is this:

- Selecting multiple objects does not allow the modification of multiple sets of data.

The list of bugs is one thing, but how do we solve the editing of multiple objects? This requires a new Space type, the Attribute Manager. This is a feature present in all other major 3D apps in some form or another (C4D's Attribute Manager, the Maya attributes panel, and there is something in XSI, but I can't recall the name at the moment).

The Attributes Manager would be a new space entirely and would list modifiable attributes for the current level of data selection or the currently active tool. Some examples:

- If an object is selected, then the Attribute Panel would display the location / rotation / scale values for it, as well as the object's name.

- User data could also be displayed here as sliders or number buttons, etc., as per the user data settings.

- Underneath the Object data, the sub-data would be displayed. This could change based on what's selected currently. For example, on mesh objects there would be material information, vertex groups, name of the mesh data, multires, and mesh editing tools. This would change by edit mode, similar to how the button windows do. Lamps would display their settings, and cameras theirs. Constraints and Modifiers could be displayed beneath those.

- In line with the upcoming Tools API, tool options would be displayed when tools are activated. For example, when creating a UV Sphere object, the Attributes Manager would switch to a tool view with the options for segments, rings, and x / y / z sizes, etc.

- Similar functions that are shared between tools would be shared as "global" tool settings:

- Options such as Create at Cursor, Create at Origin, Create Using View Rotation, Create With Normal Rotation (RotX, RotY, RotZ = 0, 0, 0).

- When nodes are selected, the data they contain would be shown in the Attributes panel as well. For example, textures would have their settings there.

With multiple objects selected, variables that match on both objects would be displayed. Any variables that are different between the objects could be displayed in one color, while variables that have the same value could be displayed in another. Changing one of these variables would change the value across all selected objects.

This would be especially great at sub-object levels, like vertices -- suddenly, aligning a number of verts to an axis is no longer an issue of scaling and then moving the median, but of selecting them and typing 0 in the box for the axis in the Attribute Manager.
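The multi-selection behavior could be sketched like this, with each selected object reduced to a plain dictionary of attribute names and values. That representation and the function names are assumptions for the example only, and showing the first object's value for mixed attributes is just one possible choice.

```python
def common_attributes(objects):
    """Return {attribute: (displayed_value, is_mixed)} for a selection.

    Only attributes present on every selected object are listed. The first
    object's value is displayed; is_mixed tells the UI which color to use.
    """
    if not objects:
        return {}
    shared = set(objects[0])
    for obj in objects[1:]:
        shared &= set(obj)
    result = {}
    for attr in sorted(shared):
        values = [obj[attr] for obj in objects]
        mixed = any(value != values[0] for value in values)
        result[attr] = (values[0], mixed)
    return result


def set_attribute(objects, attr, value):
    """Write one value to every selected object that has the attribute."""
    for obj in objects:
        if attr in obj:
            obj[attr] = value
```

Typing 0 into a mixed field is then a single `set_attribute` call across the selection, after which the field is no longer mixed.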

I think C4D and Maya have the best workflows when it comes to having an Attributes / Tool Settings panel (C4D being at the top of my list), so I urge the developers to download the demo / educational versions of those packages (if you haven't already) to see how these things work in other software. This is not a minor edit, I know. It would require a lot of planning and a lot of under-the-hood mucking in Blender's internal systems, but I think it's the best way to move forwards.

As far as fixing the current Buttons Window issues, I'd like to recommend adding a new widget type: a layout control. I know that in MELScript there're objects called rowlayout and columnlayout (or something similar) that set up automatic positioning of objects. Something similar could be used to more forcefully keep the panels in check. I think the Attributes Manager in Blender could also use this, so that when displayed vertically only one column of data is shown, but when horizontal the number of columns can be set by the user to space out the buttons in the window. (As a side note, something similar could be added to Python to simplify UI layout, but I'm getting ahead of myself.)
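To give a feel for what such a layout control would compute, here is a minimal sketch that places a run of equally sized buttons into a user-chosen number of columns. The function name, the fixed cell size, and the pixel margin are all assumptions for illustration.

```python
def grid_layout(count, columns, cell_w, cell_h, margin=5):
    """Return the (x, y) top-left position of each of count widgets.

    Widgets fill rows left to right; columns=1 gives the vertical layout,
    larger values spread the widgets out for a horizontal window.
    """
    positions = []
    for index in range(count):
        row, col = divmod(index, columns)
        x = margin + col * (cell_w + margin)
        y = margin + row * (cell_h + margin)
        positions.append((x, y))
    return positions
```

Switching a panel between vertical and horizontal display would then only mean calling this again with a different column count.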

The Holy Grail: Object Nodes

Object Nodes as Constraint

When the nodes system was introduced for compositing and materials, I thought, "Great! This is a step closer to the Maya level of connectivity needed for real complexity!" But then I thought about it a little more. How would Object nodes be implemented in Blender without a total rewrite?

That got me to thinking both about the C4D XPresso system and Blender's own Material nodes. Neither seems to overwrite the original system, instead offering the user an "opt-in" method of adding nodes if and when necessary. This is a step above Maya's solution, nodes in everything, because it allows new users to get used to the system without having to keep all those confusing node connections in their head until they're ready to use them.

So instead of a rewrite of the Blender object system, I'd like to propose something different: the implementation of nodes through a new Constraint type. This constraint would only add Nodes to the object, and possibly create a new Node Tree object under the object in the Outliner. (This would be a new kind of Data type.) In the Nodes Window, next to Material and Composite nodes there would be a third type, the Object Nodes. Only one constraint of this type would be allowed per object, so selecting the object in the 3D window or in the Outliner would make it the active data for the Nodes Window. From there, Object interaction could be developed.

The fun part of all this is that, as part of the constraint stack, nodes could be turned on and off at will, and could also be affected by the rest of the stack. For example, node-based motion could be clipped by a Limit constraint. There are of course issues to be worked out with this idea. I don't know how circular dependencies would be handled. I also don't know how other objects that get added to the node tree would be tagged -- perhaps any object in the node tree of another object would have a "Node Output" Constraint automatically added? I honestly have no idea on this one, nor do I know how C4D handles this issue.

As for how one would add objects to a node tree, why, you'd do so through drag-and-drop from the Outliner! That negates the need to page through huge menus of objects or to add some sort of special system to the Nodes Window for searching. (Similarly, textures and materials could be made drag-and-droppable to the Material nodes.)

Then on to the issue of node connections. Inputs and outputs should not go the way of Maya, I think. Maya's system is cumbersome. C4D's XPresso is the most elegant system I've seen for this yet, forcing the user to only add / show the inputs and outputs they need at any given time. I think Blender should follow suit.

Object Nodes, the Dependency Graph, and Custom Attributes

One of the things I've heard a lot of professionals say they prize above all other features is "the ability to plug anything into anything," or to connect ANY two values of the same type. Partly this would be in driver / driven relationships, but also just for consolidating controls for multiple objects into a single 3D controller with all variables keyframeable on the same object. I've also noticed, over the years, that new objects and new animation channels have had to be added manually by the coders, and still, not everything is keyable.

I propose that a generic animation channel system be adopted. In this system there would be no real difference between, say, a standard float channel attached to an object (LocX, LocY, LocZ) and a custom float variable. Float, Int, and Bool channels would all appear in the IPO editor, where all channels are currently floats. Under the hood, the custom attributes would use the same code as the default attributes to generate animation data. In fact, on object creation, default variables would be added to a generic object, and the object's use of that information would be defined by some sort of viewer, or behavior code.

A good example of this would be for a Cylinder and a Sphere. Both could have a "radius" variable, but the two objects would internally be passed to different viewers, which would handle the use of that variable differently.

If all object animation channels are dealt with in this way, including custom attributes, then animation would just be a matter of stepping through each object on each frame and incrementing frame values for each animation channel that's unlocked and has keys.
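A bare-bones sketch of that stepping loop, with built-in and custom attributes sharing one `Channel` class, might look like the following. The class, its linear interpolation between keys, and the `update_frame` helper are all assumptions for illustration; a real IPO curve would use Blender's own interpolation.

```python
from bisect import bisect_right


class Channel:
    """One generic animation channel; LocX and a custom 'radius' look the same."""

    def __init__(self, name, value=0.0, locked=False):
        self.name = name
        self.value = value
        self.locked = locked
        self.keys = []  # sorted list of (frame, value) pairs

    def insert_key(self, frame, value):
        """Add a key, replacing any existing key on the same frame."""
        kept = [key for key in self.keys if key[0] != frame]
        self.keys = sorted(kept + [(frame, value)])

    def evaluate(self, frame):
        """Update and return the value; locked or unkeyed channels are skipped."""
        if self.locked or not self.keys:
            return self.value
        frames = [key[0] for key in self.keys]
        i = bisect_right(frames, frame)
        if i == 0:
            self.value = self.keys[0][1]       # before the first key
        elif i == len(self.keys):
            self.value = self.keys[-1][1]      # at or after the last key
        else:
            (f0, v0), (f1, v1) = self.keys[i - 1], self.keys[i]
            self.value = v0 + (v1 - v0) * (frame - f0) / (f1 - f0)
        return self.value


def update_frame(channels, frame):
    """Step every channel to the given frame and return the resulting values."""
    return {channel.name: channel.evaluate(frame) for channel in channels}
```

A viewer for a Cylinder or a Sphere would then just read the `radius` channel's current value and interpret it in its own way.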

The dependency graph could also be updated using this new system. Currently Blender does not allow the driving of one channel in an object by another channel in the same object. However, what should be happening is that each channel should be considered as separate by the dependency graph. It should be possible to drive the LocX variable in an object by its LocZ or by its RotZ. By generalizing the animation channel system, and by checking for circular dependencies, the drivers could be opened up and made a lot more powerful than they currently are.
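Treating each channel as its own graph node makes the loop check a standard topological sort. The sketch below uses Kahn's algorithm on (object, channel) pairs; the function name and the input format are assumptions for the example, not how Blender's dependency graph is actually stored.

```python
from collections import deque


def evaluation_order(drivers):
    """Order channels so that every driver is evaluated before what it drives.

    drivers maps a driven channel to the list of channels driving it, where a
    channel is an (object_name, channel_name) tuple. Raises ValueError if the
    relationships contain a loop.
    """
    nodes = set(drivers)
    for sources in drivers.values():
        nodes.update(sources)
    # Number of not-yet-evaluated drivers each channel is waiting on.
    pending = {node: len(set(drivers.get(node, ()))) for node in nodes}
    dependents = {node: [] for node in nodes}
    for driven, sources in drivers.items():
        for source in set(sources):
            dependents[source].append(driven)
    ready = deque(sorted(node for node in nodes if pending[node] == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for driven in sorted(dependents[node]):
            pending[driven] -= 1
            if pending[driven] == 0:
                ready.append(driven)
    if len(order) != len(nodes):
        raise ValueError("driver relationships contain a loop")
    return order
```

With per-channel nodes, LocZ driving LocX on the same object is perfectly legal, while LocX and LocZ driving each other is rejected.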

Lastly, this would also allow users to lock and hide unneeded channels. For example, if an object in a scene never rotates, the rotation channels could be locked, preventing their editing and making the updates on each frame skip solving for their values. In small scenes this doesn't have much more than a cosmetic effect (fewer channels visible in the window), but on large scenes this can have a dramatic effect on realtime update speed.

Hidden channels would still solve; they would just be removed to reduce clutter, and would be assumed to be unkeyable / unselectable. This is most useful for channels driven by other objects.

I recommend that all locking / keying / hiding / unhiding be dealt with inside the proposed attribute manager, possibly overloading it by adding a second tab to its interface. The tab would have a view with two columns: hidden channels on the right, and visible channels on the left.

Python API Upgrades

New GUI API

The more I use Python the more I love it, and changes in the Blender API (particularly changes towards keeping it unified between object types) make it wonderful to use. However, the GUI API is unnecessarily complex. Everything about it feels like a throwback to a much older paradigm, and since Blender and Python are so object oriented I think it's high time to introduce a new API for Python GUI creation, one that includes objects and simplifies the creation of complex UIs with callback functions.

This is something I want to take a crack at at some point in the future, but for now, here's some pseudo code on how I think it should work; the style is stolen straight from MEL.

    import Blender
    from Blender import ObjUI  # proposed module; it does not exist yet


    # placeholder callbacks so the names used below are defined
    def t1_onChangeFunc():
        pass

    def b1_onClickFunc():
        pass

    def b2_onClickFunc():
        pass


    ui = ObjUI.New()

    # window title perhaps?
    ui.name = "Object Renamer"

    # beyond these min sizes, layouts will spread buttons out
    ui.minsize_x = 250
    ui.minsize_y = 300

    layout = ObjUI.NewLayout()
    layout.type = ObjUI.ROWLAYOUT
    layout.rows = 2
    # height of each row
    layout.rowheight = 20
    # space between rows
    layout.margin = 5

    t1 = ObjUI.NewEditField()
    # name of global variable in which to store the values
    t1.var = "t1"
    # this is called when the text is edited (pointer to function object)
    t1.onChange = t1_onChangeFunc

    # the second, optional variable says how many rows or columns
    # the added object will require
    layout.add(t1, 2)  # make it stretch across two columns

    b1 = ObjUI.NewButton()
    b1.title = "Rename"
    b1.onClick = b1_onClickFunc
    layout.add(b1)

    b2 = ObjUI.NewButton()
    b2.title = "Reset"
    b2.onClick = b2_onClickFunc
    layout.add(b2)

    ui.addLayout(layout)

    # this handles all input, drawing, and callback execution
    ui.Run()

As you can see, this kind of code would be a lot cleaner to read and easier to modify in the long run. Also, because layouts are used to contain the controls, the UI would scale with the resizing of the window, with no extra work for the script writer.

Permanent Script Space

It would be nice to have certain scripts execute at:

- .Blend file load

- Program Start

- Frame change

These could be scripts that are special, like the space handlers. It would also be nice to have scripts be able to stay persistent between sessions. For example: a user starts a utility script they use a lot when modeling, saves their file, and closes Blender. The next day they open the document, and the utility script looks as it did when the file was saved. This would be particularly helpful for character "pickers."

GHOST Upgrades

File Selector

Simple fix here -- have the File Selector dialog come up in the same window that the full screen render appears in. It's terrible that the file selector often pops up in the smallest space available, forcing the user to both expand that space and then minimize it back after a file is chosen.

At the least, the position at which the File Selector pops up could be picked by choosing the largest currently-open space. (Usually this would be a 3D window, compositor window, or node editor.)
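Picking the largest open space is a one-liner once the space sizes are known. In this sketch the spaces are plain (name, width, height) tuples, which is a made-up representation for illustration only.

```python
def largest_space(spaces):
    """Return the (name, width, height) tuple covering the most screen area."""
    return max(spaces, key=lambda space: space[1] * space[2])
```

For example, with a 900x600 3D window next to a 300x600 buttons window and a 1200x80 timeline, the 3D window would host the File Selector.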

Multiple Windows

The idea of non-overlapping windows is a laudable one, and until now I've been a big fan of it. However, it falls short when multiple monitors are introduced. Blender needs the ability to create multiple windows, each with their own space layout. The layouts should be linked like all Blender data, allowing the same layout to be displayed on two monitors or for different layouts to be shown in different windows. On the creation of a new window, Blender would populate it with the default layout that's saved with Blend Files when the user hits Control+U.

There needs to be some thought put into how these windows would interact (for example, I think that mouse motion between the windows should be handled as it is in X11, where you don't have to click on the other windows for the cursor focus to pass to them). There also needs to be thought put into how these windows will be saved inside the Blend files, and what will happen if a .Blend with multi monitor windows is opened on a system with only one display. I suggest the following:

- Windows are saved on the display they occupy at save time. Windows beyond the first, main window can also (optionally) have their position saved. (This would make floating character pickers and image windows, etc., a lot easier to use between sessions. Without saved positions the user will likely be resizing the spare windows at every file load.)

- The user should have the optional ability to save the main window's state (size and position) as well.

- When a file with multiple windows on multiple displays is opened on a machine with fewer displays, the windows that occupied the missing display will open *behind* the main window on the main display.
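The fallback rule in the last point could be sketched as follows, assuming each saved window records the index of the display it was on, with 0 being the main display. The dictionary keys and the function name are hypothetical.

```python
def restore_windows(saved_windows, display_count):
    """Decide where each saved window should reopen.

    saved_windows is a list of dicts with "name" and "display" keys.
    Returns a list of (name, display, behind_main) tuples.
    """
    restored = []
    for window in saved_windows:
        display = window["display"]
        if display < display_count:
            restored.append((window["name"], display, False))
        else:
            # The saved display is missing: reopen on the main display,
            # stacked behind the main window.
            restored.append((window["name"], 0, True))
    return restored
```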

In the end, I think the non-overlapping windows philosophy has served Blender well, but it holds the program back in many situations.

User Preferences

To satisfy a large number of users, the user preferences should be made accessible through an easy-to-find menu item in the main Blender window. Instead of redesigning the prefs, the same trick used for the full screen render window could be used to also make the prefs go full-screen, until the Escape key is hit. Naturally, as the prefs do not take up a full window the buttons should be centered vertically in the space.

Materials

Yafray Integration

Already a lot of changes become apparent in the Material editors when Yafray is selected as the renderer. I hope this continues as a practice, particularly since the next version of Yafray will be adding a number of interesting shaders that Blender's internal renderer does not support.

Also of particular interest would be the ability to mix rendering passes between Yafray and the Blender internal renderer. How excellent would it be to have an entire scene rendered in passes using the internal renderer, but to have a GI pass handed to Yafray and then passed to the compositor nodes, all automatically?

This idea can be extended as well. For example: the user could render a GI pass in yafray, a fake caustics pass in the internal renderer, and perhaps a toon lines pass in Freestyle (once the Freestyle integration project takes off). Then, all passes could be composited in the compositor and rendered out into single frames without ever leaving Blender.

Material Presets

Currently the Yafray shaders have pre-set indices of refraction for certain kinds of materials. It might be nice to have the same be present in the internal renderer, and for this list to be expanded to a wider variety of common materials.

Material Library

There is no reason at this point for Blender to not ship with at least a rudimentary library of simple shaders. However, this feature working for the user would require a new space: the Material Manager.

The material manager would be a grid-based layout of all materials in a scene (not unlike a material shelf), even the ones not directly linked to an object. It would also manage all textures; in this fashion textures could be created and retained without the need to link them to a material or object. (An example of the usefulness of this would be with the Displacement Modifier -- why does the user have to create a texture as a part of the material, then turn the texture off, just to use it?) Materials would be drag-and-droppable to objects in the Outliner for material assignment. Possibly, Outliner mesh objects could have a sub-tree of material indices / sets so that the drag-and-drop action could affect only specific faces. Similarly, drag-and-drop on objects in the 3D scene could assign the material to all faces, or if the user is in edit mode with faces selected, only to selected faces (creating a new material group automatically).

Other functions would include the ability to duplicate materials, the dropping of materials into the node editor (to duplicate or link a new material to an existing node tree), and I'm sure many other things I've yet to think of.

Important here would be the library of presets. A menu could hold them all (possibly generated from Python, if the presets can be converted to python scripts and then distributed in that fashion), and adding a preset would create a copy which could then be further modified. Plastics, marbles, woods, glasses, etc. could be added and then extended, while users could submit new shader presets for inclusion in future versions and also distribute their own shader presets on personal sites.

(Character) Animation

IK

The one big problem with IK in Blender is that there are no pole vectors for IK chains. Using the track constraints does not alleviate this oversight. Blender *needs* IK with pole vectors for alignment on arms and legs.

Spline IK would also be nice, but is nowhere near as vital as pole vectors.

Character Sets

Another thing that's sorely missed from Blender is the ability to combine sets of objects inside the Action Editor. This is another place where groups could have their functionality expanded a bit -- groups could also function as character sets, after a fashion, allowing nulls and other kinds of objects to appear in the action editor beside their bone / armature counterparts.

Character sets would also pave the way for Joint-based character systems at some point far in the future, as Joints are treated as regular objects in most other packages. Since C4D, XSI, and Maya all support that system, Blender's adoption of it would be a Good Thing. (Personally I don't like Joints, but I can understand both their appeal and their benefits over regular bone systems.)

Bone Constraint Feature Equivalence with Other Software

Bone constraints are currently missing the following:

- Fully functional local constraints. (I know someone recently started at least looking at the local Copy Rotation constraint, but in 2.43 RC2 it's still not functioning properly.) Local constraints need to be applied using the final position of their targets. For example, I constrain BoneA to BoneB with a local Copy Rotation constraint. BoneA is free-floating, but BoneB is the base in an IK chain. If I move the IK goal, thus moving BoneB, BoneA should rotate based on BoneB's constrained position and not BoneB's rotation IPO. Currently this does not happen.

- Location constraints need some sort of offset ability. They also need to be able to intelligently share an object between multiple targets for character / object interaction. For example, in Maya a Point Constraint can constrain an object to two separate targets with two influences. If both targets have an influence of 1.0, then the constraint places the constrained object directly in the middle of those two targets.
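The two-target behavior described above falls out of a weighted average of the target positions. This is a minimal sketch of that idea; treating unequal influences as normalized weights is my assumption about how such a constraint would generalize, and the function name is made up for the example.

```python
def point_constraint(targets):
    """Return the constrained (x, y, z) position, or None if no target has influence.

    targets is a list of ((x, y, z), influence) pairs. Each position is
    weighted by its influence and the result is divided by the total influence.
    """
    total = sum(influence for _, influence in targets)
    if total == 0:
        return None
    return tuple(
        sum(position[axis] * influence for position, influence in targets) / total
        for axis in range(3)
    )
```

Two targets with an influence of 1.0 each give the midpoint, and animating one influence down to 0 slides the object onto the other target, which is what you want for hand-offs in character / object interaction.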

Image Formats and Multilayer Expansion

Since Blender is now capable of reading .PSD files, it only stands to reason that the ability to write .PSD files would be an excellent addition. Loading and saving of multilayer PSD images would be great, as most software around cannot directly edit multilayer EXR images. Also, PSDs can support 16-bit and higher color data, meaning users would be able to choose whether or not the 32-bit data which would normally be written to the EXR would be truncated.

Closing Comments

If you've read down this far, you're a champ. ^_^ Thanks for giving my ideas a read-through. I don't expect all of them to get into Blender, but I thought having my voice heard was important enough that I sat down and wrote all this out.

Anyway, thanks again as always to the developers who spend countless hours making Blender one of the best software packages I've ever used. I hope something in what I've written inspires you.