User:Kwk/Gsoc2010/OldProposal

The application has been submitted to Google and will be edited further there.


GSoC'10 Proposal for Ptex Integration

Name

Konrad Wilhelm Kleine

Email / IRC / WWW

My email address is konrad @ nospam @ konradwilhelm.de. My nickname on #blendercoders is kwk. My personal web page is http://www.konradwilhelm.de.

Synopsis

Texture mapping using explicitly assigned UV coordinates can be a tedious task, not only in Blender but in other 3D programs as well. Once textured using UVs, a model must pass animation/deformation tests that check for texture stretching and proper blending of seams. Other texture mapping techniques, like projection painting, require reference images from multiple viewing angles. The number of images needed depends on how many parts of the model's mesh occlude each other, and thus on its complexity. With adaptive, multi-resolution texturing and anisotropic filtering across face boundaries between different texture resolutions, it is possible to retain high texture detail and avoid image stretching in areas of high curvature. The Ptex texture mapping library implements its own file format as well as caching and filtering mechanisms to texture map multi-resolution (Catmull-Clark subdivision) models using intrinsic per-face parameterization and face adjacency data.

The goal of this project is to integrate Ptex into the Blender painting and rendering pipeline.
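
To make "intrinsic per-face parameterization and face adjacency data" more concrete, here is a minimal C++ sketch of the kind of per-face record such a scheme needs. The names `FaceRecord`, `EdgeRef`, `crossEdge` and `linkFaces` are hypothetical, and the edge numbering (0 = bottom, 1 = right, 2 = top, 3 = left) is an assumption for illustration, not a transcription of the Ptex API.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical per-face record for a quad mesh: each face has its own texture
// resolution (stored as log2 sizes) and knows, for each of its four edges,
// which face lies across that edge and which edge of that face it is.
// Assumed edge order: 0 = bottom, 1 = right, 2 = top, 3 = left.
struct FaceRecord {
    int ulog2 = 0;  // texture width  = 2^ulog2 texels
    int vlog2 = 0;  // texture height = 2^vlog2 texels
    std::array<int, 4> adjFace{{-1, -1, -1, -1}};  // -1 marks a mesh boundary
    std::array<int, 4> adjEdge{{-1, -1, -1, -1}};  // matching edge on the neighbour
};

struct EdgeRef {
    int face;  // -1 if there is no neighbour
    int edge;
};

// Crossing edge `edge` of face `face` leads onto this edge of the neighbouring face.
EdgeRef crossEdge(const std::vector<FaceRecord>& faces, int face, int edge) {
    assert(face >= 0 && face < static_cast<int>(faces.size()));
    assert(edge >= 0 && edge < 4);
    const FaceRecord& f = faces[face];
    return EdgeRef{f.adjFace[edge], f.adjEdge[edge]};
}

// Records that edge `ea` of face `a` and edge `eb` of face `b` are shared.
void linkFaces(std::vector<FaceRecord>& faces, int a, int ea, int b, int eb) {
    faces[a].adjFace[ea] = b;
    faces[a].adjEdge[ea] = eb;
    faces[b].adjFace[eb] = a;
    faces[b].adjEdge[eb] = ea;
}
```

For two quads side by side, `linkFaces(faces, 0, 1, 1, 3)` joins face 0's right edge to face 1's left edge. A filter that runs off face 0 to the right can then continue on face 1 without any UV seam, even if the two faces have different `ulog2`/`vlog2` values.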

Benefits to Blender

Texture artists can concentrate on the texture instead of the underlying mesh geometry. If a texture needs more detail at a certain location, an artist can select the corresponding faces of the subdivision control mesh and increase their texture resolution. This is expected to work without distorting the rest of the texture and without adding seams between the different texture resolutions. Since the texture resolution is increased only in the selected area, this method is also memory efficient. Because Ptex textures each face separately, there is no need to add UV seams to the model. If artists prefer to paint in 2D rather than 3D, that works too, because any texture faces can be projected to 2D and brought back later.
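
The memory argument can be checked with a back-of-the-envelope calculation. The C++ sketch below sums the uncompressed size of one texture per face; `FaceRes` and `textureBytes` are hypothetical helpers, the face counts, resolutions and 8-bit RGB format in the usage note are made-up numbers, and mipmaps and compression are ignored.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical per-face resolution, stored as log2 sizes.
struct FaceRes {
    int ulog2;
    int vlog2;
};

// Bytes needed to store one texture per face, uncompressed and without mipmaps.
std::uint64_t textureBytes(const std::vector<FaceRes>& faces, int channels, int bytesPerChannel) {
    assert(channels > 0 && bytesPerChannel > 0);
    std::uint64_t total = 0;
    for (const FaceRes& r : faces) {
        const std::uint64_t texels =
            (std::uint64_t{1} << r.ulog2) * (std::uint64_t{1} << r.vlog2);
        total += texels * static_cast<std::uint64_t>(channels) *
                 static_cast<std::uint64_t>(bytesPerChannel);
    }
    return total;
}
```

With 1000 faces and 3 one-byte channels, raising every face to 256x256 needs 196,608,000 bytes, whereas raising only 50 selected faces to 256x256 and keeping the other 950 at 32x32 needs 12,748,800 bytes.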

Eliminating the need to add UVs to a model manually gives more freedom in the production pipeline. You can jump back and forth between the modeling, animation and texturing stages. In fact, artists can start painting textures without any explicit UV parameterization on the model. This drastically reduces the number of textures that are redrawn only because UV parameters changed. Overall, this shortens feedback times and adds parallelism to the production, which helps the visual director implement his vision much more independently of the production schedule.

Deliverables

- (a) The Blender user shall be able to view existing Ptex files from within the UV/Image editor and query some information about the internals of an opened Ptex file, e.g. the mesh type (triangles or quads) the file was created for.

- (b) Before manipulating Ptex files on a 3D model, I think it is best to concentrate on one mesh type, either quads or triangles, and be able to texture such meshes.

- (c) As soon as I can apply a Ptex texture to a model, I can start adding paint capabilities and a way to change the texture resolution of specific faces. The user shall be able to paint using the standard brushes.

- (d) All deliverables so far only consider integrating Ptex into Blender’s UI workflow. There are probably numerous places where Blender’s rendering engine also needs to be modified. To be honest, I do not know yet what exactly needs to be adjusted, but the “Project Details” section contains some obvious points where Blender’s rendering engine is likely to differ from Pixar’s Renderman (Walt Disney’s choice and the first Ptex integration target).

In parallel with each deliverable, I will write end-user documentation on the purpose of each component and on how to use it. Every development insight I gain that can help improve the Ptex integration into Blender will be shared, too. After GSoC, or whenever there is time during GSoC, I can assist the people writing unit tests for the Ptex integration, or at least tell them what the code is supposed to do.

Project Details

Here I explain my more technical thoughts on each deliverable and on the Ptex integration in general.

- (a) Ptex has its own file format (http://ptex.us/PtexFile.html) that can be written and read using the accompanying Ptex API (http://ptex.us/apidocs/index.html). Depending on the mesh type (quads or triangles) the Ptex file was created for, the stored textures need special handling to be displayed with OpenGL, since triangular textures are stored in a symmetric quad texture (http://ptex.us/tritex.html). When displaying Ptex files as described in deliverable (a), I need to take care of all of these points. By mapping the Ptex texture to the image window of the UV/Image editor, I will get to know how Blender handles OpenGL textures and also learn how to extract parts of the Ptex texture so they can later be applied to mesh faces with intrinsic UVs.
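
A triangle whose edges are divided into res steps contains res(res+1)/2 upright and res(res-1)/2 inverted triangular texels, res² in total, which is why they fit exactly into a res by res square. The C++ sketch below shows one way the triangle case could be handled when building an OpenGL texture for display: it maps a point of a triangular face to the square cell holding the texel that contains it. The layout it uses (upright texel (i, j) in cell (i, j), inverted texel (i, j) mirrored into cell (res-1-j, res-1-i)) is an assumption for illustration; the actual convention has to be taken from http://ptex.us/tritex.html. `Cell` and `triangleTexelCell` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Cell {
    int col;  // derived from u
    int row;  // derived from v
};

// Maps the coordinates (u, v), u, v >= 0, u + v <= 1, of a point on a
// triangular face to the cell of a res x res square that stores the
// triangular texel containing the point.
// Assumed layout (check against the Ptex documentation): upright texel (i, j)
// is stored in cell (i, j), where i + j < res; inverted texel (i, j), with
// i + j < res - 1, is mirrored into cell (res-1-j, res-1-i), whose indices sum
// to at least res, so upright and inverted texels never share a cell.
Cell triangleTexelCell(double u, double v, int res) {
    assert(res > 0);
    assert(u >= 0.0 && v >= 0.0 && u + v <= 1.0 + 1e-9);
    const double su = u * res;
    const double sv = v * res;
    int i = std::min(static_cast<int>(std::floor(su)), res - 1);
    int j = std::min(static_cast<int>(std::floor(sv)), res - 1);
    // A point on the outer (u + v = 1) edge can land one step past the last
    // diagonal; it is then a corner of a neighbouring upright texel.
    while (i + j > res - 1) {
        if (i > j) --i; else --j;
    }
    const double fu = su - i;
    const double fv = sv - j;
    const bool inverted = (fu + fv > 1.0) && (i + j < res - 1);
    if (!inverted) return Cell{i, j};
    return Cell{res - 1 - j, res - 1 - i};
}
```

Under this assumed layout, every cell of the square is used by exactly one triangular texel, so the whole face can be uploaded as an ordinary square OpenGL texture and unpacked again on lookup.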

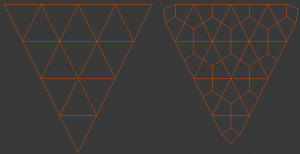

- (b) What about triangle and non-quad meshes? Well, since Ptex is most likely to be used with (multi-resolution) meshes using Catmull-Clark subdivision, I can primarily concentrate on quad-based meshes. As pointed out in “Real-Time Rendering (Third Edition)” on p. 623f “[…] after the first subdivision step [from subdivision level 2 onwards], only vertices of valence 4 are generated […]”. In fact even if the base mesh only consists of triangular faces, the first subdivision level produces solely quad faces. This is illustrated in the following figure.
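
The counting argument behind this can be written down in a few lines. The C++ sketch below (a hypothetical helper, not Blender's subdivision code) computes how many faces a Catmull-Clark mesh has after a given number of subdivision steps, given the number of sides of each base face: the first step splits an n-sided face into n quads, and every later step splits each quad into four.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Number of faces after `levels` Catmull-Clark steps. `faceSides` holds the
// number of sides of each base face. From level 1 onwards all faces are quads.
std::uint64_t catmullClarkFaceCount(const std::vector<int>& faceSides, int levels) {
    assert(levels >= 0);
    if (levels == 0) return faceSides.size();
    std::uint64_t quads = 0;
    for (int n : faceSides) {
        assert(n >= 3);
        quads += static_cast<std::uint64_t>(n);  // an n-gon becomes n quads
    }
    for (int l = 1; l < levels; ++l) quads *= 4;  // each quad becomes 4 quads
    return quads;
}
```

A cube (six quads) yields 24 and then 96 quads; a tetrahedron (four triangles) yields 12 and then 48 quads, so from level 1 onwards every face is a quad.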

- (c) Painting involves changing the mapped texture of the face being painted and writing these changes back to the Ptex file. From what I have seen so far, there is a way to “commit” changes to a Ptex file (http://ptex.us/apidocs/classPtexWriter.html#c6428429908cee5c9a498757084300b3), but I don’t know of any undo/redo stack in the Ptex API. Maybe this needs to be implemented in Blender.
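
A minimal sketch of what a Blender-side undo stack for Ptex painting could look like, assuming painted faces are held in memory as plain texel buffers keyed by face id. `PaintUndoStack`, `FaceTextures` and the snapshot-per-stroke design are hypothetical; only the faces touched by a stroke are copied, so the cost grows with the stroke rather than with the whole texture.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

// Hypothetical in-memory paint buffers: face id -> 8-bit texels of that face.
using Texels = std::vector<std::uint8_t>;
using FaceTextures = std::map<int, Texels>;
// One undo step: saved texels of every face a single stroke touched.
using UndoStep = std::map<int, Texels>;

// Hypothetical undo/redo stack kept outside the Ptex file.
class PaintUndoStack {
public:
    // Call before a stroke modifies the faces in `faceIds`.
    void beginStroke(const FaceTextures& tex, const std::vector<int>& faceIds) {
        UndoStep step;
        for (int id : faceIds) step[id] = tex.at(id);  // snapshot before painting
        undo_.push_back(std::move(step));
        redo_.clear();  // a new stroke invalidates the redo history
    }

    bool undo(FaceTextures& tex) { return transfer(undo_, redo_, tex); }
    bool redo(FaceTextures& tex) { return transfer(redo_, undo_, tex); }

private:
    // Swaps the saved texels of the newest step in `from` with the current
    // ones. Afterwards the step holds the texels that were current, which is
    // exactly what the opposite operation needs, so it is moved to `to`.
    static bool transfer(std::vector<UndoStep>& from, std::vector<UndoStep>& to,
                         FaceTextures& tex) {
        if (from.empty()) return false;
        UndoStep step = std::move(from.back());
        from.pop_back();
        for (auto& entry : step) std::swap(tex.at(entry.first), entry.second);
        to.push_back(std::move(step));
        return true;
    }

    std::vector<UndoStep> undo_;
    std::vector<UndoStep> redo_;
};
```

In this design, committing to the Ptex file would be a separate step that writes out the current buffers, so the undo history itself never has to touch the file.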

After a discussion on the Blender mailing list, I explicitly want to exclude memory-efficient, dynamic loading and unloading of textures from the Ptex file. In short, I consider it best to keep the cycle of development and user feedback short. “Maybe to them [the artists] it is more important to elaborate the brush system at first instead of increasing the maximum texture size. Who knows?” (http://lists.blender.org/pipermail/bf-committers/2010-March/026882.html). If the opportunity arises at the end of the GSoC phase, I will try to implement efficient (un)loading. In any case, I will document the places in the code that need attention, for instance painting to a texture that has already been dynamically unloaded.

There are parts of the Ptex paper where Pixar's Renderman (PRMan) provides the solution to problems that can arise when using Ptex. As part of my preparation for GSoC, I will investigate these problems and whether a solution comparable to PRMan's is already present in Blender. Here are a few of these topics:

- When an anisotropic filter kernel is applied to two faces with different texture resolutions, Ptex tries to make "more consistent normals" on the edges (section 6.2 of the Ptex paper). If these edge normals do not align perfectly, there will be lighting artifacts. The paper states that this isn't a problem because PRMan automatically adds micropolygons to fill the gap.

- Ptex pre-samples textures to avoid mipmap discontinuities. According to the paper, textures with different resolutions have texture-space "grids" of different density. Once again, Walt Disney relies on PRMan and its “smooth derivatives” feature, which makes du and dv values “continuous across the surface before passing them to the shader”.
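
A small worked example shows why consistent derivatives matter at the boundary between faces of different resolution. It assumes the common choice of mip level as the log2 of the filter footprint in texels; this is an assumption for illustration and neither Ptex's nor PRMan's code, and `mipLevel` and `effectiveTexels` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Mip level chosen for a filter footprint `du`, measured in the face's own
// [0, 1] parameter space, on a face that is 2^resLog2 texels wide.
// Level 0 is the full-resolution texture; the result is clamped to the
// available levels 0..resLog2.
double mipLevel(double du, int resLog2) {
    assert(du > 0.0 && resLog2 >= 0);
    const double footprintTexels = du * std::ldexp(1.0, resLog2);  // du * 2^resLog2
    const double level = std::log2(footprintTexels);
    return std::min(std::max(level, 0.0), static_cast<double>(resLog2));
}

// Number of texels across the face at the selected mip level.
double effectiveTexels(double du, int resLog2) {
    return std::ldexp(1.0, resLog2) / std::exp2(mipLevel(du, resLog2));
}
```

With du = 1/64 on both sides of an edge, a 2^6-wide face is sampled at level 0 and a 2^8-wide neighbour at level 2, yet both end up with 64 effective texels across the face, so the lookups agree. If the differently dense grids instead produce du = 1/64 on one face and du = 1/32 on the other, the effective resolution jumps from 64 to 32 at the edge, which is the kind of discontinuity that continuous du and dv values avoid.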

Project Schedule

Until May 24, before the actual coding phase begins, I will familiarize myself with the Blender code base, either by fixing bugs or by starting small tests to integrate Ptex into the build process. I will also chat with the Ptex developers on their mailing list (http://ptex.us/mailinglists.html).

Until the mid-term evaluation on 12th June, I plan to implement (a) and (b): the Ptex texture view mode in the UV/Image Editor and the texturing of models using existing Ptex files. I expect these first deliverables to be naturally more time-consuming. After the mid-term evaluation, (c) and (d) shall be implemented: painting to Ptex textures and the integration into the rendering engine/pipeline.

Bio

My name is Konrad Kleine and I'm a Master's student of "Computer Vision & Computational Intelligence" in Germany, holding a Diploma in Applied Computer Science from the University of Applied Science in Iserlohn. I am currently living in Spain with my girlfriend for half a year, learning Spanish and taking exams (remotely) at the end of March.

I have a strong background in using and writing open source software and in working for companies that produce and distribute open source software, most recently GONICUS and Trolltech (now Nokia). Over the years I've gained in-depth experience in software development with C, C++, Python, Qt and OpenGL in multi-platform environments. My contribution to the Blender code base is small and consists only of a patch for ‘Reset "Solid OpenGL lights" not applied after loading factory settings’ [#18252] (http://projects.blender.org/tracker/?func=detail&aid=18252). Apart from writing code, I’m totally into music and love to play electric and acoustic guitar.

I've been into graphics programming since I started my studies back in 2004, and Blender has always inspired me. I'm passionate about graphics and thrilled by 3D mathematics.

I'm nearly done with my studies and see my participation in GSoC with Blender as a chance to seriously get into the digital content creation (DCC) tools or graphics programming industry. I also hope that participating in GSoC will give me deeper insight into Blender's development and code base and put me in a position to help develop Blender in the future. If I understand the idea behind GSoC correctly, it's also about finding new people to work on the project for longer than just the summer.

Here’s a list of relevant projects (research or open source) I have worked on. Most of them involved a lot of teamwork, conception and programming. If a project is not about 3D, it is at least about participating in the open source community.

- Classification of facial expressions within video streams

- The most recent student project I am working on is the classification of facial expressions within video streams. We are trying to extract facial feature points (FFPs) using cascades of Haar-based feature detectors and a set of image filters. So far we have batch-processed roughly 1000 pictures of 2 individuals, each expressing 7 facial emotions. The results are used to train an artificial neural network (ANN) that shall later help determine the facial expression. For more information, please visit: http://code.google.com/p/klucv2/

- Multi-Touch-Display

- As part of my Computer Vision 1 course, a fellow student and I constructed a multi-touch display based on Jeff Han's paper Multi-Touch Sensing through Frustrated Total Internal Reflection. As a proof of concept we implemented a photo application using OpenCV for image-based input detection and OpenGL for visualization.

- GPUANN

- In a team of 4 people, I was responsible for the design and implementation of an application framework to train and execute artificial neural networks (ANN) on NVIDIA graphics cards using CUDA. The training and propagation algorithm had to scale with the size of a network. The networks themselves were stored as XML files that the application framework validated both formally and logically.

- QBIC – Play Smart!

- QBIC is basically a "four in a row" board game, except that you can place your tokens on 16 sticks. This way you connect four tokens in 3D space rather than on a 2D plane. The game was the final examination project of three of my fellow students and me. We used OGRE as our graphics rendering engine and many other open source components, like Blender for the models and The GIMP for the textures. For more information, please visit: http://sourceforge.net/projects/qbic/

- Wiimote head tracking

- Head tracking is a technique that provides an immersive 3D experience by aligning a displayed scene relative to the viewer's head. I was so inspired by Johnny Lee’s head tracking demonstration that I decided to code a similar one myself, using OpenGL and Linux instead of Windows and DirectX. The field of view and freedom of movement are somewhat limited compared to Lee’s solution. Here’s the code: http://bazaar.launchpad.net/~konrad.kleine/wiimote-head-tracking/trunk/annotate/head%3A/main.cpp

- OLPC

- For the “One Laptop Per Child” initiative, I wrote a patch to fix a bug in Sugar. The goal was to create an archive of the past N log files during the boot sequence. With slight modifications to the code formatting (http://lists.sugarlabs.org/archive/sugar-devel/2007-May/002705.html), my patch was accepted by Marco Pesenti Gritti. The Python source code for the patch is on this mailing list: http://lists.sugarlabs.org/archive/sugar-devel/2007-May/002700.html

- ASURO

- A fellow student and I assembled the autonomous ASURO robot and programmed it with a fast yet robust path-finding algorithm.

- Blender Classes

- I offered various Blender classes on 3D modeling, texturing, shading and animation. I also helped fellow students in a multimedia programming course I mentored to use Blender as an exporting tool for the OBJ format.

- Ptex

- I have contributed a full-featured CMake build system to Ptex that supports native compilation on Linux, Mac and Windows with IDE support, as well as running the tests on Windows using a modified Python script. Packaging is now also possible using CPack. Here is my Ptex fork on GitHub: http://github.com/kwk/ptex.

Thanks in advance for giving me and other students the opportunity to apply for the Blender project!

I look forward to hearing from you!

Kind regards

Konrad