User:Lucarood/Cloth/Proposal

Contents

- 1 Proposal for improvements in Cloth Simulation

- 1.1 Benefits

- 1.2 Scope

- 1.3 Deliverables

- 1.4 Development Details (technical stuff)

- 1.5 The Future

Proposal for improvements in Cloth Simulation

The developments proposed here aim to make Blender's Cloth Simulator and its related workflow more suitable for use in movie productions, with a special focus on cartoon animation and, more specifically, the needs of the Agent 327 project. This project further aims to make the Cloth Simulator a full replacement for Blender's Soft Body solver, removing the need to maintain two systems with greatly overlapping features and making way for a single unified solution.

Benefits

Cloth simulation is a fundamental part of adding visual interest to an animation. However, because these simulations are often unpredictable and hard to control, many people resort to animating cloth pieces manually. This project will give the user better tools to control the appearance of cloth simulations, and will improve the flexibility, reliability, and accuracy of the simulator itself.

Scope

The portion of this project that consists of improvements to the cloth simulator itself will be limited to developments in the "pre-solver" part of the simulator. Specifically, changes will be made to the mass-spring model and force calculations, as well as to the mass and force distribution and rest state evaluation. This means that the current time integrator (the actual solver) and the caching systems will remain unchanged (for now).

The new collision system will retain the current two-step collision evaluation scheme, in which the cloth is solved without collisions, collisions are computed, and the cloth is solved again. However, the collision detection code will be completely rewritten, along with most of the collision response.

The rest of this project, which is focused on workflow, consists of smaller projects: a redesign of the cloth UI as proposed in T49145, whose design principles easily accommodate all the new features in the cloth simulator; the implementation of a new Modifier (Surface Deform); and minor changes to the vertex group/weight system.

Deliverables

Most improvements to the cloth simulator don't result in a directly visible change for the user (e.g. new UI properties). However, they can be observed in the improved behavior of cloth simulations. The workflow improvements and tools, on the other hand, do result in more tangible changes for the user. These are the improvements:

- A new and improved mass distribution model. This will happen in the background, and change nothing for the user, but will improve cloth behavior.

- An improved mass-spring model, including angular bending springs. This mostly results in behavior improvements as well, but also has some direct changes for the user. Namely, the "Structural Stiffness" property will be split into "Compression", "Extension" and "Shear", while the bending stiffness will be kept, though it will control angular springs instead of the current linear ones.

- Plasticity will be implemented (the ability for the mesh to retain simulated deformations, such as folds), and will be accessible to the user as a single property in the 0-1 range.

- A new and improved collision detection and response system. This will integrate the object and self collision systems into a single unified solution. The new, completely different system will require a new UI consisting only of "Friction", "Elasticity" (bounciness) and "Cloth Thickness", while the collision groups can of course be kept. With the new system, an "Advanced" section should also be added to the cloth UI, where the user can specify the collision detection methods to use (more details on that in the "Collision Handling" section below).

- Addition of an option to select a separate object for the "Dynamic Mesh" feature. This would be a single box next to the "Dynamic Mesh" option, where the user could set the desired object (the usefulness of this feature is detailed in the "External Rest Position Object" section).

- UI redesign. This aims to make the UI more intuitive and well organized, and a new design (with code implementation) is already proposed here: T49145

- Surface Deform Modifier. This will behave similarly to the "Mesh Deform" Modifier, but will allow meshes to be bound to non-manifold proxies (ideal for cloth simulation). Its interface will be similar to that of the "Mesh Deform" Modifier, with only the "Precision" and "Dynamic" options removed, while an "Interpolate Normals" option will be added, and possibly a "Bind Method" option (details on that in the "Surface Deform Modifier" section).

- Weight Keys. This will add an animatable "Influence" value to each vertex group, identical to the "Value" option in the Shape Keys. This will not affect the existing vertex group system, but every object will have a hard-coded "Combined" vertex group, which is a mix of all other vertex groups based on their influence values. This will enable the user to easily animate the effect of vertex groups, which is useful for many things, such as being able to change pinned vertices on a cloth during simulation.

Development Details (technical stuff)

These developments are divided into two categories: improvements and features to be implemented in the actual Cloth Simulator, and tools that enable a better cloth simulation workflow.

Summary

The development descriptions below are quite thorough, and I may actually have gotten carried away in the details. So for those not wanting to read this super extensive document, here is a little summary of the things I plan on implementing/improving:

- Implement a Voronoi-based mass and force distribution method.

- Do an almost complete rewrite of the currently terrible mass-spring model.

- Implement plasticity, so that simulated meshes can partly retain deformations (has to work with dynamic basemesh, shrinking and such).

- Rewrite the collision system (both object and self collisions).

- Get rest position from separate object (possibly linked copy).

- UI redesign.

- Implement a Surface Deform Modifier.

- Implement "Weight Keys".

Cloth Simulator improvements

Mass and Force Distribution

Currently mass is being applied uniformly to every vertex in the mesh, without taking the surrounding surface area into account. That causes some issues when applying forces at polygon level, causing inconsistencies on meshes with even slightly non-uniform polygon distribution. Further, the method currently used for applying forces dependent on surface area, such as wind, is highly flawed and results in greatly uneven forces being applied to the vertices.

To correct this problem, each polygon in the mesh should have a corresponding list of all vertices it affects and a weight value for each one, based on the percentage of the polygon's surface area that should influence that vertex. The issue, however, is how to determine adequate vertex weights per polygon in such a way that the distribution is correct even in highly uneven meshes. To compute this, I have developed an efficient algorithm for a Voronoi-esque tessellation over the surface of a 3D mesh, which solves the issue of irregular meshes, as it mostly ignores the discrete polygon structure and treats the mesh as a nearly continuous surface. This method would give consistent mass and force distribution on the cloth mesh, something that is overlooked by most cloth simulation solutions.
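The Voronoi-style tessellation itself is not specified in this document, so the sketch below uses a simpler stand-in to illustrate area-dependent vertex masses: barycentric lumping, where each triangle hands one third of its area to each of its corners. The function names and the `density` parameter are hypothetical.

```python
import math

def triangle_area(a, b, c):
    """Area of a 3D triangle from the cross product of two edge vectors."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def lumped_vertex_masses(verts, tris, density=1.0):
    """Give each vertex one third of the area of every triangle it belongs to.

    This is a barycentric stand-in, NOT the Voronoi-style tessellation
    proposed above.
    """
    masses = [0.0] * len(verts)
    for i, j, k in tris:
        share = density * triangle_area(verts[i], verts[j], verts[k]) / 3.0
        for v in (i, j, k):
            masses[v] += share
    return masses

# A unit quad split into two triangles along the 0-2 diagonal.
verts = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
tris = [(0, 1, 2), (0, 2, 3)]
masses = lumped_vertex_masses(verts, tris)
```

The total mass equals the surface area times the density, but the two vertices on the diagonal receive twice the mass of the other two even though the quad is symmetric. That dependence on the discrete polygon structure is the kind of artifact a surface-based tessellation is meant to avoid.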

Physical model

Blender currently implements the mass-spring model proposed by [Choi and Ko 2002]. The problem with this model is that as with most academic studies, the solutions are developed with highly constrained, specific, and purely theoretical problems in mind, and frequently end up as difficult methods to generalize for use in the real world. For reasons described below, this model is only really suitable for simulating planar meshes with a grid-like polygon structure, representing a woven fabric (theoretically formed by inelastic fibers).

The first issue happens already at the moment of spring assignment, where a grid-like pattern of vertices is assumed. Vertices one grid unit apart are connected by tension (structural) springs, and vertices two grid units apart are connected by compression (bending) springs. Obviously in actuality, it is impossible to represent any desired mesh shape with a perfect grid structure, which is why many failures occur during compression spring assignment in Blender, where springs often are missing, thus creating a malformed cloth structure representation.

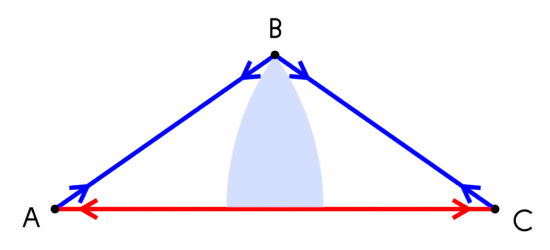

The above image is a cross-sectional view of a small fragment of a cloth structure, as modeled by [Choi and Ko 2002], and as currently implemented in Blender. The blue lines represent tension springs (which correspond to the existing mesh edges), and the red line represents a compression spring (which has no analogue in the original mesh, as it is computed by skipping one vertex in a vertex loop). It is important to note that tension springs only apply forces to pull vertices together, and only do so when they are extended beyond their rest length. Compression springs, on the other hand, only apply forces to push vertices apart, and only do so when compressed below their rest length.

With that information in mind, and considering that the above image represents the cloth in a rest state, it is easy to see that the vertices are under-constrained, and can move through large portions of space without any spring forces affecting them, thus not being influenced by neighboring vertices, and essentially acting as free-floating particles. I have marked this area of unconstrained motion for the vertex B as the shaded area in the above image (note that the unconstrained area should actually continue on the mirror side of the AC spring, but I have not drawn that for the sake of space).

So as a result of all that, it can be seen that the system only provides resistance towards further folding the mesh (reducing the internal angle of the vertex B), but no resistance at all towards unfolding/flattening or even flipping the mesh. The only case where all vertices are fully constrained while using this model, is when the rest state of the mesh is a plane, and thus the length of the compression spring would be equal to the sum of the adjacent tension springs, and as a consequence, either compression or tension forces would always be applied to the vertices in any state other than the resting position.
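The argument above can be checked numerically with a small 2D sketch of the cross section. The coordinates, stiffness value and function names are made up for illustration; only the one-sided spring behavior is taken from the description above.

```python
import math

def dist(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def one_sided_force(length, rest, stiffness, kind):
    """Signed force of a one-sided spring: negative pulls the ends together,
    positive pushes them apart, zero when the spring is inactive."""
    if kind == "tension" and length > rest:
        return -stiffness * (length - rest)
    if kind == "compression" and length < rest:
        return stiffness * (rest - length)
    return 0.0

# Rest state of the cross section: B is the fold between A and C.
A0, B0, C0 = (-1.0, 0.0), (0.0, 1.0), (1.0, 0.0)
REST_AB, REST_BC, REST_AC = dist(A0, B0), dist(B0, C0), dist(A0, C0)

def spring_forces(a, b, c, stiffness=1.0):
    """Forces of the AB and BC tension springs and the AC compression spring."""
    return (
        one_sided_force(dist(a, b), REST_AB, stiffness, "tension"),
        one_sided_force(dist(b, c), REST_BC, stiffness, "tension"),
        one_sided_force(dist(a, c), REST_AC, stiffness, "compression"),
    )

flattened = spring_forces(A0, (0.0, 0.0), C0)        # B pushed down onto the AC line
flipped = spring_forces(A0, (0.0, -1.0), C0)         # B mirrored across AC
folded = spring_forces((-0.5, 0.0), B0, (0.5, 0.0))  # A and C brought together
```

Both the flattened and the flipped configurations produce no force at all, while only the folded configuration activates the compression spring.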

Another thing to note, is that in this model, the cloth's compression resistance is entirely dependent on the bending resistance, as they are both provided by the same springs, thus very flexible materials would inherently be highly compressible when using this model. This is absolutely undesirable, as it diverges greatly from reality, and also greatly limits artistic control. The problem goes to the extreme, where if you set bending forces to zero, you could compress your entire cloth mesh into a single point in space, without any resistance, and the cloth would happily rest in that absurd state.

Linear Structural Springs

A better model for compression/extension is to have only linear springs between adjacent vertices (which would never fail to be assigned, as they run along existing edges), acting in both the compression and the extension direction. This would result in much more realistic cloth behavior, and give much greater control over the material properties, as the compression forces are completely independent from the bending forces. It also makes it possible to add one more "stiffness" property, so the user can set compression stiffness separately from tension and bending.
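A minimal sketch of such a two-sided spring, assuming plain Hooke's law with one stiffness per direction. The function and parameter names are hypothetical, and the actual force model may differ.

```python
def structural_spring_force(length, rest, k_tension, k_compression):
    """Signed force along an edge spring that acts in both directions.

    Negative values pull the end points together, positive values push
    them apart. Each direction uses its own stiffness.
    """
    stretch = length - rest
    k = k_tension if stretch > 0.0 else k_compression
    return -k * stretch

pull = structural_spring_force(1.5, 1.0, k_tension=10.0, k_compression=2.0)
push = structural_spring_force(0.5, 1.0, k_tension=10.0, k_compression=2.0)
```

Because the spring never switches off, a vertex can no longer drift freely around its rest position, and the compression response is set independently of any bending stiffness.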

Angular Bending Springs

For bending, I propose the implementation of angular springs. The computed forces would thus be proportional to the delta angles between two adjacent faces, and would be applied along the normals of their respective polygons (not exactly along the normals, but that is a simplification for the sake of "brevity"). Forces with the same magnitude as the total resulting vector, but in the opposite direction, would be applied to the edge between the faces, thus generating a balanced system. This model would react in closer accordance with real-world materials, and would provide signed angles, thus not allowing the mesh to rest in a flipped configuration.
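The signed angle is what rules out the flipped rest state. The sketch below computes a signed dihedral angle for two triangles sharing an edge and a restoring moment proportional to the deviation from the rest angle. It leaves out how that moment is distributed as forces onto the vertices, and the names and sign convention are assumptions.

```python
import math

def sub(a, b):
    return tuple(a[i] - b[i] for i in range(3))

def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def signed_dihedral_angle(p0, p1, p2, p3):
    """Signed angle between faces (p0, p1, p2) and (p0, p3, p1) sharing edge p0-p1.

    Zero when the faces are coplanar and unfolded; the sign tells the
    direction of the fold.
    """
    edge = sub(p1, p0)
    length = math.sqrt(dot(edge, edge))
    axis = tuple(c / length for c in edge)
    n1 = cross(edge, sub(p2, p0))   # normal of the first face
    n2 = cross(sub(p3, p0), edge)   # normal of the second face
    return math.atan2(dot(cross(n1, n2), axis), dot(n1, n2))

def bending_moment(angle, rest_angle, stiffness):
    """Restoring moment proportional to the deviation from the rest angle."""
    return -stiffness * (angle - rest_angle)

p0, p1, p2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.0, 0.0)
angle_flat = signed_dihedral_angle(p0, p1, p2, (0.5, -1.0, 0.0))
angle_up = signed_dihedral_angle(p0, p1, p2, (0.5, 0.0, 1.0))
angle_down = signed_dihedral_angle(p0, p1, p2, (0.5, 0.0, -1.0))

# Rest state folded up, current state folded down: the moment is non-zero.
moment = bending_moment(angle_down, angle_up, stiffness=2.0)
```

The two 90 degree folds get angles of opposite sign, so a mesh whose rest state is folded one way is pushed back when it flips to the other side.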

Shear Springs

Shear springs are currently assigned across the diagonals of quad faces, which is fine, and is the desired behavior. However, the code was written before Blender had ngons, so it neglects those and they end up without any shear springs. So a slight change has to be made to account for them, and assign shear springs between all non-adjacent vertices of a face.
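Assigning shear springs between all non-adjacent vertices of a face can be sketched as follows. The function name is hypothetical, and a face is assumed to be an ordered list of vertex indices.

```python
def shear_spring_pairs(face):
    """Return all vertex pairs of a face that do not share an edge."""
    n = len(face)
    pairs = []
    for i in range(n):
        for j in range(i + 2, n):
            if i == 0 and j == n - 1:
                continue  # first and last vertex share the closing edge
            pairs.append((face[i], face[j]))
    return pairs

triangle = shear_spring_pairs([0, 1, 2])
quad = shear_spring_pairs([0, 1, 2, 3])
pentagon = shear_spring_pairs([0, 1, 2, 3, 4])
```

A triangle gets no shear springs, a quad gets its two diagonals as before, and an n-gon gets n(n-3)/2 springs.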

Another slight improvement would be to expose shear spring stiffness to the user as a separate value from the structural springs. This would allow the simulation of a much wider range of materials, such as a fabric with a very coarse open weave formed from very stiff fibers, which would obviously be very stiff, but would also have a very low shear resistance.

Spring Properties

As already described in the previous sections, after the proposed changes, there would be several independent spring stiffness properties (tension, compression, bend, and shear), which would allow much more control over the fabric's physical properties. However, to really have an absolutely complete range of possibilities regarding material properties, there should be a linear and an exponential component for each of the stiffness values, to allow the accurate representation of materials with a more exotic behavior, such as rubber.

After all the proposed changes (including some of the below), the user would have fine control over the behavior of any material imaginable, ranging from silk to rubber to paper or even something like wire mesh.

Plasticity

Plasticity is the behavior where materials retain deformations (such as folds), and is the final piece that will enable the cloth simulator to mimic basically any sheet-like material.

It is usually really straightforward to implement, as it is just a matter of reassigning the spring rest lengths/angles to a value interpolated between the previous rest value and the current state of the cloth mesh, based on a plasticity parameter in the 0-1 range, set by the user. So a value of 0 would mean no influence at all, and the rest position would remain unaffected by the deformations, while a value of 1 would mean that any deformation would fully replace the rest position.

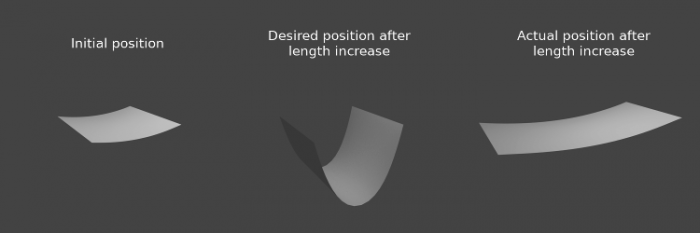

The issue is that in Blender we also have other features that affect the rest state throughout the simulation, such as "Shrinking" and "Dynamic Mesh", which means that we can not simply override the rest state with the plastic deformations, as it would be overridden again by those (and potentially other) features. The solution is to store the plastic deformations as ratios of the rest values, and multiply the rest values by the plasticity ratios right before passing the data to the solver, and then reset the values to the previous rest position, so the other features can properly deal with the mesh.
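A minimal sketch of the ratio-based scheme for a single spring rest length, assuming a simple linear blend as the plasticity update. The function names are hypothetical, and rest angles would need separate handling (a rest angle of zero cannot be expressed as a ratio).

```python
def update_plastic_ratio(ratio, base_rest, current, plasticity):
    """Blend the effective rest length toward the current length.

    The result is stored as a ratio of the base rest length, so the base
    value itself is never overwritten.
    """
    effective_rest = base_rest * ratio
    new_rest = effective_rest + plasticity * (current - effective_rest)
    return new_rest / base_rest

def solver_rest_length(base_rest, ratio):
    """Rest length handed to the solver for one step."""
    return base_rest * ratio

# A spring with base rest length 2.0 is stretched to 3.0.
ratio = update_plastic_ratio(1.0, base_rest=2.0, current=3.0, plasticity=0.5)

# "Shrinking" or "Dynamic Mesh" later changes the base rest length to 1.0;
# the stored plastic deformation still applies on top of it.
rest_after_shrink = solver_rest_length(1.0, ratio)
```

With a plasticity of 0 the ratio never changes, and with a plasticity of 1 the effective rest length snaps to the current length, matching the 0-1 range described above.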

Collision Handling

The processing of collisions is divided into two steps, the first being the collision detection, where it is determined what portions of a mesh are colliding with others, and the second being the response to the collisions, where measures are taken to resolve the colliding state.

In this section I will mostly make no distinction between object collisions and cloth (self) collisions, except where specifically noted. Also note that what I will later refer to as "self collisions" are collisions within the same linked mesh portion, while collisions between separate, disconnected mesh portions are treated as separate object collisions.

Collision Detection

The relevant collision detection methods for cloth simulation can be divided into three distinct categories:

- Temporal collision detection

- Methods in this category are very robust for detecting collisions within one time-step, as they can detect collisions even when no geometry intersections occur. The drawback is that they have to compare the previous state with the current state of the simulated objects, so if a collision is not properly handled within a single time-step, these methods have no form of recovery, and the broken state becomes the new frame of reference for the next steps.

- Static intersection detection

- Methods in this category ignore the previous state, and instead check for mesh intersections after advancing the simulation one time-step without any influence of collisions. The advantage here is that even if collisions have been mishandled for several time-steps, these methods are able to self-correct the state of the meshes. The drawback is that if a mesh passes through another in a single time-step, and no intersections occur, the collision goes undetected. That might seem like an unlikely case, and it is when dealing with closed or convex meshes, but it is a real problem when dealing with planar sheets.

- Static proximity detection

- Methods in this category are similar to the category above. However, instead of checking for intersections, meshes are checked for proximity (these checks can be performed either on the previous mesh state, or after advancing the simulation as above). These methods try to find contacts before actual collisions occur, by checking polygons for proximity within a determined distance (usually defined as the cloth thickness). These are the least robust methods described here, but they are still useful for keeping meshes stable during a prolonged contact state.

I will not further describe the specific collision methods I am considering, as that would be too lengthy. So, moving on...

Rather than choosing a single collision detection method and using that exclusively within the cloth simulator, I have designed a heuristic that allows the selection of the most suitable method or combination of methods for different collision cases.

The heuristic relies on whether the meshes are closed (manifold) or open (non-manifold):

- closed-closed collision: Temporal collision methods are unnecessary (provided reasonable time-steps are used), so exclusive use of static collisions is suitable. Further, shortcuts can be taken, as only the boundaries of the collision can be seen (think two squishy balls colliding) and thus the vertices inside the contact surface don't have to be handled very carefully (unless either mesh is rendered with transparency, further notes on that below).

- closed-open collision: Temporal checks can again be skipped. However, vertices inside the contact surface can no longer be ignored, thus this type of collision (which is probably the most common), requires a bit of extra care in relation to the closed-closed case.

- open-open collision: This is where everything gets dangerous, as non-intersecting parallel planar collisions may occur, thus requiring the use of temporal methods, which does not however exclude the use of static methods as well. So this is the most time consuming case to evaluate.

- self collisions: Regardless of the manifoldness of the mesh, these collisions are very similar to the open-open case, but as it is very rare that non-intersecting collisions occur, it can be assumed otherwise, and the temporal methods can be skipped fairly safely (Note on corner-cases below).
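The four cases above can be written down as a small decision function. This is only a sketch: the `DetectionPlan` fields and the function name are hypothetical, "static" stands for whichever static intersection or proximity checks end up being used, and treating self collisions as needing careful interior handling is an assumption based on their similarity to the open-open case.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DetectionPlan:
    temporal: bool          # run temporal (previous vs. current state) checks
    static: bool            # run static checks on the advanced state
    careful_interior: bool  # handle vertices inside the contact surface carefully

def select_detection_plan(a_closed, b_closed, self_collision=False):
    """Pick detection steps from the manifoldness of the two colliding meshes."""
    if self_collision:
        # Similar to open-open, but temporal checks are skipped fairly safely.
        return DetectionPlan(temporal=False, static=True, careful_interior=True)
    if a_closed and b_closed:
        # Only the contact boundary is visible, so shortcuts can be taken.
        return DetectionPlan(temporal=False, static=True, careful_interior=False)
    if a_closed or b_closed:
        # Closed-open: no temporal checks, but interior vertices matter.
        return DetectionPlan(temporal=False, static=True, careful_interior=True)
    # Open-open: non-intersecting pass-through is possible, so use everything.
    return DetectionPlan(temporal=True, static=True, careful_interior=True)

body_vs_ball = select_detection_plan(True, True)
skirt_vs_body = select_detection_plan(False, True)
flag_vs_flag = select_detection_plan(False, False)
```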

While using a selective heuristic for collision methods will greatly improve collision performance and reliability, the heuristic is not perfect. It tries to be as safe as possible, so sometimes it will decide that certain detection steps should be taken even when it is safe to skip them, and on rare occasions it will wrongly skip steps that are only really necessary in corner-cases (such as the mentioned transparency issue, and non-intersecting self collisions).

To make the collision system as flexible and reliable as possible, I propose the addition of an "Advanced" section to the cloth UI, where users could override the decisions of the heuristic, and manually select the desired collision detection steps. This would enable advanced users to get the greatest performance possible, and to correct issues that may occur in certain corner-cases, while still providing a fast and reliable collision system to less knowledgeable users, in the form of the automated heuristic.

Collision Response

Several collision response methods are possible, depending on the method by which the collisions were detected, the integration algorithm being used, and the desired collision behavior (I'm still weighing options here, and have not fully decided which response model to use).

Plausible responses to collisions may be:

- Applying forces to/between the vertices, edges, or faces involved in the colliding state, to push them away from each other.

- Applying changes to the velocity of vertices, to make them conform to the colliding mesh's behavior.

- Directly altering the position of the affected vertices, to position them in a plausible location relative to the collision mesh.

Regardless of what response(s) to use, the force and velocity vectors generally have to be split into perpendicular and tangential components. How the components are determined, also depends on the detection method that found the collision, being most often determined by the normal directions of colliding geometry, or sometimes the vector between the "closest points on geometry", for the colliding portions.

After that, the tangential component is obviously used for applying friction forces (based on the tangential velocity and perpendicular forces). But equally important, and currently overlooked in Blender, is the response in the perpendicular component taking into account collision elasticity. That determines how "bouncy" the collision should be, and should be accessible to the user as a value in the 0-1 range, with 1 being a complete inversion of the perpendicular relative velocity vector (super bouncy), and 0 being complete absorption of the impact forces (no bounce at all, but rather immediate rest).
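As an illustration of the velocity-based option, the sketch below splits a relative velocity into perpendicular and tangential components, applies the 0-1 elasticity to the perpendicular part, and applies friction to the tangential part. The Coulomb-style friction rule (slowing the tangential motion in proportion to the perpendicular velocity change) is an assumption of this sketch, not a decided response model, and all names are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def collision_velocity_response(velocity, normal, elasticity, friction):
    """Return the post-collision relative velocity of a cloth vertex.

    velocity   -- velocity relative to the collider
    normal     -- unit contact normal pointing away from the collider
    elasticity -- 0 (no bounce) to 1 (full inversion of the normal velocity)
    friction   -- Coulomb-style coefficient (an assumption of this sketch)
    """
    vn = dot(velocity, normal)
    if vn >= 0.0:
        return tuple(velocity)  # already separating, nothing to resolve
    v_perp = tuple(vn * n for n in normal)
    v_tan = tuple(v - p for v, p in zip(velocity, v_perp))
    # Perpendicular part: reflect and scale by the elasticity.
    new_perp = tuple(-elasticity * p for p in v_perp)
    # Tangential part: slow down in proportion to the perpendicular change.
    tan_speed = math.sqrt(dot(v_tan, v_tan))
    impulse = -(1.0 + elasticity) * vn
    keep = max(0.0, 1.0 - friction * impulse / tan_speed) if tan_speed > 0.0 else 0.0
    return tuple(p + keep * t for p, t in zip(new_perp, v_tan))

incoming = (1.0, 0.0, -2.0)
up = (0.0, 0.0, 1.0)
half_bounce = collision_velocity_response(incoming, up, elasticity=0.5, friction=0.0)
dead_stop = collision_velocity_response(incoming, up, elasticity=0.0, friction=0.25)
```

With an elasticity of 1 the perpendicular velocity is fully inverted, and with 0 it is removed entirely, which matches the range proposed above.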

External Rest Position Object

Currently one can use the "Dynamic Mesh" feature to have an animated rest position for the cloth simulation. This feature reevaluates the rest position at every step in the simulation, copying the underlying mesh position at that frame. This works fine, as long as this feature is not used in conjunction with Pinning.

For instance, say you want to simulate a square grid lying on the XY plane, and you want to pin the opposing edges along the Y axis (edges parallel to the X axis). Now you want to use Dynamic Mesh to have your cloth gradually increase in length along the Y axis (while keeping the pinned edges stationary), so that it droops further, so you animate your mesh, scaling it along the Y axis. The issue is that you have arrived at a situation where your mesh is deforming like you want, but the pinned edges are also moving due to the scaling along the Y axis.

This issue happens because both the Dynamic Mesh and the Pinning are taking their positional data from the same place, and thus can't be animated separately. This could be solved by taking the rest position data from another object. The issue that arises from that, is that the two objects could diverge in topology, thus rendering the rest object useless. This could in turn be solved by only allowing the use of a linked copy as rest object, which would guarantee mesh consistency, but here yet another issue is encountered, which is the fact that Shape Key influence values are stored in mesh data, and thus can't be animated independently between linked copies. This would greatly reduce the usefulness of the Dynamic Mesh feature, by only enabling the use of modifier based deformations independently from the simulated mesh, while Shape Keys would still directly affect the simulated mesh, and not just the rest mesh.

Here we arrive at the conclusion that there are two options. We can allow the use of any object as rest object, do a simple vertex count check to ensure consistency, and accept that if the cloth mesh is edited, the user will have to update the rest mesh as well. Alternatively, the Shape Key influence values could be moved over to object data, which would enable the full use of Dynamic Mesh with a linked copy, which in turn would ensure consistency. However, moving Shape Key influence values to object data would severely break existing things relying on that feature, and may also introduce unexpected issues (DepsGraph?).

Related tools and workflow improvements

UI Redesign

Nothing much to say here, other than it is basically a matter of merging this (after some minor changes): T49145

Surface Deform Modifier

This Modifier will have a similar workflow to the Mesh Deform Modifier, in that you bind a high-poly production mesh to a low-poly proxy, with the intention of transferring the motion from the proxy to the production mesh. Where it differs from Mesh Deform is that it will transfer the surface motion, instead of binding the production mesh inside the volume of the proxy. This Modifier will thus be suitable for transferring cloth simulations from a specially modeled simulation mesh to a detailed garment mesh.

The bind will work by assigning vertices on the production mesh to faces on the proxy, measuring the projection distance along face normals from a vertex to its respective face, and then measuring the barycentric coordinates of the projected point on the face. Later, when applying the deformations, the process is reversed, the vertex is positioned at the barycentric point it was assigned on the face, and is then moved along the face normal by the projection distance measured at the bind (Note that normals can optionally be interpolated, for smoother deformations).
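The bind and deform steps for a single vertex and a single triangular proxy face can be sketched as below. This assumes the vertex-to-face assignment is already known, uses the flat face normal (no normal interpolation), and all function names are hypothetical.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit_normal(a, b, c):
    n = cross(sub(b, a), sub(c, a))
    length = math.sqrt(dot(n, n))
    return tuple(x / length for x in n)

def bind_vertex(p, a, b, c):
    """Return (barycentric weights, signed distance) of p relative to face (a, b, c)."""
    n = unit_normal(a, b, c)
    distance = dot(sub(p, a), n)
    q = tuple(pi - distance * ni for pi, ni in zip(p, n))  # projection onto the face plane
    v0, v1, v2 = sub(b, a), sub(c, a), sub(q, a)
    d00, d01, d11 = dot(v0, v0), dot(v0, v1), dot(v1, v1)
    d20, d21 = dot(v2, v0), dot(v2, v1)
    denom = d00 * d11 - d01 * d01
    wb = (d11 * d20 - d01 * d21) / denom
    wc = (d00 * d21 - d01 * d20) / denom
    return (1.0 - wb - wc, wb, wc), distance

def deform_vertex(binding, a, b, c):
    """Rebuild the vertex position from a binding and the deformed face."""
    (wa, wb, wc), distance = binding
    n = unit_normal(a, b, c)
    return tuple(wa * a[i] + wb * b[i] + wc * c[i] + distance * n[i] for i in range(3))

# Bind against a face in the XY plane, then rotate the face into the XZ plane.
binding = bind_vertex((0.25, 0.25, 0.5), (0, 0, 0), (1, 0, 0), (0, 1, 0))
moved = deform_vertex(binding, (0, 0, 0), (1, 0, 0), (0, 0, 1))
```

The vertex keeps its barycentric position and its offset from the surface, so it follows the face as it rotates.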

The main implementation is quite straightforward, but you may have noticed that I did not mention how it is determined which vertex is assigned to which face, because that is where the actual difficulty lies. An obvious choice would be to assign vertices by their proximity to the proxy faces. That would work well in many cases, but can fail with closely overlapping meshes (such as a buttoned shirt). The other option I came up with is to UV unwrap both meshes, arrange the UVs so that they fit nicely on top of each other, and then use the UVs to determine the vertex-face assignments. While this resolves any ambiguity that the proximity method generates, it adds an extra, time-consuming step for the user.

Of course it is completely feasible to have both methods of assignment available as an option in the modifier, in which case, the proximity method would be default, and the user could switch to the UV method if the bind is not satisfactory. (I have also thought of using user defined vertex groups to disambiguate overlapping meshes, but that might be a little sketchy...)

Weight Keys

This is a mixture of vertex groups/weights with the concept of shape keys. The idea here, is to be able to mix vertex weights by influence values. This can already be achieved with the "Vertex Weight" modifiers, but that is really not an optimal workflow when you want to mix many vertex groups. This would be implemented by keeping the existing vertex group system, but adding an "Influence" value to the vertex group data structure, and adding a "virtual" hard coded vertex group which represents the combination of all vertex groups based on each one's influence value.

This way, the normal vertex group system will continue working as usual, without being affected by the new influence values, but certain tools could query specifically for the combined group based on the influences. This would allow many new workflow possibilities, such as the easy animation of pinning vertex weights for cloth simulation.
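How the groups are mixed into the combined group is not specified above. The sketch below assumes an additive mix, in the spirit of shape keys, clamped to the 0-1 weight range. The data layout and names are hypothetical.

```python
def combined_weights(groups, influences, vertex_count):
    """Mix vertex groups into one "Combined" group.

    groups     -- {group name: {vertex index: weight}}
    influences -- {group name: influence value}
    The additive, clamped mix is an assumption of this sketch.
    """
    combined = [0.0] * vertex_count
    for name, weights in groups.items():
        influence = influences.get(name, 0.0)
        for vertex, weight in weights.items():
            combined[vertex] += influence * weight
    return [min(1.0, max(0.0, w)) for w in combined]

groups = {
    "pin_collar": {0: 1.0, 1: 0.5},
    "pin_hem": {1: 1.0, 2: 1.0},
}
# Animating the influences moves the pinning from the collar to the hem.
start = combined_weights(groups, {"pin_collar": 1.0, "pin_hem": 0.0}, 3)
middle = combined_weights(groups, {"pin_collar": 0.5, "pin_hem": 0.5}, 3)
end = combined_weights(groups, {"pin_collar": 0.0, "pin_hem": 1.0}, 3)
```

The individual groups are never modified, so tools that read them directly behave as before, while a tool such as cloth pinning could read the combined result instead.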

The Future

This is a proposal for some much-needed initial development in the cloth simulation area of Blender. Of course, there will still be quite some room for improvement afterwards. The most obvious things that come to mind when thinking about further improvements to the simulator are improvements to the implicit integrator code itself, and of course the much-wanted "tearing" feature. These improvements are not paramount for projects like Agent 327, so they are not included in this proposal and are not priorities, but given time, they can certainly be implemented.