User:Mx/GSoC2008Proposal

Google Summer of Code 2008: Freestyle integration

PROJECT PROPOSAL

Maxime Curioni

Synopsis

The goal of the project is to extend Blender's non-photorealistic rendering capabilities by integrating Freestyle, stylized line drawing software that originated in a research project. The integration will focus on adapting Freestyle's source code to Blender's architecture and rendering pipeline. One of the challenges of the project will be to make sure that new expressive styles can easily be created while keeping user interaction and parameter configuration suitable for artists' use.

Background

Traditional computer graphics rendering methods (local and global illumination) have attempted to create images that are physically realistic. Non-photorealistic rendering (NPR) is a fairly recent branch of computer graphics that aims to complement these methods, producing scenes in graphical forms and styles traditionally used by artists. By putting emphasis only on certain features, NPR allows for more telling representations of scenes and gives artists a greater sense of expressiveness and creative freedom.

Freestyle is an NPR program developed for a research project dedicated to the study of stylized line drawing rendering from 3D scenes. It is designed to be flexible enough to encompass a whole array of graphical styles, allowing maximum control over the rendered product. Technically, Freestyle uses a programmable paradigm inspired by shading languages: each artistic form is described by a style module, a series of instructions that transform 3D model features (silhouettes, key edges and intersections, curvature...) into stylized strokes. The underlying language used for style description is Python, which is already used in Blender for scripting.

Benefits to the Blender Community

Rudimentary NPR can be achieved in Blender either through an export to an external renderer or with the combination of edge rendering and toon shading. Even though artists have been able to push NPR effects to great lengths with custom material node setups, a more versatile and integrated approach would greatly enhance Blender's capabilities and would give Blender a competitive edge over other 3D packages. Moreover, many users have expressed in forums their desire to have a better NPR renderer and have shown particular interest in Freestyle.

It is important to note that Freestyle will not offer a complete NPR solution. It can only be considered a first step toward establishing a general procedural NPR framework, covering only stylized line drawing. A lot of insight will be gathered from this integration experience; that knowledge should prove useful when extending Blender further in the future. The implementation of this shader-based project is closely related to the Python node system currently available in the latest development version. So far, Python nodes have been used for shading; this project will push for their use in the compositor.

Deliverables

Blender will be extended with the following features:

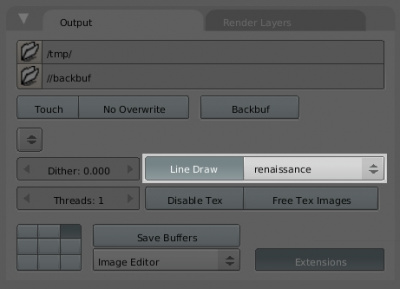

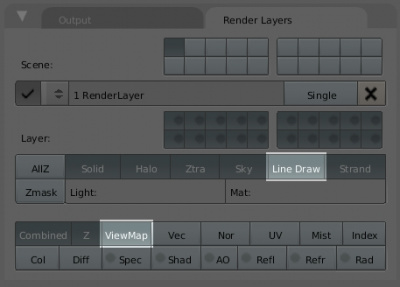

- A] line drawing render layer (merged with the current edge render layer)

- B] view map render pass

- C] view map graphical operation tool

Here are a few mock-ups of how things should look like:

Project Details

As a newcomer to Blender development, I will need to gain a comprehensive understanding of both Freestyle's and Blender's source code. To make that process easier, I intend to carry out the integration in small increments, focusing on only certain areas of the project at a time.

1) Freestyle as an external renderer

Freestyle will first need to be used as an external renderer, with almost no functional modification. Currently, Freestyle is run through a Qt GUI. It imports 3D models with the lib3ds library and displays its graphical output via the Qt widget libQGLViewer. Stroke rendering is made possible by standard OpenGL drawing routines.

Freestyle rendering will have to be made independent of the Qt interface. Components responsible for drawing will be isolated so that they can be called programmatically. Also, since Freestyle can already save its graphical output to disk, these saving functions will be used to create the output image, which will then be displayed in the traditional render window.

The geometric data input module will have to be adapted to support Blender's native geometric data format. Two solutions are possible: either use the internal 3ds exporter, or directly recode the area of the application related to loading 3D models. For convenience, I will start with the first solution and then move on to the second, which will be useful for the rest of the project.

In this phase, Freestyle will use an external Python interpreter, independent of the one used by Blender. The code for using Yafray as an external renderer will be studied in detail to get some ideas on how to go about the integration.

2) Render layer for NPR line drawing

Blender currently supports edge rendering as a separate render layer. One of the motivations behind the integration of Freestyle is replacing that layer with a more general and customizable NPR line drawing render layer. Unfortunately, Freestyle cannot totally replace the current edge renderer: the silhouette it calculates is smooth, while the one in the current edge renderer is polygonal. In order to maintain functionality across Blender versions and allow the artist to choose which algorithm to use, the new render layer will have to support both capabilities.

In this phase, the extended render layer will be created and Freestyle will be merged into Blender's rendering engine. Even though Freestyle's pipeline will still be used as it was originally coded, the main difference from the previous phase will be that the NPR image layer will be 'mergeable' with other render layers (in the previous phase, the NPR image could only be viewed on its own). Freestyle's internal rendering engine will be integrated with the current edge render layer code.
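To illustrate what 'mergeable' could mean at the pixel level, here is a minimal sketch that composites a line layer over another layer with the standard "over" operator on straight-alpha RGBA pixels. It is only an assumption about how the merge might behave: the names `over` and `merge_layers` are hypothetical, layers are plain nested lists, and nothing here reflects Blender's actual render layer or compositor code.

```python
def over(src, dst):
    """Composite one straight-alpha RGBA pixel over another ('over' operator)."""
    sr, sg, sb, sa = src
    dr, dg, db, da = dst
    out_a = sa + da * (1.0 - sa)
    if out_a == 0.0:
        # Both pixels fully transparent: nothing to blend.
        return (0.0, 0.0, 0.0, 0.0)

    def blend(s, d):
        return (s * sa + d * da * (1.0 - sa)) / out_a

    return (blend(sr, dr), blend(sg, dg), blend(sb, db), out_a)


def merge_layers(line_layer, base_layer):
    """Merge a (hypothetical) NPR line layer over a base layer of equal size."""
    return [
        [over(s, d) for s, d in zip(src_row, dst_row)]
        for src_row, dst_row in zip(line_layer, base_layer)
    ]
```

With this convention, a fully opaque black stroke pixel hides the base layer, while a fully transparent pixel leaves it untouched.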

The Python layer will be considerably modified. Freestyle's engine will now use the Python interpreter used by Blender. All former dependencies on SWIG will have to be resolved and replaced with bindings to Blender's environment. In addition, the graphical commands used to render strokes will have to be changed to the ones used by Blender.

3) Decomposition of Freestyle's algorithm into reusable functional modules

Freestyle runs in three stages:

- calculates the view map

- operates on the view map (selecting, ordering, chaining, splitting) and creates the strokes

- renders the strokes

It would be useful to abstract these stages into three independent modules, giving users more room for customization and allowing developers to extend the view map in the future (e.g. by adding new model feature information).
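The separation into three modules could look roughly like the sketch below. Everything in it is hypothetical: the function names (`compute_view_map`, `build_strokes`, `render_strokes`, `run_pipeline`) are made up for illustration, the "projection" simply drops the z coordinate, and "rendering" only produces text. It is meant to show the hand-off between stages, not Freestyle's real algorithms.

```python
def compute_view_map(edges_3d):
    """Stage 1 (toy): project 3D edges to 2D by dropping the z coordinate."""
    return [((a[0], a[1]), (b[0], b[1])) for a, b in edges_3d]


def build_strokes(view_map, keep):
    """Stage 2 (toy): select edges with a predicate, one stroke per edge."""
    return [[a, b] for a, b in view_map if keep((a, b))]


def render_strokes(strokes):
    """Stage 3 (toy): 'render' each stroke as a text description."""
    return [
        "stroke " + " -> ".join(f"({x:g}, {y:g})" for x, y in stroke)
        for stroke in strokes
    ]


def run_pipeline(edges_3d, keep):
    """Chain the three stages; each one could be swapped independently."""
    view_map = compute_view_map(edges_3d)
    strokes = build_strokes(view_map, keep)
    return render_strokes(strokes)
```

For example, `run_pipeline(edges, keep=lambda e: e[0] != e[1])` discards edges that collapse to a single point after projection. Because each stage only depends on the output of the previous one, the view map could be computed once and reused with different selection predicates.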

The first stage is responsible for producing the view map (the graph data structure that encodes the models' topological information in the projected view) as well as other information (depth buffer, line density maps...) calculated on the models. As with other calculations presently accessible through compositor nodes, Blender would benefit from having the view map directly accessible at the compositing stage: the user could play with the view map 'live' without having to re-render the scene. The view map would come from a new render pass. Some work would have to be done to reuse Blender's current render passes in Freestyle (the depth buffer being the best example) to avoid code duplication.
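As a rough mental model of the view map as a graph, consider the sketch below. The class and field names (`ViewEdge`, `ViewMap`, `nature`, `visible`) are assumptions chosen for illustration and are not taken from Freestyle's source; the real structure carries far more information.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ViewEdge:
    a: int                 # index of the first view vertex
    b: int                 # index of the second view vertex
    nature: str            # feature type, e.g. "silhouette" or "crease"
    visible: bool = True   # whether the edge is visible in the projected view


@dataclass
class ViewMap:
    vertices: List[Tuple[float, float]] = field(default_factory=list)
    edges: List[ViewEdge] = field(default_factory=list)

    def add_vertex(self, x, y):
        """Store a projected 2D point and return its index."""
        self.vertices.append((x, y))
        return len(self.vertices) - 1

    def add_edge(self, a, b, nature, visible=True):
        self.edges.append(ViewEdge(a, b, nature, visible))

    def neighbours(self, v):
        """Indices of view vertices connected to v by any edge."""
        out = []
        for e in self.edges:
            if e.a == v:
                out.append(e.b)
            elif e.b == v:
                out.append(e.a)
        return out

    def select(self, nature, visible_only=True):
        """Edges of a given nature, optionally restricted to visible ones."""
        return [
            e for e in self.edges
            if e.nature == nature and (e.visible or not visible_only)
        ]
```

Exposing such a structure through a render pass would let later stages query it (for instance `select("silhouette")`) without recomputing it from the scene.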

The second stage deals with operations on the view map and could be achieved with custom style definitions implemented as Python nodes in the compositor. A style definition describes how the view map information is translated graphically into strokes. The Freestyle authors have already defined a clear API for style definition. That API will be used as-is and will only be adapted if need be.
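The following sketch gives a feel for what a style definition does: select some edges, chain them into longer lines, then attach stroke attributes. It deliberately does not use Freestyle's style definition API; `chain_edges`, `simple_style` and the dictionary keys are hypothetical, and the greedy chaining is just one simple way to join edges that share endpoints.

```python
def chain_edges(edges):
    """Greedily join edges (pairs of points) that share endpoints into polylines."""
    remaining = list(edges)
    chains = []
    while remaining:
        a, b = remaining.pop(0)
        chain = [a, b]
        extended = True
        while extended:
            extended = False
            for i, (p, q) in enumerate(remaining):
                if p == chain[-1]:
                    chain.append(q)        # extend at the tail
                elif q == chain[-1]:
                    chain.append(p)
                elif q == chain[0]:
                    chain.insert(0, p)     # extend at the head
                elif p == chain[0]:
                    chain.insert(0, q)
                else:
                    continue
                del remaining[i]
                extended = True
                break
        chains.append(chain)
    return chains


def simple_style(edges, thickness=2.0):
    """Toy style: keep visible silhouettes, chain them, use a constant thickness."""
    selected = [
        (e["a"], e["b"])
        for e in edges
        if e["nature"] == "silhouette" and e["visible"]
    ]
    return [{"points": c, "thickness": thickness} for c in chain_edges(selected)]
```

A different style would change the selection predicate, the chaining rule or the attributes assigned to each stroke, which is why packaging each style as a node seems a natural fit.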

The third stage is in charge of rendering and would have already been dealt with in the previous phase.

Finally, the Freestyle authors have already developed many impressive artistic styles. It would be useful to migrate them to the new system and have them available by default: not only would users be able to select them as a style for a render layer, but they would also serve as a basis for experimenting with new styles.

4) Graphical tools to control view map calculation

One of the purposes of Freestyle is to programmatically automate the stylization of model features, collected in the view map. However, artists might want greater control over which parts of the model are included. The ability to interact graphically with the view map would be a nice addition to Freestyle.

For the duration of this project, the control will be limited to selecting which edges/vertices to include in or exclude from the view map. The selection will be made in edit mode, for lack of a better option. The edge/vertex selection process will reuse the code already developed in Blender for edit mode.

Blender currently allows vertex groups but lacks edge group support. An edge group data structure will therefore be incorporated into Blender to allow for the graphical edge selection. Like vertex groups, edge groups will directly be accessible and configurable from the 'Editing' section.
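One possible shape for an edge group structure is sketched below, by analogy with named vertex groups. This is an assumption for illustration only: `EdgeGroups` and `filter_edges` are hypothetical names, edges are identified by pairs of vertex indices, and Blender's actual mesh data structures are not modeled.

```python
class EdgeGroups:
    """Hypothetical named edge groups, by analogy with vertex groups."""

    def __init__(self):
        self._groups = {}

    @staticmethod
    def key(v1, v2):
        # An edge is undirected: store its vertex indices in sorted order.
        return (v1, v2) if v1 <= v2 else (v2, v1)

    def assign(self, name, v1, v2):
        """Add the edge (v1, v2) to the named group, creating it if needed."""
        self._groups.setdefault(name, set()).add(self.key(v1, v2))

    def remove(self, name, v1, v2):
        """Remove the edge from the named group if it is present."""
        self._groups.get(name, set()).discard(self.key(v1, v2))

    def contains(self, name, v1, v2):
        return self.key(v1, v2) in self._groups.get(name, set())


def filter_edges(edges, groups, exclude):
    """Drop edges in the excluded group before the view map is calculated."""
    return [e for e in edges if not groups.contains(exclude, *e)]
```

For instance, after `groups.assign("hidden", 3, 1)`, calling `filter_edges(mesh_edges, groups, "hidden")` skips that edge regardless of the order in which its vertices are listed.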

5) Extra documentation and debugging

The integration process will be reported through posts on the Blender wiki. Much effort will be put into documentation, with particular care taken to make the information available as early as possible. The style definition API will have to be clearly defined. A tutorial on the new render layer usage and style creation will have to be written by the end of the project. In the final days, the focus will be on getting user feedback and fixing potential bugs. Additional documentation could be produced at the mentor's request.

Project Schedule

First, regarding my availability for the project: my classes end on June 13, but I will only be completely free from school on June 27. Until then, I can commit to at least 20 hours of work per week. Having no internship planned for the summer, I can work on the project full-time from then on, until the end of August. The only exception will be during the SIGGRAPH conference, which I will be attending. I have tried to lay out a realistic timeline for the project, based on former coding (and debugging...) experiences.

The timeline of deliverable submission will follow the steps mentioned above in the project details:

| Step | Dates | Duration |
| --- | --- | --- |
| 0) In-depth study of Blender and Freestyle, communication with mentor | Apr 21 - May 25 | 5 weeks |
| 1) Freestyle as an external renderer | May 26 - Jun 8 | 2 weeks |
| 2) Render layer for NPR line drawing | Jun 9 - Jun 29 | 3 weeks |
| → deliverable: A] line drawing render layer | | |
| 3) Decomposition of Freestyle's algorithm into reusable functional modules | Jun 30 - Jul 20 | 3 weeks |
| → deliverable: B] view map render pass | | |
| 4) Graphical tools to control view map calculation | Jul 21 - Aug 9 | 3 weeks |
| → deliverable: C] view map graphical operation tool | | |
| 5) Extra documentation and debugging | Aug 19 - Sep 1 | 2 weeks |

Bio

I am a 24-year-old Master's student studying computer graphics at Tsinghua University, in Beijing, China. Before that, I completed my engineering diploma at the Ecole Nationale Supérieure des Télécommunications in Paris, France. I am interested in many fields of computer science, particularly all areas related to visual computing (computer graphics, image processing, computer vision, computational geometry...). My Master's thesis will focus on real-time rendering techniques.

I have done three major computer graphics projects for school, two in OpenGL (implementing Catmull-Clark and Doo-Sabin subdivision schemes, recalibrating 3D meshes with their corresponding texture maps) and another based on PBRT (implementing Henrik Wann Jensen's hierarchical BSSRDF evaluation). I have also done some web programming work in the past three years, mainly in PHP and Ruby on Rails. I am confident I have sufficient knowledge and experience in C++ and Python to carry out the project.

I am a newcomer to the Blender community, both as a user and as a developer. Last summer, I read the "Essential Blender" book and studied many video tutorials from Blender tutorial sites. I intend to get up to speed with the source code in the next two months. The technical challenges ahead, the participation in an open source project and the level of expectation from the Blender community are all important motivating factors.