User:Nazg-gul/DependencyGraph

Dependency Graph

Note

The proposed solutions seem to work "on paper" but have not been checked in real life yet, hence some fluctuations in the planning and features are possible. Don't get too overexcited.

Abstract

This page is related to the dependency graph upgrade project. It covers an introduction to previous work done in this area by Joshua Leung and Lukas Toenne, giving some technical information from their proposal. Mainly, though, this page is about how we move forward with this project from now on.

Introduction

A number of research and design proposals were made in this area; for example, there are proposals from Ton Roosendaal, Brecht van Lommel and Joshua Leung. They look at the issue from different points of view, but all of them agree that the new dependency graph should:

- Be capable of representing all ID data types (in one way or another), making sure it's not only working for objects but also for materials, images etc.

- Be capable of representing more granular dependencies, such as relations between two bones of the same rig.

- Be threadable (for evaluation) by its nature.

Some of the current dependency graph limitations are clear from the list above, but here is a fuller list of the issues in the current dependency graph which should be studied in order to create an ultimate design of the dependency graph:

- Current dependency graph only represents object ID datablocks.

- Real-life example: Driving one bone with another one from the same rig doesn't work.

- It is kind of threadable now, apart from some special cases such as cyclic dependencies and metaballs (which still require a single-threaded pass).

- Behaves in a really dodgy way with physics caches.

- Real-life example: Cache invalidation is totally out of user control, same applies to the automatic caching. Most of the time during animation playback one just ends up manually invalidating the physics cache.

- Physics simulation doesn't fit at all into the dependency graph currently.

- Real-life example: Force fields are rather just a huge bodge on top of a slightly smaller bodge, not friendly to threading, reliable caching and so on.

- Instancing is also really dodgy.

- Real-life example: It is currently a mix of a dependency-graph-driven approach with ad-hoc usage. For example, objects needed for the duplication are updated in the main scene update function, and when one needs this dupli-list, parts of the original DNA might need to be overwritten.

- Another real-life example: all the instances share the same time, meaning the old neat feature called "local time offset" is not possible by design.

- Proxies are even more of a bodge than instancing.

- Real-life example: Only a single instance of a proxy-fied character is allowed, plus only armatures could be proxy-fied at this moment (no materials overwrite and other neat features).

- DNA is the storage so far, and what's even worse some runtime stuff is stored in DNA.

- Real-life example: Particles are rather pure horror, mainly because of all that psys_render_{set, restore}.

There are likely many more issues with the current dependency graph; those are just the main ones which are becoming really annoying now.

Current state

Most of the issues listed above were already tackled, and an attempt was made to put them into code, during the GSoC 2013 project by Joshua Leung. That was quite a nice starting point, taking into account all the research made by Joshua. However, that code stayed decoupled from the Blender sources for quite a long time.

Later last year Lukas Toenne put the code from Joshua into Blender itself in the depsgraph_refactor branch. He did quite a nice job of making the code more readable (mainly the approach was to use Cycles-like C++ usage where you only use a minimal required subset of C++ features, avoiding void* magic and simulating object inheritance in C).

The code became a bit more maintainable and understandable after that, but it still didn't do anything useful (no actual node callback functions and so on).

Design overview

In this section the design is overviewed only briefly, outlining the major aspects of how all the things come together. The design with all the technical details can be found at the end of this page.

In order to support all the granularities we want, the depsgraph itself has to change compared to its current design.

Nodes

Basic Overview

Nodes in the dependency graph become "operation nodes" rather than "data nodes". This means a node encloses some context (at least it seems we need the context; maybe in the final implementation it'll be done a bit differently) and a callback which makes sure the corresponding entity (object, mesh, bone etc.) is up to date. This way the whole update process becomes more or less a black box for the dependency graph (this is covered in more detail later).
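To make the "context plus callback" idea a bit more concrete, here is a minimal Python sketch. It is only an illustration and not code from the branch: the `OperationNode` class, its fields and the `update_object_transform` callback are hypothetical names, and the "evaluation" is a toy stand-in for real object update code.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class OperationNode:
    """Hypothetical operation node: a name, some context and a callback."""
    name: str
    context: Dict[str, Any]
    callback: Callable[[Dict[str, Any]], None]
    depends_on: List["OperationNode"] = field(default_factory=list)

    def evaluate(self) -> None:
        # The graph never looks inside the callback: it is a black box.
        self.callback(self.context)


def update_object_transform(context: Dict[str, Any]) -> None:
    # Toy stand-in for real evaluation: offset the location by the parent.
    obj = context["object"]
    obj["world_location"] = tuple(
        p + o for p, o in zip(obj["parent_location"], obj["location"])
    )


cube = {"location": (1.0, 0.0, 0.0), "parent_location": (0.0, 2.0, 0.0)}
node = OperationNode("Cube.transform", {"object": cube}, update_object_transform)
node.evaluate()
```

The point of the sketch is that whoever evaluates the node only calls `evaluate()` and never needs to know what kind of entity is being updated.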

Node types

A number of different node types is needed to represent the scene as a nice granular graph. In the original design documentation from Joshua there were two sets of nodes:

- Outer nodes, which represent the scene on a broad scale. Basically, they correspond to an ID block.

- The use of such nodes mainly comes down to different queries (when you need to know what a datablock depends on, or which datablocks depend on a given node). Such nodes also help with update flushing, making it easier to tag all operation nodes for evaluation when the datablock changes.

- Inner nodes, which represent the relations between entities on a fine level. These nodes define the steps, and their order, which need to be performed when something changes in the scene.

The original proposal also covers several types of outer nodes, namely:

- ID nodes, which simply correspond to an ID block from the scene. Just to stress this explicitly: those nodes correspond to any ID block, not just Object or Object Data.

- ID groups, which are clusters of ID datablocks, in order to address cases where there are cyclic dependencies between datablocks.

- This type seems a bit of a mystery. It is not certain the final implementation would have them, and the initial implementation would most likely not be aware of such node types.

- Non-ID blocks, corresponding to steps which need to be performed before actual datablocks can be evaluated.

- An example here could be the rigid body simulation step.

- Subgraph, which obviously corresponds to another dependency graph.

- This type doesn't seem to be in the original proposal from Joshua, but it's somewhat crucial to have for dupligroups.

Inner nodes are basically a representation of different parts of the outer node; in other words, inner nodes represent different components of the corresponding datablock. Inner nodes hold a callback which is responsible for making sure the corresponding component is up to date.

Examples of inner nodes (from the ultimate granularity point of view):

- Object transform. This node is responsible for getting the object matrix (solving parenting, constraints).

- NOTE: we actually might want to decouple constraints evaluation into separate steps as well, but it is not really clear whether that is useful at this moment.

- Geometry. This node corresponds to the actual geometry evaluation (for meshes it is, roughly speaking, building the DerivedMesh).

- NOTE: In the future we might want to have multiple geometry nodes per object, making it possible to evaluate individual modifiers one at a time. This is somewhat crucial for a nodified modifier stack.

- Animation. This node corresponds to animation system update (f-curve evaluation, driver evaluation etc).

- There will likely be multiple animation nodes per datablock. This is because f-curves are usually expected to be applied to the original object, but some drivers (especially bone drivers) are expected to be applied after the corresponding data is evaluated (so it's possible to drive something with a final bone location).
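The outer/inner split described above can be sketched as a small data structure. This is an assumption-laden illustration only: `IDNode`, `InnerNode`, `add_operation` and `tag_update` are hypothetical names, and the component names simply mirror the examples from the list.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class InnerNode:
    """Hypothetical inner (operation) node belonging to one component."""
    name: str
    needs_update: bool = False


@dataclass
class IDNode:
    """Hypothetical outer node: one per ID block, grouping inner nodes."""
    id_name: str
    components: Dict[str, List[InnerNode]] = field(default_factory=dict)

    def add_operation(self, component: str, operation: str) -> InnerNode:
        node = InnerNode(f"{self.id_name}.{component}.{operation}")
        self.components.setdefault(component, []).append(node)
        return node

    def tag_update(self) -> None:
        # Update flushing helper: tagging the datablock tags every
        # operation node which belongs to it.
        for operations in self.components.values():
            for node in operations:
                node.needs_update = True

    def tagged(self) -> List[str]:
        return [
            node.name
            for operations in self.components.values()
            for node in operations
            if node.needs_update
        ]


ob = IDNode("OBCube")
ob.add_operation("animation", "fcurves")
ob.add_operation("transform", "object_matrix")
ob.add_operation("geometry", "derived_mesh")
ob.tag_update()
```

Queries such as "what does this datablock depend on" would work on the outer nodes, while the evaluation itself only ever touches the inner nodes.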

Storage

A crucial thing to solve is where we store the data needed for evaluation.

At the early stage of work we can stick to the old habit of relying on DNA to store all the data. This would allow more granular evaluation at earlier project stages, without rewriting half of the existing Blender code.

That assumption stays valid until we want to split modifiers/constraints into separate nodes. Once those things are split we'll need to store the intermediate matrix/DerivedMesh somewhere.

The easiest way to do this seems to be to allow a dependency graph node to have storage which is used by the evaluation callback.

NOTE: In the future node storage might be replaced with something more global-ish, copy-on-write aware centralized storage used by all contexts (different windows, render/viewport etc).

Instancing

Instancing is always a huge PITA, mainly because, as mentioned above, some of it is part of the dependency graph and some of it is evaluated on demand.

Duplivert, Dupliface

It is not that much of an issue for dupli-{vert/face}, for which recent changes to the duplication code helped a lot already (by not overwriting actual DNA values). For these duplications the work is now mainly about making it so Blender Internal (and maybe Cycles?) doesn't need the actual DNA to be overwritten in order to work.

Dupliframe

Dupliframes don't look like much of an issue either. They basically require the same changes to the Blender Internal API, to avoid the need to copy the object matrix of a duplicate to the original DNA.

Also, because duplilist creation happens after the scene is ensured to be up to date, evaluating the object matrix for each point in time also seems rather legitimate from the threading/storage point of view.

Dupligroup

Dupligroups are what require more attention.

First of all, currently objects in a group are sorted in the order they need to be evaluated. This worked fine until the threaded dependency graph update happened. Now the same threaded update needs to be done for objects in a group.

The most straightforward way to do this is to have a scene (sub)graph stored in the group which would define how to update the group. This fits nicely with subgraph dependency graph nodes: a dupligroup becomes a subgraph node in the scene dependency graph.

Until local time offset is brought back, having such a node is sufficient. But in order to support local time offset (and maybe some more features?) we'll need storage which contains the state of the objects of a given dupligroup at a given time. The same dependency graph node storage could be used for this, but it doesn't feel like that is what would be implemented in the final code. Some more details could be found in the Copy-on-Write section.

Proxies

Proxies are the least developed aspect of this project.

It currently seems to be more a pipeline/linking issue than a dependency graph issue. From the dependency graph point of view proxy is just a transparent copy of an "actual" depsgraph node, with own storage. Depending on what exactly we'll want to use proxies for, it might be needed to have a dedicated outer node type for proxies.

Most of the complexity currently seems to be in the synchronization of the proxy with the linked datablock (to reflect changes in the rig, or material, or whatever else). It's not rocket science; it just needs to be done carefully, making sure all parts of Blender are updated accordingly.

Most of the confusion in this topic might come from the idea of being able to "proxy" a linked material and do tweaks needed for a particular shot. The thing here is, it's not an actual proxy, it's more like an "override". Making a proxy is more or less creating a new entity, which isn't that useful in a context of given example -- there would need to be a way to use material proxy instead of original material in a linked object, which isn't so easy as well.

So for this we'll need some override techniques, which could be done with Python, ID properties or something else.

Local graphs

The idea of local graphs is to separate object state for the viewport and the renderer in order to solve threading conflicts between them. The same local graphs approach might be used to support local time offset for dupligroups, different times in different windows and so on.

The simplest way to achieve this is to duplicate all the storage and graphs and store them per window/per render engine. Such an approach solves the threading conflicts, but it also introduces a rather huge memory overhead.

A better approach here would be to take advantage of having EvaluationContext, which currently only defines what the graph is being evaluated for (viewport/final render/preview render), and have some storage in the context.

The proposed approach here is called Copy-on-Write: datablocks are re-used as long as they are only read, and a datablock is duplicated when a write happens. Some extra compression might be applied here, for example RLE or copying only the data which changed.
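A rough Python sketch of the copy-on-write idea follows. It only shows the "share on read, duplicate on first write" behaviour; the `CowStore` class and the dictionary-based datablocks are hypothetical, and nothing here reflects how the storage would actually be laid out or compressed.

```python
import copy


class CowStore:
    """Hypothetical per-context view over datablocks shared by all contexts."""

    def __init__(self, shared):
        self._shared = shared  # datablocks shared between contexts
        self._local = {}       # private copies, created on first write

    def read(self, name):
        # Reads use the private copy if there is one, else the shared data.
        return self._local.get(name, self._shared[name])

    def write(self, name, key, value):
        # The first write duplicates the datablock for this context only.
        if name not in self._local:
            self._local[name] = copy.deepcopy(self._shared[name])
        self._local[name][key] = value


shared = {"Cube": {"frame": 1}, "Lamp": {"energy": 1.0}}
viewport = CowStore(shared)
render = CowStore(shared)

# The renderer evaluates the cube at another frame; the viewport is unaffected.
render.write("Cube", "frame", 25)
```

In this sketch the lamp is never written to, so both contexts keep using the very same object and no memory is spent on a copy.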

Fuller proposal could be found there

Animation System

Animation system should fit nicely into the proposed dependency graph with the idea that it'll have dedicated operation nodes. This way order of f-curves/drivers evaluation is nicely declared by the dependency graph itself.

The only major thing to solve here is the order in which driver evaluation and object update are to be performed.

Current code around this is rather a huge mess, allowing drivers to be evaluated from all over the place, leading to some nasty issues.

Currently it seems the proper way to go would be:

- F-Curves are evaluated before the object update, altering original object properties (original location, rotation etc).

- Drivers are to be evaluated after the object update, using the final transform as an input, likely applying their output to the final transform as well.

- This might be incorrect in contexts outside of armature bones, but at least there should be a way to do driver updates in that order.

Node groups animation

We currently have issues with the Cycles rendered preview, which ignores changes of animated properties inside node groups. This is because Cycles updates only data which actually changed, and for node groups it's not possible to detect which node group affects which shaders.

A solution here is also possible by putting shader (and possibly material) nodes into the dependency graph, so when a group changes, the update flushes automatically to all shaders which use that group.

Updating

As was mentioned above, nodes become a black box for the dependency graph. This makes it really easy to schedule nodes for updates and handle them from a centralized place.

Basically the updating will happen in the same way as it's currently done with threaded dependency graph traversal:

- A centralized task scheduler is used to keep track of nodes to be updated.

- The centralized nature of the scheduler helps a lot with balancing the work between threads, by avoiding spawning more threads than CPU cores and so on.

- Just to mention, the idea is basically to use the centralized scheduler for most of the object update as a replacement for OpenMP threads.

- A node gets scheduled once all data it depends on is up to date.

- Roughly speaking, a node is scheduled when all the nodes it depends on have been traversed.

- To be clear, scheduling basically means putting the node into the queue to be evaluated; the actual node update could happen later, once all previously scheduled nodes are handled.

- When a scheduler worker thread handles the node it just calls the callback stored in the node.

- This way the node really is a black box, as opposed to the current state where the worker thread checks the type of the node and so on.
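The scheduling rule from the list above (a node is queued once everything it depends on has been handled) can be sketched in a few lines of Python. This is a single-threaded illustration under stated assumptions: `evaluate_graph` and the node names are hypothetical, and a real scheduler would hand queued nodes to a pool of worker threads instead of running them in a loop.

```python
from collections import deque


def evaluate_graph(callbacks, relations):
    """Evaluate nodes in dependency order.

    callbacks: node name -> callable without arguments
    relations: (a, b) pairs, meaning b depends on a
    """
    pending = {name: 0 for name in callbacks}    # unhandled dependencies
    children = {name: [] for name in callbacks}  # nodes which wait for us
    for a, b in relations:
        pending[b] += 1
        children[a].append(b)

    # Nodes without dependencies can be scheduled right away.
    queue = deque(name for name, count in pending.items() if count == 0)
    order = []
    while queue:
        name = queue.popleft()
        callbacks[name]()  # the worker only calls the stored callback
        order.append(name)
        for child in children[name]:
            pending[child] -= 1
            if pending[child] == 0:
                queue.append(child)  # all dependencies are up to date
    return order


log = []
names = ["animation", "transform", "geometry", "child_transform"]
callbacks = {n: (lambda n=n: log.append(n)) for n in names}
relations = [
    ("animation", "transform"),
    ("transform", "geometry"),
    ("transform", "child_transform"),
]
order = evaluate_graph(callbacks, relations)
```

In this example `geometry` and `child_transform` land in the queue at the same moment, which is exactly the situation where several worker threads could pick them up in parallel.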

Layers Visibility

It is really important to keep the dependency graph aware of layer visibility, so that it is possible to hide layers with heavy objects in order to increase the interactivity of the interface.

Currently it's done in quite a nasty way:

- When a layer becomes visible for the first time, the objects on it are forced to be recalculated.

- When an object is on an invisible layer and is tagged for update, its derived mesh simply gets freed. No re-tag would happen in the future, so it'll be up to the viewport thread to create the derived mesh for that object.

- A driven armature on an invisible layer wouldn't get a proper update when becoming visible.

- There are likely more and more cases where the current behavior will fail.

From loads and loads of thinking here it seems the only reliable way to solve this would be to decouple "ID is up to date" flag from "this ID needs evaluation at current depsgraph evaluation". So generally speaking depsgraph should be aware of what data is out of date and what data is needed for the currently visible scenes/layers.

This doesn't seem extremely difficult, and the proposal here is:

- Tag IDs when they are up to date and let the depsgraph clear outdated data (as per Brecht's proposal).

- Store layer visibility in the depsgraph nodes (not sure it is currently stored in the new depsgraph, but it is definitely stored in the old one).

- When adding a relation from A to B (B depends on A), the visibility of node B is copied to node A.

- The main idea here is to make invisible dependencies of something visible appear visible from the depsgraph point of view.

- By the looks of it, this would need to be a recursive visibility flush.

- When doing dependency graph evaluation we execute callbacks from nodes which correspond to IDs which are not tagged as up to date and which are currently visible.
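The visibility flush from the proposal above could look roughly like the following Python sketch. Layers are modelled as bitmasks, and `flush_visibility` and `nodes_to_evaluate` are hypothetical helper names; the sketch assumes every node mentioned in a relation also has an entry in the layers mapping.

```python
def flush_visibility(layers, relations):
    """Copy layer bits from each node to everything it depends on.

    layers: node name -> layer bitmask
    relations: (a, b) pairs, meaning b depends on a
    """
    dependencies = {}
    for a, b in relations:
        dependencies.setdefault(b, []).append(a)

    flushed = dict(layers)
    stack = list(layers)
    while stack:
        node = stack.pop()
        for dep in dependencies.get(node, []):
            merged = flushed[dep] | flushed[node]
            if merged != flushed[dep]:
                flushed[dep] = merged
                stack.append(dep)  # keep flushing down the chain
    return flushed


def nodes_to_evaluate(flushed, up_to_date, visible_layers):
    # Evaluate only nodes which are outdated and visible after the flush.
    return [
        name
        for name, mask in flushed.items()
        if name not in up_to_date and mask & visible_layers
    ]


# The character is on layer 1, its rig on layer 2, a helper empty on layer 3.
layers = {"rig": 0b010, "empty": 0b100, "character": 0b001}
relations = [("empty", "rig"), ("rig", "character")]
flushed = flush_visibility(layers, relations)
todo = nodes_to_evaluate(flushed, up_to_date={"character"}, visible_layers=0b001)
```

With only layer 1 visible, the rig and the empty still get evaluated because the visible character depends on them, which is the behaviour the proposal is after.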

Now, we want to avoid depsgraph traversal when nothing changed. Currently it's done by checking if some datablock was tagged for update. Quite the same idea would work with the new approach as well:

- Changing scene visibility would tag the depsgraph as "visibility changed". Depsgraph traversal happens in that case (in scene_update_tagged) and the "visibility changed" flag is then cleared from the depsgraph.

- Tagging ID for update (well, clearing flag that it's up-to-date) would also set a flag in the depsgraph that traversal is needed.

- If no visibility changes or object update tagging happened no depsgraph traversal happens.

It is surely not totally correct to think in terms of objects when updates are granular. But it seems that for granularity we'll just need to split the "object is up to date" flag into per-component up-to-date flags.

Render Engine Integration

It is important to integrate dependency graph with the render engines. Mainly for:

- Letting the render engine know that something changed in the scene so it can update its database.

- It is to be stressed here that the render engine should be able to receive "partial" updates, so that, for example, moving the camera in the viewport does not trigger a BVH rebuild. Ideally the render engine should know what type of ID and what exact ID changed.

- Also, ideally, the render engine should be able to traverse the scene graph in dependency order, the same way the scene update routines do. This is to support threaded operations on scenes in a clean fashion. In terms of Cycles it would mean, for example, that getting derivedFinal for objects would happen from multiple threads.

Now let's look into these two aspects in detail.

Notifications Mechanism

Let's start by listing all the requirements:

- Render engine should be able to know that something changed in the scene.

- Example: Blender Internal does not support partial BVH updates, so when any of the objects changes it needs to recalc full BVH. It doesn't make sense for BI to know what exact object changed.

- Render engine should be able to know what exact ID changed.

- Example: This is how Cycles rendered viewport currently works -- it only updates datablocks which are marked as changed.

Current implementation (with bpy.data.objects.is_updated and bpy.data.objects['Foo'].is_updated) works pretty well. Not currently convinced this is to be changed.

The only issue with the current approach is that tagging something as updated is "optimized" for Cycles. This means that when changing something in the interface (like material settings), only sliders which affect the Cycles (well, and BI as well) render will tag something for update. If Cycles doesn't really care about that value then nothing is tagged, so no extra refresh happens.

To address all these things, here's a proposal:

- We keep python API the same as it is in current master branch.

- This way we don't break any existing addons, renderers etc.

- It is pretty simple to re-implement in new depsgraph branch: depsgraph already keeps track of nodes which are directly tagged for update.

- This means it's already simple to support things like bpy.data.objects.is_updated. Supporting queries to particular ID would need to be tweaked a bit, so instead of direct access to ID->flags it'll check whether the ID is in the "directly tagged for update" list of current depsgraph.

- Well, actually. That's a bit more tricky, because that check needs to be done after flush, but it's more or less just implementation detail which seems to be easy to address.

- We add proper dependencies between node trees, so it'll be possible to distinguish which exact materials/objects are to be updated when changing something in the node group.

- We would probably want to support some query API, so the render engine would be able to quickly check whether the thing which changed actually affects anything that is required for rendering.

- This is kind of a more proper approach than the "subscribing to change notifications" idea briefly discussed on IRC. The thing here is, the render engine is interested in all the changes which affect the render result, and it's not possible to exclude some notifications in advance. It's more about helping the render engine to see whether changes actually affect anything or not.

- So, for example, changes to a node group which is used by several materials do trigger a re-render, but if an artist changes values in a node group which is never used (or is used only by invisible objects) then no re-render happens.
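The kind of query mentioned in the last points could be as simple as walking the "used by" relations from the changed datablock. The Python sketch below is purely illustrative: `affects_render`, the `users` mapping and the datablock names are made up for the example and are not an existing or planned API.

```python
def affects_render(changed, users, visible_objects):
    """Return True if a change reaches at least one visible object.

    changed: name of the datablock which was modified
    users: datablock name -> names of datablocks which use it directly
    visible_objects: set of object names which are currently rendered
    """
    seen = set()
    stack = [changed]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        if current in visible_objects:
            return True
        stack.extend(users.get(current, []))
    return False


users = {
    "NGGlow": ["MAWall", "MAFloor"],  # node group used by two materials
    "MAWall": ["OBHouse"],
    "MAFloor": ["OBHidden"],
    "NGUnused": [],                   # node group nobody uses
}
visible = {"OBHouse"}
```

Here a change to `NGGlow` would trigger a re-render because it reaches the visible `OBHouse`, while changes to `NGUnused` or `MAFloor` would not.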

All of that seems quite simple to implement, doesn't break compatibility, and addresses all the needs we have. At least in theory.

Scene Traversal

This is the kind of thing which would be really useful, but which is not essential before the initial merge.

Anyway, the basic idea here is to make it possible for a render engine to traverse the scene from multiple threads and request the derivedRender of the objects without causing any threading conflicts.

It is kind of possible already if all the logic is re-implemented on the render engine side, but ideally it should be something easier.

The main stopper here is the duplilists, handling of which via the Python API (including C++ RNA) is just unsafe from a threading point of view.

Unfortunately, I don't have a proposal here that I'm happy with for now.

Baby steps

The dependency graph consists of multiple topics and touches almost every part of the Blender code, so it's not simple to oversee all the things. The project is therefore to be broken down into more manageable parts.

This doesn't mean previous work is to be thrown away; quite the opposite: use the current state of the work as a milestone, but for actual integration into the master branch break the work into smaller steps.

This means the design of the dependency graph stays the same because it could totally represent current non-granular depsgraph. And the idea here is simple:

- First we finish the new depsgraph system code, making sure it is all nicely maintainable.

- We wouldn't have user-visible benefits from that; we'll just be sure the dependency graph is built correctly, the node callbacks approach works and so on.

- Then we go granular in the areas where it's needed first.

- For the Gooseberry project that will be tasks related to rigging/character animation.

- Go granular in all the rest of the areas (modifiers, etc).

- We'll also put non-object ID blocks into the dependency graph as needed.

Progress

Here is the break down of the project, and progress of each of the steps. Breakdown might change during the project.

- Milestone 1

- done: Making sure the branch compiles, runs nicely with the old dependency graph as main evaluation thing, have a way to use the new depsgraph.

- in progress (50%): Cleanup and simplify code as much as possible, make it easy to maintain.

- in progress (15%): Change the existing new dependency graph builders to represent current granularity of the dependency graph.

- in progress (17%): Make sure current relations represented in a new dependency graph (with all the callback approach) works smoothly.

- to do: Solve animation system issues (order of drivers/f-curves evaluation)

- in progress (5%): Start going granular, making first goal is to be able to drive one bone with another one in the same armature

- to do: Glue together with Lukas work of Alembic, Particles etc, make sure cache really works with the new dependency graph.

- Milestone 2

- to do: Continue going granular, solving issues with constraints+drivers interleaved with armature bones

- to do: Wrapping up granularity(?) solving possible fake cyclic dependencies between object components.

Implementation

This section aims to describe the implementation of nodes and relations and how it all comes together. It is mainly aimed at developers, but technical users might also be interested in it.

Note

This section is under heavy development at this moment; it'll get filled in in parallel with actual work in the dependency graph branch.

Issues

- Need to ensure depsgraph deletion doesn't access freed data

Armatures

At this moment armatures are the only entity in the new dependency graph which has more or less complete granular updates.

This section is about components, nodes and relations involved in armature evaluation.

Components

There are a number of components involved in armature evaluation.

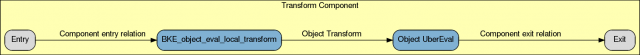

Transform component

The transform component is responsible for getting the armature object matrix, by constructing it from object location, rotation and scale and applying a possible constraint stack on them. This component may also depend on the animation component, which happens when properties needed to construct the object world matrix are animated. In this section we'll leave the animation component aside.

There's an example of the transform component for an armature object in the picture above. Currently all the update happens in the so-called "uber update" node, which is an equivalent of the object_handle_update() function called for an object with the OB_UPDATE_OB flag. All the other nodes in there are currently dummy nodes, only needed to keep track of dependencies.

The main reason why granularity doesn't happen here yet is that there needs to be a way to store and pass intermediate object matrices. Then, for example, it would be possible to update the constraints stack result without re-evaluating animation and object local transform.

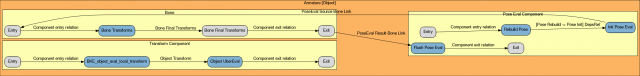

Pose component

The pose component is responsible for making sure the armature pose is totally up to date and ready to be used by others. Roughly speaking, it's responsible for delivering the same result as legacy object_handle_update() would if an object is tagged with OB_RECALC_DATA.

There's an overview of the pose component in the picture above. To be noted here: this node represents an armature without bones, so the scheme is not terribly complicated. Bones will be covered in the next section.

This is the component which users like the Armature Modifier (or other things which need to know the final pose of the whole armature) should depend on.

All the operations in this component are quite straightforward and all of them have a proper callback in the new dependency graph branch:

- Rebuild Pose operation is responsible for making sure the object's pose is up to date. Internally the callback uses BKE_pose_rebuild() if there's no object pose yet or if it's tagged for recalc.

- Init Pose Eval operation prepares the whole armature for evaluation. That is, it clears runtime evaluation pose channel flags (e.g. POSE_DONE) and initializes IK solvers.

- Flush Pose Eval operation finishes evaluation and flushes all the changes which are not needed for pose evaluation but might be needed by other users.

- That is, this operation releases IK solvers and calculates the pose channels' chan_mat matrices.

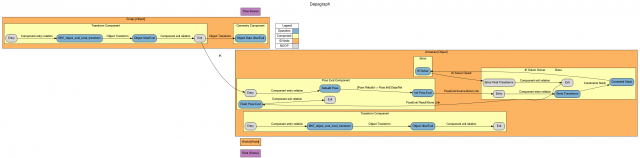

All the bone evaluation happens in between the Init and Flush operations. The order here is controlled by the dependencies of the root bones on the Init operation and of the Flush operation on the tail bones. This is illustrated a bit on the diagram below.

This diagram contains Bone Component which will be explained later.
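How the Init and Flush operations bracket the bone evaluation can be sketched by generating the relations for a small armature. This is a simplified illustration which ignores IK solvers entirely; `build_pose_relations` is a hypothetical helper and each bone is treated as a single node.

```python
def build_pose_relations(parents):
    """Build (a, b) relations, meaning b depends on a.

    parents: bone name -> parent bone name, or None for root bones
    """
    has_children = {p for p in parents.values() if p is not None}
    relations = []
    for bone, parent in parents.items():
        if parent is None:
            # Root bones wait for the preparation step.
            relations.append(("Init Pose Eval", bone))
        else:
            # Child bones wait for their parent.
            relations.append((parent, bone))
        if bone not in has_children:
            # The flush waits for every tail bone.
            relations.append((bone, "Flush Pose Eval"))
    return relations


parents = {"spine": None, "arm.L": "spine", "arm.R": "spine"}
relations = build_pose_relations(parents)
```

Anything which depends on the whole pose (the Armature Modifier, for example) would then only need a relation to the flush operation.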

One last bit here is dependency from animation. Currently pose component depends on animation operation node associated with action stored in the object ID.

Here is the question, shall we consider animation on armature level here?

Bone component

The bone component is responsible for making sure a single bone (or, more precisely, pose channel) is up to date and ready for use by others. The idea is that, whether the bone is part of an IK solver or not, depending on the bone component will always make sure the final pose matrix is evaluated.

That said, things like a bone parent relation should use the bone component as a dependency, without worrying about anything else.

The bone component might consist of the following operations, which form a linear dependency chain (they should be executed one by one):

- Bone Transforms gets the bone transformation based on its parent, but doesn't take constraints into account. Basically it's just a call of BKE_pose_where_is_bone() (but without constraints) for the corresponding pose channel.

- It's a bit more tricky internally because, for example, in edit mode it copies matrices from the edit bone. Plus, in case the bone is part of a solver, nothing is to be performed at this stage yet.

- Constraints (note: not shown on the diagram) is responsible for evaluating the constraints stack of the corresponding pose channel.

- The implementation of those two callbacks is a bit tricky at this moment. This is because we don't have storage in the depsgraph yet and can't evaluate just parenting or just constraints separately, so we only call BKE_pose_where_is_bone() from one of those operations, not from both. This keeps dependencies between constraints and other entities correct and still gives proper updates.

- Bone Final Transforms is actually a NO-OP operation, which is only needed to make sure the bone component's exit operation depends on the final bone evaluation. This NO-OP is not needed for armatures without solvers, but if a bone is involved in a solver, the bone component's exit operation should depend on the result of the solver. Relations between operations in this case are described in the next section.

Now, we need to talk about parent relations. This is actually a bit tricky and depends on whether IK solvers are involved or not. Basically the rules here are:

- Root bones depend on the Init Pose Eval operation of the pose component. So evaluation of root bones only starts when all the preparation steps are done.

- Parent relation for bones which are not part of IK. In this case child bone depends on the bone component which corresponds to the parent bone. Which means child will use final parent bone matrix as an input.

- Parent relation for bones which are part of IK. In this case child can't depend on the final result of parent component (because final matrix of parent would be only known after solving the IK). So in this case child bone depends on pre-solver operation of the parent bone channels. Depending on parent bone configuration it'll either be Constraint operation (if parent bone has constraints) or it'll be Bone Transforms operation if parent doesn't have constraints.

- NOTE: We might consider adding pre-solver NOOP node to the bone component, so adding relations will be really easy.
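The parent relation rules above boil down to a small decision, sketched here in Python. The function name and the node name strings are hypothetical; they only label which operation of the parent the child bone would be hooked up to.

```python
def parent_dependency(parent, in_ik_chain, parent_has_constraints):
    """Pick the node of the parent bone which a child bone depends on.

    The returned strings are illustrative labels, not real node names.
    """
    if not in_ik_chain:
        # Outside of IK the child uses the final parent matrix.
        return f"{parent}.BoneComponent"
    # Inside IK the final matrix is only known after solving, so the
    # child has to use the pre-solver operation of the parent.
    if parent_has_constraints:
        return f"{parent}.Constraints"
    return f"{parent}.BoneTransforms"
```

A dedicated pre-solver NO-OP node, as suggested in the note, would remove the last branch: the child could always depend on that node when IK is involved.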

One thing to be mentioned here is that currently some of the constraint dependencies exist between the constraint target and the Pose Component. This is just because of the lack of intermediate storage, so evaluation of the whole constraint stack is forced. In the future this will be changed.

This basically wraps up the description of what the bone component is.

IK solver component

This section will cover both IK and SplineIK solvers, because they're actually quite close to each other.

So the idea here is that the dependency graph has an IK Solver component, which depends on all the bones involved in the IK chain and which runs the solver on the chain.

Currently the IK solver component consists of a single operation, which calls BIK_execute_tree() for the root pose channel of the IK chain (it is BKE_splineik_execute_tree() in the case of the Spline IK solver).

This means that if the armature is branching and two leaves use IK constraints which go to the same root, there'll be only one IK solver operation for those two bones.

Now, the IK solver operation depends on the pre-solver transformation of the bones. This means the IK solver node depends on either the Bone Transforms or the Constraints operation (depending on the existence of a constraints stack on the bone, same as for the parent relation) of each bone which participates in the current solver.

To make it so bone component represents final bone matrix, Final Transform operation will depend on the IK Solver operation.

There's no need to worry about IK targets for the solver, they're already handled in the bone components.
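The relations around the solver can be summarized with a short Python sketch. It is an illustration under assumptions: `build_ik_relations` and the node name strings are hypothetical, chains are given as bone lists ordered from the IK root to the tip, and IK targets are left out since they are handled by the bone components.

```python
def build_ik_relations(chains, has_constraints):
    """Build (a, b) relations, meaning b depends on a.

    chains: list of bone name lists, each ordered from IK root to tip
    has_constraints: set of bone names which have a constraints stack
    """
    # Chains which share a root are handled by one solver operation.
    solvers = {}
    for chain in chains:
        bones = solvers.setdefault(chain[0], [])
        for bone in chain:
            if bone not in bones:
                bones.append(bone)

    relations = []
    for root, bones in solvers.items():
        solver = f"IKSolver({root})"
        for bone in bones:
            # The solver waits for the pre-solver operation of each bone.
            pre = "Constraints" if bone in has_constraints else "BoneTransforms"
            relations.append((f"{bone}.{pre}", solver))
            # The final bone matrix is only known after the solver ran.
            relations.append((solver, f"{bone}.FinalTransforms"))
    return relations


chains = [["spine", "arm.L"], ["spine", "arm.R"]]
relations = build_ik_relations(chains, has_constraints={"arm.L"})
solver_names = {a for a, b in relations if a.startswith("IKSolver")}
```

Both arms end in the same root here, so the sketch produces a single solver node which all three bones feed into.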

Simplest case of the IK solver is illustrated on the next diagram.

TODOs

There are a number of TODOs for proper armature support:

- Review animation integration into the pose evaluation: shall we split animation f-curves so they apply to individual bone channels?

- Drivers are not hooked up at all.

- Solve the silliness of "fake" dependencies, which are only needed because of the lack of intermediate storage (this is probably not so crucial, as it doesn't prevent artists from using the depsgraph).

- Make sure the depsgraph is properly rebuilt when settings change (e.g. currently changing the IK Solver constraint depth doesn't rebuild the depsgraph).