Dev:2.5/Source/Architecture/Window Manager

目次

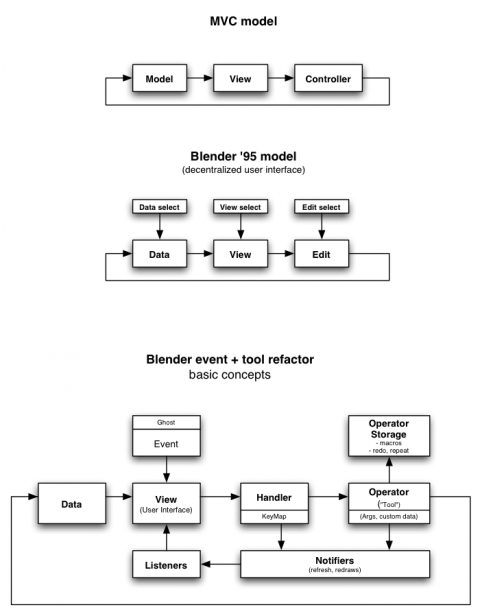

[非表示]MVC model

MVC Model

The MVC model is short for "Model, View, Controller". It is a UI development paradigm that strictly separates the code for the "Model" (the data) from the "View" (the interface and visualizations) and from the "Controller" (the operations on the data). Read more about it on Wikipedia.

Blender Data-View-Edit Model

In 1994-1995, when the NeoGeo animation studio started a redesign of its in-house tool "Traces", a similar model was developed. It was coincidentally similar to MVC (we had never heard of it then!), with one important exception.

The Blender "Data View Edit" separation can be found in the entire Blender UI. The most important implications of this concept were:

- Create a stand-alone database with a default set of data management tools (Blender Library, or Blender Kernel in code).

- Allow the UI to visualize such data flexibly: by selecting a subset of this data, it can be visualized as buttons, 3D windows, diagrams, data browsers, and so on. The user chooses the view method freely.

- Editing is always on the data, not on its visualization! Although that may seem slower in some cases, it makes the tools much more flexible, predictable, and uniform.

The main exception was that the "UI" (in this case, buttons) existed on every level, to allow selecting data, views and tools. It also meant that there was no central registration or handling of events: each view or tool could get its own sub-queue for event handling. This is one of the main limitations to be solved in the 2.5 project.

Blender 2.5 MVC Model

This diagram shows a more elaborate description of how Blender 2.5 will handle events and tools.

- The "View" is now the only place where event handling happens; this is the new WindowManager in Blender.

- The "Controller" has been split into two parts: a "View"-related component (the Event Handler) and a data-related component (the Operator). Separating these two is important for re-using Operators, for example in macros, history, redo, or Python.

- Events are strictly separated from "Notifiers". Events are user input (or timers and other external events), which must each be handled, in order of occurrence. Notifiers, by contrast, are merely suggestions, targeted only at the UI ("View") so that it can function properly.

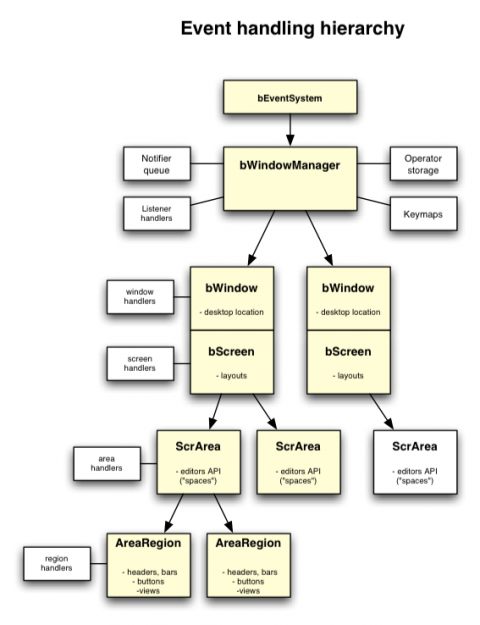

Window Manager and Event flow

Blender was designed as a single-window system, providing non-overlapping subdivided layouts ("Screens, Areas") to manage the various editors and options. For 2.5 we will tackle a couple of weak points in the original design:

- Opening a second window (the render window) required horrible, tedious code without uniform handling. An optional render-output window should be handled like any regular "Screen" in Blender.

- The subdivision system only provided support at the Area level, one per editor, with a fixed header size. This caused context problems (where to show related button options), space problems (headers were too rigid and small), and it also created problems when further area subdivisions were needed, such as channel lists for animation curves. Now, each Area should be able to split itself into multiple "AreaRegions". Such regions can also contain buttons (to stop the abuse of floating panels) or provide multiple views (a 4-split 3D window) with a single context.

- The definition and handling of Areas and Spaces (editors) should become clean, allowing custom or pluggable editors. A local "Area manager" should take care of its own region subdivision, so that it works like a fully independent editor within Blender's Window Manager.

- Although the subdivision layout has proven its use, and is now used in other programs as well, there are occasions when it becomes too rigid, limiting users or certain useful multi-window setups. Allowing multiple Blender Windows, each with subdivided Screens, makes it possible to give windows an individual context, for example for EditMode, or to show different Scenes. It will allow multi-monitor setups, each monitor with its own graphics card. It will even allow one Window to play back an animation while another window shows a frozen frame for editing.

This has led to the design and implementation of a Window Manager for Blender. Each Window Manager (there can be multiple!) holds the old Global data, the Blender database and the 'current file', and manages the various windows and the event/notifier system. The WM propagates events through a hierarchical tree, in which events are handled globally, per window or screen, per area, or per area-region.
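To make the hierarchical propagation more concrete, here is a minimal C sketch. All names (`HandlerLevel`, `wm_propagate_event`, the two example handlers) are hypothetical, not Blender's actual API. It assumes that the event is offered to the most specific level first (region, then area, then window) and that propagation stops as soon as one level swallows it; the text above does not fix that order.

```c
#include <assert.h>
#include <stddef.h>

/* Sketch only: hypothetical names, not Blender's actual WindowManager API. */
typedef enum { EVT_PASS, EVT_SWALLOW } EventResult;

typedef struct Event {
    int type;
} Event;

/* A handler is reduced to a callback; real handlers also carry keymaps, bounds, etc. */
typedef EventResult (*HandlerFn)(const Event *ev, void *owner);

typedef struct HandlerLevel {
    const char *name;   /* "region", "area", "window", ... */
    HandlerFn handler;  /* NULL when the level has no handler */
    void *owner;        /* the region/area/window the handler belongs to */
} HandlerLevel;

/* Offer the event to each level in order until one swallows it.
 * Returns the index of the level that handled it, or -1 if none did. */
int wm_propagate_event(const Event *ev, const HandlerLevel *levels, size_t count)
{
    assert(ev != NULL);
    for (size_t i = 0; i < count; i++) {
        if (levels[i].handler != NULL &&
            levels[i].handler(ev, levels[i].owner) == EVT_SWALLOW)
            return (int)i;
    }
    return -1;
}

/* Example handlers: the region only wants event type 1; the window takes anything. */
static EventResult region_handler(const Event *ev, void *owner)
{
    (void)owner;
    return ev->type == 1 ? EVT_SWALLOW : EVT_PASS;
}

static EventResult window_handler(const Event *ev, void *owner)
{
    (void)ev;
    (void)owner;
    return EVT_SWALLOW;
}
```

With levels `{region, area (no handler), window}`, an event of type 1 stops at the region, while any other event falls through to the window.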

Note: when reading a .blend file, code attempts to match multiple-window WindowManagers to single-window ones, or vice versa. The current convention is to "match" the existing window layout, to avoid opening or closing windows. This is still under development.

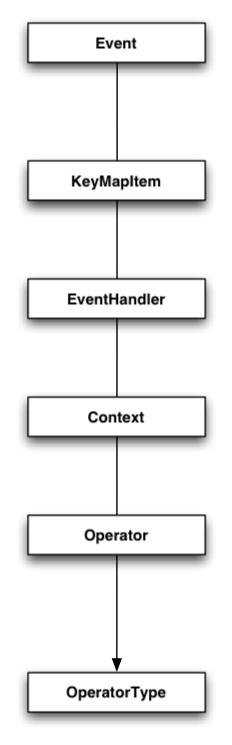

Event - Operator pipeline

This diagram shows the main building blocks for the event/operator system.

Event

- keys, mouse, devices, timers

- custom data (tablet, drag and drop)

- modifier state, mouse coords

- key modifiers (LMB, H-key, etc)

- modifier order (Ctrl+Alt or Alt+Ctrl)

- gestures (border, lasso, lines, etc)

- multi-event (H, J, K etc)
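A minimal sketch of how an event could carry the information listed above, assuming modifiers are stored as bit flags. The type, flag and function names are hypothetical, not Blender's real event definitions. Note that a plain bitmask cannot tell Ctrl+Alt from Alt+Ctrl; tracking the modifier order would need an extra field.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Sketch only: hypothetical names and values, not Blender's real event definitions. */
typedef enum { EVT_KEY, EVT_MOUSEBUTTON, EVT_MOUSEMOVE, EVT_TIMER } EventType;

enum { MOD_SHIFT = 1 << 0, MOD_CTRL = 1 << 1, MOD_ALT = 1 << 2 };

typedef struct Event {
    EventType type;
    int code;            /* key or button code, e.g. 'H' */
    int value;           /* 1 = press, 0 = release */
    unsigned modifiers;  /* OR of MOD_* flags held when the event occurred */
    int x, y;            /* mouse coordinates */
    void *customdata;    /* device payload (tablet data, drag and drop), may be NULL */
} Event;

/* True when ev is a key press of `code` with exactly `modifiers` held. */
bool event_is_key_press(const Event *ev, int code, unsigned modifiers)
{
    assert(ev != NULL);
    return ev->type == EVT_KEY && ev->code == code && ev->value == 1 &&
           ev->modifiers == modifiers;
}
```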

KeyMap

- own keymaps for windows, screens, areas, regions

- one handler can do entire keymap with many items

- items in keymap store OperatorType identifier string

- keymaps can also be created for temporary modes (grab, edge slide)

- default maps built-in; user maps override

- keymap editor in outliner?
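A sketch of a keymap whose items store an OperatorType identifier string, as described above. `KeyMap`, `KeyMapItem`, `keymap_find` and `keymap_resolve` are hypothetical names. The override rule is an assumption: the user map is searched item by item before the built-in map, rather than replacing it wholesale.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Sketch only: hypothetical types, not Blender's keymap API. */
typedef struct KeyMapItem {
    int key;             /* event code that triggers the item */
    unsigned modifiers;  /* required modifier flags */
    const char *idname;  /* OperatorType identifier string */
} KeyMapItem;

typedef struct KeyMap {
    const char *name;    /* owner, e.g. a window, area or region type */
    const KeyMapItem *items;
    size_t count;
} KeyMap;

/* Return the operator idname bound to (key, modifiers), or NULL if unbound. */
const char *keymap_find(const KeyMap *km, int key, unsigned modifiers)
{
    if (km == NULL)
        return NULL;
    for (size_t i = 0; i < km->count; i++) {
        const KeyMapItem *kmi = &km->items[i];
        if (kmi->key == key && kmi->modifiers == modifiers)
            return kmi->idname;
    }
    return NULL;
}

/* Assumed override rule: the user map is searched first, the built-in map second. */
const char *keymap_resolve(const KeyMap *user, const KeyMap *builtin,
                           int key, unsigned modifiers)
{
    const char *idname = keymap_find(user, key, modifiers);
    return idname != NULL ? idname : keymap_find(builtin, key, modifiers);
}
```

Because items only store an identifier string, the handler that owns the keymap can look up the OperatorType at run time, and a keymap can be saved or edited without touching operator code.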

EventHandler

- can do boundbox test

- event match test using keymap

- context match using handler-operator type callback

- swallows or passes on events

- default handlers are added by internal configure code

- temporary or modal/blocking handlers for menus or modes

- operators can add handlers, Python can add handlers, etc.

- special event handlers for notifiers, buttons, ...
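The handler checks listed above (boundbox test, keymap match, context match, swallow or pass) can be chained as in this minimal sketch. All names are hypothetical, the keymap is reduced to a single key, and modal/blocking handlers are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Sketch only: hypothetical types, not Blender's handler API. */
typedef struct Rect { int xmin, ymin, xmax, ymax; } Rect;
typedef struct Event { int key; int x, y; } Event;
typedef struct Context { bool has_active_object; } Context;  /* stand-in for bContext */

typedef struct EventHandler {
    const Rect *bounds;              /* NULL: skip the boundbox test */
    int key;                         /* the single keymap item this handler reacts to */
    bool (*poll)(const Context *C);  /* context check from the operator type, may be NULL */
    void (*exec)(Context *C);        /* the operator to run */
} EventHandler;

static bool rect_contains(const Rect *r, int x, int y)
{
    return x >= r->xmin && x <= r->xmax && y >= r->ymin && y <= r->ymax;
}

/* Returns true if the handler swallowed the event, false if it passes it on. */
bool handler_handle(const EventHandler *h, Context *C, const Event *ev)
{
    assert(h != NULL && C != NULL && ev != NULL);
    if (h->bounds != NULL && !rect_contains(h->bounds, ev->x, ev->y))
        return false;  /* boundbox test failed */
    if (ev->key != h->key)
        return false;  /* no keymap match */
    if (h->poll != NULL && !h->poll(C))
        return false;  /* keymap matched, context did not */
    h->exec(C);
    return true;
}

/* Example operator: only runs when there is an active object. */
static int select_count = 0;
static bool poll_active_object(const Context *C) { return C->has_active_object; }
static void exec_select(Context *C) { (void)C; select_count++; }
```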

Context

- Blender-wide uniform definition for how to retrieve data

- replacement for the ugly G global and other globals

- every operator gets Context as argument

- Scene, Object, and modal contexts possible

- Window, Screen, Area, Region contexts

- 'get' functionality, like GetVisibleObjects using bContext

- Custom contexts possible for Python scripting

Operator

- The local part of Operations

- stores custom data (temporary) and properties (saved)

- Operators can be saved in files, for macros, redo, ...

- Operator calls don't draw, but send notifications

- Operators can add themselves to a new modal handler (like the Grabber: on G-key it adds a new handler with its own keymap)

- points to Blender builtin bOperatorType

OperatorType

- Unique identifier string ("WM_OP_ScreenDuplicate") for storage

- interaction callbacks for starting menus and adding modal handlers

- exec callback should be able to redo entire operation using Operator properties + bContext

- poll callback to verify if Context matches.
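A sketch of how an Operator and its OperatorType could be laid out in C. `bOperatorType` and `bContext` are named in the text; every field, the `bOperator` struct, the `OPERATOR_*` return codes and `operator_call_exec` are assumptions for illustration. The example type reuses the identifier string "WM_OP_ScreenDuplicate" from the list above, with its body reduced to a counter.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Sketch only: field layout, bOperator and return codes are assumptions. */
enum { OPERATOR_FINISHED = 1, OPERATOR_CANCELLED = 2, OPERATOR_RUNNING_MODAL = 4 };

typedef struct bContext { int active_area; } bContext;  /* stand-in; -1 = no area */
typedef struct bOperator bOperator;

typedef struct bOperatorType {
    const char *idname;                                   /* unique, stored in files */
    bool (*poll)(bContext *C);                            /* does the Context match? */
    int (*exec)(bContext *C, bOperator *op);              /* redo from properties + Context */
    int (*invoke)(bContext *C, bOperator *op);            /* optional: menus, modal handler */
    int (*modal)(bContext *C, bOperator *op, int event);  /* optional */
} bOperatorType;

struct bOperator {
    const bOperatorType *type;  /* points to the built-in type */
    double properties[4];       /* saved state; a real system would use named properties */
    void *customdata;           /* temporary runtime data, never saved */
};

/* Non-interactive call: check poll, then exec. */
int operator_call_exec(bContext *C, bOperator *op)
{
    assert(op != NULL && op->type != NULL && op->type->exec != NULL);
    if (op->type->poll != NULL && !op->type->poll(C))
        return OPERATOR_CANCELLED;
    return op->type->exec(C, op);
}

/* Example type: duplicate the active screen (body reduced to a counter). */
static int duplicate_count = 0;
static bool poll_has_area(bContext *C) { return C->active_area >= 0; }
static int exec_screen_duplicate(bContext *C, bOperator *op)
{
    (void)C;
    (void)op;
    duplicate_count++;
    return OPERATOR_FINISHED;
}
static const bOperatorType ot_screen_duplicate = {
    "WM_OP_ScreenDuplicate", poll_has_area, exec_screen_duplicate, NULL, NULL
};
```

Keeping `poll` separate from `exec` lets a handler ask whether an operator applies before running it, and lets `exec` assume a valid Context.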

Operator design notes

When adding a new Operator in Blender - or converting old tools - the code should strictly separate the actual operation from all possible options and handling of Operators. Simply said, this is the first thing to design:

Any operator should be able to execute given only a Context. This is crucial for conforming to the operator specifications: operators should be re-usable, stackable into macros or history, savable in files, and usable by scripting. It means that any relevant user input has to be stored in the Operator as properties, and the operator's "poll" callback has to be designed to verify that the context is valid.

It is especially important to decide what belongs in the operator state (the properties) and what belongs in the context. Balancing the two well determines how practical the operators are to (re-)use, and what they mean for handlers.

In theory, it's possible to make a Context-less operator like "translate all objects in all databases 10 units", or a completely state-less operator like "translate the selected objects in this view to the 3D cursor".

Here's an example of how operators could be designed:

| Operator | Context | State properties |
|---|---|---|
| Split Area | Active area | direction, split point (0.0-1.0) |
| Add UV Sphere | 3D window | location (XYZ), radius, subdivision (UV) |
| Extrude | 3D window, editmode mesh, selection | extrusion type, translation (XYZ) or axis + units |
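For the Split Area row, a minimal sketch of an operator that runs with only a Context plus its state properties. The struct and function names are hypothetical, and the "split" is modelled purely as dividing a rectangle in two; a real implementation would also create the new Area and its regions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Sketch only: hypothetical names; only a rectangle split is modelled. */
typedef struct Area { int x, y, width, height; } Area;
typedef struct bContext { Area *active_area; } bContext;  /* stand-in for the real Context */

typedef enum { SPLIT_HORIZONTAL, SPLIT_VERTICAL } SplitDirection;

/* State properties: everything the user chose, nothing taken from the Context. */
typedef struct SplitAreaProps {
    SplitDirection direction;  /* VERTICAL: vertical divider, splits the width */
    float factor;              /* split point, 0.0-1.0 */
} SplitAreaProps;

bool split_area_poll(const bContext *C) { return C->active_area != NULL; }

/* exec reads only the Context and the properties, so it can be replayed for
 * redo, macros or scripting. Writes the newly created area to *new_area. */
bool split_area_exec(bContext *C, const SplitAreaProps *props, Area *new_area)
{
    assert(C != NULL && props != NULL && new_area != NULL);
    if (!split_area_poll(C) || props->factor <= 0.0f || props->factor >= 1.0f)
        return false;
    Area *a = C->active_area;
    *new_area = *a;
    if (props->direction == SPLIT_VERTICAL) {
        int w = (int)(a->width * props->factor);
        new_area->x = a->x + w;
        new_area->width = a->width - w;
        a->width = w;
    } else {
        int h = (int)(a->height * props->factor);
        new_area->y = a->y + h;
        new_area->height = a->height - h;
        a->height = h;
    }
    return true;
}
```

A 200-pixel-wide area split vertically at 0.25 leaves the original area 50 pixels wide and gives the new area the remaining 150.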

The state variables in operators are all Properties, optionally saved in files. Such properties can be listed by default in an Operator stack (or "last command Panel"), not only to show what happened, but especially to allow redoing or repeating the operation via buttons. The new Python API can then even automatically print the associated Python command line in a console!
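A minimal sketch of such an Operator stack, assuming each entry stores the operator identifier, its saved property and its exec callback. `OpRecord`, `OpStack`, `op_run`, `op_repeat_last`, `op_format_command` and the identifier "OBJECT_OP_Translate" are all hypothetical, and the printed command format is made up for illustration; it is not the syntax of the real Python API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Sketch only: hypothetical names; the command-line format is invented. */
typedef struct bContext { float location; } bContext;  /* stand-in for scene data */

/* One entry in the operator stack: type identifier, saved property, exec callback. */
typedef struct OpRecord {
    const char *idname;
    const char *prop_name;
    float prop_value;
    void (*exec)(bContext *C, float value);
} OpRecord;

#define OP_STACK_MAX 16
typedef struct OpStack {
    OpRecord items[OP_STACK_MAX];
    size_t count;
} OpStack;

/* Execute an operator and remember it, so it can be shown, repeated or scripted. */
void op_run(OpStack *stack, bContext *C, OpRecord rec)
{
    assert(stack != NULL && C != NULL && rec.exec != NULL);
    rec.exec(C, rec.prop_value);
    if (stack->count < OP_STACK_MAX)
        stack->items[stack->count++] = rec;
}

/* Repeat the last operator with its stored properties; returns 0 if the stack is empty. */
int op_repeat_last(OpStack *stack, bContext *C)
{
    if (stack->count == 0)
        return 0;
    OpRecord last = stack->items[stack->count - 1];
    op_run(stack, C, last);
    return 1;
}

/* Print the stored call as a script-like command line. */
int op_format_command(const OpRecord *rec, char *buf, size_t size)
{
    return snprintf(buf, size, "%s(%s=%g)", rec->idname, rec->prop_name,
                    (double)rec->prop_value);
}

static void exec_translate(bContext *C, float value) { C->location += value; }
```

Because each record holds only an identifier and properties, "repeat last" needs no knowledge of how the operator was originally started (key, menu or script).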

In some cases, operators are not single actions but are handled during a temporary mode. During such modes, property changes are shown interactively until the user applies the action. To allow for this, the operator type has two optional callbacks: "invoke" and "modal". In those cases you probably also want to split the execute call into three functions: "init", "apply" and "end". Since those functions are only needed for modal operators, they're not stored in the OperatorType definition.

For example, the Split Area operator is then structured as follows:

Functions: "init", "apply", "end"

Callbacks: "exec", "invoke", "modal"
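A minimal sketch of the init/apply/end functions and the exec/invoke/modal callbacks for a Split Area style operator. All names, event codes and return codes are hypothetical. The split is simplified to resizing the width of the active area; the point is the flow: exec runs init, apply and end in one go, invoke runs init and would add a modal handler, and modal applies on every mouse move until the user confirms or cancels.

```c
#include <assert.h>
#include <stdlib.h>

/* Sketch only: hypothetical names; only the resized width is modelled. */
enum { OPERATOR_FINISHED = 1, OPERATOR_CANCELLED = 2, OPERATOR_RUNNING_MODAL = 4 };
enum { EVT_MOUSEMOVE, EVT_LEFTMOUSE, EVT_ESCKEY };

typedef struct Area { int width; } Area;
typedef struct bContext { Area *active_area; } bContext;

/* Temporary runtime data, lives only while the operator runs. */
typedef struct SplitData { Area *area; int original_width; } SplitData;

typedef struct bOperator {
    float factor;           /* saved property: split point 0.0-1.0 */
    SplitData *customdata;  /* temporary, freed in end */
} bOperator;

/* Functions: init / apply / end */
static int split_init(bContext *C, bOperator *op)
{
    if (C->active_area == NULL)
        return 0;
    op->customdata = malloc(sizeof(SplitData));
    if (op->customdata == NULL)
        return 0;
    op->customdata->area = C->active_area;
    op->customdata->original_width = C->active_area->width;
    return 1;
}

static void split_apply(bOperator *op)
{
    assert(op->customdata != NULL);
    SplitData *sd = op->customdata;
    sd->area->width = (int)(sd->original_width * op->factor);
}

static void split_end(bOperator *op)
{
    free(op->customdata);
    op->customdata = NULL;
}

/* Callbacks: exec / invoke / modal */
int split_exec(bContext *C, bOperator *op)
{
    if (!split_init(C, op))
        return OPERATOR_CANCELLED;
    split_apply(op);
    split_end(op);
    return OPERATOR_FINISHED;
}

int split_invoke(bContext *C, bOperator *op)
{
    if (!split_init(C, op))
        return OPERATOR_CANCELLED;
    /* a real invoke would add a modal handler with its own keymap here */
    return OPERATOR_RUNNING_MODAL;
}

int split_modal(bContext *C, bOperator *op, int event, float mouse_factor)
{
    (void)C;
    switch (event) {
    case EVT_MOUSEMOVE:  /* preview: update the property and apply it */
        op->factor = mouse_factor;
        split_apply(op);
        return OPERATOR_RUNNING_MODAL;
    case EVT_LEFTMOUSE:  /* confirm */
        split_end(op);
        return OPERATOR_FINISHED;
    case EVT_ESCKEY:     /* cancel: restore the original state */
        op->customdata->area->width = op->customdata->original_width;
        split_end(op);
        return OPERATOR_CANCELLED;
    }
    return OPERATOR_RUNNING_MODAL;
}
```

Since the confirmed `factor` ends up in the operator's properties, the same operation can later be replayed non-interactively through exec.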

Events vs Notifiers

By definition, Notifiers are only of interest to the interface, to refresh or change views, internal modes, etc. Notifiers may even be cached: a dozen "redraws" then result in only one draw.

Most importantly, no drawing should take place while handling events; use notifiers for that.

A good notifier definition doesn't say what should happen, but what actually happened. Exceptions are general notifiers like "Redraw" (call drawing callbacks) or "Refresh" (re-initialize configuration). However, a bad notifier would be "Redraw objectbuttons", better is to use something like "Object ID=xxx changed" or "Context Active Object changed".
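A minimal sketch of notifier caching, assuming notifiers are queued while events are handled and flushed once afterwards, with identical notifiers collapsed. The notifier types describe what happened ("object changed", "active object changed") plus the generic "redraw" exception. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Sketch only: hypothetical names, not Blender's notifier API. */
typedef enum { NC_OBJECT_CHANGED, NC_ACTIVE_OBJECT_CHANGED, NC_REDRAW } NotifierType;

typedef struct Notifier {
    NotifierType type;
    int id;  /* e.g. the changed Object's ID, 0 if unused */
} Notifier;

#define NOTIFIER_QUEUE_MAX 64
typedef struct NotifierQueue {
    Notifier items[NOTIFIER_QUEUE_MAX];
    size_t count;
} NotifierQueue;

/* Queue a notifier unless an identical one is already waiting (caching). */
void notifier_add(NotifierQueue *q, NotifierType type, int id)
{
    assert(q != NULL);
    for (size_t i = 0; i < q->count; i++)
        if (q->items[i].type == type && q->items[i].id == id)
            return;
    if (q->count < NOTIFIER_QUEUE_MAX)
        q->items[q->count++] = (Notifier){type, id};
}

/* Called after event handling; the only place where drawing gets triggered.
 * Returns the number of notifiers delivered. */
size_t notifiers_flush(NotifierQueue *q, void (*on_notifier)(const Notifier *))
{
    size_t n = q->count;
    for (size_t i = 0; i < n; i++)
        on_notifier(&q->items[i]);
    q->count = 0;
    return n;
}

static int redraws = 0;
static void count_redraw(const Notifier *n)
{
    if (n->type == NC_REDRAW)
        redraws++;
}
```

Twelve "redraw" notifiers plus one "object 42 changed" notifier collapse into two queued entries, and the flush triggers a single redraw.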

Managing Context

There's only one bContext struct, allocated when Blender starts and from there on propagated to the rest of Blender. How Context will evolve over the coming months is still to be resolved, but here are the initial specs:

- The Context struct itself should not be exposed outside the WindowManager, but made accessible with function calls.

- Write access to Context should be limited according to the hierarchy. The active Area, for example, can only be set inside the WindowManager.

- Context is always valid! If it stores a pointer to data, you should rely on it to exist correctly.

- For Python scripts, we could look at ways to disable modules based on context, to prevent drawing while handling events, for example.

- Changing Context happens immediately, and the change sends notifications about itself. This is why the operator poll() is crucial: rely on it to always check the Context fully, so that you can handle pointers to data without tedious checks.

- Bigger Context changes (new Screen, new Scene) might work better as Notifiers, to prevent active event handlers from making errors.

- Currently, a Context change is not stored as Operator. This will limit construction history use, but will still enable clean Macro definitions.
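A minimal sketch of Context accessed only through functions. `CTX_area`, `CTX_region`, `wm_context_set_area` and `wm_context_set_region` are hypothetical names. In a real build only the WindowManager would see the struct definition; here it is visible so the example is self-contained. The rule that setting a new Area clears the active Region is an assumed reading of "limited respecting hierarchy", and the notifier counter stands in for a real notifier queue.

```c
#include <assert.h>
#include <stddef.h>

/* Sketch only: hypothetical names, not Blender's Context API. */
typedef struct Area { int id; } Area;
typedef struct Region { int id; } Region;

/* Outside the WindowManager only a forward declaration would be visible. */
typedef struct bContext {
    Area *area;
    Region *region;
    int notifier_count;  /* stand-in for sending Context-change notifiers */
} bContext;

/* Read access for everyone. */
Area *CTX_area(const bContext *C) { return C->area; }
Region *CTX_region(const bContext *C) { return C->region; }

/* WM-only setters. Changing the area invalidates the region below it
 * in the hierarchy, and every change is announced with a notifier. */
void wm_context_set_area(bContext *C, Area *area)
{
    assert(C != NULL);
    C->area = area;
    C->region = NULL;
    C->notifier_count++;
}

void wm_context_set_region(bContext *C, Region *region)
{
    assert(C != NULL && C->area != NULL);  /* a region only exists inside an area */
    C->region = region;
    C->notifier_count++;
}
```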

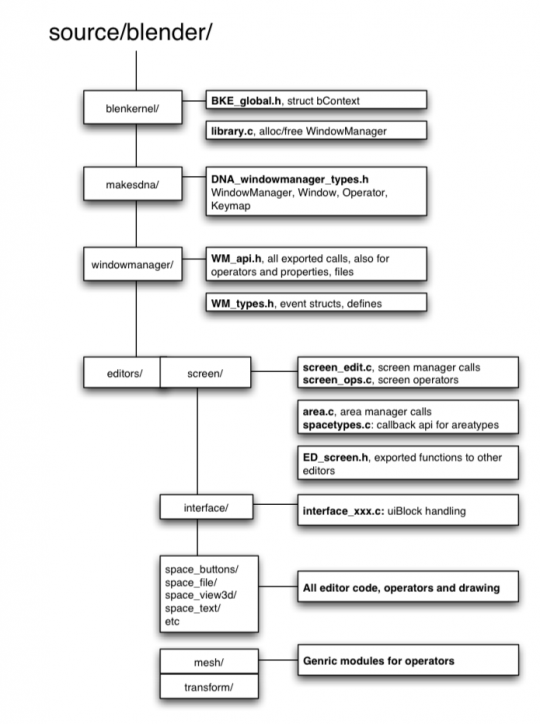

Code layout

To the right is an (incomplete) overview of the current code structure. The old directories blender/src and blender/include have disappeared and were split into two parts:

- windowmanager module for all general windowing/event/handler/operator stuff

- editors module, for Screen layouts, Area definitions and editors themselves

- todo: split up WM api further, to show which part can be revealed to editors

- todo: check for bad level calls, so that Blender can be built without the WM and editors

Open Issues

- How should other devices, such as tablets, be configured, and how do they get mapped to mouse buttons?