Blender 2.8: Dependency Graph

This document covers the design specification of the dependency graph needed to support the new features of the Blender 2.8 project.

Introduction

Let’s start by making it really clear what the dependency graph is, what it is supposed to do, and what it is not supposed to do under any circumstances. The main goal of the dependency graph is to make sure scene data is properly updated after any change, in the most efficient way. This means the dependency graph only updates what depends on the modified value and does not update anything the change did not affect. This way artists always have the scene in a relevant state at the highest possible update frame rate. The dependency graph does this by preprocessing the scene: it creates a graph where nodes are entities of the scene (for example, objects) and edges are relations between these entities (for example, when Object A has Object B as its parent, the graph has an edge between the nodes which correspond to these two objects).
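To make the "update only what depends on the change" rule concrete, here is a minimal sketch. The `DependencyGraph` class and its methods are hypothetical and much simpler than Blender's actual depsgraph: nodes are identified by name, an edge from A to B means "B depends on A", and the set of nodes to update after a change is everything reachable from the changed node.

```cpp
#include <cassert>
#include <queue>
#include <string>
#include <unordered_map>
#include <unordered_set>
#include <vector>

// Hypothetical minimal model (not Blender's Depsgraph class): nodes are scene
// entities, and an edge from A to B means "B depends on A".
class DependencyGraph {
 public:
  void add_relation(const std::string &from, const std::string &to) {
    dependents_[from].push_back(to);
    dependents_[to];  // make sure leaf nodes exist as well
  }

  // Everything that must be re-evaluated after `changed` was modified: the node
  // itself plus every node reachable from it. Unrelated nodes are never visited.
  std::unordered_set<std::string> nodes_to_update(const std::string &changed) const {
    std::unordered_set<std::string> visited{changed};
    std::queue<std::string> pending;
    pending.push(changed);
    while (!pending.empty()) {
      const std::string node = pending.front();
      pending.pop();
      auto it = dependents_.find(node);
      if (it == dependents_.end()) {
        continue;
      }
      for (const std::string &dependent : it->second) {
        if (visited.insert(dependent).second) {
          pending.push(dependent);
        }
      }
    }
    return visited;
  }

 private:
  std::unordered_map<std::string, std::vector<std::string>> dependents_;
};
```

With Object A parented to Object B, the relation is `add_relation("ObjectB", "ObjectA")`: moving B updates A as well, while an unrelated Object C is never touched.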

Roughly speaking, the dependency graph is responsible for dynamic updates of the scene, where some value varies over time. It is not responsible for one-time changes. For example, the dependency graph is responsible for F-Curve evaluation, but not for the edge Subdivide operation in mesh edit mode.

Overview

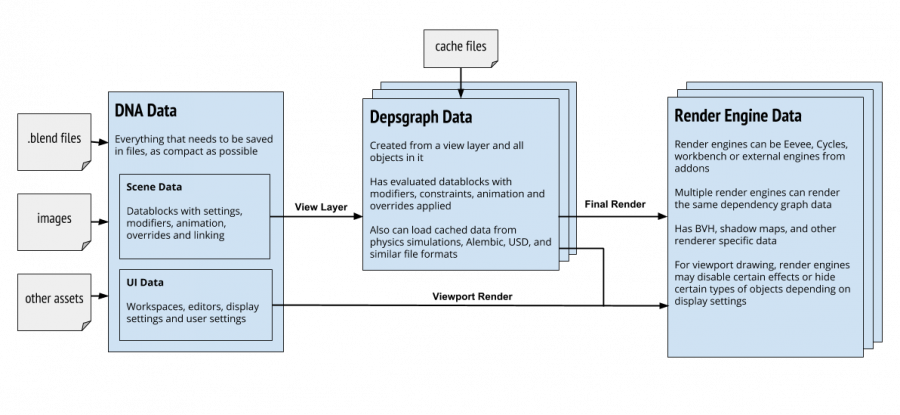

The new design overview is quite simple and is shown on the following picture.

The changes from the existing design in the 2.7x series can be summarized as follows:

- DNA data is read directly from the .blend file. It is only as simple and small as is needed to represent the scene in external storage.

- DNA data has no runtime fields.

- The dependency graph applies all the required changes (modifiers, constraints, etc.) to a copy of the DNA data. We call such data generated.

- The dependency graph stores the evaluation result itself. None of the changes are applied to the original DNA.

- Render engines work with the generated data provided by the dependency graph and never touch the original DNA.

- Render engines will have a generalized and centralized API to store engine-specific data.

All this is needed to support features such as overrides and showing the scene in different states in different windows. It looks quite simple, but it requires some big design decisions to cover all possible tools and corner cases.

Dependency graph ownership

Before answering the question of who owns the dependency graph, let’s briefly cover workspaces.

A workspace is a way to organize an artist’s workflow. The active workspace pointer is stored per window, so a window always has exactly one active workspace. The workspace itself defines the render engine (which may be the same as the scene render engine, but not necessarily). The workspace also defines the active scene layer (the layer which is visible in all editors of this workspace).

These are really important statements, because both the render engine and the active scene layer define how objects are evaluated to their final state: the render engine can have simplification settings, and the layer can have overrides.

The decision here is that the window owns the dependency graph (for interactive operations; for final render, see the paragraph below). When the artist changes the workspace, or selects another render engine or scene layer, the window is asked to re-create its dependency graph so that it corresponds to the new window/workspace state.

For the final F12 render, ownership is a bit simpler: the dependency graph is owned by the Render structure.

This way we get scene evaluation that is as fast as possible for the visible data, and we support all needed context-related overrides.
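A minimal sketch of window ownership, assuming the evaluation context can be reduced to a key of workspace, render engine and scene layer. `EvalContext`, `Depsgraph` and `Window` here are hypothetical stand-ins, not Blender's structures; the point is only that the window keeps one graph and rebuilds it when the key changes.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical key: everything that changes how objects evaluate for a window.
struct EvalContext {
  std::string workspace;
  std::string render_engine;  // may differ from the scene's render engine
  std::string scene_layer;
  bool operator==(const EvalContext &other) const {
    return workspace == other.workspace && render_engine == other.render_engine &&
           scene_layer == other.scene_layer;
  }
};

struct Depsgraph {
  EvalContext context;  // the state this graph was built for
};

// The window owns its dependency graph and re-creates it whenever the
// workspace, render engine or scene layer changes.
class Window {
 public:
  Depsgraph &depsgraph_for(const EvalContext &context) {
    if (!depsgraph_ || !(depsgraph_->context == context)) {
      depsgraph_.reset(new Depsgraph{context});
      ++rebuild_count;
    }
    return *depsgraph_;
  }

  int rebuild_count = 0;  // only for illustration

 private:
  std::unique_ptr<Depsgraph> depsgraph_;
};
```

For F12 rendering the same idea applies, with the Render structure holding the graph instead of the window.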

Generated data and copy on write

The dependency graph always performs evaluation on a copy of the original data. This is the only way to support data being in different states at the same time (for example, a low-res character in the rest pose in one viewport and a hi-res animation playing back in another viewport), and it is also the way to solve threading conflicts between render and viewport threads. There are several questions to be answered here.

What are the data structures for generated data?

For a long time Blender used the original Object DNA structure to evaluate animation data and the object’s transformation, and used DerivedMesh to store the geometry itself. Using DerivedMesh had some annoying aspects, mainly that some algorithms needed to be implemented twice (for the actual Mesh and for DerivedMesh). Also, the fact that DerivedMesh is dangerous to expose via the Python API made us introduce bpy.data.meshes.new_from_object(), which is used by all external render engines and exporter scripts.

The idea is to use the same DNA structures as stored in the .blend file (possibly with extra data filled in) for the generated data. This has the following advantages:

- We have a single API to access both the original data (which might be required by some tools) and the final generated data. Render engines simply do not care where the data is coming from, since it is handled the same way in either case.

- It naturally allows us to have overrides on transformation (instead of porting transformation to DerivedMesh, which would make DerivedMesh almost the same as Mesh).

- This will be a natural way to support proxies on everything.

One more point to raise about DerivedMesh is that the drawing code will be moved out of DerivedMesh. This makes DerivedMesh essentially the same as Mesh, so there is no point in having DerivedMesh at all.

When is the generated data created?

This is quite a tricky one. On the one hand, we want all the data to be created as late as possible; ideally, new data should be created from the evaluation threads. On the other hand, with the current dependency graph design we have to know all the pointers the evaluation functions are dealing with at dependency graph construction time.

There is quite an easy workaround for that. At dependency graph construction we create a shallow copy of the corresponding datablock. Shallow here means that we only allocate memory of the size of the structure but don’t fill it in (for example, we allocate a structure of type Mesh but don’t duplicate any vertex arrays). All the contents of the duplicated block are copied from the evaluation threads.

Here is a brief code snippet to visualize the idea better.

```cpp
ID *DEG_create_shallow_copy(ID *original_id)
{
  /* DataType is a placeholder for the concrete DNA type of original_id
   * (Object, Mesh, ...). Only the struct itself is allocated here; no arrays
   * or other data owned by the datablock are duplicated. */
  ID *local_id = (ID *)MEM_callocN(sizeof(DataType), "shallow copy for depsgraph");
  /* Store the original ID so we can do the following things:
   * - Fill in the contents of the copy at evaluation time.
   * - Go from the evaluated object back to the original one (some tools need that).
   */
  local_id->newid = original_id;
  /* Mark the ID as a copy whose contents still need to be filled in. */
  local_id->eval_flag |= DEG_COPIED;
  return local_id;
}

void DEG_finish_copy(ID *id)
{
  /* Switch over all possible ID types and copy data such as mesh vertices. */
}

void DepsgraphNodeBuilder::build_object(Scene *scene, Object *object)
{
  /* ... */
  Object *local_object = (Object *)DEG_create_shallow_copy(&object->id);
  add_operation_node(&local_object->id,
                     DEPSNODE_TYPE_TRANSFORM,
                     DEPSOP_TYPE_EXEC,
                     function_bind(BKE_object_eval_transform,
                                   _1,
                                   scene,
                                   local_object),
                     DEG_OPCODE_OBJECT_UBEREVAL);
  /* ... */
}

static void deg_eval_task_run_func(DepsgraphNode *node)
{
  /* ... */
  ID *id = node->getCorrespondingID();
  /* Complete the shallow copy before any evaluation runs on it. */
  if ((id->eval_flag & DEG_COPIED) != 0) {
    DEG_finish_copy(id);
  }
  node->update_function();
  /* ... */
}
```

Abandoning DerivedMesh will require us to update the modifier stack, but the conversion should be rather straightforward.

How to avoid heavy geometry duplication?

Initially we can copy everything, including vertices, faces and so on. This approach lets us get things up and running quickly. Later on, we can allow sharing pointers to geometry, similar to what we do for a DerivedMesh created from a Mesh without modifiers. The main points here are:

- We always create a “container” (such as the Mesh structure). These structures are quite lightweight, and we can have lots of copies of them without a memory penalty that would be a stopper for anyone.

- Such an approach is quite simple to implement (we already have everything we need in the CustomData module), and yet it fits nicely into the dependency graph design (no changes to the design are needed to support such referencing).

The way modifiers work already ensures that they don’t write to a CustomData layer which is referenced from the original datablock. Modifiers will behave the same way after being ported to dependency graph copy-on-write, without anything special needing to be implemented in them. Such an always-copied container structure (like Mesh) also allows us to store per-object VBOs for drawing in the Mesh itself.
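The container-plus-shared-layer idea can be sketched as follows. This is an illustration only: `std::shared_ptr` reference counting stands in for whatever sharing mechanism CustomData actually provides, and `MeshContainer`, `verts_for_write()` and `offset_modifier()` are hypothetical names. The sketch is single-threaded; `use_count()` is not a reliable ownership test under concurrent access.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical stand-in for a CustomData layer: a flat array of coordinates.
using VertexLayer = std::vector<float>;

// The always-copied "container" (playing the role of Mesh). Copying it is
// cheap: only the pointer is copied and the vertex array itself is shared.
struct MeshContainer {
  std::shared_ptr<VertexLayer> verts;

  // Before writing, make sure this container owns its layer exclusively. If the
  // layer is still referenced elsewhere (e.g. by the original datablock),
  // duplicate it first so the other owner never sees the change.
  VertexLayer &verts_for_write() {
    if (verts.use_count() > 1) {
      verts = std::make_shared<VertexLayer>(*verts);
    }
    return *verts;
  }
};

// A "modifier" that offsets every coordinate. It only writes through
// verts_for_write(), so it never modifies a shared layer in place.
void offset_modifier(MeshContainer &mesh, float offset) {
  for (float &co : mesh.verts_for_write()) {
    co += offset;
  }
}
```

Until a modifier actually writes, the evaluated container shares the original vertex array, so an unmodified mesh costs only one small struct.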

What about data compression inside the generated datablock?

The answer here is: memory is becoming cheaper much faster than we can tackle all the Blender 2.8 projects.

Mesh objects

The idea is to evaluate all mesh-type objects to a Mesh. This way we can use a single API to access generated data and eliminate the temporary meshes created with bpy.data.meshes.new_from_object(). This makes external render engines and exporters work more uniformly.

Non-mesh objects

While it is straightforward to evaluate mesh objects to Mesh, it is trickier to deal with non-mesh data such as curves, NURBS surfaces and metaballs. The proposal is as follows:

- We evaluate all this data as-is, so nothing needs to change in surface evaluation.

- We convert the evaluated result to a Mesh after the geometry is calculated, unless the render engine tells us it knows how to handle that particular non-mesh object type itself.

This approach lets all render engines handle non-mesh datablocks quite easily, without the need to add special cases to every render engine.
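The conversion rule above reduces to a small decision per object. The sketch below assumes a hypothetical capability query (`RenderEngineCaps`) through which an engine lists the non-mesh types it draws natively; none of these names come from Blender.

```cpp
#include <cassert>
#include <set>

// Hypothetical object types and engine capability query (not Blender's API).
enum class ObjectType { Mesh, Curve, Surface, MetaBall };

struct RenderEngineCaps {
  std::set<ObjectType> native_types;  // non-mesh types the engine handles itself
  bool handles_natively(ObjectType type) const { return native_types.count(type) != 0; }
};

// What form of evaluated data an object hands to the render engine.
enum class EvaluatedForm { Mesh, Native };

EvaluatedForm evaluated_form(ObjectType type, const RenderEngineCaps &engine) {
  if (type == ObjectType::Mesh) {
    return EvaluatedForm::Mesh;  // mesh objects always evaluate to Mesh
  }
  // Non-mesh geometry is first evaluated as-is; it is converted to a Mesh
  // afterwards unless the engine says it can consume it directly.
  return engine.handles_natively(type) ? EvaluatedForm::Native : EvaluatedForm::Mesh;
}
```

An engine that declares nothing gets Meshes for everything, which is what keeps simple engines free of per-type special cases.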

TODO: Think about how we can speed up evaluation here.

Copy on write for non-ID data

Some non-ID data also seems to need copy-on-write coverage. Examples:

- Layer collections, to store evaluated override values.

- SceneLayer (perhaps), to store some context/evaluation-specific data such as geometry statistics.

The way to handle this is to first make a CoW copy of the ID block which owns that data, and then copy the data from within the new ID datablock. This way we don’t need to modify the dependency graph to support non-ID outer nodes. The tricky part, however, is freeing the data. The only way would be to store a special flag saying that the non-ID data was copied and needs to be freed together with the ID which owns it. This is still cleaner than modifying the dependency graph design.
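A sketch of the two-step copy and the flag-guarded free. `SceneID`, `LayerCollection` and `ID_FLAG_NONID_DATA_COPIED` are hypothetical stand-ins chosen for illustration; the real structures and flag names would differ.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical non-ID data owned by an ID (stands in for a layer collection).
struct LayerCollection {
  std::string name;
  float override_value = 0.0f;
};

// Hypothetical flag: "this ID's non-ID data was duplicated and belongs to it".
enum { ID_FLAG_NONID_DATA_COPIED = 1 << 0 };

// Hypothetical owning ID (stands in for Scene).
struct SceneID {
  int flag = 0;
  std::vector<LayerCollection> *layer_collections = nullptr;
};

// Step 1: copy the owning ID. Step 2: copy the non-ID data from within the new
// ID, and record with the flag that the copy now owns that data.
SceneID scene_cow_copy(const SceneID &original) {
  SceneID copy = original;  // shallow: still points at the original collections
  copy.layer_collections = new std::vector<LayerCollection>(*original.layer_collections);
  copy.flag |= ID_FLAG_NONID_DATA_COPIED;
  return copy;
}

// Freeing the ID frees the non-ID data only if the flag says the ID owns it,
// so freeing an original ID never touches data it merely references.
void scene_cow_free(SceneID &id) {
  if (id.flag & ID_FLAG_NONID_DATA_COPIED) {
    delete id.layer_collections;
    id.flag &= ~ID_FLAG_NONID_DATA_COPIED;
  }
  id.layer_collections = nullptr;
}
```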

Integration with render engines

Various aspects need to be known and stored by different render engines. The things common to all render engines are:

- Object transform

- Geometry itself

- Materials

These things are provided by dependency graph evaluation. To state it again: each render engine has access to a dependency graph which contains the evaluated data to be rendered by that engine (this dependency graph comes from the Window+Workspace combination for interactive rendering and from the Render structure for F12 render). This gives a natural way of having the same scene visualized in multiple states at the same time (so it is possible to have a simplified viewport used for animation and a Cycles rendered view without conflicting data). The evaluation of those components happens exactly as it always used to; the only major change is in the modifier stack, which has to work with the Mesh datablock instead of DerivedMesh.

Per-render engine data

It is important to support some render-engine data managed by the dependency graph, so that we don’t have to manually free/invalidate GPU objects from the depsgraph flush as happens currently. Instead, we should introduce a Shading component for objects. This component is responsible for tagging pre-calculated GPU arrays (or other render-engine data) as invalid when something related to shading/rendering changes. This component is not responsible for the actual data evaluation.

This component depends on the following:

- Geometry component, so VBOs are tagged for re-creation when topology changes.

- Transform component, so render engine data which depends on world position can also be updated.

- Materials used by the object.

- Scene layers/collections, so the object has proper overrides and visibility applied.

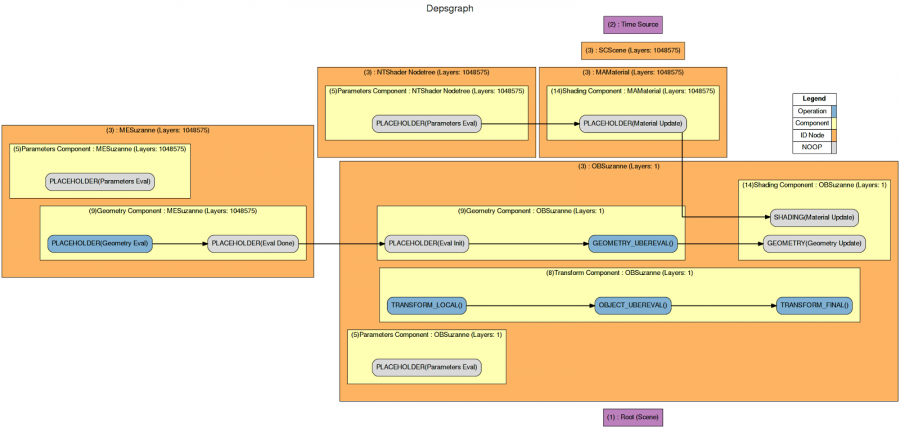

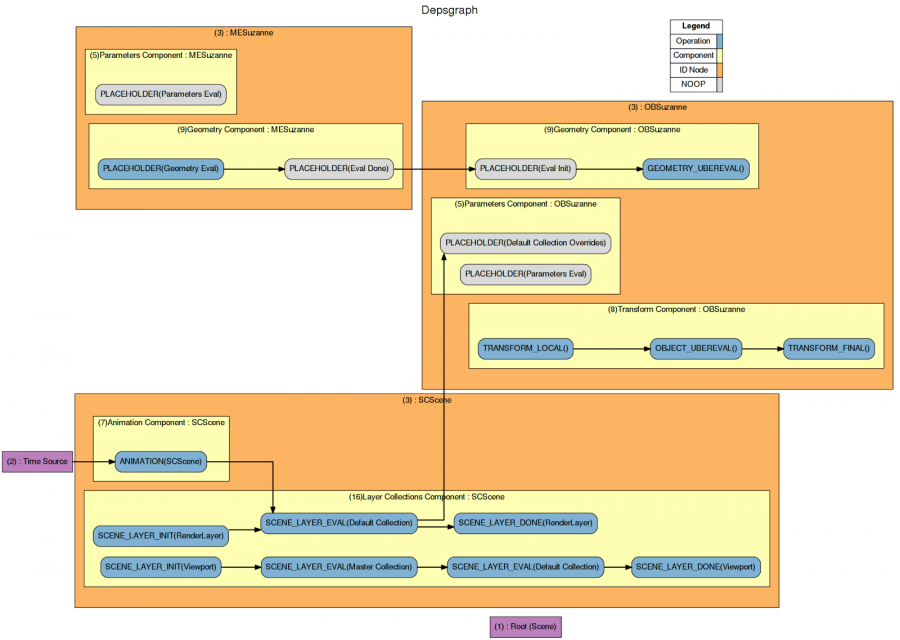

It is possible to split invalidation tagging in a way so we know what exactly was changed (so render engine can keep VBOs for geometry when only material changes). The image below briefly visualizes depsgraph with such operations and relations.

The render engine specific data is stored in Mesh and is expected to be a “subclass” of the following structure:

```c
enum {
  DEG_RE_MATERIALS_UPDATE = (1 << 0),
  DEG_RE_GEOMETRY_UPDATE = (1 << 1),
  /* ... */
};

struct DEGRenderEngineData {
  int flag; /* Bit-field of the enum above. */
};

struct Mesh {
  /* ... */
  struct DEGRenderEngineData *render_engine_data;
  /* ... */
};
```

This way the dependency graph update does not need to know anything about the specifics of the render engine itself, and it is able to inform any render engine about updated data. It is up to the render engine to allocate render_engine_data when needed. If it is NULL, nothing there is up to date.
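Here is a sketch of how both sides could use these structures. `DEG_RE_*` and `DEGRenderEngineData` follow the definitions above; `Mesh` is reduced to the one relevant field, and `MyEngineMeshData`, `deg_tag_render_engine_data()`, `engine_sync_mesh()` and `engine_free_mesh_data()` are hypothetical. The “subclass” is modelled C-style, with the base struct as the first member.

```cpp
#include <cassert>

enum {
  DEG_RE_MATERIALS_UPDATE = (1 << 0),
  DEG_RE_GEOMETRY_UPDATE = (1 << 1),
};

struct DEGRenderEngineData {
  int flag;  // bit-field of the enum above
};

// Minimal stand-in for Mesh: only the pointer described above.
struct Mesh {
  DEGRenderEngineData *render_engine_data = nullptr;
};

// Hypothetical engine-side "subclass": the base struct is the first member, so
// a pointer to it can be stored in Mesh::render_engine_data and cast back.
struct MyEngineMeshData {
  DEGRenderEngineData base;
  int geometry_vbo_builds;  // stand-ins for real GPU buffers
  int material_builds;
};

// Depsgraph side: only sets bits and knows nothing about the engine.
void deg_tag_render_engine_data(Mesh *mesh, int update_flags) {
  if (mesh->render_engine_data != nullptr) {
    mesh->render_engine_data->flag |= update_flags;
  }
  // NULL already means "nothing is up to date", so there is nothing to tag.
}

// Engine side: allocate lazily, rebuild only what was tagged, clear the bits.
MyEngineMeshData *engine_sync_mesh(Mesh *mesh) {
  if (mesh->render_engine_data == nullptr) {
    MyEngineMeshData *fresh = new MyEngineMeshData{};
    fresh->base.flag = DEG_RE_MATERIALS_UPDATE | DEG_RE_GEOMETRY_UPDATE;
    mesh->render_engine_data = &fresh->base;
  }
  MyEngineMeshData *data = reinterpret_cast<MyEngineMeshData *>(mesh->render_engine_data);
  if (data->base.flag & DEG_RE_GEOMETRY_UPDATE) {
    data->geometry_vbo_builds++;
  }
  if (data->base.flag & DEG_RE_MATERIALS_UPDATE) {
    data->material_builds++;
  }
  data->base.flag = 0;
  return data;
}

void engine_free_mesh_data(Mesh *mesh) {
  delete reinterpret_cast<MyEngineMeshData *>(mesh->render_engine_data);
  mesh->render_engine_data = nullptr;
}
```

A material-only change sets just `DEG_RE_MATERIALS_UPDATE`, so the engine keeps its geometry buffers, which is exactly the split tagging described above.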

Iterators

Render engines iterate over evaluated objects using the DEG_OBJECT_ITER() macro on the C/C++ side and its analogs on the Python and C++ RNA sides. This macro iterates over all objects which are to be rendered by the render engine, so the engine does not need to worry about visibility checks and the like.

This macro also iterates into duplicated objects and marks them as coming from the dupli system, so the render engine knows the object comes from a dupli.

This macro also includes objects from the set scene and marks them as such. This allows viewport renderers (such as Eevee) to do special tricks, such as removing selection outlines from those objects.
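The iteration contract can be sketched as a callback-based function. This is not the DEG_OBJECT_ITER() implementation: `EvalObject`, `IterItem`, `deg_object_iter()` and the `ITER_FROM_*` flags are hypothetical, and duplis are reduced to a list of child names. The sketch shows the three responsibilities: skip invisible objects, expand and flag duplis, and flag set-scene objects.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical evaluated-object record (not Blender's types).
struct EvalObject {
  std::string name;
  bool visible = true;
  std::vector<std::string> dupli_children;  // objects instanced by this one
};

enum IterFlag { ITER_FROM_DUPLI = 1 << 0, ITER_FROM_SET = 1 << 1 };

// What the render engine receives for each object to draw.
struct IterItem {
  std::string name;
  int flag;
};

// Visit every object that should be rendered. Visibility is checked here, so
// engines never do it themselves; duplis and set-scene objects are flagged.
void deg_object_iter(const std::vector<EvalObject> &scene_objects,
                     const std::vector<EvalObject> &set_objects,
                     const std::function<void(const IterItem &)> &visit) {
  auto walk = [&](const std::vector<EvalObject> &objects, int base_flag) {
    for (const EvalObject &ob : objects) {
      if (!ob.visible) {
        continue;
      }
      visit({ob.name, base_flag});
      for (const std::string &child : ob.dupli_children) {
        visit({child, base_flag | ITER_FROM_DUPLI});
      }
    }
  };
  walk(scene_objects, 0);
  walk(set_objects, ITER_FROM_SET);
}
```

An engine such as a viewport renderer would then simply skip drawing outlines for items carrying `ITER_FROM_SET`.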

Integration with layers/collections

Visibility and selectability flags are among the most basic things layer collections define. What is important here is that an object can be in multiple collections and each of those collections might have different visibility/selectability flags, so some sort of “solver” is needed (it might be a really simple one, like using the settings from the bottom-most collection which contains the given object). What we don’t want is to implement such a “solver” in every render engine.
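The “really simple” solver mentioned above could look like this. It assumes collections are listed top to bottom and that the bottom-most collection containing the object wins; `LayerCollection`, `CollectionFlags` and `solve_object_flags()` are hypothetical names for illustration.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

struct CollectionFlags {
  bool visible;
  bool selectable;
};

// Hypothetical layer collection: the objects it contains plus its own flags.
struct LayerCollection {
  std::set<std::string> objects;
  CollectionFlags flags;
};

struct SolvedFlags {
  bool found;  // false if no collection contains the object
  CollectionFlags flags;
};

// "Bottom-most collection wins": walk the collections from top to bottom and
// keep the flags of the last one that contains the object.
SolvedFlags solve_object_flags(const std::vector<LayerCollection> &collections,
                               const std::string &object) {
  SolvedFlags result{false, {false, false}};
  for (const LayerCollection &lc : collections) {
    if (lc.objects.count(object) != 0) {
      result = {true, lc.flags};
    }
  }
  return result;
}
```

Because the solver lives in one place, every render engine sees the same resolved flags.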

One of the early implementations flushed these flags from layer collections to objects on the actual change. This worked, but:

- Changing the selectability flag might require render data to be re-evaluated (so that the object has no outline and things like that).

- Very similar solvers are required for more generic overrides.

So currently these flags are flushed to objects using the dependency graph evaluation system. This makes this kind of evaluation somewhat more uniform.

This approach has the following downside: re-arranging or adding/removing layer collections requires the dependency graph to be rebuilt. If that becomes a bottleneck, we can support partial graph rebuilds for certain changes.

NOTE: The current way of flushing is making me unhappy; it is possible to simplify it somewhat and make it behave the same as generic overrides. See the section below for the solution.

Overrides

This section covers overrides which are defined at the layer collection level and which are applied to all objects from that collection.

One of the important statements here is: we want overrides to be animatable. By this we mean that, for example, the color of an override might change over time (not that the fact that we are overriding something changes). To support that, we need full support from the dependency graph.

NOTE: Here we talk about animation. Drivers on overrides might work, but it is really easy to run into a dependency cycle there.

The idea is as follows:

- Layer collections go to a dedicated component of the scene ID node and are evaluated sequentially, one by one. Evaluating a layer collection merges/copies ID properties (together with selectability/visibility flags) from the parent/previous layer collection.

- Layer collection animation goes to the scene animation component of the dependency graph. This is so we don’t have to make collections real IDs.

- The object’s parameters component will have a per-collection operation node which flushes visibility/selectability and overrides from the corresponding collection to the object itself.

This way we keep all evaluation local to the datablock which needs it, avoiding possible threading issues. This is briefly visualized in the following picture.

NOTE: The example above does not include the relations between the object’s parameters operations and its geometry/transform components. Such relations will be constructed similarly to driver relations.
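A sketch of the two evaluation steps, under stated assumptions: collections are stored parent-before-child, override properties are a plain name-to-float map standing in for ID properties, and animation has already written the current frame's values into `own_props` (in the scene animation component) before this runs. `LayerCollectionNode`, `evaluate_layer_collections()`, `ObjectParams` and `flush_collection_to_object()` are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical override properties (stand-in for ID properties), keyed by name.
using OverrideProps = std::map<std::string, float>;

struct LayerCollectionNode {
  int parent;              // index of the parent collection, -1 for the root
  bool visible;
  OverrideProps own_props; // values set on this collection (possibly animated)
  // Evaluated results, filled in by evaluate_layer_collections().
  bool eval_visible = false;
  OverrideProps eval_props;
};

// Scene-level component: collections are evaluated one by one, each starting
// from its parent's evaluated state and applying its own values on top.
void evaluate_layer_collections(std::vector<LayerCollectionNode> &collections) {
  for (LayerCollectionNode &lc : collections) {
    if (lc.parent < 0) {
      lc.eval_visible = lc.visible;
      lc.eval_props = lc.own_props;
      continue;
    }
    const LayerCollectionNode &parent = collections[lc.parent];
    lc.eval_visible = parent.eval_visible && lc.visible;
    lc.eval_props = parent.eval_props;
    for (const auto &kv : lc.own_props) {
      lc.eval_props[kv.first] = kv.second;
    }
  }
}

struct ObjectParams {
  bool visible = false;
  OverrideProps props;
};

// Per-collection operation in the object's parameters component: copy the
// collection's evaluated state onto the object (object-local, no shared writes).
void flush_collection_to_object(const LayerCollectionNode &lc, ObjectParams &ob) {
  ob.visible = lc.eval_visible;
  for (const auto &kv : lc.eval_props) {
    ob.props[kv.first] = kv.second;
  }
}
```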

Invisible objects

It is important to avoid updating invisible objects, so that maximum FPS is possible even in complex scenes during animation playback. However, it is no longer possible to rely on the layer bitmask as in pre-2.8 Blender.

What we can do is use the object visibility flag rather than the layer bitmask. This is a bit tricky to implement because object visibility is itself evaluated inside the dependency graph. One option is to have two “waves” of updates: first update visibility flags (when any collection is tagged for update), then do the rest of the updates. This way, in the dependency graph we simply replace the bitwise layer comparison with an object visibility flag check.
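The two waves can be sketched as follows. Assumptions, all hypothetical: an object is visible if any of its collections is visible, and an invisible object keeps its update tag so it gets evaluated once it becomes visible again. `SceneObject` and `evaluate_two_waves()` are illustrative names only.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical per-object evaluation state.
struct SceneObject {
  std::string name;
  std::vector<int> collections;  // indices of collections containing the object
  bool visible = false;          // computed in wave 1
  bool tagged = false;           // needs re-evaluation
  int eval_count = 0;            // how many times wave 2 evaluated it
};

void evaluate_two_waves(std::vector<SceneObject> &objects,
                        const std::vector<bool> &collection_visible,
                        bool collections_tagged) {
  // Wave 1: only when a collection changed, recompute object visibility.
  if (collections_tagged) {
    for (SceneObject &ob : objects) {
      ob.visible = false;
      for (int c : ob.collections) {
        ob.visible = ob.visible || collection_visible[c];
      }
    }
  }
  // Wave 2: the visibility flag check replaces the old layer bitmask test.
  // Invisible objects keep their tag and are skipped for now.
  for (SceneObject &ob : objects) {
    if (ob.tagged && ob.visible) {
      ++ob.eval_count;
      ob.tagged = false;
    }
  }
}
```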

Local view

Local view is gone in the form we used to think of it. Instead, it will be a temporary collection created on the fly. We can use overrides and the invisible-objects optimization to keep it working by creating two nested collections. The first contains all the objects which were not selected when the Local View operator was invoked; this collection has visibility disabled. The second nested collection contains all the selected objects, and its visibility is set to Visible.

This way we don’t need anything special from dependency graph to support local view.
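A sketch of building the two temporary collections. `TempCollection`, `ViewObject`, `make_local_view()` and the collection names are hypothetical; in practice the result would feed the visibility and invisible-object logic described in the previous sections rather than being a separate code path.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical temporary collection created for local view.
struct TempCollection {
  std::string name;
  bool visible;
  std::vector<std::string> objects;
};

struct ViewObject {
  std::string name;
  bool selected;
};

// Split objects by selection state at operator invocation: unselected objects
// go into a hidden collection, selected ones into a visible one.
std::vector<TempCollection> make_local_view(const std::vector<ViewObject> &objects) {
  TempCollection hidden{"LocalView.Hidden", false, {}};
  TempCollection shown{"LocalView.Visible", true, {}};
  for (const ViewObject &ob : objects) {
    (ob.selected ? shown : hidden).objects.push_back(ob.name);
  }
  return {hidden, shown};
}
```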

Streaming

It is important to make cache streaming a native feature of the new dependency graph, so that a whole subtree can be replaced with a cache stream (most likely Alembic).

Let’s assume the following: streaming is an explicit mode of the dependency graph, and the dependency graph gets specially rebuilt when the artist says something is to be streamed from cache.

This is the opposite of some early ideas of having a runtime switch in the dependency graph, but it has the following benefits:

- It does not require any extra checks during evaluation to see what is to be evaluated and what is not, which simplifies the code and increases the maximum possible FPS.

- It is always predictable where the data comes from. There is no way that some frames are fast because they come from the cache while others are slow because they are not cached. Such a mix-up was always a pain for the physics cache, and for Gooseberry we ended up sticking to the policy “don’t try to re-cache on the fly”.

- It avoids a possible mix of an outdated cache with the real scene.

- Cache settings should belong to some ID block in the scene. Possibilities:

  - A layer collection can replace itself with a cached version.

  - A dupligroup can replace itself with a cache.

This way we can either replace individual objects with a cached version or replace the whole collection of objects.

From the dependency graph point of view, caching is a special node which reads the cache file on evaluation. The read data is converted to a CoW-type object without any original datablock, which is then fed to the render engine via depsgraph iterators. This way render engines do not care whether an object comes from the cache or from the real scene.
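A sketch of streaming as a build-time decision. `CollectionDesc`, `OperationNode`, `build_graph()` and the `"crowd.abc"` path are hypothetical; the point is that a streamed collection contributes a single cache-read node instead of its subtree, so evaluation never has to ask whether something is cached.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical collection description consumed by the graph builder.
struct CollectionDesc {
  std::string name;
  std::vector<std::string> objects;
  bool stream_from_cache = false;  // artist setting; changing it triggers a rebuild
  std::string cache_path;          // e.g. an Alembic file (illustrative only)
};

struct OperationNode {
  std::string kind;    // "object_eval" or "cache_read"
  std::string target;  // object name or cache path
};

// Streaming is decided while building the graph, not while evaluating it.
std::vector<OperationNode> build_graph(const std::vector<CollectionDesc> &collections) {
  std::vector<OperationNode> nodes;
  for (const CollectionDesc &c : collections) {
    if (c.stream_from_cache) {
      nodes.push_back({"cache_read", c.cache_path});
      continue;
    }
    for (const std::string &ob : c.objects) {
      nodes.push_back({"object_eval", ob});
    }
  }
  return nodes;
}
```

Toggling `stream_from_cache` means calling `build_graph()` again, which matches the "explicit mode, rebuilt on request" policy above.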

Data masks

Data masks were used to check which custom data layers need to be evaluated for the current display. This was originally based on iterating over windows, checking all open viewports, etc., which was quite ugly. On top of that, switching drawing modes needed special updates, because those modes might need extra custom data layers.

Now that the dependency graph is stored either per window+scene layer (for the viewport) or per scene+scene layer (for final render), data mask acquisition becomes easier. Basically, we check what the render engine needs for the current context (so Clay says it only needs plain meshes, while Eevee might request UV maps). This seems to be enough, because overlay engines (the edit mode overlay, for example) do not need any extra CD layers beyond the geometry.
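A sketch of per-depsgraph mask acquisition. The `MASK_*` bits are hypothetical stand-ins (Blender has its own `CD_MASK_*` constants), and `RenderEngineType::required_data_mask` is an assumed callback, not an existing Blender API. Since each graph has exactly one engine context, the mask is the engine's request combined with the geometry that overlays need.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical custom-data mask bits (stand-ins for illustration).
enum : uint64_t {
  MASK_GEOMETRY = 1u << 0,  // positions, edges, faces: always needed
  MASK_UV = 1u << 1,
  MASK_VERTEX_COLOR = 1u << 2,
};

// Each render engine reports what it needs for the current context.
struct RenderEngineType {
  const char *name;
  uint64_t (*required_data_mask)();
};

uint64_t clay_mask() { return MASK_GEOMETRY; }
uint64_t eevee_mask() { return MASK_GEOMETRY | MASK_UV; }

// No window or viewport scanning: one graph, one engine, plus overlays
// (which only need geometry).
uint64_t depsgraph_data_mask(const RenderEngineType &engine) {
  const uint64_t overlay_mask = MASK_GEOMETRY;
  return engine.required_data_mask() | overlay_mask;
}
```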

Duplication system

Basically, the idea here is to move the duplication system into the dependency graph iteration process. So instead of iterating over all objects, checking whether each object is a duplicator and then requesting the list of duplicated objects, we simply tell the dependency graph iterator that we want to include duplicated objects.

Duplicated objects are already tagged with FORM_DUPLI, so render engines can still apply special logic to optimize them or treat them differently.

Particles

Particles were requested to be covered by Dalai; not sure what’s special about them.

Basically, particles should become a user-guidable duplication system, integrated into the regular duplication system described above.

Some special drawing mode might be needed here to have decent visualization before the system is fully set up (so we can see some “dots” of particles as an overlay in the Cycles viewport).

Everything is a node

This is something we need to move to, but it is a long way off and we wouldn’t set it as a target for the first usable 2.8 release.

The idea of node-everything is to have the CoW system in place first. Then we can extend it to support multiple copies within the same datablock, so modifiers can share the same input, modify it differently and calculate the final result from that.

More detailed design of this system will be worked on later.