Color Space Notes

Radiometrically Linear

Radiometrically linear (often just called "linear") means that if the number of photons doubles in reality, the color values double as well. Put another way, if we have two photos or renders, each with one of two lights on, and add those images together, the result is the same as a photo or render with both lights on. It follows that a radiometrically linear space is the best choice for photorealistic rendering.

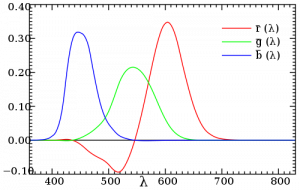
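The additivity described above can be checked numerically. The sketch below is only an illustration: the two pixel values are made up, and the sRGB curve stands in for "some non-linear encoding". It shows that adding values in linear space gives the combined-lights value, while adding display-encoded values gives something else.

```python
def srgb_encode(v):
    """Linear value -> sRGB-encoded value, using the piecewise sRGB curve."""
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1.0 / 2.4) - 0.055


def srgb_decode(v):
    """sRGB-encoded value -> linear value (inverse of srgb_encode)."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


# Hypothetical linear pixel values from two renders, one light on in each.
light_a = 0.2
light_b = 0.3

# In linear space the sum is the value of the render with both lights on.
both_linear = light_a + light_b

# Adding the display-encoded values instead does not match the encoded sum.
sum_of_encoded = srgb_encode(light_a) + srgb_encode(light_b)
encoded_of_sum = srgb_encode(both_linear)

print(both_linear, encoded_of_sum, sum_of_encoded)
```

The sum of the encoded values comes out noticeably brighter than the encoded sum, which is why compositing and lighting math is done on linear values and the encoding is applied only for display.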

However, there is no single linear color space; there is still variation here. In real life, colors form a continuous spectrum determined by the wavelength of the photons. In practice, images are stored as three components, usually RGB values, and exactly where the R, G and B primaries are located can vary. This is usually specified in terms of CIE chromaticity values and a white point, and these values may differ between color spaces even if both are linear.

Linear Color Spaces

- The Blender linear color space is defined as using the Rec709 chromaticities and D65 white point (the same as, e.g., the default Nuke linear color space). These match the chromaticities and white point of the sRGB and Rec709 display spaces, so conversion to them is only a 1D gamma-like transform applied to each RGB channel independently.

- The ACES linear color space has different chromaticities, as defined in the ACES documentation.

- S-Gamut (Sony-Gamut) again has different chromaticities, corresponding to the sensors in the Sony F65 camera. The R channel in this color space is what the red color sensor measures, and similarly for the G and B channels.

Conversion between these linear color spaces is simply a matter of applying a 3x3 matrix transform.
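As a rough sketch of where such a 3x3 matrix comes from, the code below builds an RGB to CIE XYZ matrix from chromaticities and a white point, then chains two of them. The helper names are made up for illustration. The Rec709/D65 values are the standard ones; the ACES primaries are the AP0 values as we understand them from the ACES documentation and should be verified before use. The sketch also ignores chromatic adaptation between the two white points, which a production transform would normally include.

```python
def xy_to_xyz(x, y):
    """Chromaticity (x, y) -> XYZ vector scaled so that Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inverse(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def rgb_to_xyz_matrix(red, green, blue, white):
    """Build the linear RGB -> XYZ matrix for the given chromaticities."""
    cols = [xy_to_xyz(*red), xy_to_xyz(*green), xy_to_xyz(*blue)]
    # p[i][j] is XYZ component i of primary j (primaries as columns).
    p = [[cols[j][i] for j in range(3)] for i in range(3)]
    # Scale each primary so that RGB = (1, 1, 1) maps to the white point.
    scale = mat_vec(inverse(p), xy_to_xyz(*white))
    return [[p[i][j] * scale[j] for j in range(3)] for i in range(3)]


# Rec709 primaries with D65 white point (the Blender linear space).
REC709 = rgb_to_xyz_matrix((0.64, 0.33), (0.30, 0.60), (0.15, 0.06),
                           (0.3127, 0.3290))

# Assumed ACES (AP0) primaries and white point; verify against the ACES docs.
ACES_AP0 = rgb_to_xyz_matrix((0.7347, 0.2653), (0.0, 1.0), (0.0001, -0.0770),
                             (0.32168, 0.33767))

# Linear-to-linear conversion: go through XYZ, which collapses to one matrix.
rec709_to_aces = mat_mul(inverse(ACES_AP0), REC709)
aces_to_rec709 = inverse(rec709_to_aces)

pixel = [0.25, 0.5, 0.75]
round_trip = mat_vec(aces_to_rec709, mat_vec(rec709_to_aces, pixel))
print(rec709_to_aces, round_trip)
```

Because the whole conversion is a single invertible matrix, going to another linear space and back returns the original values up to floating-point precision, provided the images are stored as floats and out-of-range values are not clipped.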

Conversion to Display

Besides the choice of linear color space, it's also important to have a reference transformation to some reference display device, by which we can judge colors. There are standard transformations here, but for best results artistic choices come in as well.

Part of those choices relates to fitting colors into the limited gamut of, e.g., an sRGB monitor. Such a monitor can't display all the colors we can see in real life, so to compensate we might map them in a way that is perceptually closer, or that makes bright colors fit inside the monitor's dynamic range when it can't actually display them. The other part is that you might want a particular look that doesn't match reality, but is similar to film printing or some other color grading choice applied across the board.
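To make the idea of fitting bright values into a display's range concrete, here is a minimal sketch of a display transform. It assumes a simple Reinhard-style curve, `v / (1 + v)`, as a stand-in tone curve; this is not the ACES RRT or any transform that currently exists in Blender, and applying it per channel is a simplification that can shift hues.

```python
def tone_map(v):
    """Reinhard-style curve: compresses [0, inf) into [0, 1)."""
    return v / (1.0 + v)


def srgb_encode(v):
    """Linear value in [0, 1] -> sRGB-encoded value."""
    if v <= 0.0031308:
        return 12.92 * v
    return 1.055 * v ** (1.0 / 2.4) - 0.055


def display_transform(rgb):
    """Scene-linear RGB -> display-ready sRGB values in [0, 1)."""
    # Negative values are clamped to zero before the tone curve.
    return tuple(srgb_encode(tone_map(max(c, 0.0))) for c in rgb)


# Hypothetical scene-linear pixel: mid gray, diffuse white, a bright highlight.
print(display_transform((0.18, 1.0, 16.0)))
```

Without the tone curve, every value above 1.0 would simply clip to white on the display; with it, highlights are compressed so that differences between very bright values remain visible.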

Typical display outputs might be sRGB monitors, Rec709 HDTVs, digital projectors or film printing.

ACES

ACES is a new standard that includes the ACES linear color space, and transformations to and from various devices. Documentation and code are in the aces-dev GitHub project. The Sony F65 camera used on Mango was built around the ACES standard.

A big part of this standard is the RRT, a reference rendering transform through which images should be displayed, in combination with a transform for the specific device. The RRT is a film print emulation, to give a film-like look. There's a paper about the design of the RRT.

OpenColorIO and CTL

OpenColorIO is a library for color management, which will be integrated into Blender. For each project you can use a color configuration file that defines all the color spaces and their transforms to and from the reference space, along with the role of each color space.

The ACES transforms are defined in CTL (Color Transformation Language), as developed by ILM. The aces-dev project includes the code to use these transforms, and they can be converted to OpenColorIO lookup tables as well.

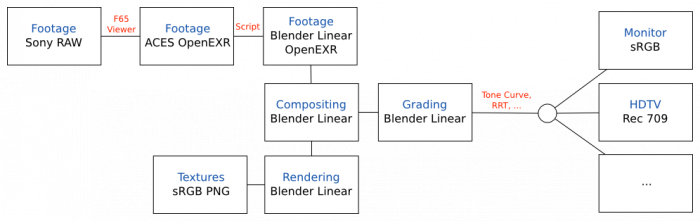

Possible Mango Color Pipeline

Probably the simplest solution here is to keep "Blender linear" as our working space, since existing material colors are already defined in this space, and the color correction nodes were made under the assumption that this is the working space. Converting our ACES footage to Blender linear should be quite simple to do as a command-line batch job and should not lead to any information loss.

For conversion to sRGB display we'll probably want a more advanced transform than currently exists in Blender. A good tone curve to map bright colors and a film-like look (using the ACES RRT or something else) would be part of the default display transform, and this is what OpenColorIO can provide.

For image textures we could simply keep using the standard sRGB to Blender linear transform.