Community:Science/Robotics


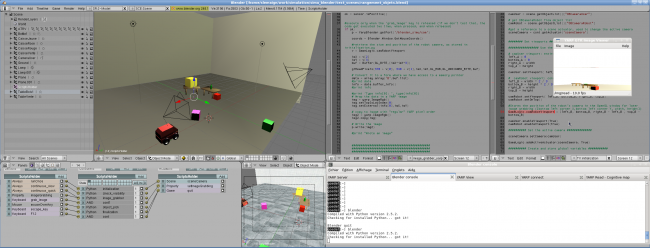

Blender For Robotics

This Wiki is dedicated to robotics projects that use Blender in some way. Its goals are:

- to collect all information that applies generically to using Blender in robotics research or robotic systems.

- to build enough critical mass in the robotics community to influence Blender's development in directions that make Blender more usable for robotics.

- to list and briefly explain the currently ongoing activities and projects, and link to their websites.

Hence, this Wiki is not the best place to post and discuss Blender scripts and patches that are useful for robotics applications; use the Blender for Robotics mailing list for that purpose instead. This mailing list is also the best place to share your opinions, questions and suggestions with robotics people about how Blender's design, implementation and user interface could be improved to make Blender more attractive for robotics applications.

What do we want to achieve?

This section discusses the expectations behind this Blender for Robotics initiative, as well as the ongoing activities and collaborations.

The big picture

Because programming complex robotics systems has a lot in common with the computer animation of simulated creatures, it makes sense to advocate the use of Blender within the robotics community (both research and industry!), as well as the implementation within Blender of robotics algorithms that have proven their value in robotics (motion control, inverse kinematics and dynamics, robot task specification, sensor processing, etc.). In addition to these functional opportunities, Blender offers another potentially very valuable tool for robot programming: its Game Engine, a graphical programming environment for defining the behaviour of actors in a game, which lets actors interface to the world via sensors and actuators and lets controllers determine their actions. The result could be a very flexible and powerful integrated development environment for robotics, which could become the open source foundation of various toolchains in robotics and other engineering domains (automation, traffic control, gait analysis, motion capture for animation, etc.).

Here is a summary of the different ways that Blender can be used in robotics, from simple to more advanced:

- Visualisation: to show (a virtual version of) the world, representing the real robot world, up to a specific level of detail and realism. This is a useful tool to understand better what is going on in the real system, e.g., by visualising what the robot (thinks it) sees. Similarly, the visualisation can show the output of planners, that provide (the actions of a robot in) a set of possible virtual versions of the real world.

- Simulation: run a program in Blender that could also run on a real setup, in order to get a qualitative idea about its behaviour and effectiveness.

- Emulation: simulation in the loop, with the aim of representing the real world to a guaranteed quantitative level of detail. For example, to try a robot control algorithm first on a Blender version of it instead of on the real robot, in order to tune its control gains or check its behaviour in a wide variety of situations that are costly or dangerous to create in the real world.

- High Level Architecture (HLA) component: one or more Blender executables act as emulators/simulators of parts of a bigger system, in which a Coordinator keeps all parts synchronized on the same virtual time.

None of the current Blender for Robotics projects has chosen to implement this HLA architecture. (Here is more explanation about the consequences of that architecture.)
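Since none of the projects uses HLA, the following is only a minimal Python sketch of its core idea: a Coordinator that advances every simulator (a "federate" in HLA terms) in lockstep, so that no part runs ahead of the shared virtual time. The names `Federate`, `CountingFederate`, `Coordinator` and the `step(t, dt)` method are hypothetical; this is not the API of any HLA runtime infrastructure, and a real setup would step separate Blender processes over the network rather than in-process objects.

```python
"""Lockstep coordinator illustrating the HLA idea of a shared virtual time.

Hypothetical names throughout; this is not the HLA RTI API.
"""
from typing import List, Protocol


class Federate(Protocol):
    """Anything (e.g. one Blender instance) that can advance its own simulation."""

    def step(self, t: float, dt: float) -> None:
        ...


class CountingFederate:
    """Toy federate that only records the virtual times it was advanced to."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.times: List[float] = []

    def step(self, t: float, dt: float) -> None:
        self.times.append(round(t + dt, 9))


class Coordinator:
    """Steps every federate to the same target time before the clock moves on."""

    def __init__(self, federates: List[Federate], dt: float) -> None:
        self.federates = federates
        self.dt = dt
        self.steps = 0
        self.time = 0.0

    def advance(self) -> None:
        # No federate may run ahead: all of them complete step k
        # before any of them is allowed to start step k + 1.
        for federate in self.federates:
            federate.step(self.time, self.dt)
        self.steps += 1
        # Recompute from the step count to avoid accumulating rounding error.
        self.time = self.steps * self.dt

    def run_until(self, t_end: float) -> None:
        while self.time < t_end - 1e-12:
            self.advance()


if __name__ == "__main__":
    arm = CountingFederate("arm_emulator")
    camera = CountingFederate("camera_simulator")
    coordinator = Coordinator([arm, camera], dt=0.01)
    coordinator.run_until(0.05)
    print(arm.times == camera.times, coordinator.time)
```

Recomputing the clock from an integer step count, rather than adding `dt` repeatedly, keeps all federates agreeing on exactly the same time stamps over long runs.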

Active projects

- The Modular Open Robots Simulator Engine, maintained by LAAS-CNRS in Toulouse, France. Status:

- One dedicated full-time engineer working on the project, until June/July 2012

- Various robots and robotics sensors implemented in the simulation

- Modular architecture based on Blender and Python files

- Compatibility with various middlewares: sockets, YARP, pocolibs, ROS.

- Driven by, and validated in, multi-robot cooperation scenarios

- Ported to Blender 2.5 and Python 3

- Robot Editor provides a tool that makes it easy to create new models of robot hands or to convert existing ones. COLLADA 1.5 has been chosen as the common content exchange format.

- KDL (Orocos' Kinematics & Dynamics Library) integration into Blender, with focus on improving Blender's IK (inverse kinematics), with constraint-aware and dynamic algorithms.

- R2D3 Robotic Development 3nvironment, with a simulation of SCORBOT ER-5 Robot.

- Fanuc robot LR Mate 200

Normalized interfaces to robotics hardware

A first step towards the above-mentioned big picture is the definition of normalized (harmonized, or standardized) interfaces and naming for all common objects used in robotics contexts: for example, the classes of actuators and sensors that we want to interface with, the set of control algorithms that are common in robotics, or the motion interfaces to the kinematic families of the most common robotics platforms (arms, hands, and mobile platforms). In principle, this is a task for the whole robotics community, and not just for the subset that is interested in Blender; however, very little concrete collaboration has taken place in this context until now, so maybe this smaller sub-community can make the difference.
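As a purely illustrative sketch of what such normalized interfaces could look like in Python, the code below defines abstract actuator and sensor classes and an enumeration of the four actuator control modes discussed in the next section. All names (`ControlMode`, `Actuator`, `Sensor`, `PositionOnlyJoint`, `JointEncoder`) are hypothetical and do not come from any existing standard or Blender API.

```python
"""Hypothetical sketch of normalized actuator/sensor interfaces."""
import time
from abc import ABC, abstractmethod
from enum import Enum


class ControlMode(Enum):
    POSITION = "position"
    VELOCITY = "velocity"
    ACCELERATION = "acceleration"
    TORQUE = "torque"


class Actuator(ABC):
    """A single-joint actuator that accepts setpoints in one or more control modes."""

    @abstractmethod
    def supported_modes(self) -> frozenset:
        ...

    @abstractmethod
    def set_setpoint(self, mode: ControlMode, value: float) -> None:
        ...


class Sensor(ABC):
    """Anything that returns a timestamped measurement."""

    @abstractmethod
    def read(self) -> tuple:
        """Return (timestamp_in_seconds, value)."""


class PositionOnlyJoint(Actuator):
    """Example backend that, like most animation tools, works only at position level."""

    def __init__(self) -> None:
        self.position = 0.0

    def supported_modes(self) -> frozenset:
        return frozenset({ControlMode.POSITION})

    def set_setpoint(self, mode: ControlMode, value: float) -> None:
        if mode not in self.supported_modes():
            raise ValueError(f"{mode.value} control is not supported by this joint")
        self.position = value


class JointEncoder(Sensor):
    """Proprioceptive sensor: the complement of the actuator above."""

    def __init__(self, joint: PositionOnlyJoint) -> None:
        self.joint = joint

    def read(self) -> tuple:
        return (time.monotonic(), self.joint.position)
```

Client code written against `Actuator` and `Sensor` could then be pointed at a Blender-backed implementation or at real hardware without changes, which is the point of normalizing the interfaces.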

What does Blender already offer?

Here is an overview of the generic robotics-related components that the Blender Game Engine already provides, namely actuators, sensors and controllers (the available documentation is outdated and covers version 2.49 only):

- actuators in robotics have basically four control modes: position, velocity, acceleration, or torque. (Current control is also used in practice, but only when one wants to take the dynamics of the electric motor into account!) The torque control mode requires a dynamic model of the robot that the actuator acts on, in order to calculate the position update of the robot under the applied torque. Position is currently the simplest mode to interface in Blender, since computer animation packages typically work only at the position level. Velocity and acceleration require some form of state information to be integrated into the Game Engine. (Or does that exist already?) In any case, (most) actuator interfaces are rather simple, at least at the setpoint specification level; for non-position interfaces, Blender must be extended with integrators that transform the instantaneous velocity, acceleration or torque inputs into (a time series of) position updates.

- sensors in robotics can be (roughly) of two different kinds:

- proprioceptive, i.e., 'sensing the internal state' of the robot; this almost always boils down to the complement of the 'actuators' above, that is, sensing the position, velocity, acceleration and/or torque at the robot's joints.

- exteroceptive, i.e., 'sensing the interaction of the robot with its environment'. This category has many sub-categories:

- distance sensors (laser range scanners, for example)

- force sensors (at the "wrist" of a robot arm, the "foot" of a humanoid robot, or the "fingertip" of a robot hand)

- cameras, which take images of the scene around the robot.

- Again, defining an interface for each of these categories is not too difficult.

- controllers: the set of robot control algorithms is rich, but still finite and enumerable, so some initial standardization should be possible, for example: 1-DOF PID control; simple trajectory planning to reach goals; full dynamic control of humanoid robot armatures; ...
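The integrators mentioned under actuators, together with the simplest controller in the list above (1-DOF PID control), can be sketched in plain Python. This is a minimal sketch under stated assumptions: a single revolute joint with constant inertia, no friction or gravity, a fixed time step, and semi-implicit Euler integration. `IntegratedJoint` and `PID` are hypothetical names, not part of the Blender Game Engine, and in torque mode the "dynamic model" is only tau = I * qdd.

```python
"""Turn velocity/acceleration/torque setpoints into position updates,
and close the loop with a 1-DOF PID controller (illustrative sketch)."""


class IntegratedJoint:
    def __init__(self, inertia: float = 1.0) -> None:
        self.inertia = inertia  # kg*m^2, only used in torque mode (assumed constant)
        self.q = 0.0   # joint position [rad]
        self.qd = 0.0  # joint velocity [rad/s]

    def apply(self, mode: str, value: float, dt: float) -> float:
        """Apply one setpoint for dt seconds and return the new position."""
        if mode == "position":
            self.qd = (value - self.q) / dt
            self.q = value
            return self.q
        if mode == "velocity":
            self.qd = value
        elif mode == "acceleration":
            self.qd += value * dt
        elif mode == "torque":
            # Simplest possible dynamic model: tau = I * qdd.
            self.qd += (value / self.inertia) * dt
        else:
            raise ValueError(f"unknown control mode: {mode}")
        # Semi-implicit Euler: velocity was updated first, position uses the new velocity.
        self.q += self.qd * dt
        return self.q


class PID:
    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative term on the very first call (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    joint = IntegratedJoint(inertia=0.5)
    pid = PID(kp=20.0, ki=1.0, kd=5.0)  # gains chosen by hand for this toy joint
    target, dt = 1.0, 0.001
    for _ in range(5000):  # 5 s of simulated time
        torque = pid.update(target - joint.q, dt)
        joint.apply("torque", torque, dt)
    print(f"final position: {joint.q:.3f} rad")
```

Semi-implicit Euler updates the velocity before the position, which tends to behave better than explicit Euler for oscillatory control loops like this one; a real robot model would replace the single inertia with the full rigid-body dynamics of the armature.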

The Inverse Kinematics (IK) in Blender provides a selectively damped least-squares algorithm, which is applicable to highly redundant tree-structured kinematic chains, as found in humanoid robots and mobile manipulators.
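Selectively damped least squares is a refinement of plain damped least squares (DLS), which updates the joint angles with dtheta = J^T (J J^T + lambda^2 I)^-1 e, where J is the Jacobian, e the end-effector position error and lambda the damping factor. The sketch below implements plain DLS, not Blender's own solver, for a redundant three-link planar arm in pure Python; the link lengths, damping value, start configuration and target are arbitrary assumptions.

```python
"""Plain damped least-squares IK for a redundant planar 3-link arm (illustrative)."""
import math


def forward_kinematics(thetas, lengths):
    """Return the (x, y) position of the end effector."""
    x = y = angle = 0.0
    for theta, length in zip(thetas, lengths):
        angle += theta
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def jacobian(thetas, lengths):
    """Rows of the 2 x n Jacobian: d(x)/d(theta_i) and d(y)/d(theta_i)."""
    n = len(thetas)
    cumulative, angle = [], 0.0
    for theta in thetas:
        angle += theta
        cumulative.append(angle)
    jx, jy = [0.0] * n, [0.0] * n
    for i in range(n):
        # Joint i moves every link from i to the end of the chain.
        for k in range(i, n):
            jx[i] -= lengths[k] * math.sin(cumulative[k])
            jy[i] += lengths[k] * math.cos(cumulative[k])
    return jx, jy


def dls_step(thetas, lengths, target, damping=0.1):
    """One update: dtheta = J^T (J J^T + damping^2 I)^-1 e."""
    x, y = forward_kinematics(thetas, lengths)
    ex, ey = target[0] - x, target[1] - y
    jx, jy = jacobian(thetas, lengths)
    lam2 = damping ** 2
    # A = J J^T + lambda^2 I is 2x2 and positive definite, so it is always invertible.
    a = sum(v * v for v in jx) + lam2
    b = sum(u * v for u, v in zip(jx, jy))
    d = sum(v * v for v in jy) + lam2
    det = a * d - b * b
    wx = (d * ex - b * ey) / det
    wy = (-b * ex + a * ey) / det
    return [t + jxi * wx + jyi * wy for t, jxi, jyi in zip(thetas, jx, jy)]


def solve_ik(thetas, lengths, target, iterations=200, tol=1e-6):
    for _ in range(iterations):
        x, y = forward_kinematics(thetas, lengths)
        if math.hypot(target[0] - x, target[1] - y) < tol:
            break
        thetas = dls_step(thetas, lengths, target)
    return thetas


if __name__ == "__main__":
    links = [1.0, 1.0, 1.0]
    solution = solve_ik([0.3, 0.3, 0.3], links, (1.5, 1.0))
    print(solution, forward_kinematics(solution, links))
```

The damping term keeps the step bounded near singular configurations (e.g. a fully stretched arm) at the cost of slower convergence; SDLS improves on this by damping each singular direction of J separately.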

Via a Python script, a controller in the Game Engine can communicate with external programs (e.g., via TCP/IP sockets or any other middleware), which is an essential feature for the seamless integration of Blender as a visualisation and/or simulation component in a more complex, distributed robotics system.
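A Game Engine controller script runs once per logic tick, so it should never block waiting for the network. The sketch below shows only the communication side, using Python's standard `socket` and `json` modules. It assumes a hypothetical protocol of one JSON object per line, which is not a Blender or middleware standard; `LineChannel` is a hypothetical name, the `bge`-specific glue (attaching the channel to a controller and applying received values to objects) is left out, and `socket.socketpair()` stands in for a real connection to an external program.

```python
"""Non-blocking, newline-delimited JSON messaging over a socket (illustrative)."""
import json
import socket


class LineChannel:
    def __init__(self, sock: socket.socket) -> None:
        self.sock = sock
        self.sock.setblocking(False)
        self._buffer = b""

    def send(self, message: dict) -> None:
        # Fine for small messages; on a non-blocking socket, sendall can raise
        # BlockingIOError if the operating system's send buffer is full.
        self.sock.sendall(json.dumps(message).encode("utf-8") + b"\n")

    def poll(self) -> list:
        """Return all complete messages received so far, without blocking."""
        while True:
            try:
                chunk = self.sock.recv(4096)
            except BlockingIOError:
                break  # nothing more to read during this tick
            if not chunk:
                break  # the peer closed the connection
            self._buffer += chunk
        messages = []
        while b"\n" in self._buffer:
            line, self._buffer = self._buffer.split(b"\n", 1)
            if line:
                messages.append(json.loads(line.decode("utf-8")))
        return messages


if __name__ == "__main__":
    blender_side, robot_side = socket.socketpair()
    game = LineChannel(blender_side)
    robot = LineChannel(robot_side)
    robot.send({"joint": "shoulder", "position": 0.42})
    print(game.poll())  # would be called once per logic tick in the Game Engine
```

Buffering partial lines between ticks matters because TCP delivers a byte stream, not messages: one `recv` may return half a message or several messages at once.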

The 2.5x development series dramatically improved Blender's configurability, not just by improving the Python interface to all of Blender's internals, but also by providing opportunities to build customized interfaces for various robotics applications and development workflows. The customization can take multiple complementary forms:

- configuration of windows, layout, properties, keyboard shortcuts, etc.

- adding new panels or GUI elements.

Expected/Wished features

Please mention your lab/contact after features!

- Controllable by external middleware (like ROS, YARP, VRPN, Purple, ORCA, CORBA, ICE, ...) (KULeuven, LAAS)

- On-line visualisation of robot experiments: when connecting a running robotics demo to a corresponding simulated version in Blender, the latter could graphically display a selection of all available measurement values that the demo programmers want to monitor. (KULeuven)

- Improved support for realistic inverse and forward dynamics. (KULeuven)

- Easier user interface to build robot systems, and to program the motions of these systems, including motions that are based on current sensor measurements. (KULeuven)

- Improved simulation of robotics sensors.

- In conjunction with the point above, imprecise sensor readings and actuator responses: the real world does not provide 26 digits of precision in its actions. Simulation becomes more realistic, and the transition to an actual robotics platform smoother, if the inputs and outputs have a small bit of noise (it also forces the developer to pay attention when designing control systems! ;) (douglas-dot-thom-at-gmail-dot-com)

- Good, open, durable file formats for robots. Since version 1.5 of COLLADA introduced kinematics, it may become a good candidate.

- Repository with reusable components, i.e., models of robots, sensors, actuators,... (KULeuven, LAAS)
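For the wished-for imprecise sensor readings, a simulated sensor could pass its ideal value through a simple noise model. The sketch below assumes a constant bias, zero-mean Gaussian noise, clipping to the measurement range and quantization to the sensor resolution; this is only one possible model, `NoisySensor` is a hypothetical name, and the example parameters (roughly those of a laser range beam) are illustrative, not taken from any datasheet.

```python
"""Adding imperfection to ideal simulated sensor readings (illustrative model)."""
import random


class NoisySensor:
    def __init__(self, stddev, resolution, bias=0.0,
                 min_value=None, max_value=None, seed=None):
        self.stddev = stddev          # standard deviation of the Gaussian noise
        self.resolution = resolution  # smallest step the sensor can report
        self.bias = bias              # constant offset, e.g. from miscalibration
        self.min_value = min_value
        self.max_value = max_value
        self._rng = random.Random(seed)  # seeded for reproducible experiments

    def measure(self, true_value: float) -> float:
        value = true_value + self.bias + self._rng.gauss(0.0, self.stddev)
        # Clip to the measurement range of the sensor.
        if self.min_value is not None:
            value = max(self.min_value, value)
        if self.max_value is not None:
            value = min(self.max_value, value)
        # Quantize to the sensor's resolution (e.g. encoder ticks, millimetre steps).
        return round(value / self.resolution) * self.resolution


if __name__ == "__main__":
    # Hypothetical laser range beam: 1 cm noise, 1 mm resolution, 2 cm to 30 m range.
    laser = NoisySensor(stddev=0.01, resolution=0.001,
                        min_value=0.02, max_value=30.0, seed=42)
    print([laser.measure(2.0) for _ in range(5)])
```

Seeding the random generator lets a noisy experiment be replayed exactly, which helps when comparing two controller designs against the same sensor disturbances.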

Internal Wiki Links

- Getting started tutorial

- Game Engine capabilities

- Creation and modification of UI panels

- View Control Panel

- Image grabber

- Integration with middleware, e.g., using Yarp for accessing cameras in Blender.

External links

- Blender command-port to remotely control Blender with Python commands.

- Forex Robot

- Blender and robotics videos @ Vimeo

- Camera Frame Simulator simulation.

Pages in the Robotics namespace

<dpl>

namespace=Robotics titlematch=%

</dpl>