User:Roger/PhysicalRenderer


Goal

The goal of this paper is to establish a pragmatic framework for implementing a physically based renderer that can use Blender 3D information to render a scene. We also want the rendering process to suit an OpenCL implementation, with the computation structured as a massively parallel pipeline. The render speed should be predictable, or the render process consistent, so that it can be benchmarked on the user's machine and the software can give a duration estimate. RAM use should also be predictable, to avoid the dreaded "Calloc returns nil" out-of-memory crash. The user should have a choice over quality, by varying parameters that affect output quality and render time. The solution must use the Blender Render API, possibly together with a materials library that supplies additional information about material characteristics.

Approach

The real world can be thought of as a nearly infinite collection of atoms that respond to stimulation by photons. A camera renders a finite set of pixels, whose HSV values correspond to the average light emitted from the scene toward the camera. Therefore, we can break the process down into:

- Shape the geometry of surfaces and volume characteristics in the space

- Simulate the materials and textures used by objects in the space

- Break up the space into computable regions

- For each region, simulate the lighting characteristics of the physical space

- Make a view that the camera sees

- Collect the light that would reach the focal plane

- Distort the image as it would be through a comparable lens

To shape the geometry of objects, we have Blender, of course. For materials and textures, however, we may need a different language from the one currently used, because real objects react to light very differently, as described below.

Real-world theory

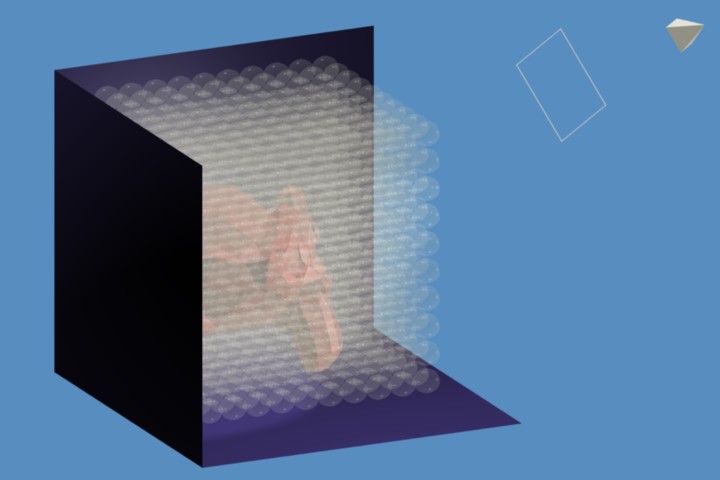

A real space can be thought of as an assembly of atoms, represented as little spheres within a computational domain (bounding box). Photons strike an atom, and the atom emits a photon with different characteristics. Since we cannot model individual atoms, let's define a computational sphere, or region in space, that contains atoms of some nature. Photons enter each sphere from any or all directions. Within the sphere, those photons collide or interact with an object (an atomic assembly of molecules) to produce an output set of photons, each radiating outward. I will call each spherical region an Atomic Computation Unit (ACU). The transfer function F produces an output set O from a given input set of photon stimulation {Pi}, the lamp sources {L}, and any emission characteristics of the material M:

O = F( {Pi}, {L}, M )

Typical kinds of functions that can be combined include:

- clamping style, which emits a comparable level of a specific color no matter what frequency of input it gets;

- conversion style, which converts one frequency (such as infrared) to some parametric value (temperature);

- blocking style, which blocks light and puts out black for the "backside" of the material;

- scattering style, which disperses a photon into a broader set of spectra across the surface and thus scatters light in many directions.
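A minimal sketch of how the styles above might combine into O = F({Pi}, {L}, M). It assumes a spectrum is a list of per-band energies, which is a hypothetical representation. Each style is modelled as a function from spectrum to spectrum, and direction is ignored. The names `clamping`, `blocking`, `scattering` and `transfer` are illustrative only. The conversion style is left out because it produces a parameter such as temperature rather than a spectrum.

```python
from typing import Callable, List

Spectrum = List[float]  # energy per frequency band (hypothetical representation)
Style = Callable[[Spectrum], Spectrum]

def clamping(color: Spectrum) -> Style:
    """Re-emit the total input energy in the shape of one fixed colour."""
    def f(s: Spectrum) -> Spectrum:
        total = sum(s)
        norm = sum(color) or 1.0
        return [total * c / norm for c in color]
    return f

def blocking(s: Spectrum) -> Spectrum:
    """Block all light: the backside of an opaque material is black."""
    return [0.0 for _ in s]

def scattering(spread: float) -> Style:
    """Move a fraction `spread` of the energy evenly into all bands (energy is conserved)."""
    def f(s: Spectrum) -> Spectrum:
        pool = spread * sum(s) / len(s)
        return [(1.0 - spread) * e + pool for e in s]
    return f

def transfer(inputs: List[Spectrum], lamps: List[Spectrum],
             style: Style, emission: Spectrum) -> Spectrum:
    """O = F({Pi}, {L}, M): sum the inputs, apply the material style, add lamps and emission."""
    n = len(emission)
    incoming = [sum(p[i] for p in inputs) for i in range(n)]
    out = style(incoming)
    for lamp in lamps:
        out = [o + l for o, l in zip(out, lamp)]
    return [o + e for o, e in zip(out, emission)]
```

Because each style only maps a spectrum to a spectrum, styles can be chained by composing the functions before passing the result to `transfer`.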

- Assumption: there is some reasonable limit to the space to be computed, which bounds the region of included atomic units (light units). Outside this bound, the space is surrounded by a global illumination shape, either a sphere or a box. Between the GI surface and the bounding box is empty space through which light is transmitted perfectly, without attenuation.

- Assumption: rather than dealing with individual photons, we can describe a lump or set of them as an energy unit and spectra.

Therefore, we need to know the temperature of the material, so we can calculate its new temperature and any heat-related emission. It also implies some sort of rate of energy input, an ambient temperature and some sort of dispersion and/or conducting factor.

- Assumption: although atoms are distributed neither uniformly nor randomly within a bounding box, we can assume that the ACUs are arranged in a grid, for simplicity and to ensure a finite compute space.

Each ACU emits a set of photons in all directions, along all three axes. Each photon has a hue distribution, or spectrum, of energy frequencies. While we are mostly concerned with the visible spectrum, we need to consider all spectra, because some materials can convert frequencies (heating iron with infrared radiation will eventually make it glow with heat). In addition to its spectrum, each photon has an intensity/energy level (a single value, or distributed across the frequency range?).

- Just as a graphic equalizer divides the spectrum into bands, we can use a stepped function to describe a range of frequencies.
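The stepped-function idea can be sketched by binning a continuous spectral power function into equal-width bands. The bands may extend past the visible range, so infrared is kept. The function names, the nanometre units and the midpoint-rule integration are assumptions for illustration. `energy_unit` splits the result into a total energy and a normalised spectrum, matching the "energy unit and spectra" assumption.

```python
from typing import Callable, List, Tuple

def to_bands(power: Callable[[float], float], lo_nm: float, hi_nm: float,
             n_bands: int, samples_per_band: int = 16) -> List[float]:
    """Approximate a continuous spectrum by a stepped function: one energy per band."""
    width = (hi_nm - lo_nm) / n_bands
    step = width / samples_per_band
    bands = []
    for b in range(n_bands):
        start = lo_nm + b * width
        # Midpoint rule: sample the centre of each sub-interval and sum power * step.
        bands.append(sum(power(start + (k + 0.5) * step) * step
                         for k in range(samples_per_band)))
    return bands

def energy_unit(bands: List[float]) -> Tuple[float, List[float]]:
    """Split banded energies into (total energy, normalised spectral shape)."""
    total = sum(bands)
    shape = [b / total for b in bands] if total > 0 else [0.0] * len(bands)
    return total, shape
```

Raising `n_bands` trades memory and compute time for spectral accuracy, which is one possible user-facing quality parameter.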

Lamps within an ACU

The current Blender Omni lamp, set to energy level 1 and color white, can be thought of as a white orb that emits all frequencies at equal magnitude in all directions. Thus, an Omni lamp adds a uniform level of energy and spectra to the output.
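A small sketch of an Omni lamp inside an ACU. It assumes the lamp's energy is split evenly over the ACU's sampled output directions, so the total emitted equals energy times colour. That normalisation is an assumption, not Blender's definition of lamp energy. `omni_lamp` and `add_emission` are hypothetical helper names.

```python
from typing import List

Spectrum = List[float]

def omni_lamp(energy: float, color: Spectrum, n_directions: int) -> List[Spectrum]:
    """Uniform emission: every output direction receives the same spectrum.

    Assumption: energy is divided evenly among the sampled directions.
    """
    per_dir = energy / n_directions
    return [[per_dir * c for c in color] for _ in range(n_directions)]

def add_emission(outputs: List[Spectrum], emission: List[Spectrum]) -> List[Spectrum]:
    """Add an emitter's per-direction spectra to an ACU's outgoing spectra."""
    return [[o + e for o, e in zip(out, em)] for out, em in zip(outputs, emission)]
```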

ACU computation overview

Each ACU receives a set of photons from its neighbor in the corresponding direction. The ACU is a function that produces its output based on the net interaction of the materials, surfaces, and lamps within that region. The resulting output at f(x,y,z) equals the energy entering the ACU, reduced by the damping of the material within the ACU, plus the energy emitted by the material inside the ACU, plus the energy/spectra emitted by objects (such as lamps) inside the ACU.
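The neighbour exchange can be sketched as one synchronous update over the grid. Each ACU reads what its neighbours sent toward it on the previous step. It scales that input by its own transmission (the damping) and adds its emission. Several simplifications are assumed for brevity: a single energy band, only the six face neighbours, straight-through transmission with no scattering, and nothing entering from outside the bounding box. `step`, `make_grid` and `OFFSETS` are hypothetical names.

```python
from itertools import product
from typing import Dict, List, Tuple

Cell = Tuple[int, int, int]
# Six face neighbours; index d means "energy travelling along OFFSETS[d]".
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def make_grid(n: int) -> Dict[Cell, List[float]]:
    """An n*n*n grid of ACUs, each with zero outgoing energy in every direction."""
    return {c: [0.0] * len(OFFSETS) for c in product(range(n), repeat=3)}

def step(out: Dict[Cell, List[float]], transmit: Dict[Cell, float],
         emit: Dict[Cell, float]) -> Dict[Cell, List[float]]:
    """One synchronous update: output = transmission * input + emission (split over directions)."""
    new = {}
    for cell in out:
        x, y, z = cell
        row = []
        for d, (dx, dy, dz) in enumerate(OFFSETS):
            src = (x - dx, y - dy, z - dz)   # neighbour that sends along d into this cell
            incoming = out[src][d] if src in out else 0.0
            row.append(transmit[cell] * incoming + emit[cell] / len(OFFSETS))
        new[cell] = row
    return new
```

Because `step` reads only the previous buffer and writes a new one, every ACU can be updated independently. That property is what a one-work-item-per-ACU OpenCL kernel would rely on.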

ACU size/resolution

If the size of each ACU is small enough, we get a fine-grained resolution of the actual light bouncing around inside the space. Just as Feynman observed, then, placing an observer (camera) anywhere within this space is a simple matter of projecting which photons would enter the eye of the observer. The computation of "reality" does not change based on the camera angle.

Ideally, we want to choose an ACU small enough that the radiated output O becomes simple to calculate. Each ACU should contain only one lamp, one atmospheric (world) atom, or a piece of a single face.

ACU Emission Computation

Input Color (Frequency) modulation

If you think of the ACU as a sphere, then we can UV unwrap that sphere into a flat map, on which we can specify certain characteristics of the ACU perimeter. One such characteristic is emission. A shiny red wagon (paint) emits red near the normal, but all spectra when you view it from the side. Its spectral distortion/modulation map would therefore look like File:PhysicalRendererSolidSoftRed.jpg. If you multiply the input spectrum by this map, you get an output spectrum. The multiply operation effectively modulates some of the frequencies based on the face normal. Looking straight down on the face with white light input, you get red for that area; at an angle you get more white.
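The multiply step can be sketched as a band-by-band product of the input spectrum with a map looked up by viewing angle. Three bands and the blend weight w = 1 - cos(theta) are assumptions chosen only to reproduce the "red at the normal, white at grazing angles" behaviour. `modulation_map` and `modulate` are hypothetical names. A real map would be sampled from a texture such as the one referenced above.

```python
import math
from typing import List

Spectrum = List[float]
RED = [1.0, 0.0, 0.0]    # hypothetical 3-band map value near the normal
WHITE = [1.0, 1.0, 1.0]  # all spectra pass at grazing angles

def modulation_map(theta: float) -> Spectrum:
    """Per-band multiplier at viewing angle theta (radians) from the face normal.

    Assumption: blend weight w = 1 - cos(theta), 0 at the normal and 1 at 90 degrees.
    """
    w = 1.0 - math.cos(theta)
    return [(1.0 - w) * r + w * wh for r, wh in zip(RED, WHITE)]

def modulate(spectrum: Spectrum, theta: float) -> Spectrum:
    """Output spectrum = input spectrum multiplied band by band by the map."""
    return [s * m for s, m in zip(spectrum, modulation_map(theta))]
```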

Input Energy modulation

Light passes through a clean (vacuum) volumetric space without being diffracted, or spread out, to other areas of the output. However, air contains dust, and dust takes some of that light and scatters it. Using the same UV mapping representation, we can make a map that says, for a given input energy, how much of that energy is transmitted straight through versus how much is scattered. A vacuum would be a white dot on the opposite (bottom) side, where the photon exits. A water molecule would also shade some of the rest of the sphere.
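A sketch of the energy-modulation map, reduced to a single value `straight`: the map value at the exit point opposite the entry. The remainder is assumed to scatter evenly over the other perimeter samples. Uniform scattering is a simplification, since a real map could shade each point differently. The multiplier is kept between 0.0 and 1.0 so that no energy is created. `distribute` is a hypothetical name.

```python
from typing import List

def distribute(energy: float, n_points: int, exit_index: int, straight: float) -> List[float]:
    """Split incoming energy between the straight-through exit point and the other points.

    `straight` is 1.0 for a vacuum (a single white dot opposite the entry) and lower
    for dusty air or water. Assumption: the rest scatters evenly over the other points.
    """
    if not 0.0 <= straight <= 1.0:
        raise ValueError("transmission multiplier must be between 0.0 and 1.0")
    scattered = (1.0 - straight) * energy / (n_points - 1)
    out = [scattered] * n_points
    out[exit_index] = straight * energy
    return out
```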

Output Energy computation

Again this is normally a multiplier, between 1.0 and 0.0. We cannot use a value greater than one, because normal materials do not spontaneously create energy.

However, we also have light sources. Like a Chinese lantern, an omni lamp can be thought of as a glowing white globe, and its output values are the energy of the lamp.

Surfaces

Hard surfaces are not one atom thick. They are many atoms deep, so the surface of anything looks more like a carpet than sheet metal. This depth, and light passing through these multiple layers at different angles, provides many opportunities for realism. For example, raw cut wood, finish cut wood, 50-grit sanded wood, and 400-grit sanded wood have different surface depths, yet are essentially the same material. Suppose we could specify "Roughness" to mean the depth of the ACUs, and "Grain" to specify the depth variation of those hills. The renderer could then stack ACUs that deep, replicating them and varying their influence according to Grain. The Grain is, of course, a texture. Since mapping a texture to Nor already simulates depth within the surface, I would consider the two synonyms.
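One possible reading of Roughness and Grain is sketched below. It is an interpretation, not a settled definition. `roughness` is taken as the maximum stack depth in ACUs, and each Grain texel value g in [0, 1] scales that depth, so peaks get the full stack and valleys a shallower one. `stack_depths` is a hypothetical name.

```python
from typing import List

def stack_depths(roughness: int, grain: List[List[float]]) -> List[List[int]]:
    """Number of ACU layers to stack under each texel of a surface.

    Assumption: depth = round(roughness * g) for Grain value g in [0, 1],
    with at least one layer kept so the surface itself is never removed.
    """
    return [[max(1, round(roughness * g)) for g in row] for row in grain]
```

Sanded wood would then use a small `roughness`, and raw cut wood a larger one with a high-contrast Grain texture.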

Subsurface Scattering

Skin is made up of many layers, each with different transmission characteristics. Normal materials have zero transmission in the anti-normal direction: they do not pass light down through themselves, and thus have a backside. However, the top layer of skin (epidermis) is not perfectly opaque, and thus has a transmission UV map something like the one shown in Figure:PhysicalRender-SkinTop.jpg. The next layer is a layer of fat, which is a bright yellow/white. The next layer is red (and blue) capillary blood, which is also not opaque.

Technically, the modeller should model all three layers separately and assign the corresponding material to each. The renderer can then compute the true light spectra of photons as they travel through the backside of the ear (for example), and thus add to the light of the surface that is viewed by the camera. If a small enough ACU is chosen, we can even get transmissive SSS that varies with mesh depth.

Therefore, the renderer should not cull ACUs that lie within a bounded volume, but should instead assign them the material of the bounding mesh (blood, in this case). For truly solid materials, you will need to paint the bottom of the UV map black, so that no light passes to the ACUs behind or below them.
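Light passing down through stacked skin layers can be sketched as a per-band product of layer transmissions. It assumes a layer n ACUs thick transmits t**n per band, which is how thicker mesh regions end up passing less light. A fully solid material is simply a layer with all-zero transmission, the "black bottom" above. `transmit_through` is a hypothetical name. Any per-layer numbers a caller supplies, such as epidermis, fat and blood values, would be illustrative, not measured.

```python
from typing import List, Tuple

Spectrum = List[float]

def transmit_through(light: Spectrum, layers: List[Tuple[Spectrum, int]]) -> Spectrum:
    """Pass light through stacked layers, each given as (per-band transmission of one ACU, thickness in ACUs).

    Assumption: a layer n ACUs thick transmits t ** n in each band.
    """
    out = list(light)
    for transmission, thickness in layers:
        out = [o * t ** thickness for o, t in zip(out, transmission)]
    return out
```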

ACU Shape

While it is nice to think of an ACU as a sphere, with energy/spectra measurements made at some equal distance from a center, I think it would be easiest to start by arranging ACUs in a grid-like array, so that each ACU touches 26 neighbors (a cube surrounded by other cubes). Since we don't have any "dark matter", an ACU can only get and give energy from one or more of its 26 neighbors.
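The 26-neighbour arrangement is easy to enumerate: every offset in {-1, 0, 1}^3 except the cube itself. That gives 6 face, 12 edge and 8 corner neighbours. Cells on the boundary of the bounding box have fewer neighbours inside the grid. The function names are hypothetical.

```python
from itertools import product
from typing import List, Tuple

Cell = Tuple[int, int, int]

def neighbour_offsets() -> List[Cell]:
    """All 26 offsets from a cube to the cubes touching it (faces, edges, corners)."""
    return [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]

def neighbours(cell: Cell, size: int) -> List[Cell]:
    """Neighbours of `cell` that lie inside a size*size*size grid."""
    x, y, z = cell
    result = []
    for dx, dy, dz in neighbour_offsets():
        nx, ny, nz = x + dx, y + dy, z + dz
        if 0 <= nx < size and 0 <= ny < size and 0 <= nz < size:
            result.append((nx, ny, nz))
    return result
```

Face neighbours are one ACU width away, edge neighbours sqrt(2) and corner neighbours sqrt(3). That matters once distance-based falloff is applied between ACUs.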

If we use a sphere, we can unwrap it and use the UV texture to describe the spectral reflection characteristics of the material relative to the face normal. Normally, the diffuse color is reflected straight up, or normal to the face. Then, as the viewing angle of incidence increases, more and more spectra are reflected, so the color gets whiter as the light glances off the surface. The hack for this in traditional CG rendering has been to blend in a circle of specular color, but with a spectrum dispersion defined for standard types of materials, this won't be necessary.

We therefore have a quality choice of how many points to compute/sample on the ACU perimeter: a minimum of 6 (planar), 26 (cubic), or as many as the UV texture resolution allows. One could even argue that we could go higher by averaging adjacent pixels. We therefore have a nearly infinite range of ACU sample counts; however, 32x32, or 1024 points, should be sufficient.
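To turn a UV map of the ACU perimeter into sample directions, each texel centre can be mapped to a unit vector. The sketch assumes an equirectangular (latitude-longitude) unwrap. In that unwrap, u is the azimuth and v is the polar angle measured from the face normal (+z), so a 32x32 map yields the 1024 directions mentioned above. `uv_directions` is a hypothetical name. This unwrap packs samples more densely near the poles, so an energy sum over directions would need each sample weighted by sin(theta).

```python
import math
from typing import List, Tuple

def uv_directions(res: int) -> List[Tuple[float, float, float]]:
    """Unit direction for the centre of each texel of a res x res UV map of the ACU sphere.

    Assumption: equirectangular unwrap, polar angle measured from the face normal (+z).
    """
    dirs = []
    for j in range(res):
        theta = math.pi * (j + 0.5) / res          # 0 at the normal, pi on the backside
        for i in range(res):
            phi = 2.0 * math.pi * (i + 0.5) / res  # azimuth around the normal
            dirs.append((math.sin(theta) * math.cos(phi),
                         math.sin(theta) * math.sin(phi),
                         math.cos(theta)))
    return dirs
```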

ACU Examples

Reflective: a perfectly reflective ACU maps any input from a neighbor out to every output angle. So, if the mesh face normal within that ACU points straight up, the output O for the top would equal the input I from all the other points. The bottom would map input to black, as on the standard/typical backside of any mirror. However, there are two-way mirrors, so the backside really should be somewhat transmissive. This is a simplified SSS issue, so perhaps two different materials should be used or available. Alternatively, you could model a two-way mirror as one mirror plus another plane, facing the other way, with an almost inverted transmission map.
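For a single incoming direction, one way to express the mirror mapping is the standard law of reflection, r = d - 2(d·n)n. It is swapped in here as an assumption about how front-side inputs map to outputs. Light arriving from behind is blocked, giving the black backside, unless a `back_transmission` fraction passes straight through as a crude two-way mirror. `mirror` and `dot` are hypothetical helpers, and `normal` is assumed to be a unit vector.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def mirror(direction: Vec, normal: Vec, energy: float,
           back_transmission: float = 0.0) -> List[Tuple[Vec, float]]:
    """Outgoing (direction, energy) pairs for light travelling along `direction`.

    Front side (travelling against the unit normal): reflect with r = d - 2 (d.n) n.
    Backside: black, unless back_transmission > 0 (a crude two-way mirror).
    """
    k = dot(direction, normal)
    if k < 0.0:
        r = tuple(d - 2.0 * k * n for d, n in zip(direction, normal))
        return [(r, energy)]
    if back_transmission > 0.0:
        return [(direction, back_transmission * energy)]
    return []
```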

Light Sources

The power of light radiating outward decreases as the inverse square of the distance, less any blocking/absorption by anything in between. So, we need some sort of transmission index for each ACU that says how much the light decreases as it goes from one input spot to all the output spots. We know the size of the ACU, so we can compute the distance. If we multiply that by a white-black map, we can specify how much of the input energy is lost by the time the photon reaches the output spot.
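Both effects can be combined in a short sketch. It converts grid indices to a physical distance using the ACU size, applies inverse-square falloff relative to a reference distance, and multiplies by the transmission value (0.0 = opaque/black, 1.0 = vacuum/white) of every ACU crossed. Using a reference distance rather than an absolute source power is an assumption. `distance_between` and `received_energy` are hypothetical names. `math.prod` and `math.dist` require Python 3.8 or later.

```python
import math
from typing import Iterable, Tuple

Cell = Tuple[int, int, int]

def distance_between(a: Cell, b: Cell, acu_size: float) -> float:
    """Physical distance between two ACU centres on a grid of cubes of side `acu_size`."""
    return acu_size * math.dist(a, b)

def received_energy(emitted: float, distance: float, transmissions: Iterable[float],
                    ref_distance: float = 1.0) -> float:
    """Energy arriving at `distance` from a point source.

    Inverse-square falloff relative to `ref_distance`, times the product of the
    transmission multipliers of every ACU crossed on the way.
    """
    falloff = (ref_distance / distance) ** 2
    return emitted * falloff * math.prod(transmissions)
```

For example, twice the reference distance through clear air gives a quarter of the energy, and a single opaque ACU on the path gives none.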