Dev:Ref/Requests/Render


Latest revision as of 02:45, 29 June 2018 (Friday)

Note that implemented and obsolete requests have been moved here.

Contents

- 1 Enhanced Edge Detection

- 2 User-defined bucket rendering order

- 3 Enhanced Edge Rendering

- 4 Controlling Shadows:

- 5 Timecode, frame number, blend name, scene name, etc, stamps over render:

- 6 Auto-Border (speed-up for anim tests):

- 7 Renderdaemon Integration

- 8 Smarter caching

- 9 Select Path to Yafray in Blender

- 10 Halo Clipping Option

- 11 Halo and ztransp fix/enhancements

- 12 Render the 3D View and Previewrendering

- 13 Static Particle Textures & Skinning

- 14 Realistic Sunlight

- 15 Smart Format Options

- 16 Alpha In Shadow Pass

- 17 Ray Tracing Option Defined Per Render Layer

- 18 The ability to have the client integrate with the Big Ugly Rendering Project

- 19 The ability to have Freestyle set (contour)line thickness according to local grey values(light->dark= thin->thick)

Enhanced Edge Detection

Edges are not always rendered when Freestyle lines are selected in 'Visibility' mode, on the camera side.

Currently, if two planes with an identical material reference (object) coincide, edges that run through both uppermost surfaces are not always drawn: sometimes lines are not detected, or are pruned from the render.

The same problem can occur when two planes meet only at an edge and one plane tilts, or "folds", about an axis along that edge during a keyframed animation. A marked Freestyle edge may then have varying portions of its length rendered.

These errors seem to be produced by Blender itself, before the data is submitted to any supported render engine.

User-defined bucket rendering order

The bucket renderer currently orders rendering in a fairly sensible way: it seems to be based on the amount of geometry in each bucket, since the buckets with the most geometry are often the most complex areas and therefore usually the focal point of the render. However, sometimes it gets it wrong: either you have a very complex background, or you'd just like a specific part rendered first (without doing a border render and then re-rendering).

I propose implementing a way for the user to choose the order. I imagine that clicking on an unrendered bucket during rendering would make that bucket the next one rendered, once the current one has completed.

--Indigomonkey 19 March 2007
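A minimal sketch of the proposed ordering, assuming buckets are ranked by a per-bucket geometry count (a guess at the current heuristic, not confirmed Blender behaviour) and that the most recent click wins. `Bucket`, `BucketQueue`, `request` and `next_bucket` are hypothetical names for illustration only:

```python
from dataclasses import dataclass

@dataclass
class Bucket:
    index: int
    face_count: int  # amount of geometry in the bucket (assumed ordering key)

class BucketQueue:
    """Hypothetical render queue: geometry-heavy buckets first, but the bucket
    the user clicked most recently is rendered as soon as the current one ends."""

    def __init__(self, buckets):
        # Default order: most geometry first (a guess at the current heuristic).
        self._pending = sorted(buckets, key=lambda b: b.face_count, reverse=True)
        self._requested = []  # bucket indices clicked by the user

    def request(self, index):
        """Called when the user clicks an unrendered bucket."""
        if index in self._requested:
            self._requested.remove(index)
        self._requested.append(index)

    def next_bucket(self):
        """Return the bucket to render next, or None when everything is done."""
        while self._requested:
            wanted = self._requested.pop()  # most recent click wins
            for i, bucket in enumerate(self._pending):
                if bucket.index == wanted:
                    return self._pending.pop(i)
            # The clicked bucket was already rendered; try the next request.
        return self._pending.pop(0) if self._pending else None
```

Clicks on buckets that have already finished are simply ignored, so the queue falls back to the default geometry-based order.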

Enhanced Edge Rendering

I (TedSchundler) can, and probably will, work on implementing this. Hopefully others will refine the proposal so that when I (or whoever else wants to get involved) start coding, there will be a clear plan for what should be done to make people happy.

Right now users don't have a lot of control over edge rendering in Blender. Basically they can control the color and (for lack of a better word) the aggressiveness. In the current implementation, what gets rendered is determined by running an edge detection function (like the GIMP's edge detect filters) on the rendered image's z-buffer (depth buffer). This could be expanded, but it is fairly limited, so I have another idea in mind: actually rendering the edges (polygon edges). Drawing would work by first figuring out which edges to draw, then drawing them the way a paint program would (connecting edge loops where possible to make a uniform stroke).
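For comparison with the polygon-based idea, the z-buffer approach described above can be sketched roughly as follows. This is a minimal Python illustration, not Blender's actual filter: it assumes a plain per-pixel depth-difference test, with a hypothetical `threshold` standing in for the "aggressiveness" setting.

```python
def zbuffer_edges(z, threshold):
    """Mark pixels whose depth jumps by more than `threshold` relative to the
    right or lower neighbour. `z` is a list of rows of depth values."""
    h, w = len(z), len(z[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny < h and nx < w and abs(z[y][x] - z[ny][nx]) > threshold:
                    # Flag both pixels on either side of the discontinuity.
                    edges[y][x] = True
                    edges[ny][nx] = True
    return edges
```

Because a filter like this only sees depth jumps, a line lying on a flat surface (such as the pocket outline mentioned in the list below) can never come out of it, which is one argument for working with polygon edges instead.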

Edges would be drawn:

- Silhouette edges: at the outline of an object there will always be an edge of a triangle or quad that is shared by two faces, one facing towards the camera and the other facing away. This test can be used to find the edges around an object.

- Edges used by exactly one face

- Edges where the angle between the two faces exceeds a threshold (e.g. to draw the sharp edges of a box)

- Other edges (e.g. the outline of a character's pocket) can be forced to be drawn via a property of the edges (in addition to the current crease and seam properties)
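The selection rules above can be sketched as follows. This is a minimal Python illustration assuming a mesh given as vertex tuples plus faces listed with consistent winding, and a perspective camera at a point; `edges_to_draw` and the `forced` set (standing in for the proposed "draw rendered edge" property) are hypothetical, and non-manifold edges with more than two faces are simply skipped.

```python
import math

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def face_normal(verts, face):
    # Normal from the first three vertices (enough for planar tris/quads).
    a, b, c = (verts[i] for i in face[:3])
    return cross(sub(b, a), sub(c, a))

def edges_to_draw(verts, faces, camera, crease_deg, forced=frozenset()):
    """Return {edge: reason} for edges picked by the boundary, silhouette,
    crease-angle and forced-edge rules. Edges are sorted vertex-index pairs."""
    edge_faces = {}
    for fi, face in enumerate(faces):
        for i in range(len(face)):
            e = tuple(sorted((face[i], face[(i + 1) % len(face)])))
            edge_faces.setdefault(e, []).append(fi)

    normals = [face_normal(verts, f) for f in faces]

    def front_facing(fi):
        # A face points toward the camera if its normal opposes the view ray.
        return dot(normals[fi], sub(verts[faces[fi][0]], camera)) < 0

    result = {}
    for e, fs in edge_faces.items():
        if e in forced:
            result[e] = "forced"
        elif len(fs) == 1:
            result[e] = "boundary"
        elif len(fs) == 2:
            f0, f1 = fs
            if front_facing(f0) != front_facing(f1):
                result[e] = "silhouette"
            else:
                n0, n1 = normals[f0], normals[f1]
                cos_a = dot(n0, n1) / math.sqrt(dot(n0, n0) * dot(n1, n1))
                angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
                if angle > crease_deg:
                    result[e] = "crease"
    return result
```

Joining the selected edges into loops and stroking them with a brush would be a separate step on top of this.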

Edge properties:

- The thickness / weight of the edges can be defined by the user per object, and separately for the automatic outermost outlines (which are typically thicker) and forced lines.

- Whether the thickness / weight is constant or a function of the distance to the camera.

- Brush/style to use (size, etc.)

Brush Properties:

- Maybe there should be a brush interface so first a simple brush could be developed, then more advanced ones added later.

- Color / texture (texture would be an interesting option to make cool brush effects rather simply.)

- Autosmooth (make minor adjustments within the thickness of the brush to smooth lines; may be complicated to code)

- Drawstyle

  - Straight

  - Dashed

  - Dotted

- Wiggle (for a hand-drawn effect, have the line wiggle a bit rather than sit exactly where it should; maybe this could be combined with redrawing the edge multiple times based on the weight for a sketchy look)

Other requirements:

- Brushes must be scaled automatically to handle non-square pixels (e.g. anamorphic widescreen or field rendering)

- GUI implementation ideas? (maybe, like the "Draw creases" option, there should be a "draw rendered edge" option)

Other random ideas: as I understand it, EmilioAguirre is planning a C version of his sflender SWF export. Perhaps this could share code with that project (since this effectively generates vector data, it could also be used for Flash or SVG output of a render).

-- TedSchundler - 29 Aug 2004

I agree that putting the UI option under edge properties would probably work. Drawing toon lines based on polygon edge angle would be a great improvement over the current method, at least in my experience with toon shading.

-- RyanFreckleton - 31 Aug 2004

I wrote a proposal about sketch and toon rendering with Blender; you can find it here: http://www.neeneenee.de/blender/features/#21

At the bottom of the article are interesting links to other sketch and toon renderers.

-- Blenderwiki.EckhardM.Jäger - 18 Oct 2004

Controlling Shadows:

It would be very useful to have more control over lights and the types of shadows they cast. The controls would be the hue of the shadows, the intensity of the shadows, the blurriness of the shadow's edge and, lastly, a blurriness that increases exponentially with distance from the shadow-casting object.

The last one needs explaining: the shadow would be sharp where it begins, at the casting object, and become blurrier the further it falls from that object. The falloff could be controlled by a curve.

--Xrenmilay 13 January 2006
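The distance-dependent blur requested above can be sketched as a lookup: normalise the distance from the caster, feed it through a user curve, and scale the result. This is a minimal Python sketch assuming a piecewise-linear curve as a stand-in for a curve widget; `eval_curve`, `shadow_blur_radius` and their parameters are hypothetical, and the hue and intensity controls are not covered.

```python
from bisect import bisect_right

def eval_curve(points, x):
    """Piecewise-linear curve through sorted (x, y) control points, clamped at the ends."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

def shadow_blur_radius(distance, max_distance, max_blur, curve):
    """Blur radius for a shadow point `distance` units from the caster:
    sharp at contact, blurrier further away, shaped by the user's curve."""
    return max_blur * eval_curve(curve, distance / max_distance)
```

A curve that stays low and then rises steeply, such as `[(0, 0), (0.5, 0.1), (1, 1)]`, approximates the exponential growth described above while leaving the shape up to the user.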

Timecode, frame number, blend name, scene name, etc, stamps over render:

I think the latest SVN, 245.14, has a whole Stamp panel that addresses this feature. Yeah! --Roger 16:47, 4 February 2008 (CET)

The ability to stamp additional info over the render in a suitable place is really great when dealing with large projects. You can do this with a video editor, but if you could do it directly from Blender, the chance of mistakes would be minimal. Of course you should be able to choose what to print, and where. -- Blenderwiki.malefico - 05 Sep 2004

Hmm... this may be a good idea for a sequence editor plugin (assuming sequence plugins know the current frame number; I haven't personally coded one). Additionally, boxes showing what will be cropped off on a TV display (like those shown in the 3D view when looking through the camera) would be nice. -- TedSchundler

It would be a good idea to store the info as data in the image file and have a sequence plugin render it at will. This way the renders can also be used without the stamped data. Targa (.tga) allows an extra 256 bytes for a comment. In the worst case the data can be stored in a .Bpib-like file, the .Bdip (.Blender data in picture), which the TGA data can point to. -- Blenderwiki.JoeriKassenaar#25Oct104 JK

Take a look at these patches: http://projects.blender.org/tracker/index.php?func=detail&aid=4884&group_id=9&atid=127 and http://projects.blender.org/tracker/index.php?func=detail&aid=5485&group_id=9&atid=127.

--BeBraw 20:49, 22 December 2006 (CET)
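As an illustration of the kind of text such a stamp would carry, here is a minimal sketch that turns a frame number into a non-drop-frame timecode and joins the fields the user chose to print. It assumes an integer frame rate and counts frames from 0 (subtract the start frame first); `frame_to_timecode`, `stamp_text` and the " | " separator are hypothetical, and drop-frame NTSC timecode is deliberately not handled.

```python
def frame_to_timecode(frame, fps):
    """Convert a 0-based frame offset to an HH:MM:SS:FF timecode string
    (non-drop-frame, integer fps)."""
    frames = frame % fps
    total_seconds = frame // fps
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"

def stamp_text(fields):
    """Join the user-selected fields, in the order given, into one stamp line."""
    return " | ".join(f"{name}: {value}" for name, value in fields.items())
```

For example, `stamp_text({"Scene": "Scene", "Frame": 12})` produces `Scene: Scene | Frame: 12`.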

Auto-Border (speed-up for anim tests):

It would be extremely useful to adjust the render border's size and position automatically, to speed up high-quality animation tests. Blender could render a wireframe image internally, compare it with the previous frame's to find where changes occur, and adjust the border to cover that area. Obviously this doesn't work with shadows, AO, radiosity, etc., but it could be very useful for testing materials on animations quickly.

-- Blenderwiki.caronte - 14 Sep 2004
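The comparison step described above can be sketched as finding the bounding box of pixels that differ between two quick wireframe renders and using it as the render border. This is a minimal Python illustration assuming both frames are same-sized pixel grids (lists of rows); `changed_bbox` and its `margin` padding (to catch anti-aliased fringes) are hypothetical, and, as noted above, it ignores effects such as shadows or AO that reach outside the changed geometry.

```python
def changed_bbox(prev, curr, margin=0):
    """Inclusive bounding box (x0, y0, x1, y1) of pixels that differ between two
    equally sized images, padded by `margin` and clamped; None if nothing changed."""
    h, w = len(curr), len(curr[0])
    xs, ys = [], []
    for y in range(h):
        for x in range(w):
            if prev[y][x] != curr[y][x]:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # identical frames: nothing needs re-rendering
    return (max(min(xs) - margin, 0), max(min(ys) - margin, 0),
            min(max(xs) + margin, w - 1), min(max(ys) + margin, h - 1))
```

Since the wireframe of the previous frame is part of the comparison, the box covers both where an object was and where it is now.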

Renderdaemon Integration

This request is a combination of changes that together would make an easy-to-use renderdaemon (network renderer) possible in Blender:

-- RoelSpruit - 23 Oct 2004

Smarter caching

Blender's renderer could be sped up enormously by caching and discarding data more efficiently. Ton and I had a chat about this earlier, but I thought I'd at least write it down here for others to see, and as a reminder.

Currently in Blender, as far as I'm aware, the entire scene is rebuilt for every rendered frame: every frame of an animation, and every time the render button is pressed. Of course, not everything in a scene changes between frames or renders, so this wastes a lot of processing time. Blender in its current state also uses very little memory: very few of my Blender scenes take up more than 20MB. On the other hand, large amounts of RAM are cheap and commonplace these days. Even this little PowerBook has 1GB of RAM, which is a lot to leave sitting empty when it could be used to speed up Blender.

Ideally, nothing in any part of Blender should be calculated unless it really needs to be. A large proportion of the rendering process is spent just setting up the scene. Reducing this set-up time would speed up animation rendering and repeated test renders (and could potentially lead to an IPR-like feature for Blender).

Here are some initial ideas of things that could possibly be cached between renders, I hope someone with more technical knowledge than I have can correct/expand upon these:

- Renderfaces -- Dependent on: object deformations (perhaps the DependancyGraph can help here?), added/removed objects, object transformations (maybe it would be possible to just transform the renderfaces?), objects with materials affecting displacement, ...

- Octree -- Dependent on: added/removed objects, object transformations, object deformations, ...

- Shadow buffers -- Dependent on: added/removed objects, object transformations, object deformations, ...

- Environment maps -- Dependent on: added/removed objects, object transformations, object deformations, changes in materials, (note: if changes to the scene are out of view of the environment map, then they probably shouldn't cause a recalculation either)

- The images themselves -- If a pixel in the rendered output isn't any different to how it was in the last rendered frame, then why bother rendering it again?

- Halos?

- Radiosity solutions?

- Other AO or raytracing data?

Similar concepts could also be used in other areas of Blender outside the renderer, for example:

- Armature deformations: The animation of the deformed objects could be cached after being calculated the first time, and only recalculated when changed. This would help give nice, fast preview playback that could still be viewed within Blender from different angles, etc.

The cache could be stored as a chunk of Blender data in memory, and perhaps optionally saved to disk with the .blend file (could be useful for network rendering). Maybe it could also get cached to disk if there's not enough memory. Of course, a user would need control over what gets cached, and when, to account for differences in systems, amounts of RAM, etc. Perhaps it could even be nice to make a little 'optimise' button that would configure all the cache settings for you, after detecting the amount of RAM etc. in your system, similar to how modern computer games can auto-configure their graphics settings.

-- MattEbb - 10 Dec 2004
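The "Dependent on:" lists above suggest a simple invalidation scheme: each cached item remembers the state of the data it was built from and is rebuilt only when that state changes. A minimal Python sketch, assuming version counters per dependency (e.g. bumped whenever transforms change) as a stand-in for what something like the DependancyGraph might provide; `RenderCache`, `get` and the dependency names are hypothetical.

```python
class RenderCache:
    """Hypothetical cache: each entry remembers the versions of the scene data
    it was built from and is rebuilt only when one of them changes."""

    def __init__(self):
        self._entries = {}  # key -> (dependency snapshot, cached value)
        self.builds = 0     # how many times something was (re)computed

    def get(self, key, deps, build):
        """`deps` maps dependency names (e.g. 'transforms') to version numbers;
        `build` is called only when the cached snapshot is missing or stale."""
        snapshot = dict(deps)
        cached = self._entries.get(key)
        if cached is not None and cached[0] == snapshot:
            return cached[1]
        value = build()
        self.builds += 1
        self._entries[key] = (snapshot, value)
        return value
```

An octree entry, for instance, would list object additions/removals, transformations and deformations as its dependencies, so a change only to materials would leave it untouched.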

Select Path to Yafray in Blender

Currently, using Blender with Yafray from a USB drive or on a computer without admin rights is impossible. Yafray has a -p flag with which one can set the path to the Yafray installation. Maybe this could be incorporated into Blender as another path in the File Path menu.

-- ChristophKoehler - 20 Jan 2005

Halo Clipping Option

The Blender 2.37 release corrected what was supposedly a bug in 2.36, which caused halos to be clipped if the center of the halo was not visible. This correction is good in most cases, but in some circumstances it would be beneficial for this halo clipping to be optional, specifically when the halo is being used to simulate optical effects such as the bright corona of an eclipse, or the light from the sun disappearing from the horizon seconds after a sunset.

This feature would most likely not be very difficult to incorporate, since all it would involve is a button that undoes the fix in 2.37, and I'm sure many people would be grateful for it.

-- StevenEberhardt - 05 Jun 2005

A hotkey for flipping a rendered image to check perspective.

It is common for concept artists and digital matte painters to flip their images horizontally or vertically to check the image perspective. I would like to have the same feature in Blender, i.e. a hotkey that flips the rendered image either vertically or horizontally. I think this would help users a lot with scene composition, and it seems like a relatively easy thing to do.

-- Lemoenus - 13 Aug 2005

There's a flip compositing node in current CVS. Perhaps that's enough?

--BeBraw 20:49, 22 December 2006 (CET)

Halo and ztransp fix/enhancements

urgent - I think

As of Blender 2.43 RC1:

- Seams appear between ztransp and non-ztransp multimaterials on connected faces

- Seams disappear when using the ancient box anti-aliasing, but appear when halos are behind

- Seams disappear when both materials have ztransp

- Halos are always rendered behind ztransp materials

Simply put, we URGENTLY need a replacement for the removed Unified Renderer option.

For now many things simply cannot be done. Perhaps Blender needs advanced volumetric rendering.

(Example (image): fading out part of a boiler casing with smoke, with a slightly ztransp fluid inside. The halo smoke causes visible seams between connected ztransp and non-ztransp faces, and the smoke is rendered behind the fluid.)

Also see this forum thread: blender.org forum post

- M_jack -- January 16 2007

Render the 3D View and Previewrendering

When working on animations, a fast preview is often practical. A 10 or 12 fps animation would be enough, which could be achieved with an option like "render every nth frame". Rendered files should be numbered as if all frames had been rendered, so that the missing files can be added after a successful review. Movies rendered this way should have a frame rate derived from the full-fps movie.

There is that great "render window" (OpenGL) button at the bottom of the 3D View. An option to preview-render the whole view, as Shift-P does, should be added. There should also be an option to render the view with the current render settings, so that "Align Camera to View" is no longer necessary. For these renders it would be more logical to take the frame size from the viewport window size rather than from the render settings, while still applying the percentage buttons from the render settings.

The render engine selector could also include Viewport Shading and Blender Preview.

The "Adjust frs/sec" button (see image below) should cause rendered AVIs to be saved with the correct frame rate: frs/sec divided by n, the "render every nth frame" value. (It would help if Blender could use decimal frame rates, e.g. 12.50; this is also needed for a fully correct NTSC rate.) The "Play" button should also respect this setting, ignoring in-between frames left over from an earlier render and showing each image for the right time interval.

This would be a great help and speed up testing. And since many programs don't require a manually placed camera for test renders, it would help beginners and people switching to Blender from other programs.

Here is an image of how these things could look: interface image

Concerning "border rendering" (which lets us render a definable part of the image when rendering stills): it should stay active when rendering animations. It's useful both for rendering just the interesting area and for compositing over a previous render that had an error.

-- M_jack - 22 Dec 2006
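The numbering and frame-rate rules proposed above can be sketched in a few lines. This is a minimal Python illustration, assuming frames are identified by their real scene frame numbers; `preview_frames`, `preview_fps` and `output_name` (with its 4-digit padding and `.png` extension) are hypothetical. `Fraction` is used so decimal and NTSC-style rates stay exact.

```python
from fractions import Fraction

def preview_frames(start, end, step):
    """Frames rendered when only every `step`-th frame is rendered. Files keep
    their real frame numbers, so skipped frames can be filled in later."""
    return list(range(start, end + 1, step))

def preview_fps(full_fps, step):
    """Playback rate that keeps the preview as long as the full-rate movie."""
    return Fraction(full_fps) / step

def output_name(frame, digits=4, ext=".png"):
    """Zero-padded file name for a frame (hypothetical naming scheme)."""
    return f"{frame:0{digits}d}{ext}"
```

With a 25 fps scene and every 2nd frame rendered, the preview plays at 25/2 = 12.5 fps, so its duration matches the final movie; a rate like 30000/1001 stays exact as well.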

Static Particle Textures & Skinning

With Blender 2.41 static particles became much more powerful. Strand rendering opened up a whole new world of possibilities. Currently, however, strands can only effectively be used for things like hair or single blades of grass. A few additions to strand rendering will make static particles a great solution for many types of problems.

- A simple change would be to switch strand width units from pixels to Blender units. Perhaps keeping the option for a pixel width would be useful, but I can't think of an example. The problem with Blender units is that they can create sub-pixel polygons that cause anti-aliasing artifacts. However, this could easily be solved by making strands a minimum of 1 pixel wide. This way an animation of flying through a field of grass will look realistic: the near blades of grass will be close enough to show the actual strand width, while the distant blades will be only a pixel wide.

- A second change is to allow texturing of strands. Currently strands only allow one-dimensional texturing, basically a gradient along the length of the strand. This is great for hair, but think about the possibilities of fully textured strands with alpha channels. You could create a wheat field, or even multiple grass blades on one strand. Full leaf textures could be applied to strands on a tree. Right now UV does this, except that it duplicates the texture on each face of the strand. Having the option to stretch the texture along the entire strand would be awesome.

- Strand rendering should be improved by adding the option to keep the strand's faces perpendicular to the global z-axis. The advantage to this would be the ability to make things such as accurate blades of grass, ferns, etc.

- A final feature that could be added to make strands incredible would be strand distort-duplicate. It would behave similarly to the curve modifier. An object could be linked to from the particle panel and that object would be duplicated onto each strand, the strand would then distort the object along the length of the strand. Very similar to the current object duplicators, but one object per strand and distorted by the strand itself. This would allow for some amazing effects especially with animation.
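The 1-pixel minimum proposed in the first item can be sketched as a perspective projection followed by a clamp. This is a minimal Python sketch assuming a pinhole camera with a horizontal field of view and depth measured along the view axis; `strand_pixel_width` and its parameters are hypothetical, not Blender's strand code.

```python
import math

def strand_pixel_width(width_bu, depth, image_width_px, fov_deg):
    """On-screen width in pixels of a strand `width_bu` Blender units wide at
    distance `depth` from the camera, clamped to at least 1 pixel."""
    # Focal length expressed in pixels for the given horizontal field of view.
    focal_px = image_width_px / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    return max(1.0, width_bu * focal_px / depth)
```

Near blades keep their true projected width, while distant ones bottom out at one pixel instead of becoming sub-pixel slivers.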

-- bydesign - 2 May 2006

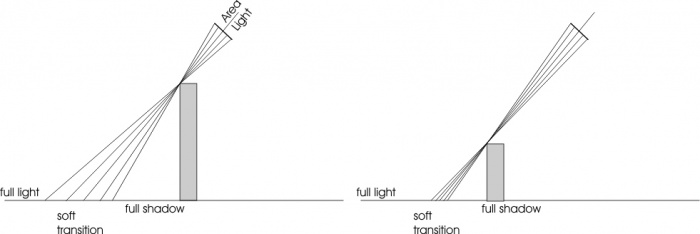

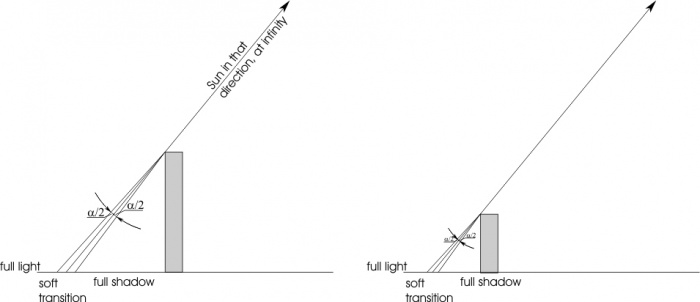

Realistic Sunlight

Well, area light is great, but it is hard to tweak for situations that are actually common. Outdoor light from the sun, for example, has blurred shadow boundaries, yet its power is constant with distance from the light source.

Doing this with an area light is painful: you must place the area light far away (at least 10 times the dimension of your scene), make some tries with power and dist, compute the area light dimensions to be sure that it matches the sun, etc. The result is suboptimal anyway, because the light intensity has a noticeable falloff that sunlight does not exhibit.

This is how area light works:

It is apparent that:

- The shadow transition depends on both the distance between the shadow-casting and shadow-receiving objects and the distance between the shadow-casting object and the light

- Light intensity is distance-dependent

A realistic sun should work like:

- Light intensity is constant

- The shadow transition depends only on the distance between the shadow-casting and shadow-receiving objects

α is a parameter which should be defined by the user (and which, for our sun, is 0.5 degrees)

-- S68 - 5 April 2007

I'm 100% behind you on this one because it has the potential to eliminate many of the complex lighting setups that I commonly use but are driving me mad.--RamboBaby 23:50, 20 May 2007 (CEST)
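For a light of angular diameter α, simple geometry gives the width of the soft shadow edge at a receiver a distance d behind the casting edge as w = 2·d·tan(α/2) ≈ d·α (α in radians), which matches the "realistic sun" rule above: it depends only on the caster-receiver distance. A minimal Python sketch, assuming the receiver faces the light and distances are in Blender units; `sun_penumbra_width` is hypothetical and covers only the shadow edge, not lamp intensity.

```python
import math

def sun_penumbra_width(caster_to_receiver, alpha_deg=0.5):
    """Width of the soft shadow edge cast by a distant light with angular
    diameter `alpha_deg`; grows linearly with caster-receiver distance."""
    return 2.0 * caster_to_receiver * math.tan(math.radians(alpha_deg) / 2.0)
```

With α = 0.5 degrees this is about 0.0087 units of blur per unit of distance, i.e. roughly 8.7 mm of soft edge for every metre between caster and receiver.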

Smart Format Options

If the selected format (e.g. JPEG) does not support RGBA, disable that button. If Z is selected on the Render Layer tab, and AVI or MOV or basically anything except Multilayer is selected, at least warn the user that those channels will not be saved. Conversely, if PNG and RGB are both selected, advise that the A channel will NOT be saved and that RGBA is available. Basically, build a little more smarts into Blender to keep stupid users from making stupid mistakes and then thinking that Blender is broken. --Roger 03:11, 14 May 2007 (CEST)
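The checks requested above amount to a lookup in a table of format capabilities. A minimal Python sketch, assuming a small hypothetical capability table (the real set of formats and the channels each one supports in Blender is larger and version-dependent); `FORMATS` and `check_output` are illustrative names, and a UI would grey out buttons rather than just return messages.

```python
# Hypothetical capability table, for illustration only.
FORMATS = {
    "JPEG": {"channels": {"BW", "RGB"}, "extra_passes": False},
    "PNG": {"channels": {"BW", "RGB", "RGBA"}, "extra_passes": False},
    "AVI": {"channels": {"RGB"}, "extra_passes": False},
    "MultiLayer": {"channels": {"BW", "RGB", "RGBA"}, "extra_passes": True},
}

def check_output(fmt, channels, passes):
    """Return warnings for output settings that would silently lose data."""
    caps = FORMATS[fmt]
    warnings = []
    if channels not in caps["channels"]:
        warnings.append(f"{fmt} cannot store {channels}")
    elif channels == "RGB" and "RGBA" in caps["channels"]:
        warnings.append(f"alpha will not be saved; {fmt} supports RGBA")
    # Anything beyond the combined image (e.g. Z) needs a multilayer format.
    extra = [p for p in passes if p != "Combined"]
    if extra and not caps["extra_passes"]:
        warnings.append(f"passes {', '.join(extra)} will not be saved in {fmt}")
    return warnings
```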

Alpha In Shadow Pass

Translating the shadow pass's location/orientation is not possible, since only RGB floats are output (an alpha channel can be defined for the pass via a Set Alpha node with the color pass or UV pass as the alpha input, but the results are still totally unconvincing). Currently, if you want alpha shadows for overlay purposes, you have to create duplicate render layers with "Only Shadow" enabled in the material on a separate render layer via the "Mat:" field, employ duplicate light groups, change many of the light properties, etc. This makes for organizational nightmares when creating sets for OpenEXR files (standard, and even more so multilayer) for users who want interactivity between separately rendered files, e.g. a character in render B picks up and moves objects from render A. Alpha in the shadow pass would allow easy translation of the pass when the character picks up or moves the object, without having to create separate scenes and render layers to achieve these overlays. It would also allow the shadow pass to be painted on if customization is needed.--RamboBaby 22:47, 20 May 2007 (CEST)

Ray Tracing Option Defined Per Render Layer

Option to define ray tracing on a per-render-layer basis, to prevent the "ghosting" seen in the diffuse pass for objects that reside on scene layers flagged as visible but are not flagged as visible for the render layer itself. This goes hand in hand with alpha in the shadow pass for objects such as the floor of a scene, so that when a character in render B alters the location of an object in render A, no tell-tale marks of the object's original location are left behind in the diffuse pass. Together with alpha shadows, this would allow convincing ray-traced shadow pass translation without having to create duplicate objects and separate scenes. It is also for sanity reasons.--RamboBaby 23:18, 20 May 2007 (CEST)

The ability to have the client integrate with the Big Ugly Rendering Project

BURP - the Big Ugly Rendering Project

This could perhaps allow a rendering client to be installed by Blender as a service, so you could help out with spare CPU cycles and rack up time to use BURP; it could also allow you to send files to be rendered on BURP directly from the Blender client. It could probably be implemented in a million different ways. Remi 18:50, 24 August 2008 (UTC)

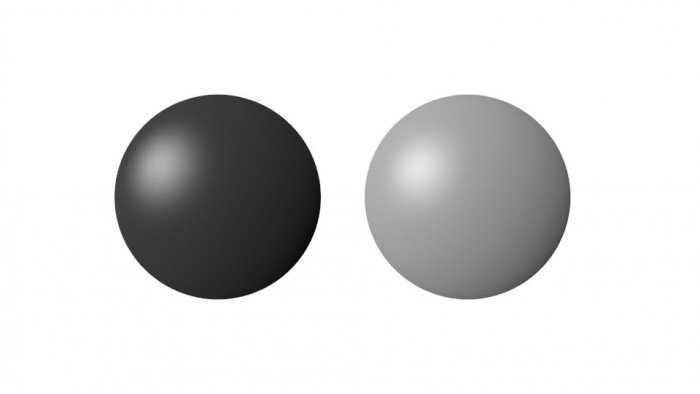

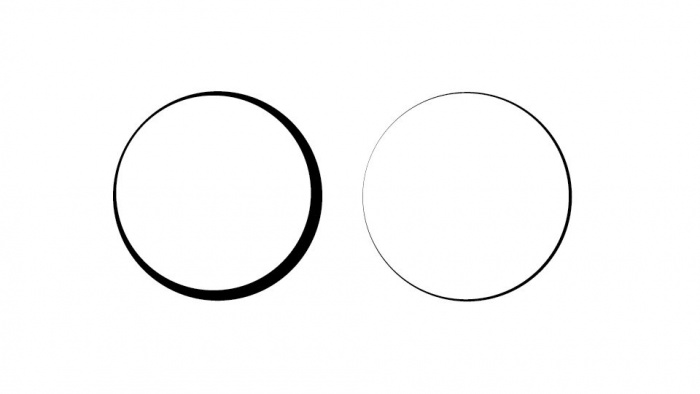

The ability to have Freestyle set (contour)line thickness according to local grey values(light->dark= thin->thick)

This could produce line art according to what I've learned as a scientific/medical illustrator: in black-and-white line art, the light value determines line thickness. In a scene with light coming from the top-left corner, a white sphere would have the thinnest line near the top left and the thickest line near the bottom right. A grey sphere would show the same result, but with thicker lines on average. I hope the images make my request clear:

Ricerinks 11:18, 11 October 2013 (UTC)
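The light-to-dark rule described above can be sketched as a linear mapping from the local grey value under a stroke to a thickness. This is a minimal Python sketch, assuming the grey value is taken as Rec. 709 relative luminance of the shaded colour and that thin and thick limits are user settings; `line_thickness`, `luminance`, `thin` and `thick` are hypothetical, not an existing Freestyle modifier.

```python
def line_thickness(grey, thin=0.5, thick=3.0):
    """Map a local grey value (0.0 = black, 1.0 = white) to a stroke thickness:
    light areas get thin lines, dark areas get thick ones."""
    g = min(max(grey, 0.0), 1.0)
    return thick + (thin - thick) * g

def luminance(r, g, b):
    """Rec. 709 relative luminance, used here as the 'grey value' of a shaded sample."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b
```

For a white sphere lit from the top left, samples near the top left are brightest and get the thinnest line; a grey sphere's darker shading shifts every sample toward the thick end, giving thicker lines on average.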