Dev:Ref/Requests/Animation

Contents

- 1 Shape Key Improvement

- 2 Individual IPO curves

- 3 Transforms

- 4 Paint overlay for camera view

- 5 Ipo editor proposal

- 6 Skinning in an arbitrary pose

- 7 Fast Motion Mode

- 8 Rigging

- 9 Workflow

- 10 Retargeting and external sources

- 11 Visualization

- 12 Shape Keys

- 13 Misc

- 14 Time Based Animation

- 15 Multiple Timeline System

- 16 lip sync project

- 17 Camera Animation

- 18 Shaping up Positions of Bones

- 19 Animating using local coordinates

- 20 Grease Pencil Improvements

- 21 Cyclic Animation Editor

Shape Key Improvement

Let's have shape keys support merged and deleted vertices. As the keys change, new vertices could be duplicated or merged.

Individual IPO curves

Instead of having a gigantic, horrible mess of curves like the Graph editor is right now, let's have an individual Ipo curve editor for each object. A little ipo curve editor could be under each animatable slider, and if that takes up too much room, have it in a drop-down box.

Transforms

Transform Reordering

Current State

Currently the Blender 2.5 system allows setting the Rotation Mode of an object in the Properties Window, in the Object context. With these modes the user can control the order in which the object rotates around its axes. This affects the outcome of the rotation and the ease with which the orientation is achieved. This is a great step forward, but one more step should be taken.

Feature Requests for the Future

Full transform reordering. The user should also be allowed to reorder the transforms themselves, for example to have scaling applied before rotation but after location. The order in which transforms are processed greatly affects how an object is positioned, oriented, and sized. Certain movements are more easily achieved with a particular order, which allows faster animation through more flexible and powerful use. This feature is supported by all major production-ready animation programs for a reason.

Modes

- TRS (Translate Position, Rotate, Scale)

- TSR

- RTS

- RST

- SRT

- STR

--Bartrobinson 14:11, 5 November 2009 (UTC)
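To illustrate why the order matters, here is a minimal 2D sketch in plain Python. It is not Blender code: the function `transform_point` and its parameters are hypothetical, and it assumes that the letters of a mode are read left to right as the order in which the operations are applied to a point.

```python
import math

def transform_point(point, order, translation, angle, scale):
    """Apply translate (T), rotate (R) and scale (S) to a 2D point.

    `order` is a string such as "TRS"; the assumption here is that the
    first letter is applied first. `angle` is in radians.
    """
    def translate(p):
        return (p[0] + translation[0], p[1] + translation[1])

    def rotate(p):
        c, s = math.cos(angle), math.sin(angle)
        return (c * p[0] - s * p[1], s * p[0] + c * p[1])

    def rescale(p):
        return (p[0] * scale[0], p[1] * scale[1])

    operations = {"T": translate, "R": rotate, "S": rescale}
    for letter in order:
        point = operations[letter](point)
    return point

# The same three transforms, applied in two different orders.
start = (1.0, 0.0)
for mode in ("TRS", "SRT"):
    x, y = transform_point(start, mode, (2.0, 0.0), math.pi / 2, (2.0, 2.0))
    print(mode, round(x, 6), round(y, 6))
```

With identical location, rotation and scale values, the two modes place the point at different positions, which is the effect the request wants to expose to the user.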

Paint overlay for camera view

This request is for a camera mode that lets you paint over the 3D view. This feature works like a transparent flipbook overlaid on top of the viewport and synchronised to it.

This lets you:

- Roughly sketch your animation poses in 2D before you go in and commit to them in 3D

- Work out your timing before you touch the action editor or any controls in 3D

- Leave on-screen notes as you animate

The idea is to draw over specific frames in the viewport using regular Blender brushes. The resulting bitmaps are associated with frame numbers and shown in sync with the scene during playback. There should also be a way to offset the drawings in time, cut, copy and paste them. These overlays should be linked to cameras, and therefore could be implemented as additional camera data. A hotkey could turn on and off painting and display of the overlay for the active camera.

The system should support the following:

- Blender’s brush panel (it has all the necessary drawing tools)

- Onion-skinning of the drawings

- Loading and saving of bitmap sequences

- Option to switch the display of the overlay on and off while you work with the 3D scene—for actual posing

Reflex appears to use a similar feature (see this screenshot.)

Also, PAP has amazing 2D sketching and timeline tools that could be borrowed.

Nemyax 20:28, 5 July 2007 (CEST)

Ipo editor proposal

The current ipo editor has the following drawbacks:

- Only lets you work with one ipo block at a time

- Always shows unused channels

- Relies very much on the current selection

- Always scales relative to the selection's median point

- Sets interpolation on a per-curve rather than per-key basis

The following table lists some requests that will hopefully help solve these problems and suggests the user interface for them.

| REQUEST | POSSIBLE GUI |
|---|---|
| Edit curves from multiple ipos at once, as in NLA editor | Show ipo curve names in a 3-level collapsible list. You can turn off the display of the ipo types you don't need. For that, use the ipo type buttons. These work like the datablock buttons in the OOPS schematic view. If you toggle on the World and Sequence ipo type button, the World and Sequence entries appear in the object list. With many objects selected, the list can easily become cluttered, so there should be an option to hide channel names that don't have curves. The list can be updated automatically (as selection changes) or on demand. The Auto Update option can be toggled on and off. The Update option populates the list with the currently selected objects and/or pose bones. |
| Bookmark frequently used views | If you work with specific sets of objects or bones, you may want to have quick access to their animation curves. The Add Bookmark option adds the current view as a bookmark and prompts you for the bookmark's name. |
| Improve ipo curve scaling | Introduce a 3D view-style cursor. The cursor acts as the pivot for scaling the selected keys or curves. Cursor snapping options and a numeric input for cursor coordinates are available, as in the 3D view. |
| Set interpolation per key rather than per curve | The Constant, Linear and Bezier types are set depending on what is selected. If keys are selected, the interpolation is set only for those keys. If curves are selected, it is set for all keys in those curves. |

Nemyax 00:15, 6 January 2007 (CET)
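The per-key interpolation request could be pictured roughly as follows. This is only a sketch under assumptions: the data layout (a list of `(frame, value, mode)` tuples) and the function `evaluate` are made up for illustration, each key's mode is taken to govern the segment up to the next key, and the "BEZIER" case uses a simple smoothstep ease as a stand-in rather than real Bezier handles.

```python
from bisect import bisect_right

def evaluate(keys, frame):
    """Evaluate a curve whose interpolation mode is stored on each key.

    keys: list of (frame, value, mode) tuples sorted by frame. The mode of
    a key controls the segment from that key to the next one.
    """
    frames = [key[0] for key in keys]
    index = bisect_right(frames, frame) - 1
    if index < 0:
        return keys[0][1]          # before the first key: hold first value
    if index >= len(keys) - 1:
        return keys[-1][1]         # at or after the last key: hold last value
    f0, v0, mode = keys[index]
    f1, v1, _ = keys[index + 1]
    if mode == "CONSTANT":
        return v0
    t = (frame - f0) / (f1 - f0)
    if mode == "BEZIER":
        # Stand-in easing (smoothstep), NOT a true Bezier evaluation.
        t = t * t * (3.0 - 2.0 * t)
    return v0 + (v1 - v0) * t

curve = [
    (0, 0.0, "CONSTANT"),
    (10, 10.0, "LINEAR"),
    (20, 20.0, "BEZIER"),
    (30, 0.0, "LINEAR"),
]
for frame in (5, 15, 25):
    print(frame, evaluate(curve, frame))
```

Setting the interpolation for a whole curve then amounts to writing the same mode onto every key in it, which matches the behaviour described in the last table row.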

Skinning in an arbitrary pose

If you have a model that is not built in a T-pose (or is in any other position that does not match the rest position of an armature), it would be nice to be able to pose the armature to fit the mesh and then skin it in that pose.

This is currently impossible, because the mesh would move if you don't skin in rest position.

Bugman wrote: "It would be nice to be able to set the pose mode rest pose (the "undeformed" pose) to any arbitrary pose. There's no reason that pose should necessarily be the same as the edit pose pose."

See http://blenderartists.org/forum/showthread.php?t=79336

--Soylentgreen 12:42, 22 December 2006 (CET)

Fast Motion Mode

- You can use Motion Blur in Blender for fast motion animations; however, if you want to animate loops that are faster than 1/25 sec, then you have to render the animation in 'slow motion' and shuffle frames manually. In this flapping wings loop animation I've used 11 of the 12 rendered frames (MBlur Factor: 0.80), which were manually shuffled in post-processing into the frame sequence: 1, 3, 8, 5, 2, 4, 10, 6, 9, 7, 11.

- In my example this 'hack' is possible only because only the wings are animated. With additional body animation (such as an up & down bobbing motion), such a hack would not work. Such problems can also surface if you wish to animate fast run cycles, etc...

- My request is Fast Motion Mode, where you will be able to animate fast loops with a slow motion factor (mmm, nice & smooth IPOs :).

- --Igor 13:37, 5 May 2006 (CEST)

Rigging

IMHO, the biggest problem facing armatures at the moment is controlling bone roll angles while in edit mode. As it stands now, the user can change the bone roll by numerical input. If a locked track constraint is going to be used to roll the bone during animation, then we probably want the roll angle in the rest pose to be lined up just as it will be once the constraint is applied. It is just like with an IK chain: in edit mode, we place the IK effector at the end of the chain, not in some odd location that will cause the chain to move to the target once we enter pose or object mode.

- I propose we give bones a third point, in edit mode only. This third point would appear as a sphere just like the other two points, but connected to the bone's center with a dotted (or solid) line, and it would indicate the direction of the bone's positive Z axis. The reason a point might be better than allowing the user to rotate the bone, as is done in pose mode, is that a point will allow the user to exactly align the bone with another bone that will be used as the up vector (locked track target) in pose mode. Ctrl+N (recalc roll angles) would not be needed anymore, so it could be the hotkey to show or hide these new third points. This way people will discover the change quickly, and will be able to keep the 3D space from getting too cluttered with all the extra points. It might also be good to only show the third point for the currently selected bones. It would also be nice if long connected chains could optionally be forced to lie on the plane indicated by the root bone's three points.

- For consistency and convenience, we should change the locked track default settings from "To:Y Lock:Z", to "To:Z Lock:Y".

- Currently, there is no up-vector feature for character limbs (to indicate elbow and knee direction). A locked track constraint is often used on the root bone of a chain, but this does not work correctly through all 360 degrees of rotation, not even close. A work-around has to be used to get the full range of motion and accurate directional control:

- In a two bone chain, a third bone is added as the parent to the root bone, in the same location as the root bone. It is made to track the IK target, and roll with locked track to another target, so this second target causes the bone and its children to roll.

- This work-around could be eliminated with a new "UV:" (up vector) field in the IK constraint. The second target would go in the UV field and the constraint would give this special behavior to the root bone of the chain. How this would figure into IK blending and 0 length chains, I have no idea.

-- Wavez - 03 May 2006

- 'ik target snap back option': IK targets should have the option to snap back to the bone they are connected to if they get moved too far.

- 'pole vector constraint': defines the direction in which knees and elbows bend when using IK. With the other constraints currently available it doesn't seem possible to strictly enforce this.

- autorig based on body proportions

- auto repurpose of control rigs to other characters/rigs

- 'rigging mode' where you can move the joints freely and it just extends/shrinks the bone length and repositions the joint.[edit: wouldn't that be editmode?]

- symmetric selection and movement of joints (very useful with rigging mode).

- Slide bones along other bones (i.e. they don't have to be positioned tip to tip), for instance slide the jaw bone halfway down the head bone. Similar to sliding verts along an edge.

- I have the need to be able to change the local rotation axes of bones, i.e. so the local X axis is not necessarily pointing down the bone. Currently I can fake it by making the bones tiny in length and aligned along my desired x-axis, but the IK constraints don't work unless the bones are in a real chain. -jg

- With the new envelope rigging, it could be interesting to have an "exclude" button that prevents the selected vertices from being modified by the selected bones. Currently we must select "Mult" and add those vertices to vertex groups with the same names as the bones of interest.

A faster way of adding and removing vertex group associations is needed. An idea for the interface: select a number of vertices (no matter which vertex groups they are associated with), then click a button or press a key to get a list of "on/don't change/off" buttons labelled with bone names. Setting a bone to "off" removes the selected vertices from its vertex group if it exists. Setting it to "on" creates the vertex group if necessary and adds the vertices to it. Adding many vertices to many bones would be much faster, and with the "Mult" function it could be a very quick way to solve some problems. Consider also a button to remove the selected vertices from all groups, and one to add them to all groups. -- FabienDevaux - 29 Aug 2005

- the possibility to recalculate stretch to constraints all at the same time. The stretch to constraints can be quite long to set up.

- Bones with a Child Of constraint cannot be changed in edit mode once the constraint is applied. You need to delete the constraint and reassign it. A global "redo Child Of constraint" would be great.

Workflow

- Quick mirror tool for armatures, like the one in the modifier stack. It would act only in edit mode and could be applied sooner or later (this would avoid having to use ugly Python scripts). -- Test.FabienDevaux#29Aug105 FD

- bone selection follows the mouse, then click to drag (twice as current method) - 'animate immediate' [edit: wouldn't that be RMB-drag?]

- ability to easily change FK/IK for a bone on a per bone per frame basis.[edit: wouldn't that be a constraint IPO?]

- floor contact/constraints - preferably with rotation (i.e. ankle rotation when the foot makes contact) and/or sliding (push a flexible item at the floor and it slides as well as flexes) and/or 'melting' (the spreading of a fleshy pad when it contacts the floor, or the spread of a tire) caused by continued downward travel of the rest of the limb.

- stay in contact constraints - i.e. have a hand or part of a hand 'stick to' an object's surface, sliding around the object if the rest of the arm is moved. Thus if a character is holding onto a bar, you can drag him along the bar and he will hold on to it. (Optionally have this based on a force constraint, thus if you 'pull' him too hard he will let go...)

- constraint fade in and fade out - the constraint is at maximum for x frames, and has a fade in and fade out for y and z frames. This is useful for anchoring the rear foot in a walk cycle for instance.[edit: wouldn't that be again the constraint IPO?]

- pivoting the character to pivot the future control curves

- Select a 'ghost' or a point on a motion trajectory to go to that frame.

- global/relative/offset keys

- multireference constraint - constraint changes over time, thus parented to a till time x, then parented to b [edit: possible with a parented empty and Copy Loc/Rot constraint and IPO]

- using ipo curves to change how other curves behave (ie a velocity curve)[edit: the speed IPO?]

- cameraview changes on timeline

- freehand a path curve, then assign the object, then assign the keyframes.

- moving a 'ghost' or trajectory as a way to edit the IPO curves.[you mean 'Edit keys' (K)?]

Retargeting and external sources

- pose libraries

- armature libraries

- retargeting motion data (ie bvh)

- Storing all actions so that they are globally available to all characters

- 'record velocity' record the mouse movement to design a velocity curve

- a load animation only button in the bvh importer to have the ability to merge an animation in an existing skeleton.

- a rotate 90° button in the bvh importer.

Visualization

- show the trajectory as you create it (ie if you add a keyframe, then a curve connecting the keys is made).

- 'in place mode' - shows the action without doing the motion in global space

- display of motion trajectory on a per bone basis

- color code joints based on constraint type

- simultaneous showing of the trajectories/ghosts from the original motion and the in progress edited motion (probably with different colors/fade levels to differentiate - i.e. the old one has a higher transparency...)

- crossfade camera shot in realtime...

- see motionbuilder trajectories movie - worthwhile

- 'animation layers' (see description)

- joint/Dot based display (instead of bones)

- custom icons for specifying different handles (makes identification easier) - messiah gizmos and icons are info rich

Shape Keys

As an animator with past facial/lipsync experience on other packages, I'd love to see Blender support an 'average' mode for combining overlapping mesh shape keys. This key mode would be an alternative to the current adding of shape key deltas (average would be my preferred default for all shape key blends). Average blends of shape key positions allow for a much richer facial animation set-up and take us away from the current, quite rigid, facial movement. They allow many shape keys to be set up for the various facial muscle groups, and then easily combined to form many higher level emotional expressions and visemes (for lip-sync). It's a pretty critical feature for good, flexible, and easy facial animation. Apologies if this is already a hidden feature somewhere in the UI (but I don't think it is). -Gary 2006
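The request does not spell out how the 'average' mode would be computed, so the following is only one possible reading, written as a plain Python sketch rather than Blender code. The function names are hypothetical. The assumption is that, for each vertex, the weighted deltas are averaged over the keys that actually move that vertex, so overlapping keys no longer stack.

```python
def blend_additive(basis, keys, weights):
    """Current behaviour: add every key's weighted delta to the basis."""
    result = []
    for vi, base in enumerate(basis):
        pos = list(base)
        for key, weight in zip(keys, weights):
            for axis in range(3):
                pos[axis] += weight * (key[vi][axis] - base[axis])
        result.append(tuple(pos))
    return result

def blend_average(basis, keys, weights):
    """Assumed 'average' mode: per vertex, average the weighted deltas
    over the keys that move that vertex (keys that overlap there)."""
    result = []
    for vi, base in enumerate(basis):
        total = [0.0, 0.0, 0.0]
        contributors = 0
        for key, weight in zip(keys, weights):
            delta = [key[vi][axis] - base[axis] for axis in range(3)]
            if weight != 0.0 and any(d != 0.0 for d in delta):
                contributors += 1
                for axis in range(3):
                    total[axis] += weight * delta[axis]
        divisor = max(contributors, 1)
        result.append(tuple(base[a] + total[a] / divisor for a in range(3)))
    return result

# Two vertices; both keys raise vertex 0, only "smile" raises vertex 1.
basis = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
smile = [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
jaw_open = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
print(blend_additive(basis, [smile, jaw_open], [1.0, 1.0]))
print(blend_average(basis, [smile, jaw_open], [1.0, 1.0]))
```

In the additive result the shared vertex overshoots to twice the intended height, while in the averaged result it stays where either key alone would put it.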

Misc

- Center buttons (for the small red sphere) should be present in the armature panel. Currently it is impossible to edit its position.

- ability to parent animation to bone angles [edit: like the action constraint?]

- rag doll [edit: what is that? - basic physics?]

- metaeffectors - effect fields, can be used to override properties such as weight assignments, or define influence areas for effects.

- story tool - match bvh clips together for doing previz

- story boarding - 2d stuff - animated textures for expressions,etc. animated planes or boxes with images on them

- 'time discontinuity' place camera shots in different places in story line.

-- TomMusgrove - 19 Nov 2004

- RVK + Posemode:

Relative Vertex Keys working together with Posemode. Currently, entering editmode changes the mesh deformed by bones back to its original shape. If it were possible to keep the deformed mesh as a relative vertex key at each key position of the armature, it would be a simple way to refine the character animation, create muscle-like deformations, and get more precise joint control. In addition, bone deformation would become a modeling tool.

Excuse my english.

Paulo Brandao - 2 Out 2005

Time Based Animation

Right now, Blender's animation timeline is frame based, meaning that an object's animation is built on and anchored to the frame rate of the global animation. This is flawed for several reasons. First, animations will distort when attempting to adjust the frame rate (PAL, NTSC, etc.). This is obviously a hindrance when one works on several projects using different formats and wishes to transfer files between them. This limits the transferability of .blend files, a paramount feature of Blender. Second, in the real world, the speed of an object does not change depending on what sort of camera is filming it. As it stands in Blender, it does. Third, the current frame based animation setup limits the abilities of animators, for they are unable to create animations which cycle faster than the framerate, such as a fly or hummingbird's wings or the rotors on a helicopter or fan. They are forced to use awkward techniques to imitate those effects. And fourth, using a frame based animation system actually increases the amount of work needed to create a physically correct animation.

My proposed system would employ many of the features that are already in Blender but, in my opinion, are currently not used to their full potential. The animation timeline already has a time meter. Instead of having to try and find a frame that approximates your desired time, you should be able to tell Blender that object x should move from point a to b in 2.5 seconds. The computer should be the one that calculates how many frames should be in between, not the animator.

A possible design for the new timeline would be for frames to be represented by vertical blue lines on the timeline at the time that they render the scenes. This way, those still wishing to use frame based animation will be able to depend on frames. Motion blur could be graphically depicted with a lighter blue box that indicates the time period in which the blur is sampled. "Keyframes" would simply be timestamps, and quite possibly may never land on a frame. The frames would use interpolation data in order to determine at what state the animation is.
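As a rough sketch of that idea (plain Python, not an existing Blender API; `interpolate` and `sample_channel` are hypothetical names), keys can be stored as timestamps in seconds and the frames derived afterwards by sampling the interpolated curve at whatever frame rate is chosen. Linear interpolation is assumed here purely for brevity.

```python
import math

def interpolate(keys, t):
    """Linearly interpolate (time_seconds, value) keys at time t."""
    if t <= keys[0][0]:
        return keys[0][1]
    if t >= keys[-1][0]:
        return keys[-1][1]
    for (t0, v0), (t1, v1) in zip(keys, keys[1:]):
        if t0 <= t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def sample_channel(keys, fps, duration):
    """Return the value seen by each frame of a camera running at `fps`."""
    frame_count = math.floor(duration * fps) + 1
    return [interpolate(keys, n / fps) for n in range(frame_count)]

# "Object x should move from a (0.0) to b (10.0) in 2.5 seconds."
keys = [(0.0, 0.0), (2.5, 10.0)]
film = sample_channel(keys, 24, 2.5)
pal = sample_channel(keys, 25, 2.5)
print(len(film), len(pal))   # number of frames differs with the frame rate
print(film[24], pal[25])     # but the value at t = 1 s is the same
```

Changing the frame rate only changes how many samples are taken; the keys themselves, and therefore the motion, stay untouched. At 25 fps the key at 2.5 s falls between two frames, which is the case the proposal describes where a keyframe never lands on a frame.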

First off, the immediate advantage of this new timeline would be that since keyframes are not dependent on frames at all, one can change the framerate on a whim and never need to worry about stretching or squishing the IPOs to fit the new speed. Also, the speed of an object is not dependent on what is filming it. This opens up fresh new possibilities, such as creating faux-high-speed cameras and matrix effects. Instead of having to adjust the time IPO of every animation path, all one would have to do is create a "high-speed" camera that rotates around the scene in .0001 seconds while recording x frames in the process and outputting them at 29.5 frames per second (this would require new code). This is simply not possible with frame based animation--the smallest unit of time for an animation at 25 fps is 1/25 of a second (25 Hz).

On a similar note, if one wanted to show the flapping of the wings of a fly or the spinning rotors on a helicopter, one would be able to zoom into the timeline, create only a single cycle of the animation, extrapolate it, and voilà, it is done. I have read many tutorials on how to fake this effect. The animator should not have to fake this effect! The "reverse-spinning" effect on helicopters is simply the product of motion blur and aliasing by imperfect camera equipment. Notice that as the rotors slow down, the effect changes. This is difficult to fake properly.

Also, suppose you were making a video of an explosion and wished to slow down at one point to show some detail (with regard to time) in the animation. In order to make it appear physically correct, you could animate the explosion in "real-time" and then "zoom in" to tweak things during the slow-motion part. You could create a second camera that runs at, say, 100 frames per second in animation time but outputs the video at 25 frames per second for the slow motion, or have the first camera simply use those settings at the desired part.

Now for those who are still unconvinced of the merits of time based animation for "normal" tasks, imagine that an animator wished to animate a bouncing ball. Physically, it would be dictated that d = v*t + 1/2*a*t^2, where v is the initial velocity and a is the acceleration. For those less physics inclined, what this means is that the distance the ball (if starting from rest) has traveled from the starting point at time t would be the distance traveled in the first second times the square of t. So, you calculate the times and distances you need to be proper. With time based animation, you simply find the time (even if it is fractional), move the ball to the proper distance, and insert a keyframe. With frame based animation, you'd have to figure out which frame is the closest to the time, and if it isn't marked off in the timeline, you have to try and calculate it (try NTSC's 29.97 fps), only to have it all ruined if you want to try and reuse it on a project with a different framerate.
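The arithmetic for the falling ball can be sketched in a few lines of Python. The names below are illustrative only, and the value used for gravity (9.81 m/s^2) is an assumption that is not taken from the proposal.

```python
import math

GRAVITY = 9.81  # m/s^2, assumed value for the acceleration a

def fall_distance(t, accel=GRAVITY):
    """Distance fallen from rest after t seconds: d = 1/2 * a * t^2."""
    return 0.5 * accel * t * t

def fall_time(distance, accel=GRAVITY):
    """Time needed to fall `distance` from rest: t = sqrt(2 * d / a)."""
    return math.sqrt(2.0 * distance / accel)

def frame_position(t, fps):
    """Where a time in seconds lands on a frame-based timeline."""
    return t * fps

# Starting from rest, the distance grows with the square of the time.
print(fall_distance(2.0) / fall_distance(1.0))

# The moment the ball has fallen 1 m rarely coincides with a whole frame.
t = fall_time(1.0)
for fps in (25, 29.97):
    print(fps, frame_position(t, fps))
```

With time based keys the animator would key the ball at `t` seconds directly; with frame based keys the fractional frame positions printed above have to be rounded, and the rounding differs for every frame rate.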

The concept of the frame comes from the camera, so put it back where it belongs--back in the camera. Nature doesn't run on frames. By putting frames back into the camera, one can then play with the concept of "apparent time." This is what I mean with high/low speed cameras. "Real time" isn't running at that rate, but the camera is. Right now the two are bound together, and in my opinion are screaming to be freed.

I have never seen anything like this before, so I believe if Blender implemented this, it would be revolutionary. It pioneered a revolutionary user interface--one of a kind, and it's not over yet!

--DoubleRing 05:43, 19 January 2007 (CET)

Multiple Timeline System

This is sort of a "part 2" to my previous proposal. This would be difficult and fairly pointless to implement without Time Based Animation, so I don't expect this anytime soon. I would like people to start thinking about this, however.

With time based animation, we would have a whole slew of time dilation effects open to us. But there will still be limitations (imagine that). Those in the USA have probably seen this advertisement. This was the inspiration for the Multiple Timeline System.

With the "apparent time" and "proper time"/"real time" concepts introduced in Time Based Animation (note: I introduced these through an update a few days after I first posted it to the wiki), it would be fairly simple to simulate the slow motion effects in the commercial through an inverse quadratic curve. Now, we run into a problem: how to easily animate the main actor (Dennis Haysbert)? Until this is implemented, we would have to use the crutch of stretching IPOs, but there is a better way!

First, we would have to define the camera speedup through a time ratio. You could do this manually or use the method described in Time Based Animation and let Blender calculate it for you (ideally, the two should just be interlinked, like the color picker and the RGB sliders). Then you'd create a second timeline and move everything that you want to run on the second timeline onto it. Then, you'd use what I call "time parenting." If you parent the timeline to a camera, what Blender would do is stretch and squish the timeline dynamically to maintain a constant apparent speed with respect to that camera. In the timeline window, the green line would advance across the two timelines, and slow down and speed up to indicate the apparent speed of the animation, while the first timeline would always maintain natural time. Of course, you could do this backwards as well. You put the main actor (Dennis Haysbert) into the first timeline, and everything else onto the second timeline. This time, you DON'T time parent, but you stretch and squish the second timeline by hand to your heart's content.

I realize that this would take A LOT of coding, so I'm not demanding anything except consideration. Think of all those music videos where the band is playing in an intersection and the crowd and cars are flying past them in a blur. Ideally, you should be able to manipulate output based on which timeline it is associated with in the composite node system. There would be no more need to rack your brains to work with time effects!

--DoubleRing 06:54, 23 January 2007 (CET)

lip sync project

Camera Animation

I would like to be able to animate the Scale of the Orthographic camera, so I can animate zooming in on an image. Using a 35mm lens, or any lens, introduces some distortion. --Roger 18:53, 7 May 2007 (CEST)

- Isn't that already possible when pressing I->Lens while inside the Camera Panel (F9)? Or do you mean something different? --Hoehrer 11:37, 4 September 2007 (CEST)

Shaping up Positions of Bones

A feature that is missing in Blender is the ability to work in Edit Mode and Sculpt Mode on meshes that are deformed by bones, so that, using the Transform Properties, the shapes can be modified exactly as desired with respect to the movement of the bone.

image: http://img231.imageshack.us/i/schermata.jpg/

Animating using local coordinates

Currently, it is impossible to animate using local space. Whenever I try to animate something using local space, it is always animated in global space! It would be nice if a local space animation feature was added in the near future.

Grease Pencil Improvements

Fix the grease pencil's attachment to the last selected object, a behaviour seen as of Blender 2.63 and 2.64 RC1. For instance, while drawing in Cursor mode, any drawings are somehow attached to the last selected object even if it is not actually selected when the grease pencil layer is created. Selecting other objects makes the grease pencil drawings disappear. An independent grease pencil will help with rapid prototyping of 3D models.

Add a means to rotate the view, as is somewhat possible in trackball mode, yet without the tumbling of trackball mode that becomes more pronounced the closer the mouse cursor is to the center of the viewport. The view plane should stay perpendicular to the view. Its primary function is to make drawing curves easier for people who do not have fancy tablets that can be physically rotated to get into the most ideal hand position for the desired curve. This is usually called light table rotation in 2D applications. The goal is to improve the use of Blender to rough out 2D animations, which can then be rendered with OpenGL.

Vary colours for next and previous Onion Skins. I suggest a flip, which should work for all but the most neutral of greys: an orange grease pencil layer will use complementary blue for next drawings in the Onion Skin. Without a colour difference, the problems for 2D animation when in-betweening are losing track of which drawing is which and, when a straight in-between is not desired, deciding which line to favor (bias).

--PsychotropicDog 16:07, 20 September 2012 (CEST)
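One way to picture the suggested colour flip is a half-turn of the hue, sketched below with Python's standard `colorsys` module. This is an interpretation, not the author's stated method: "flip" is assumed here to mean taking the complementary hue, and the function name `complementary` is made up for the example.

```python
import colorsys

def complementary(rgb):
    """Return the colour with the opposite hue, keeping saturation and value.

    rgb is an (r, g, b) tuple with components in the range 0.0 to 1.0.
    """
    hue, saturation, value = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb((hue + 0.5) % 1.0, saturation, value)

orange = (1.0, 0.5, 0.0)
grey = (0.5, 0.5, 0.5)
print(complementary(orange))  # a blue, for the "next" onion skins
print(complementary(grey))    # a neutral grey has no hue, so it is unchanged
```

The grey case shows the limitation mentioned in the request: a fully neutral layer colour would need some other way of telling next and previous drawings apart.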

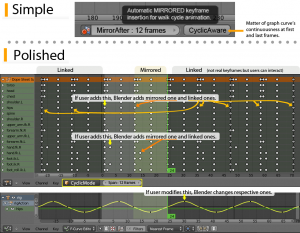

Cyclic Animation Editor

It's tedious to copy and mirror-paste every keyframe when creating a walk cycle animation. I want a feature that makes this easier.

Referring to CyclicEditorIdea: the simple idea is to add a mirrored keyframe whenever the original one is added. A more polished idea would also deal with dragging and modifying keyframes.
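The simple version of the idea could look something like the sketch below. It is only an illustration in plain Python: the `.L`/`.R` naming convention, the half-cycle offset and the dictionary used to store keys are all assumptions, and a real implementation would also need to negate some channels when mirroring a pose.

```python
def mirror_name(bone):
    """Swap a '.L' / '.R' suffix; return None for bones without a side."""
    if bone.endswith(".L"):
        return bone[:-2] + ".R"
    if bone.endswith(".R"):
        return bone[:-2] + ".L"
    return None

def insert_cyclic_key(keys, bone, frame, value, cycle_length):
    """Insert a key and, for sided bones, its mirrored twin half a cycle later.

    keys maps (bone_name, frame) to a value. Frames wrap around the cycle.
    The value is copied unchanged; sign flips for mirrored axes are omitted.
    """
    keys[(bone, frame % cycle_length)] = value
    twin = mirror_name(bone)
    if twin is not None:
        twin_frame = (frame + cycle_length // 2) % cycle_length
        keys[(twin, twin_frame)] = value

walk = {}
insert_cyclic_key(walk, "thigh.L", 3, 0.4, 24)
insert_cyclic_key(walk, "thigh.R", 20, -0.2, 24)
insert_cyclic_key(walk, "spine", 0, 0.1, 24)
for key in sorted(walk):
    print(key, walk[key])
```

Handling dragging and editing, as the polished idea suggests, would mean keeping each pair of keys linked so that moving one moves its twin by the same amount.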