Dev:Ref/Requests/Render2.6


Render Features

We all know that the render engine is...well...not great, but please actually add ideas for solutions instead of just complaining about it.

Auto Render Tiles based on Device

I didn't know this until a few months back, but the best render tile size is largely decided by the device you're using. I originally learned about this from a BlenderGuru blog article (http://www.blenderguru.com/4-easy-ways-to-speed-up-cycles/), and users on the #blender IRC channel have since confirmed that it applies to them too.

The verdict seems to be: 16px by 16px on the CPU, and 256px by 256px on the GPU. If we swap these, the render time could go up 200% or more. Logically, users prefer 20 minutes over 40, so I suggest that the "Auto" checkbox be enabled by default for new users. The only times the tile size should differ are low-memory GPUs (an edge case) or the rare CPU that works better with 8px or 32px tiles. In general it will give better performance than guessing, or than sharing one tile size between CPU and GPU renders/users.

This affects most users. It would give them a helping hand, prevent mistakes (like when switching between GPU and CPU renders due to feature support), and generally improve render times.
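As a rough illustration of what the "Auto" checkbox could do, here is a minimal Python sketch. The function name `auto_tile_size`, the `override` parameter and the lookup table are hypothetical; only the 16px and 256px figures come from the request above.

```python
# Hypothetical defaults taken from the figures quoted in this request.
DEFAULT_TILE_SIZES = {
    "CPU": (16, 16),
    "GPU": (256, 256),
}


def auto_tile_size(device, override=None):
    """Return a (tile_x, tile_y) pair for the given render device.

    `override` covers the edge cases mentioned above (a low-memory GPU,
    or a CPU that prefers 8px or 32px tiles): when it is given, it wins.
    """
    if override is not None:
        return override
    key = device.upper()
    if key not in DEFAULT_TILE_SIZES:
        raise ValueError("unknown render device: %r" % device)
    return DEFAULT_TILE_SIZES[key]


if __name__ == "__main__":
    print(auto_tile_size("CPU"))
    print(auto_tile_size("GPU"))
    print(auto_tile_size("GPU", override=(128, 128)))
```

Keeping the override explicit means switching between CPU and GPU never silently carries the wrong tile size over, which is the mistake this request is trying to prevent.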

Any counter arguments?

Fisheye camera for digital planetarium production

I believe a more advanced camera system would be an advantage for a lot of artists. Blender could also be used more easily in other areas of 3D, like dome productions for planetariums. It is sad that the Game Engine has a fisheye camera while the "normal" renderer is still limited.

My wishes for an advanced camera system are:

- different types of FoV (perspective, cylinder, spherical)

- using more than 180° FoV in spherical Fisheyes for images with compression

The main reason behind this feature request is that Blender is currently of limited use for digital planetarium shows. Thankfully Ron Proctor at the Ott Planetarium created a camera rig (http://webersci.org/blendheads/?p=13), but its use is limited. Including a fisheye camera would open Blender to a big new industry which uses 3D software on a daily basis.

(more about the domemaster format, which is the wanted result after a render: http://pineappleware.com/)

Benni-chan September 25 2011
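To make the fisheye request more concrete, here is a small Python sketch of how a spherical fisheye camera could turn an image position into a ray direction. It assumes the equidistant ("angular") mapping commonly used for domemaster frames, where the angle from the view axis grows linearly with the distance from the image centre. The function name `fisheye_ray` and its parameters are hypothetical, not part of Blender.

```python
import math


def fisheye_ray(u, v, fov_deg=180.0):
    """Map normalized image coordinates to a unit ray direction.

    u, v   : position in the image, each in [-1, 1], (0, 0) at the centre.
    fov_deg: full field of view; values above 180 look "behind" the camera.

    Returns (x, y, z) with +z along the view axis, or None for pixels
    outside the image circle (the black corners of a domemaster frame).
    Assumes an equidistant fisheye: theta is proportional to the radius.
    """
    r = math.hypot(u, v)
    if r > 1.0:
        return None
    theta = r * math.radians(fov_deg) / 2.0  # angle away from the view axis
    phi = math.atan2(v, u)                   # angle around the view axis
    return (
        math.sin(theta) * math.cos(phi),
        math.sin(theta) * math.sin(phi),
        math.cos(theta),
    )


if __name__ == "__main__":
    print(fisheye_ray(0.0, 0.0))         # centre of the image: straight ahead
    print(fisheye_ray(1.0, 0.0))         # rim of a 180 degree image: sideways
    print(fisheye_ray(1.0, 0.0, 220.0))  # rim of a 220 degree image
    print(fisheye_ray(1.0, 1.0))         # corner: outside the image circle
```

A field of view above 180° only changes `fov_deg`, which is why a single spherical mode could cover both wishes in the list above.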

Render Pause Feature

This will allow you to stop rendering when you are busy and need your CPU, and resume rendering when you are away.

tnboma- July 7 2011

Agreed, this feature is desperately needed; especially for animations. AnthonyP- July 9, 2012

Very useful. Pause now at 0%, full speed at 100% overnight. And what about the possibility to "slow down" the render process in the evening, e.g. by giving daily timeframes that allow only x% of CPU capacity (for instance 08:00-18:00 25%, 18:00-08:00 100%, Sa, Su 100%)? Something like a resource planner.

Quark66 16:40, 20 June 2013 (CEST)
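The resource planner idea above can be sketched in a few lines of Python. The function name `cpu_budget` is hypothetical, and the time windows are simply the example figures from the comment (weekdays 08:00-18:00 at 25%, everything else at 100%); how a renderer would actually throttle itself to that percentage is not covered here.

```python
from datetime import datetime


def cpu_budget(now):
    """Return the percentage of CPU capacity the renderer may use at `now`.

    Assumed schedule (from the example in the comment above):
      Monday to Friday, 08:00 up to but not including 18:00 -> 25
      all other times, including Saturday and Sunday        -> 100
    A budget of 0 would correspond to a full pause.
    """
    is_weekend = now.weekday() >= 5  # Monday is 0, so 5 and 6 are Sa, Su
    in_office_hours = 8 <= now.hour < 18
    if not is_weekend and in_office_hours:
        return 25
    return 100


if __name__ == "__main__":
    print(cpu_budget(datetime(2013, 6, 20, 10, 0)))  # a Thursday morning
    print(cpu_budget(datetime(2013, 6, 20, 22, 0)))  # the same day, at night
    print(cpu_budget(datetime(2013, 6, 22, 10, 0)))  # a Saturday morning
```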

Realistic Sunlight

Well, area light is great, but it is hard to tweak for situations which can indeed be common. Outdoor light from the sun, for example, does exhibit blurred shadow boundaries, yet its power is constant with distance from the light source.

Doing this with an area light is painful: you must place the area light far away (at least 10 times the dimension of your scene), make some tries with power and distance, compute the area light dimensions to be sure that it matches the sun, etc. The result is suboptimal anyway, because the light intensity has a noticeable falloff which sunlight does not exhibit.

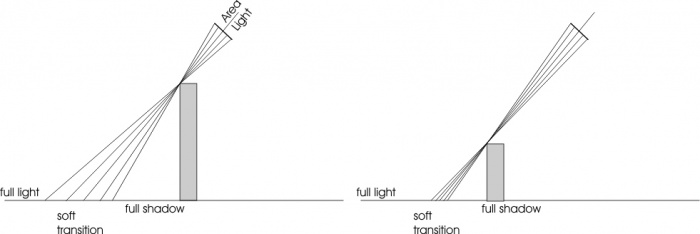

This is how area light works:

It is apparent that:

- Shadow transition depends on both the distance between the shadow-casting and shadow-receiving objects and the distance between the shadow-casting object and the light

- Light is distance dependent

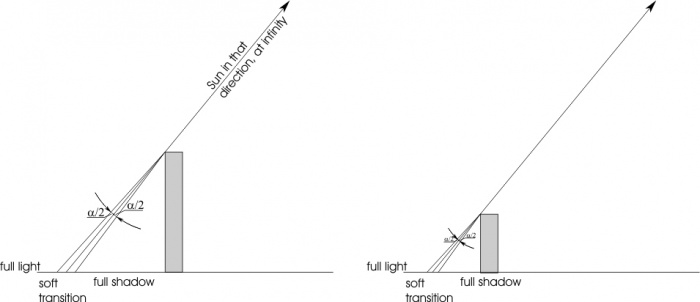

A realistic sun should work like:

- Light intensity is constant

- Shadow transition depends only on the distance between the shadow-casting and shadow-receiving objects

α is a parameter which should be defined by the user (and which, for our sun, is 0.5 degrees)

-- S68 - 5 April 2007

I'm 100% behind you on this one because it has the potential to eliminate many of the complex lighting setups that I commonly use but are driving me mad.--RamboBaby 23:50, 20 May 2007 (CEST)
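The geometry behind this request can be checked with a short Python sketch. It assumes α is the full angular diameter of the light as seen from the scene, uses similar triangles for the area light penumbra, and approximates a distant area light's falloff with the inverse-square law. All function names are hypothetical.

```python
import math

SUN_ANGLE_DEG = 0.5  # alpha for our sun, as stated in the request


def area_light_size(light_distance, alpha_deg=SUN_ANGLE_DEG):
    """Size an area light must have to subtend alpha at the given distance."""
    return 2.0 * light_distance * math.tan(math.radians(alpha_deg) / 2.0)


def area_penumbra_width(light_size, light_to_caster, caster_to_receiver):
    """Penumbra width of an area light (similar triangles).

    Depends on both distances, which is the first complaint above.
    """
    return light_size * caster_to_receiver / light_to_caster


def sun_penumbra_width(caster_to_receiver, alpha_deg=SUN_ANGLE_DEG):
    """Penumbra width for the requested sun: only one distance matters."""
    return 2.0 * caster_to_receiver * math.tan(math.radians(alpha_deg) / 2.0)


def falloff_ratio(near, far):
    """Inverse-square intensity at `far` relative to `near` (assumption:
    the area light is distant enough to behave like a point source)."""
    return (near / far) ** 2


if __name__ == "__main__":
    # Area light placed 10 scene-sizes away from a scene of size 10 units.
    print(area_light_size(100.0))
    # Brightness at the far side of the scene relative to the near side.
    print(falloff_ratio(100.0, 110.0))
    # Shadow blur 2 units behind the caster, under the requested sun.
    print(sun_penumbra_width(2.0))
```

With the light only ten scene-sizes away, the far side of the scene receives roughly 17% less light than the near side under this approximation, which is the falloff a true sun lamp would not show.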

More control over render tiles

At the moment you can choose the order of rendering tiles with a few simple presets - starting from the middle, top to bottom etc. However, as a scene may have some portions of the frame much more complex than the other parts of the frame, there is a big disadvantage when the most complex tile is rendered last. The whole frame rendering is waiting for the final tile to render. In my case it means 23 cores halting and one core rendering. This problem will become bigger the more cores you have.

Changing the preset tile rendering order will help to some extent, but if the complex tiles are in the opposite corners there is currently nothing you can do about it. I have a scene where the cores start rendering a complex part on the bottom, but on the top part there is a complex detail too. In one minute the whole frame is rendered apart from the last tile in the top corner, which delays the total render time of the frame to 1min 25sec. That is a lot in an animation.

My suggestion is a pop-up menu with the frame tiles visible. From there you can click each tile one at a time to specify the priority in which they will be rendered. First you'd click the most problematic tiles and then the easy parts such as sky, so that by the time the (for example) two problematic tiles are rendered, all 35 tiles of the sky are rendered too. That way every tile would be ready at approximately the same moment and the processing power would be used efficiently.

- Samipe - 3. May 2013
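The effect of tile priority on total frame time can be shown with a small simulation in Python. It assumes each free core simply takes the next tile in the chosen order; the tile costs (35 sky tiles at 1 time unit, 2 complex tiles at 10 units) and the 4-core count are made-up numbers for illustration, and `frame_time` is a hypothetical helper.

```python
import heapq


def frame_time(tile_costs, cores):
    """Simulate rendering tiles in the given order on `cores` workers.

    Each tile goes to whichever core becomes free first. Returns the
    moment the last tile finishes, i.e. the total frame time.
    """
    busy_until = [0] * cores          # finish time of each core
    heapq.heapify(busy_until)
    for cost in tile_costs:
        start = heapq.heappop(busy_until)   # earliest free core
        heapq.heappush(busy_until, start + cost)
    return max(busy_until)


if __name__ == "__main__":
    sky = [1] * 35        # cheap tiles
    complex_ = [10] * 2   # the problematic tiles

    # Complex tiles happen to come last in the preset order.
    print(frame_time(sky + complex_, cores=4))
    # User prioritises the complex tiles by clicking them first.
    print(frame_time(complex_ + sky, cores=4))
```

In this toy case the frame takes 19 time units when the complex tiles come last and 14 when they are rendered first, with the same total amount of work.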

I would like to go a step further. In addition to the suggestion above, where you prioritize tiles in the settings and then let the render run its course, include a more interactive approach to rendering, like a PHOTON PAINTER: a "brush" that the user sweeps across the rendering frame and that defines where photons/samples/rays are sent and calculated. That way, if I wanted to send more samples to remedy just one tiny spot of the rendering with fireflies, I wouldn't have to do multiple full or bordered renders with various seeds and then composite them. I could just watch the rendering as it takes place and brush more samples exactly where I need them. Or I could create an effect where only a limited and oddly shaped area is rendered more fully, while every other area gets only a splatter of samples. It would offer much more direct control over the result within a single rendering session, rather than through excessive compositing steps, and could save a lot of rendering time.

--Esaeo 20:31, 1 June 2013

SVG in 2D

Sometimes I want to make a 2D SVG of 3D things, to obtain an SVG like the one someone else made here: http://commons.wikimedia.org/wiki/File:Tiefdruckverfahren.svg I'd like an export to SVG files, please. I know it is a very strange demand and very difficult to add, sorry. Thanks. Domsau2 02:38, 20 June 2013 (CEST)

Perspective Control

Since I do ArchViz as my primary 3D task, it would be great to have a means of controlling barreling (perspective distortion) the way real-world cameras do with a Tilt-Shift Lens. Just add one more parameter in the camera setup called PC Lens Shift and permit an X and Y coordinate for the lens position relative to the sensor/film plane. Then it would be possible to have views of tall buildings without them appearing to fall away, as is done in high-end professional architecture photography.

--Esaeo 19:47, 10 July 2013 (CEST)
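Why a lens shift keeps verticals straight can be seen in a minimal pinhole-camera sketch in Python. It assumes a level camera (no tilt), camera-space coordinates with x to the right, y up and z as the distance in front of the camera, and a shift expressed in millimetres on the sensor. The names `project` and `shift_to_centre`, and the building numbers, are hypothetical.

```python
def project(point, focal_mm, shift_x_mm=0.0, shift_y_mm=0.0):
    """Project a camera-space point onto the sensor of a level camera.

    The shift simply slides the image across the sensor; it does not
    rotate the camera, so vertical edges stay vertical in the image.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_mm * x / z + shift_x_mm,
            focal_mm * y / z + shift_y_mm)


def shift_to_centre(y_mid, z, focal_mm):
    """Vertical shift that brings height y_mid at distance z to the
    sensor centre, instead of tilting the camera upwards."""
    return -focal_mm * y_mid / z


if __name__ == "__main__":
    focal = 24.0
    # One vertical edge of a 60 m building, 50 m away, camera 1.7 m high.
    bottom = (10.0, -1.7, 50.0)
    top = (10.0, 58.3, 50.0)

    shift_y = shift_to_centre((bottom[1] + top[1]) / 2.0, 50.0, focal)
    print(project(bottom, focal, shift_y_mm=shift_y))
    print(project(top, focal, shift_y_mm=shift_y))
```

Both ends of the edge land on the same sensor x coordinate, so the edge is drawn vertical, while the shift alone decides how much of the building fits in the frame.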